Denis G. Pelli

Spatial-frequency channels, shape bias, and adversarial robustness

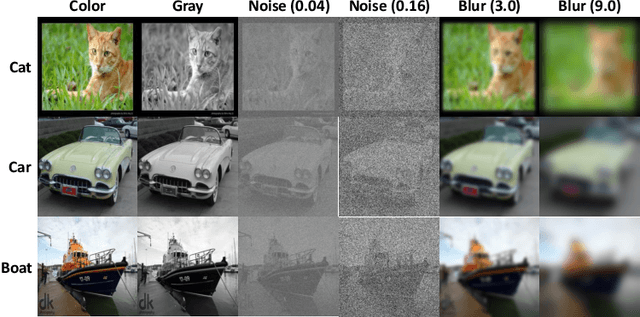

Sep 22, 2023

Abstract: What spatial frequency information do humans and neural networks use to recognize objects? In neuroscience, critical band masking is an established tool that can reveal the frequency-selective filters used for object recognition. Critical band masking measures the sensitivity of recognition performance to noise added at each spatial frequency. Existing critical band masking studies show that humans recognize periodic patterns (gratings) and letters by means of a spatial-frequency filter (or "channel") that has a frequency bandwidth of one octave (doubling of frequency). Here, we introduce critical band masking as a task for network-human comparison and test 14 humans and 76 neural networks on 16-way ImageNet categorization in the presence of narrowband noise. We find that humans recognize objects in natural images using the same one-octave-wide channel that they use for letters and gratings, making it a canonical feature of human object recognition. On the other hand, the neural network channel, across various architectures and training strategies, is 2-4 times as wide as the human channel. In other words, networks are vulnerable to high and low frequency noise that does not affect human performance. Adversarial and augmented-image training are commonly used to increase network robustness and shape bias. Does this training align network and human object recognition channels? Three network channel properties (bandwidth, center frequency, peak noise sensitivity) correlate strongly with shape bias (53% variance explained) and with robustness of adversarially-trained networks (74% variance explained). Adversarial training increases robustness but expands the channel bandwidth even further away from the human bandwidth. Thus, critical band masking reveals that the network channel is more than twice as wide as the human channel, and that adversarial training only increases this difference.
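As a rough illustration of the narrowband noise used in critical band masking, the sketch below filters white Gaussian noise to an octave-wide annulus in the 2D Fourier domain. It is a minimal example under stated assumptions, not the procedure from the paper: the function names `band_mask` and `narrowband_noise`, the hard-edged (ideal) band-pass filter, the frequency unit (cycles per image), and all default values are hypothetical choices made for illustration.

```python
import numpy as np


def band_mask(size, center_freq, bandwidth_octaves=1.0):
    """Boolean mask selecting radial frequencies inside the band.

    Frequencies are in cycles per image (an assumed unit). The band spans
    half the bandwidth on either side of center_freq on a log2 axis.
    """
    freqs = np.fft.fftfreq(size) * size              # cycles per image
    radius = np.hypot(freqs[None, :], freqs[:, None])
    low = center_freq * 2.0 ** (-bandwidth_octaves / 2)
    high = center_freq * 2.0 ** (bandwidth_octaves / 2)
    return (radius >= low) & (radius <= high)


def narrowband_noise(size, center_freq, bandwidth_octaves=1.0, sd=0.1, seed=0):
    """White Gaussian noise band-passed to the given band, rescaled to SD `sd`."""
    rng = np.random.default_rng(seed)
    white = rng.standard_normal((size, size))
    mask = band_mask(size, center_freq, bandwidth_octaves)
    # The mask is symmetric in frequency, so the inverse transform is real
    # up to floating-point error; .real discards that residue.
    filtered = np.fft.ifft2(np.fft.fft2(white) * mask).real
    return filtered * (sd / filtered.std())


# Hypothetical usage: one-octave noise centered at 8 cycles per image.
noise = narrowband_noise(size=64, center_freq=8.0)
```

In a masking experiment, noise like this would be added to each test image at a range of center frequencies, and recognition accuracy measured at each one; the frequencies at which noise hurts accuracy most trace out the channel.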

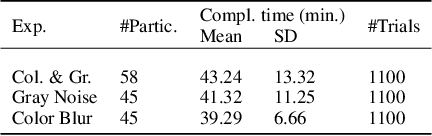

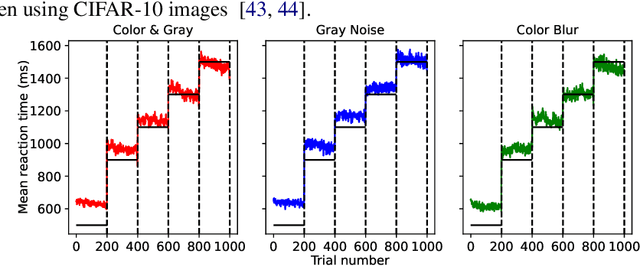

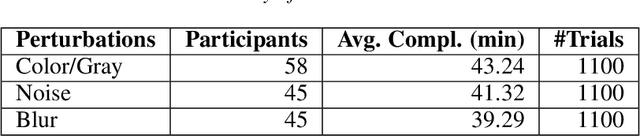

SATBench: Benchmarking the speed-accuracy tradeoff in object recognition by humans and dynamic neural networks

Jun 16, 2022

Abstract: The core of everyday tasks like reading and driving is active object recognition. Attempts to model such tasks are currently stymied by the inability to incorporate time. People show a flexible tradeoff between speed and accuracy, and this tradeoff is a crucial human skill. Deep neural networks have emerged as promising candidates for predicting peak human object recognition performance and neural activity. However, modeling the temporal dimension, i.e., the speed-accuracy tradeoff (SAT), is essential for them to serve as useful computational models of how humans recognize objects. To this end, we here present the first large-scale (148 observers, 4 neural networks, 8 tasks) dataset of the speed-accuracy tradeoff (SAT) in recognizing ImageNet images. In each human trial, a beep, indicating the desired reaction time, sounds at a fixed delay after the image is presented, and the observer's response counts only if it occurs near the time of the beep. In a series of blocks, we test many beep latencies, i.e., reaction times. We observe that human accuracy increases with reaction time and proceed to compare its characteristics with the behavior of several dynamic neural networks that are capable of inference-time adaptive computation. Using FLOPs as an analog for reaction time, we compare networks with humans on curve-fit error, category-wise correlation, and curve steepness, and conclude that cascaded dynamic neural networks are a promising model of human reaction time in object recognition tasks.

Anytime Prediction as a Model of Human Reaction Time

Nov 25, 2020

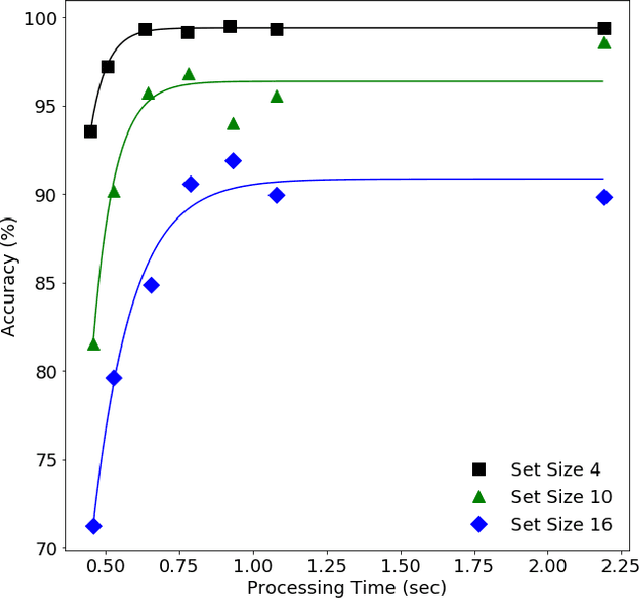

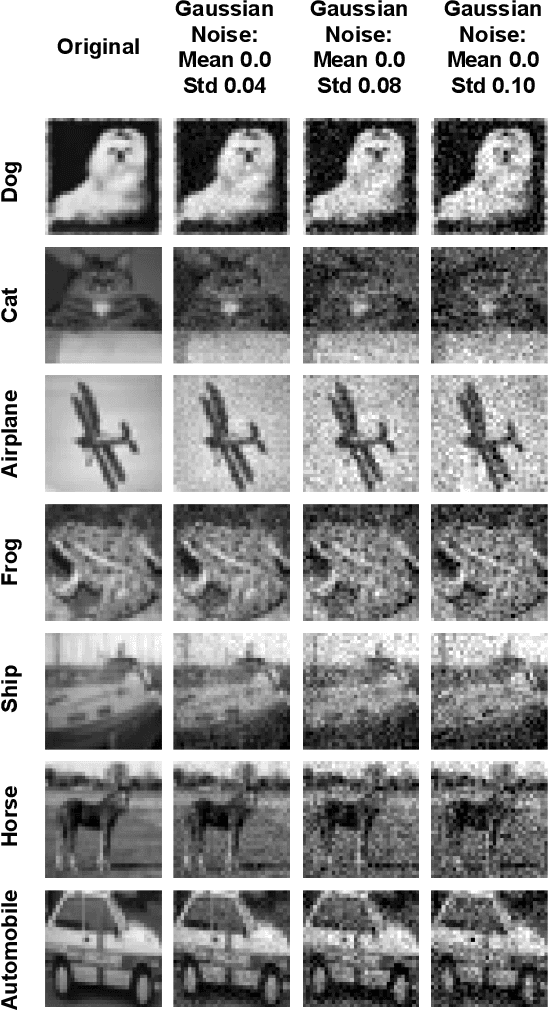

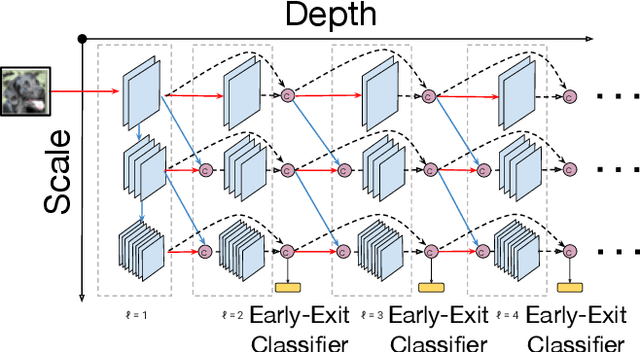

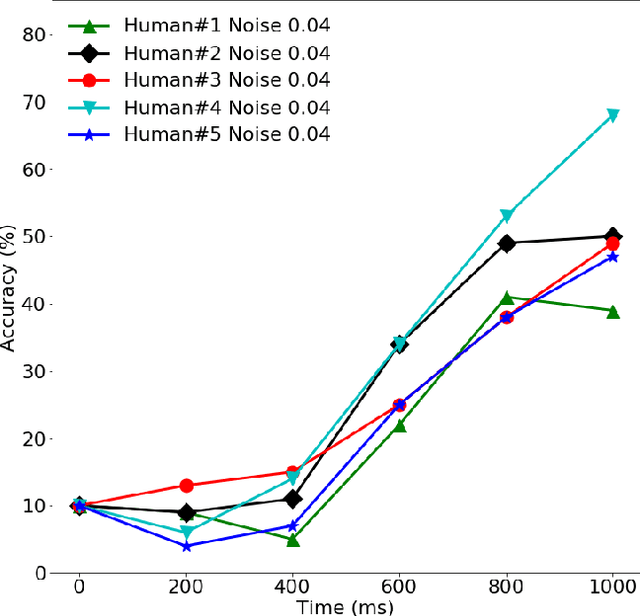

Abstract: Neural networks today often recognize objects as well as people do, and thus might serve as models of the human recognition process. However, most such networks provide their answer after a fixed computational effort, whereas human reaction time varies, e.g. from 0.2 to 10 s, depending on the properties of stimulus and task. To model the effect of difficulty on human reaction time, we considered a classification network that uses early-exit classifiers to make anytime predictions. Comparing human and MSDNet accuracy in classifying CIFAR-10 images in added Gaussian noise, we find that the network equivalent input noise SD is 15 times higher than human, and that human efficiency is only 0.6% that of the network. When appropriate amounts of noise are present to bring the two observers (human and network) into the same accuracy range, they show very similar dependence on duration or FLOPS, i.e. very similar speed-accuracy tradeoff. We conclude that Anytime classification (i.e. early exits) is a promising model for human reaction time in recognition tasks.
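The idea of anytime prediction with early exits can be sketched in a few lines: given a compute budget, the network reports the answer of the deepest exit it can afford. The example below is a toy illustration only, assuming precomputed class probabilities at each exit; the function name `anytime_predict`, the probability values, and the FLOP counts are all hypothetical and are not taken from MSDNet or the paper.

```python
import numpy as np


def anytime_predict(exit_probs, exit_flops, budget):
    """Return (exit_index, predicted_class) for the deepest affordable exit.

    exit_probs: array of shape (num_exits, num_classes), class probabilities
                produced by each early-exit classifier (assumed precomputed).
    exit_flops: cumulative FLOPs needed to reach each exit, in increasing order.
    budget:     FLOPs available for this input.
    Returns (None, None) if even the first exit exceeds the budget.
    """
    exit_probs = np.asarray(exit_probs)
    exit_flops = np.asarray(exit_flops)
    affordable = np.nonzero(exit_flops <= budget)[0]
    if affordable.size == 0:
        return None, None
    k = int(affordable[-1])
    return k, int(np.argmax(exit_probs[k]))


# Hypothetical three-exit network on a three-class problem.
probs = [
    [0.5, 0.3, 0.2],    # exit 0: cheap, leans toward class 0
    [0.3, 0.4, 0.3],    # exit 1: leans toward class 1
    [0.1, 0.8, 0.1],    # exit 2: most expensive, confident in class 1
]
flops = [1e6, 4e6, 9e6]

small_budget = anytime_predict(probs, flops, budget=2e6)
large_budget = anytime_predict(probs, flops, budget=1e7)
```

Sweeping `budget` and recording accuracy at each value yields an accuracy-versus-FLOPs curve, which is the network-side analog of a human speed-accuracy tradeoff curve.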

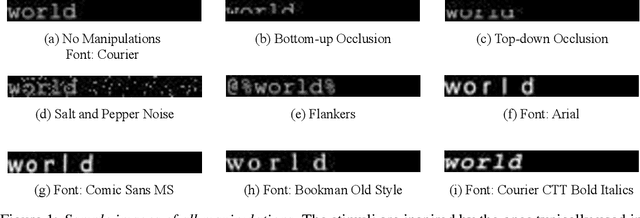

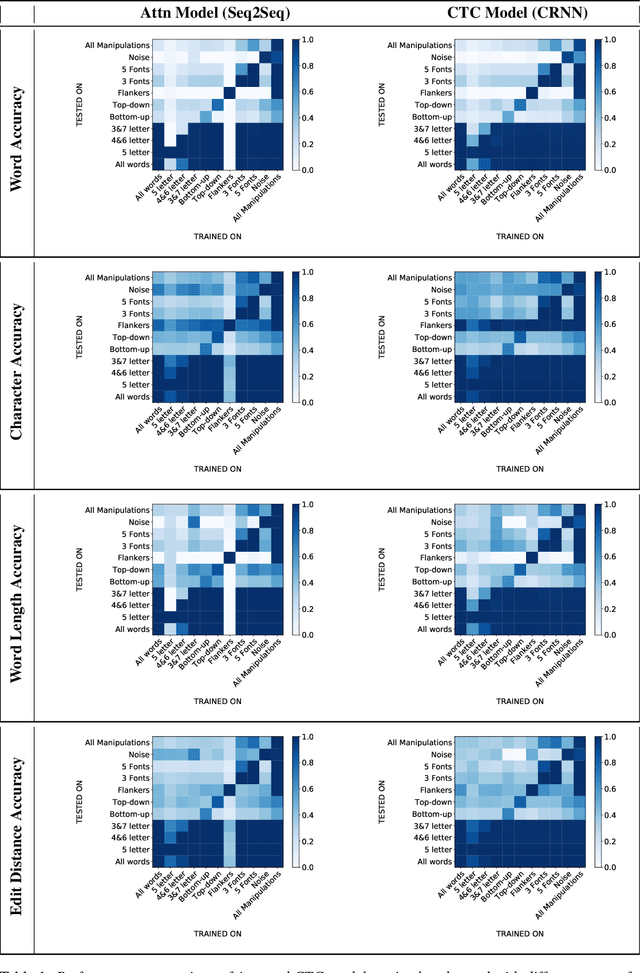

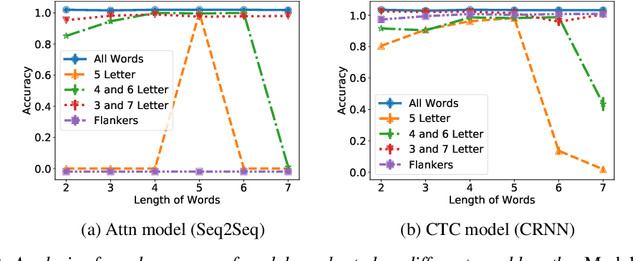

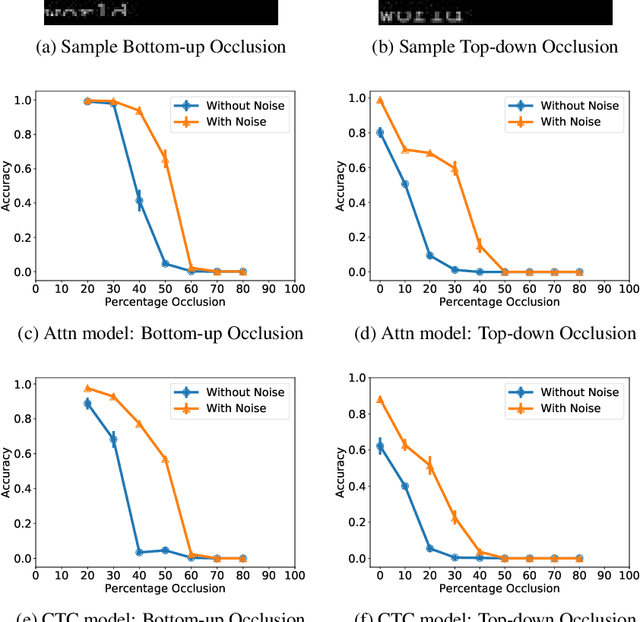

Using Human Psychophysics to Evaluate Generalization in Scene Text Recognition Models

Jun 30, 2020

Abstract: Scene text recognition models have advanced greatly in recent years. Inspired by human reading, we characterize two important scene text recognition models by measuring their domains, i.e., the range of stimulus images that they can read. The domain specifies the ability of readers to generalize to different word lengths, fonts, and amounts of occlusion. These metrics identify strengths and weaknesses of existing models. Relative to the attention-based (Attn) model, we discover that the connectionist temporal classification (CTC) model is more robust to noise and occlusion, and better at generalizing to different word lengths. Further, we show that in both models, adding noise to training images yields better generalization to occlusion. These results demonstrate the value of testing models till they break, complementing the traditional data science focus on optimizing performance.
