Deepak Srivatsav

COBRA: Contrastive Bi-Modal Representation Algorithm

May 24, 2020

Abstract: A wide range of applications involve multi-modal data, such as cross-modal retrieval, visual question-answering, and image captioning. Such applications depend primarily on aligned distributions of the different constituent modalities. Existing approaches generate latent embeddings for each modality in a joint fashion by representing them in a common manifold. However, these joint embedding spaces fail to sufficiently reduce the modality gap, which affects performance in downstream tasks. We hypothesize that these embeddings retain the intra-class relationships but are unable to preserve the inter-class dynamics. In this paper, we present a novel framework, COBRA, that aims to train two modalities (image and text) in a joint fashion, inspired by the Contrastive Predictive Coding (CPC) and Noise Contrastive Estimation (NCE) paradigms, which preserve both inter- and intra-class relationships. We empirically show that this framework reduces the modality gap significantly and generates a robust and task-agnostic joint embedding space. We outperform existing work on four diverse downstream tasks spanning seven benchmark cross-modal datasets.
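The abstract does not state the exact COBRA objective, so the following is only a minimal sketch of a generic InfoNCE-style contrastive loss between paired image and text embeddings, the family of losses that CPC and NCE motivate. The function name `info_nce`, the `temperature` value, and the toy two-dimensional embeddings are assumptions made for illustration, not details from the paper.

```python
import math


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(dot(v, v))
    return [a / norm for a in v]


def info_nce(image_embs, text_embs, temperature=0.1):
    """Mean InfoNCE loss: image i's positive is text i, all other texts are negatives.

    Hypothetical helper for illustration; not the loss defined in the paper.
    """
    imgs = [normalize(v) for v in image_embs]
    txts = [normalize(v) for v in text_embs]
    total = 0.0
    for i, img in enumerate(imgs):
        logits = [dot(img, t) / temperature for t in txts]
        # Numerically stable log-sum-exp over all candidate texts.
        m = max(logits)
        log_denom = m + math.log(sum(math.exp(l - m) for l in logits))
        # Negative log-probability of picking the matching text.
        total += log_denom - logits[i]
    return total / len(imgs)


# Toy embeddings: in `aligned`, each image matches its own text;
# in `swapped`, each image is closest to the wrong text.
aligned = info_nce([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
swapped = info_nce([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
print(aligned < swapped)
```

In this sketch the loss is small when matching image and text embeddings are close and mismatched pairs are far apart, which is one way a smaller modality gap would show up in a joint embedding space.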

Attentional Road Safety Networks

Dec 12, 2018

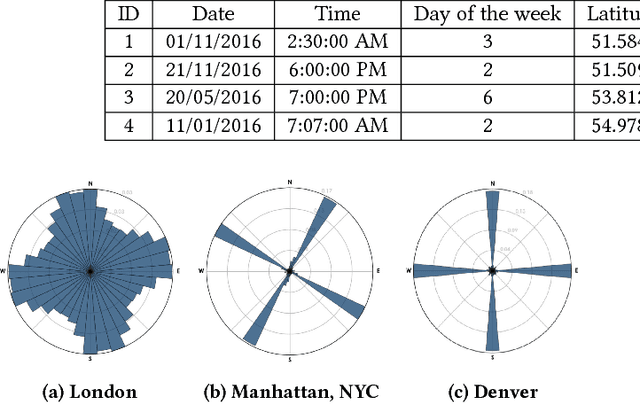

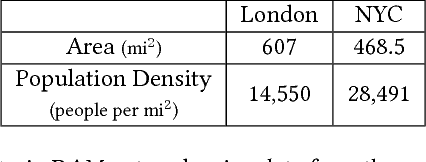

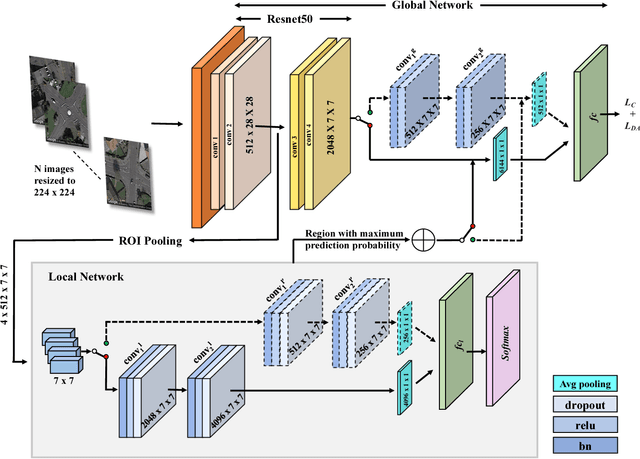

Abstract: Road safety mapping using satellite images is a cost-effective but challenging problem for smart city planning. The scarcity of labeled data, misalignment, and ambiguity make it hard for supervised deep networks to learn efficient embeddings for classifying road segments as safe or dangerous. In this paper, we address these challenges using a region-guided attention network. In our model, we extract global features from a base network and augment them with local features obtained using the region-guided attention network. In addition, we perform domain adaptation for unlabeled target data. To bridge the gap between safe samples and dangerous samples from source and target respectively, we propose a loss function based on within-class and between-class covariance matrices. We conduct experiments on a public dataset of London and show that the algorithm achieves a classification accuracy of 86.21%. We obtain a 4% increase in accuracy for NYC using the domain adaptation network. In addition, we perform a user study and demonstrate that our proposed algorithm achieves 23.12% better accuracy than subjective analysis.
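The abstract does not give the form of the proposed loss, so the following is only a minimal sketch of the quantities it names: the standard within-class and between-class scatter matrices (unnormalized covariances) computed from labeled feature vectors. The function name `scatter_matrices` and the toy "safe" and "dangerous" features are assumptions made for illustration.

```python
def mean_vector(rows):
    """Column-wise mean of a list of equal-length vectors."""
    n = len(rows)
    return [sum(col) / n for col in zip(*rows)]


def outer(u, v):
    """Outer product u v^T as a nested list."""
    return [[a * b for b in v] for a in u]


def add_matrices(a, b):
    """Element-wise sum of two matrices of the same shape."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def scatter_matrices(features, labels):
    """Return (S_w, S_b): within-class and between-class scatter matrices.

    Hypothetical helper for illustration; not the loss defined in the paper.
    """
    dim = len(features[0])
    overall = mean_vector(features)
    s_w = [[0.0] * dim for _ in range(dim)]
    s_b = [[0.0] * dim for _ in range(dim)]
    for c in sorted(set(labels)):
        rows = [f for f, y in zip(features, labels) if y == c]
        mu = mean_vector(rows)
        # Within-class: spread of each sample around its own class mean.
        for f in rows:
            d = [a - b for a, b in zip(f, mu)]
            s_w = add_matrices(s_w, outer(d, d))
        # Between-class: spread of the class mean around the overall mean,
        # weighted by the number of samples in the class.
        d = [a - b for a, b in zip(mu, overall)]
        weighted = [[len(rows) * x for x in row] for row in outer(d, d)]
        s_b = add_matrices(s_b, weighted)
    return s_w, s_b


# Toy 2-D features: label 0 = "safe", label 1 = "dangerous".
features = [[0.0, 0.0], [0.0, 2.0], [4.0, 0.0], [4.0, 2.0]]
labels = [0, 0, 1, 1]
s_w, s_b = scatter_matrices(features, labels)
print(s_w, s_b)
```

A loss built on these matrices would typically reward small within-class scatter and large between-class scatter, in the spirit of linear discriminant analysis; whether the paper combines them this way is not stated in the abstract.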
