Davide Montella

Institute of Cognitive Sciences and Technologies

An open-ended learning architecture to face the REAL 2020 simulated robot competition

Nov 27, 2020

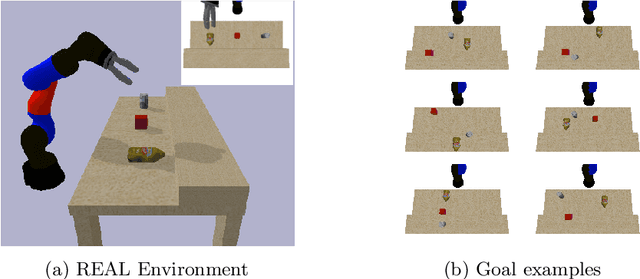

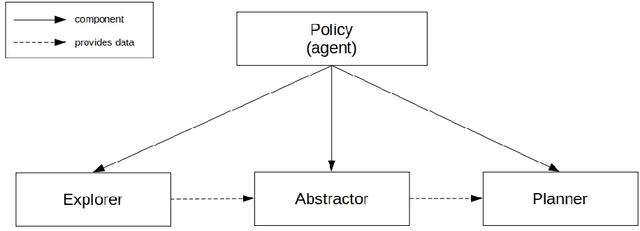

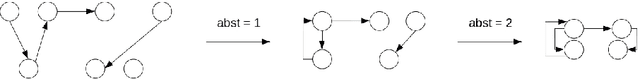

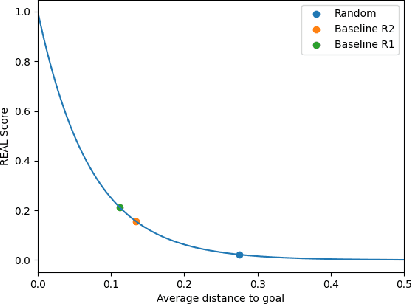

Abstract: Open-ended learning is a core research field of machine learning and robotics that aims to build learning machines and robots able to autonomously acquire knowledge and skills and to reuse them to solve novel tasks. The multiple challenges posed by open-ended learning have been operationalized in the robotic competition REAL 2020. The competition requires a simulated camera-arm-gripper robot (a) to autonomously learn to interact with objects during an intrinsic phase, in which it can learn how to move objects, and then (b) during an extrinsic phase, to reuse the acquired knowledge to accomplish externally given goals that require moving objects to specific locations unknown during the intrinsic phase. Here we present a 'baseline architecture' for solving the challenge, provided as the baseline model for REAL 2020. Few models have all the functionalities needed to solve the REAL 2020 benchmark, and none has been tested with it yet. The architecture we propose is formed by three components: (1) Abstractor: abstracting sensory input to learn relevant control variables from images; (2) Explorer: generating experience to learn goals and actions; (3) Planner: formulating and executing action plans to accomplish the externally provided goals. The architecture is the first model to solve the simpler REAL 2020 'Round 1', which allows the use of a simple parameterised push action. On Round 2, the architecture was used with a more general action (a sequence of joint positions), again achieving higher-than-chance performance. The baseline software is well documented and available for download and use at https://github.com/AIcrowd/REAL2020_starter_kit.
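To make the division of labour among the three components concrete, here is a toy sketch in a one-dimensional grid world. It is not the code from the starter kit: the world, the one-hot "image", and the function names `render`, `abstract`, `explore`, and `plan` are all assumptions made for illustration, and breadth-first search is used only as a simple stand-in for whatever planner the baseline actually employs.

```python
import random
from collections import deque


def render(position, size):
    """Toy camera (hypothetical): a one-hot list marking the object's cell."""
    return [1 if i == position else 0 for i in range(size)]


def abstract(image):
    """Abstractor stand-in: reduce the 'image' to one control variable,
    the index of the cell that contains the object."""
    return image.index(1)


def explore(size, steps, rng):
    """Explorer stand-in: apply random pushes (-1 or +1) and record the
    observed (state, action, next_state) transitions."""
    transitions = set()
    position = rng.randrange(size)
    for _ in range(steps):
        action = rng.choice([-1, 1])
        # The object cannot leave the grid, so pushes are clamped.
        next_position = min(max(position + action, 0), size - 1)
        state = abstract(render(position, size))
        next_state = abstract(render(next_position, size))
        transitions.add((state, action, next_state))
        position = next_position
    return transitions


def plan(transitions, start, goal):
    """Planner stand-in: breadth-first search over recorded transitions.
    Returns a list of actions, or None if the goal is unreachable."""
    frontier = deque([(start, [])])
    visited = {start}
    while frontier:
        state, actions = frontier.popleft()
        if state == goal:
            return actions
        for s, a, s_next in transitions:
            if s == state and s_next not in visited:
                visited.add(s_next)
                frontier.append((s_next, actions + [a]))
    return None
```

In this sketch the intrinsic phase corresponds to calling `explore`, and the extrinsic phase corresponds to calling `plan` with a goal state that was not known while exploring.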

Autonomous learning of multiple, context-dependent tasks

Nov 27, 2020

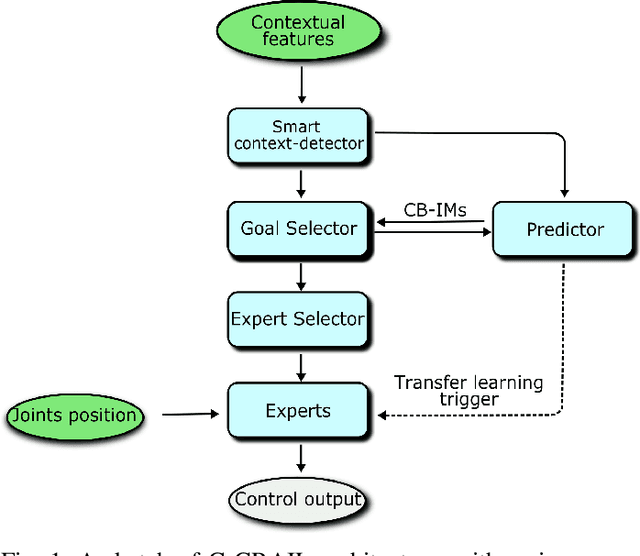

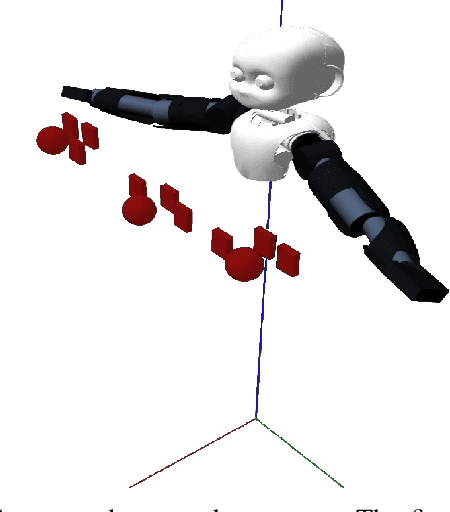

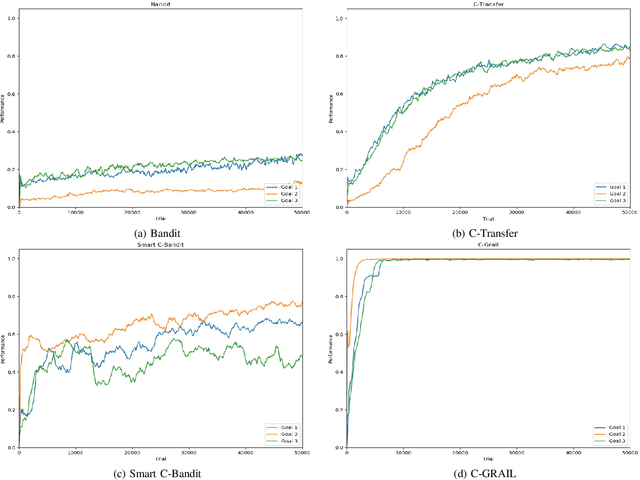

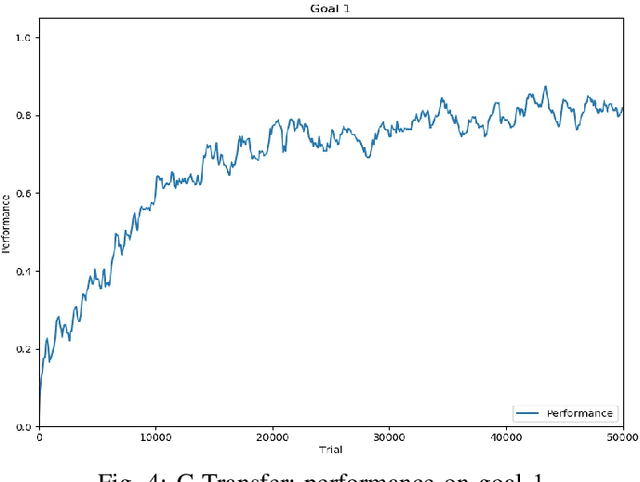

Abstract: When facing the problem of autonomously learning multiple tasks with reinforcement learning systems, researchers typically focus on solutions where just one parametrised policy per task is sufficient to solve them. However, in complex environments presenting different contexts, the same task might need a set of different skills to be solved. These situations pose two challenges: (a) to recognise the different contexts that need different policies; (b) to quickly learn the policies that accomplish the same tasks in the newly discovered contexts. These two challenges are even harder if faced within an open-ended learning framework where an agent has to autonomously discover the goals that it might accomplish in a given environment, and also to learn the motor skills to accomplish them. We propose a novel open-ended learning robot architecture, C-GRAIL, that solves the two challenges in an integrated fashion. In particular, the architecture is able to detect new relevant contexts, and ignore irrelevant ones, on the basis of the decrease of the expected performance for a given goal. Moreover, the architecture can quickly learn the policies for the new contexts by exploiting transfer learning, importing knowledge from already acquired policies. The architecture is tested in a simulated robotic environment involving a robot that autonomously learns to reach relevant target objects in the presence of multiple obstacles generating several different contexts. The proposed architecture outperforms other models not using the proposed autonomous context-discovery and transfer-learning mechanisms.
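The idea of detecting a new context from a drop in expected performance can be illustrated with a minimal sketch. This is not C-GRAIL's implementation: the class name `PerformanceDropDetector`, the sliding window, and the fixed threshold are assumptions chosen only to show the principle that a goal's recent success rate is compared against the rate the agent had come to expect.

```python
from collections import deque


class PerformanceDropDetector:
    """Hypothetical detector: flags a possible new context for a goal when
    the recent success rate falls well below the expected success rate."""

    def __init__(self, window=4, threshold=0.5):
        self.window = window          # number of recent attempts to average
        self.threshold = threshold    # minimum drop that counts as relevant
        self.expected = {}            # goal -> expected success rate
        self.recent = {}              # goal -> outcomes of recent attempts

    def set_expected(self, goal, rate):
        """Store the success rate already achieved for this goal."""
        self.expected[goal] = rate

    def observe(self, goal, success):
        """Record one attempt; return True if a new context is suspected."""
        outcomes = self.recent.setdefault(goal, deque(maxlen=self.window))
        outcomes.append(1.0 if success else 0.0)
        if len(outcomes) < self.window:
            return False  # not enough evidence yet
        observed = sum(outcomes) / len(outcomes)
        return self.expected.get(goal, 0.0) - observed > self.threshold


# Usage: a goal that used to succeed 90% of the time starts failing.
detector = PerformanceDropDetector(window=4, threshold=0.5)
detector.set_expected("reach_target", 0.9)
flags = [detector.observe("reach_target", ok)
         for ok in [True, True, True, True, False, False, False]]
```

Under this scheme, a change in the environment that does not hurt performance on a goal never triggers the detector, which is one simple way to read "ignore irrelevant ones" in the abstract.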
