Autonomous learning of multiple, context-dependent tasks

Paper and Code

Nov 27, 2020

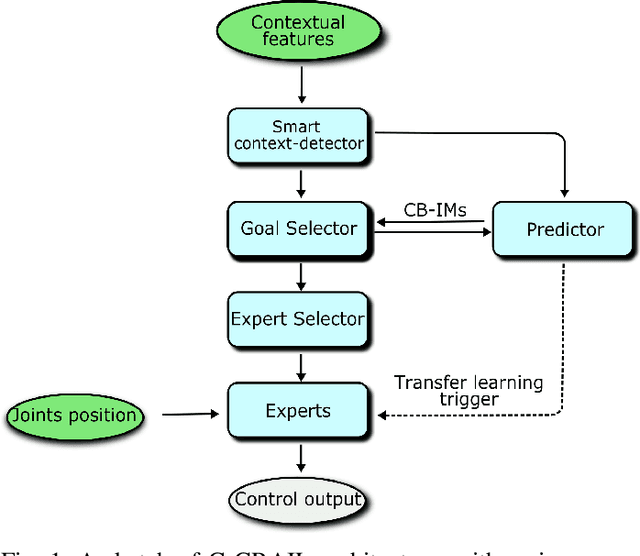

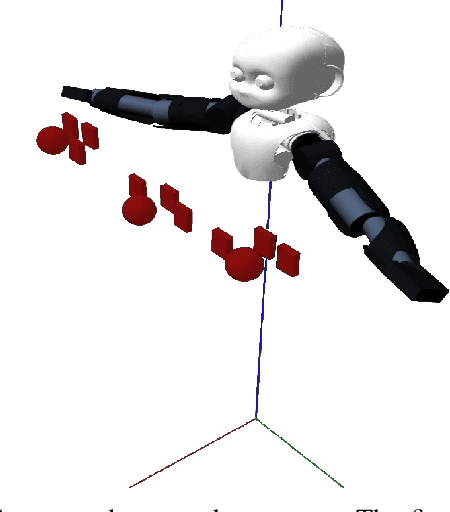

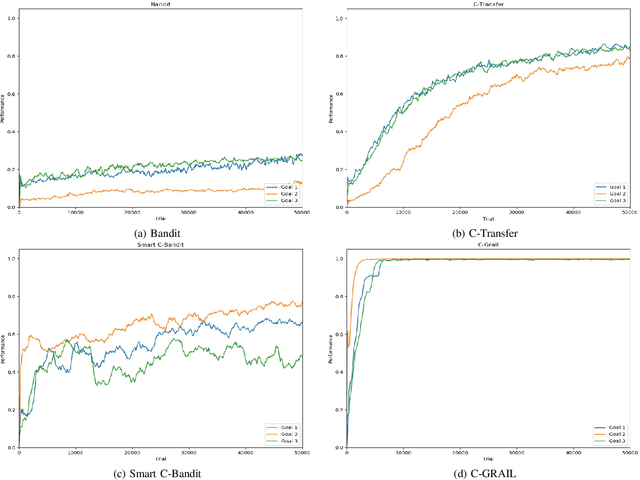

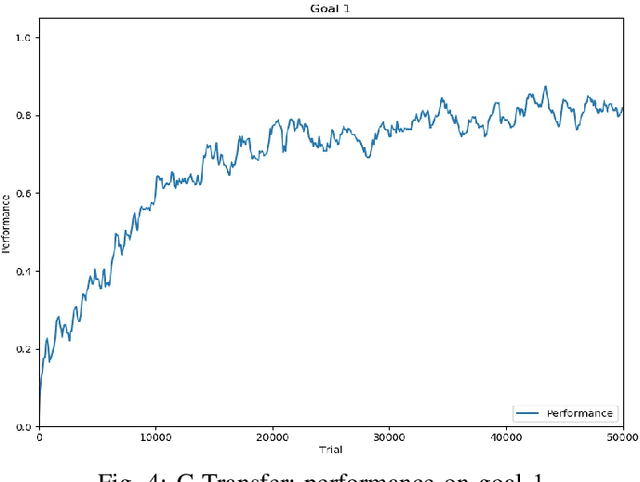

When facing the problem of autonomously learning multiple tasks with reinforcement learning systems, researchers typically focus on solutions where a single parametrised policy per task is sufficient. However, in complex environments presenting different contexts, the same task might require a set of different skills. These situations pose two challenges: (a) to recognise the different contexts that need different policies; (b) to quickly learn the policies that accomplish the same tasks in the newly discovered contexts. These two challenges are even harder within an open-ended learning framework, where an agent has to autonomously discover the goals that it might accomplish in a given environment and also learn the motor skills to accomplish them. We propose a novel open-ended learning robot architecture, C-GRAIL, that solves the two challenges in an integrated fashion. In particular, the architecture is able to detect new relevant contexts, and ignore irrelevant ones, on the basis of a decrease in the expected performance for a given goal. Moreover, the architecture can quickly learn the policies for the new contexts by exploiting transfer learning, importing knowledge from already acquired policies. The architecture is tested in a simulated robotic environment involving a robot that autonomously learns to reach relevant target objects in the presence of multiple obstacles generating several different contexts. The proposed architecture outperforms other models that do not use the proposed autonomous context-discovery and transfer-learning mechanisms.
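The two mechanisms named in the abstract can be illustrated with a minimal sketch. This is not the C-GRAIL implementation: the class `GoalMonitor`, the function `transfer_policy`, the moving-average update, and all thresholds are hypothetical choices made for illustration only. The sketch assumes that a context change shows up as recent success on a goal falling well below its long-run expectation, and that a new policy can be initialised by copying the parameters of the stored policy that scores best in the new context.

```python
from dataclasses import dataclass, field


@dataclass
class GoalMonitor:
    """Tracks expected performance for one goal (hypothetical helper)."""
    drop_threshold: float = 0.3  # assumed: drop that signals a new context
    window: int = 5              # assumed: number of recent trials compared
    learning_rate: float = 0.1   # assumed: step size of the running estimate
    expected: float = 0.0        # long-run success estimate for this goal
    recent: list = field(default_factory=list)

    def update(self, success: bool) -> bool:
        """Record one trial; return True if a new context is suspected."""
        outcome = 1.0 if success else 0.0
        self.recent = (self.recent + [outcome])[-self.window:]
        recent_mean = sum(self.recent) / len(self.recent)
        new_context = (
            len(self.recent) == self.window
            and self.expected - recent_mean > self.drop_threshold
        )
        if not new_context:
            # Only track the expectation while performance looks normal.
            self.expected += self.learning_rate * (outcome - self.expected)
        return new_context


def transfer_policy(policies: dict, scores: dict) -> list:
    """Copy the parameters of the stored policy that scores best
    in the new context (assumed transfer rule, for illustration)."""
    best = max(scores, key=scores.get)
    return list(policies[best])
```

Under these assumptions, an irrelevant change in the environment leaves the success rate untouched and is never flagged, while a relevant one triggers the creation of a new policy that starts from the copied parameters rather than from scratch.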
