David V. Pynadath

Operational Collective Intelligence of Humans and Machines

Feb 16, 2024

Abstract: We explore the use of aggregative crowdsourced forecasting (ACF) as a mechanism to help operationalize "collective intelligence" of human-machine teams for coordinated actions. We adopt the definition for Collective Intelligence as: "A property of groups that emerges from synergies among data-information-knowledge, software-hardware, and individuals (those with new insights as well as recognized authorities) that enables just-in-time knowledge for better decisions than these three elements acting alone." Collective Intelligence emerges from new ways of connecting humans and AI to enable decision-advantage, in part by creating and leveraging additional sources of information that might otherwise not be included. Aggregative crowdsourced forecasting (ACF) is a recent key advancement towards Collective Intelligence wherein predictions (X% probability that Y will happen) and rationales (why I believe it is this probability that Y will happen) are elicited independently from a diverse crowd, aggregated, and then used to inform higher-level decision-making. This research asks whether ACF, as a key way to enable Operational Collective Intelligence, could be brought to bear on operational scenarios (i.e., sequences of events with defined agents, components, and interactions) and decision-making, and considers whether such a capability could provide novel operational capabilities to enable new forms of decision-advantage.
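The aggregation step described in this abstract can be illustrated with a minimal sketch. The abstract does not say which aggregation rule is used, so the rule below (averaging forecasts in log-odds space) is an assumption chosen for illustration, and the function name `aggregate_forecasts` and the `eps` clipping parameter are hypothetical.

```python
import math


def aggregate_forecasts(probabilities, eps=1e-6):
    """Pool independent probability forecasts by averaging their log-odds.

    Assumption: the pooling rule is illustrative only; the abstract
    does not specify how forecasts are aggregated.
    """
    if not probabilities:
        raise ValueError("need at least one forecast")
    # Clip away from 0 and 1 so the log-odds stay finite.
    clipped = [min(max(p, eps), 1 - eps) for p in probabilities]
    mean_log_odds = sum(math.log(p / (1 - p)) for p in clipped) / len(clipped)
    # Map the averaged log-odds back to a probability.
    return 1 / (1 + math.exp(-mean_log_odds))


# Three forecasters independently estimate the probability of event Y.
crowd = [0.6, 0.7, 0.8]
pooled = aggregate_forecasts(crowd)
print(f"pooled forecast: {pooled:.3f}")
```

Averaging in log-odds space treats symmetric disagreement symmetrically: forecasts of 0.2 and 0.8 pool to 0.5. Rationales are not modeled here; only the numeric predictions are aggregated.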

Spontaneous Theory of Mind for Artificial Intelligence

Feb 16, 2024

Abstract: Existing approaches to Theory of Mind (ToM) in Artificial Intelligence (AI) overemphasize prompted, or cue-based, ToM, which may limit our collective ability to develop Artificial Social Intelligence (ASI). Drawing from research in computer science, cognitive science, and related disciplines, we contrast prompted ToM with what we call spontaneous ToM: reasoning about others' mental states that is grounded in unintentional, possibly uncontrollable cognitive functions. We argue for a principled approach to studying and developing AI ToM and suggest that a robust, or general, ASI will respond to prompts *and* spontaneously engage in social reasoning.

Multiagent Inverse Reinforcement Learning via Theory of Mind Reasoning

Mar 01, 2023

Abstract: We approach the problem of understanding how people interact with each other in collaborative settings, especially when individuals know little about their teammates, via Multiagent Inverse Reinforcement Learning (MIRL), where the goal is to infer the reward functions guiding the behavior of each individual given trajectories of a team's behavior during some task. Unlike current MIRL approaches, we do not assume that team members know each other's goals a priori; rather, that they collaborate by adapting to the goals of others perceived by observing their behavior, all while jointly performing a task. To address this problem, we propose a novel approach to MIRL via Theory of Mind (MIRL-ToM). For each agent, we first use ToM reasoning to estimate a posterior distribution over baseline reward profiles given their demonstrated behavior. We then perform MIRL via decentralized equilibrium by employing single-agent Maximum Entropy IRL to infer a reward function for each agent, where we simulate the behavior of other teammates according to the time-varying distribution over profiles. We evaluate our approach in a simulated 2-player search-and-rescue operation where the goal of the agents, playing different roles, is to search for and evacuate victims in the environment. Our results show that the choice of baseline profiles is paramount to the recovery of the ground-truth rewards, and that MIRL-ToM is able to recover the rewards used by agents interacting both with known and unknown teammates.
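The first step in this abstract, estimating a posterior over baseline reward profiles from demonstrated behavior, can be sketched as a plain Bayesian update. This is not the paper's implementation: the softmax (Boltzmann) action likelihood, the profile names, the action values, and the function names are all assumptions made for illustration.

```python
import math


def softmax_policy(q_values, temperature=1.0):
    """Action probabilities under an assumed softmax-rational policy."""
    top = max(q_values.values())
    exps = {a: math.exp((q - top) / temperature) for a, q in q_values.items()}
    total = sum(exps.values())
    return {a: e / total for a, e in exps.items()}


def update_posterior(prior, q_by_profile, action):
    """One Bayes step: P(profile | action) is proportional to P(action | profile) * P(profile)."""
    unnormalized = {
        profile: prior[profile] * softmax_policy(q_by_profile[profile])[action]
        for profile in prior
    }
    total = sum(unnormalized.values())
    return {profile: w / total for profile, w in unnormalized.items()}


# Hypothetical profiles and action values for a search-and-rescue teammate.
q_by_profile = {
    "rescuer": {"search": 0.0, "evacuate": 1.0},
    "explorer": {"search": 1.0, "evacuate": 0.0},
}
posterior = {"rescuer": 0.5, "explorer": 0.5}  # uniform prior

# The distribution over profiles changes after every observed action.
for observed_action in ["evacuate", "evacuate"]:
    posterior = update_posterior(posterior, q_by_profile, observed_action)

print(posterior)
```

Because the posterior is recomputed after each observation, it gives a time-varying distribution over profiles of the kind the abstract uses when simulating teammates. The Maximum Entropy IRL step itself is not shown.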

Comparing Psychometric and Behavioral Predictors of Compliance During Human-AI Interactions

Feb 03, 2023

Abstract: Optimization of human-AI teams hinges on the AI's ability to tailor its interaction to individual human teammates. A common hypothesis in adaptive AI research is that minor differences in people's predisposition to trust can significantly impact their likelihood of complying with recommendations from the AI. Predisposition to trust is often measured with self-report inventories that are administered before interactions. We benchmark a popular measure of this kind against behavioral predictors of compliance. We find that the inventory is a less effective predictor of compliance than the behavioral measures in datasets taken from three previous research projects. This suggests a general property that individual differences in initial behavior are more predictive than differences in self-reported trust attitudes. This result also shows a potential for easily accessible behavioral measures to provide an AI with more accurate models without the use of (often costly) survey instruments.

The Role of Heuristics and Biases During Complex Choices with an AI Teammate

Jan 14, 2023

Abstract: Behavioral scientists have classically documented aversion to algorithmic decision aids, from simple linear models to AI. Sentiment, however, is changing and possibly accelerating AI helper usage. AI assistance is, arguably, most valuable when humans must make complex choices. We argue that classic experimental methods used to study heuristics and biases are insufficient for studying complex choices made with AI helpers. We adapted an experimental paradigm designed for studying complex choices in such contexts. We show that framing and anchoring effects impact how people work with an AI helper and are predictive of choice outcomes. The evidence suggests that some participants, particularly those in a loss frame, put too much faith in the AI helper and experienced worse choice outcomes by doing so. The paradigm also generates computational modeling-friendly data allowing future studies of human-AI decision making.

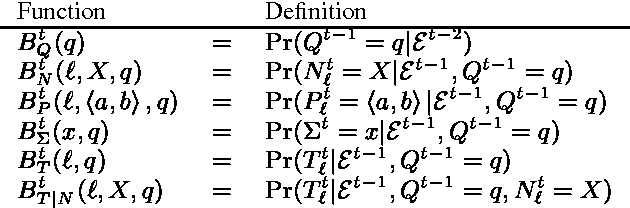

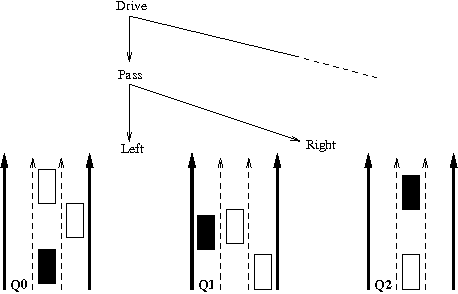

Accounting for Context in Plan Recognition, with Application to Traffic Monitoring

Feb 20, 2013

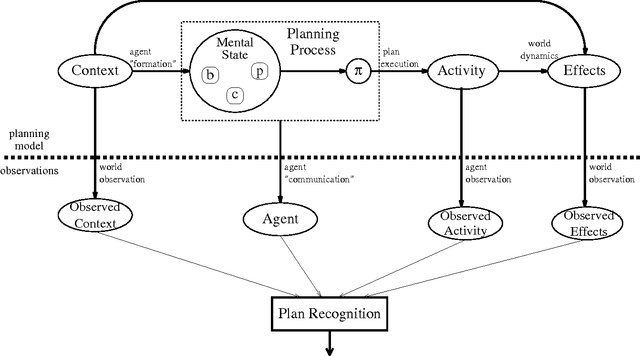

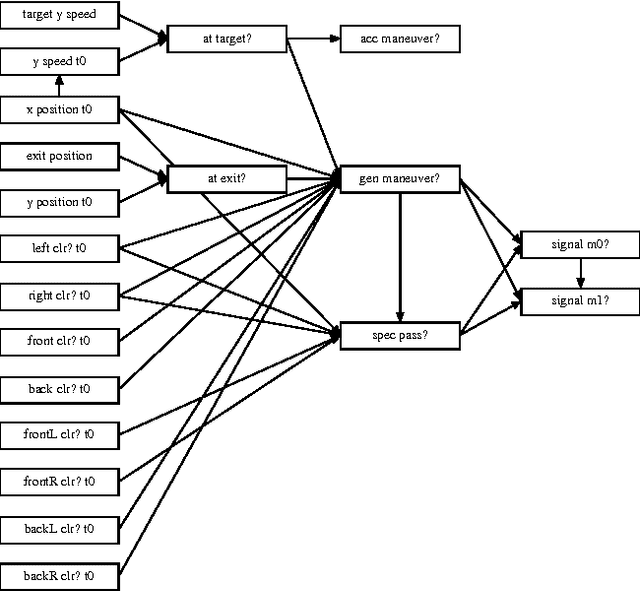

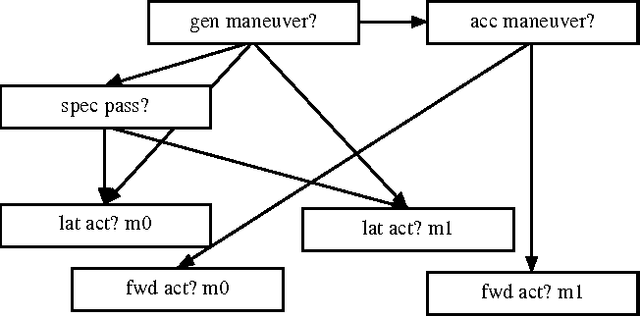

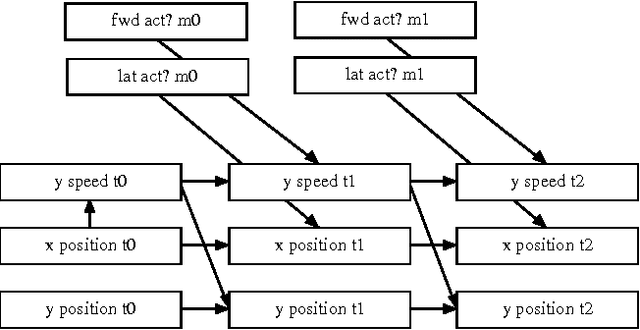

Abstract: Typical approaches to plan recognition start from a representation of an agent's possible plans, and reason evidentially from observations of the agent's actions to assess the plausibility of the various candidates. A more expansive view of the task (consistent with some prior work) accounts for the context in which the plan was generated, the mental state and planning process of the agent, and consequences of the agent's actions in the world. We present a general Bayesian framework encompassing this view, and focus on how context can be exploited in plan recognition. We demonstrate the approach on a problem in traffic monitoring, where the objective is to induce the plan of the driver from observation of vehicle movements. Starting from a model of how the driver generates plans, we show how the highway context can appropriately influence the recognizer's interpretation of observed driver behavior.
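One way to read the role of context in this framework is through Bayes' rule. The symbols below ($\pi$ for the driver's plan, $o_{1:t}$ for the observed vehicle movements, $c$ for the highway context) are notation assumed here for illustration, not taken from the paper.

```latex
P(\pi \mid o_{1:t}, c)
  = \frac{P(o_{1:t} \mid \pi, c)\, P(\pi \mid c)}
         {\sum_{\pi'} P(o_{1:t} \mid \pi', c)\, P(\pi' \mid c)}
```

Under this reading, context enters twice: it shapes the prior over plans, $P(\pi \mid c)$, and it shapes how a given plan shows up in the observations, $P(o_{1:t} \mid \pi, c)$.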

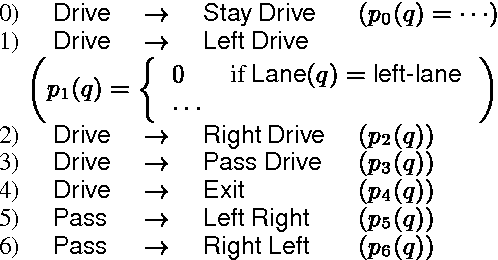

Probabilistic State-Dependent Grammars for Plan Recognition

Jan 16, 2013

Abstract: Techniques for plan recognition under uncertainty require a stochastic model of the plan-generation process. We introduce Probabilistic State-Dependent Grammars (PSDGs) to represent an agent's plan-generation process. The PSDG language model extends probabilistic context-free grammars (PCFGs) by allowing production probabilities to depend on an explicit model of the planning agent's internal and external state. Given a PSDG description of the plan-generation process, we can then use inference algorithms that exploit the particular independence properties of the PSDG language to efficiently answer plan-recognition queries. The combination of the PSDG language model and inference algorithms extends the range of plan-recognition domains for which practical probabilistic inference is possible, as illustrated by applications in traffic monitoring and air combat.
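The central idea, production probabilities that depend on state, can be sketched with a toy grammar. Everything here is hypothetical: the rule set, the `slow_car_ahead` state variable, the probabilities, and the function names are invented for illustration. The sketch also keeps the state fixed during expansion and covers only generation, not the inference algorithms the abstract refers to.

```python
import random

# Each nonterminal maps to a list of (right-hand side, probability function).
# Unlike a PCFG, the probability of a production is a function of the state.
RULES = {
    "Drive": [
        (["Pass"], lambda s: 0.8 if s["slow_car_ahead"] else 0.1),
        (["Stay"], lambda s: 0.2 if s["slow_car_ahead"] else 0.9),
    ],
    "Pass": [(["change_left", "accelerate", "change_right"], lambda s: 1.0)],
    "Stay": [(["keep_lane"], lambda s: 1.0)],
}


def production_probs(symbol, state):
    """Evaluate the state-dependent probability of each production."""
    return [(rhs, prob(state)) for rhs, prob in RULES[symbol]]


def expand(symbol, state, rng):
    """Sample a sequence of terminal actions derived from `symbol`."""
    if symbol not in RULES:  # terminal symbol: an observable action
        return [symbol]
    options = production_probs(symbol, state)
    right_hand_sides = [rhs for rhs, _ in options]
    weights = [p for _, p in options]
    chosen = rng.choices(right_hand_sides, weights=weights, k=1)[0]
    actions = []
    for child in chosen:
        actions.extend(expand(child, state, rng))
    return actions


rng = random.Random(0)
print(expand("Drive", {"slow_car_ahead": True}, rng))
```

With `slow_car_ahead` set to `True`, the `Pass` production is the likelier expansion of `Drive`; with it set to `False`, `Stay` dominates. A plain PCFG would assign the same probabilities in both situations.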
