David Eng

A Generalizable Deep Learning System for Cardiac MRI

Dec 01, 2023

Abstract: Cardiac MRI allows for a comprehensive assessment of myocardial structure, function, and tissue characteristics. Here we describe a foundational vision system for cardiac MRI, capable of representing the breadth of human cardiovascular disease and health. Our deep learning model is trained via self-supervised contrastive learning, in which visual concepts in cine-sequence cardiac MRI scans are learned from the raw text of the accompanying radiology reports. We train and evaluate our model on data from four large academic clinical institutions in the United States. We additionally showcase the performance of our models on the UK Biobank and two additional publicly available external datasets. We explore emergent zero-shot capabilities of our system and demonstrate strong performance across a range of tasks, including left ventricular ejection fraction regression and the diagnosis of 35 different conditions such as cardiac amyloidosis and hypertrophic cardiomyopathy. We show that our deep learning system not only captures the complexity of human cardiovascular disease but can also be directed towards clinical problems of interest, yielding clinical-grade diagnostic accuracy with a fraction of the training data typically required for such tasks.
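The abstract does not spell out the contrastive objective. A common way to learn visual concepts from paired report text is a CLIP-style symmetric InfoNCE loss, where the i-th scan and the i-th report in a batch form the positive pair. The sketch below assumes that setup. The embeddings, the `temperature` value, and the label names in the usage example are illustrative only and do not reproduce the authors' model. Zero-shot diagnosis then amounts to picking the text prompt whose embedding lies closest to the scan embedding.

```python
import math

def l2_normalize(v):
    """Scale a vector to unit Euclidean length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def cosine_matrix(a, b):
    """Pairwise cosine similarities between the rows of a and the rows of b."""
    a = [l2_normalize(v) for v in a]
    b = [l2_normalize(v) for v in b]
    return [[sum(x * y for x, y in zip(u, w)) for w in b] for u in a]

def cross_entropy_row(logits, target):
    """Softmax cross-entropy of one row of logits against the index `target`."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[target]

def symmetric_contrastive_loss(image_emb, text_emb, temperature=0.07):
    """CLIP-style loss (assumed): scan i and report i are the positive pair."""
    sims = cosine_matrix(image_emb, text_emb)
    logits = [[s / temperature for s in row] for row in sims]
    n = len(logits)
    # Image-to-text direction: each scan should pick out its own report.
    loss_i2t = sum(cross_entropy_row(logits[i], i) for i in range(n)) / n
    # Text-to-image direction: transpose so each report picks out its own scan.
    cols = [list(col) for col in zip(*logits)]
    loss_t2i = sum(cross_entropy_row(cols[i], i) for i in range(n)) / n
    return (loss_i2t + loss_t2i) / 2

def zero_shot_predict(image_vec, prompt_embs, labels):
    """Return the label whose text-prompt embedding is most similar to the scan."""
    sims = cosine_matrix([image_vec], prompt_embs)[0]
    best = max(range(len(labels)), key=lambda k: sims[k])
    return labels[best]

# Usage with toy 2-D embeddings (hypothetical values and labels):
aligned = symmetric_contrastive_loss([[1, 0], [0, 1]], [[1, 0], [0, 1]])
shuffled = symmetric_contrastive_loss([[1, 0], [0, 1]], [[0, 1], [1, 0]])
label = zero_shot_predict([0.9, 0.1], [[1, 0], [0, 1]], ["amyloidosis", "HCM"])
```

When scan and report embeddings are aligned, the loss is near zero. When they are mismatched, the loss is large. That gradient signal is what pulls matching scan and report embeddings together during training.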

Autonomous Driving in the Lung using Deep Learning for Localization

Jul 16, 2019

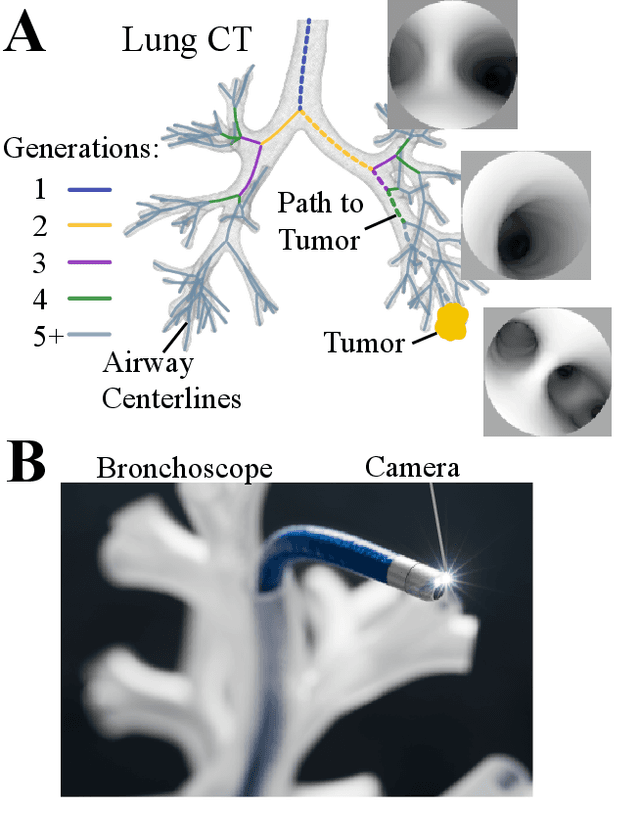

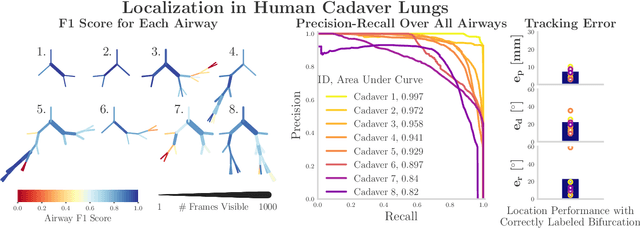

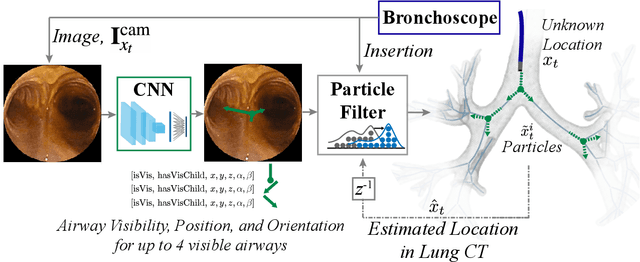

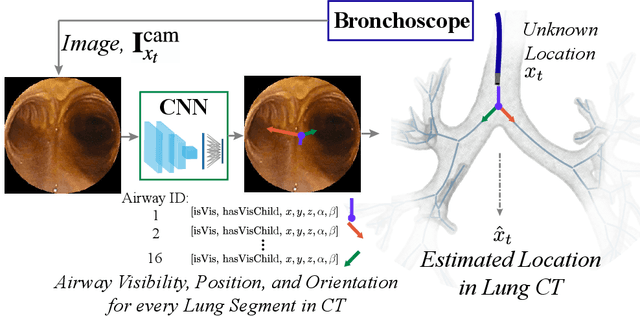

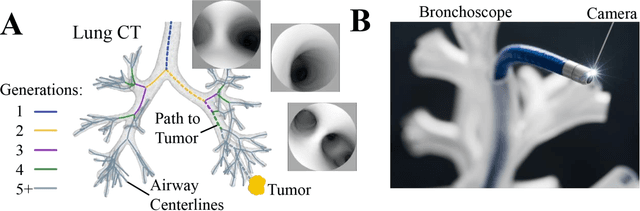

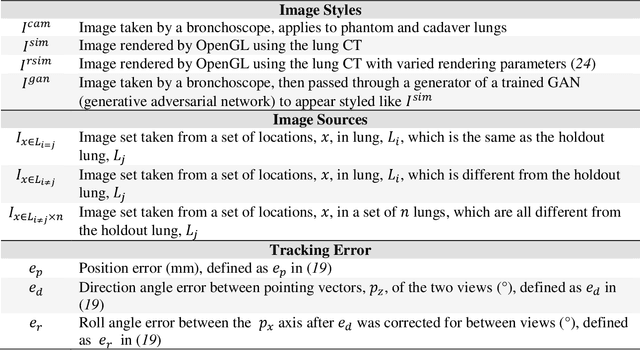

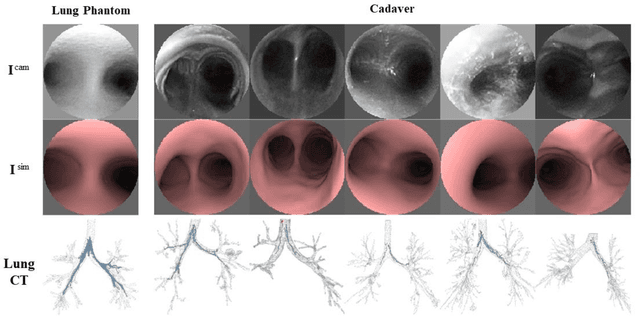

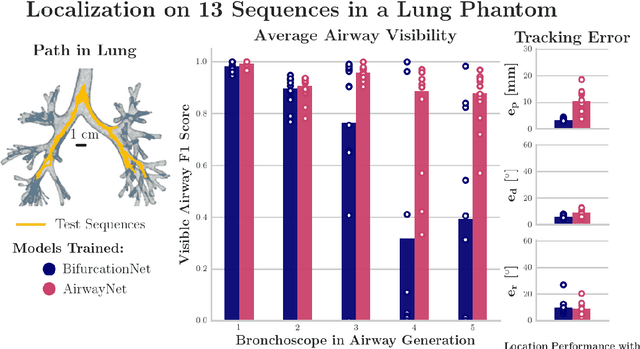

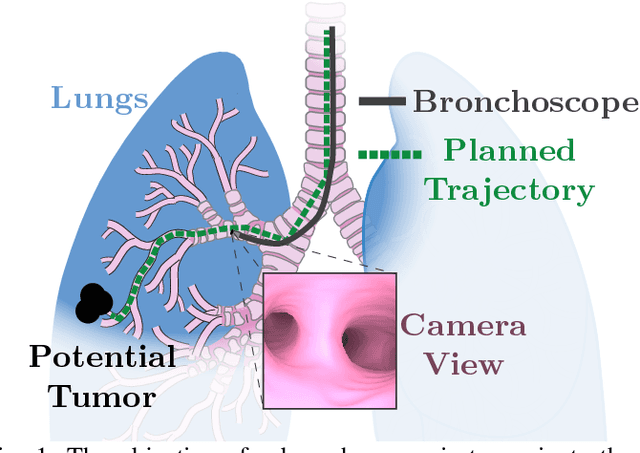

Abstract: Lung cancer is the leading cause of cancer-related death worldwide, and early diagnosis is critical to improving patient outcomes. To diagnose cancer, a highly trained pulmonologist must navigate a flexible bronchoscope deep into the branched structure of the lung for biopsy. The biopsy fails to sample the target tissue in 26-33% of cases, largely because of poor registration with the preoperative CT map. To improve intraoperative registration, we develop two deep learning approaches, called AirwayNet and BifurcationNet, that localize the bronchoscope in the preoperative CT map in real time based on the bronchoscopic video. The networks are trained entirely on simulated images derived from the patient-specific CT. When evaluated on recorded bronchoscopy videos in a phantom lung, AirwayNet outperforms other deep learning localization algorithms with an area under the precision-recall curve of 0.97. Using AirwayNet, we demonstrate autonomous driving in the phantom lung based on video feedback alone. The robot reaches four targets in the left and right lungs in 95% of the trials. On recorded videos in eight human cadaver lungs, AirwayNet achieves areas under the precision-recall curve ranging from 0.82 to 0.997.
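The results above are reported as the area under the precision-recall curve. The abstract does not say which estimator was used. One standard choice is average precision: rank the candidates by confidence, then average the precision at each rank where a true positive appears. The sketch below assumes each candidate, for example a predicted airway in a video frame, carries a confidence score and a binary ground-truth label. The input format is hypothetical.

```python
def average_precision(scores, labels):
    """Step-wise estimate of the area under the precision-recall curve.

    scores: confidence per candidate (higher means more likely positive)
    labels: 1 if the candidate is a true match, else 0 (hypothetical format)
    """
    total_pos = sum(labels)
    if total_pos == 0:
        raise ValueError("need at least one positive label")
    # Visit candidates from most to least confident.
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = 0
    ap = 0.0
    for rank, i in enumerate(order, start=1):
        if labels[i]:
            tp += 1
            ap += tp / rank  # precision at the recall level reached here
    return ap / total_pos

perfect = average_precision([0.9, 0.8, 0.7, 0.1], [1, 1, 0, 0])
imperfect = average_precision([0.9, 0.8, 0.7], [0, 1, 1])
```

A perfect ranking scores 1.0. Ranking a false positive first lowers the score, which here drops to 7/12. Libraries such as scikit-learn provide equivalent, well-tested implementations.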

Deep Learning for Localization in the Lung

Mar 25, 2019

Abstract: Lung cancer is the leading cause of cancer-related death worldwide, and early diagnosis is critical to improving patient outcomes. To diagnose cancer, a highly trained pulmonologist must navigate a flexible bronchoscope deep into the branched structure of the lung for biopsy. The biopsy fails to sample the target tissue in 26-33% of cases, largely because of poor registration with the preoperative CT map. We developed two deep learning approaches to localize the bronchoscope in the preoperative CT map in real time and tested the algorithms across 13 trajectories in a lung phantom and 68 trajectories in 11 human cadaver lungs. In the lung phantom, performance reaches 95% precision and recall of visible airways and 3 mm average position error. On a successful cadaver lung sequence, the algorithms trained on simulation alone achieve 77%-94% precision and recall of visible airways and 4-6 mm average position error. We also compare the effect of GAN-stylizing the training images and examine aggregate statistics over the entire set of trajectories.
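This abstract reports two metrics: precision and recall of visible airways, and average position error. The sketch below shows one plausible way to compute them. It assumes that each video frame yields a set of predicted airway identifiers alongside the set that is actually visible, and that tip positions are 3-D points in millimetres. The airway identifiers and the pooling over frames are assumptions, not the authors' stated protocol.

```python
import math

def airway_precision_recall(predicted, visible):
    """Precision and recall of airway labels, pooled over all frames.

    predicted, visible: per-frame sets of (hypothetical) airway identifiers.
    """
    tp = fp = fn = 0
    for pred, truth in zip(predicted, visible):
        tp += len(pred & truth)   # airways correctly reported as visible
        fp += len(pred - truth)   # reported but not actually visible
        fn += len(truth - pred)   # visible but missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

def mean_position_error(estimated, ground_truth):
    """Average Euclidean distance (mm) between estimated and true tip positions."""
    dists = [math.dist(p, q) for p, q in zip(estimated, ground_truth)]
    return sum(dists) / len(dists)

p, r = airway_precision_recall([{"A", "B"}, {"C"}], [{"A"}, {"C", "D"}])
err = mean_position_error([(0, 0, 0), (3, 4, 0)], [(0, 0, 3), (0, 0, 0)])
```

In this toy example, one false positive and one miss give precision and recall of 2/3 each. Position errors of 3 mm and 5 mm average to 4 mm.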

OffsetNet: Deep Learning for Localization in the Lung using Rendered Images

Sep 15, 2018

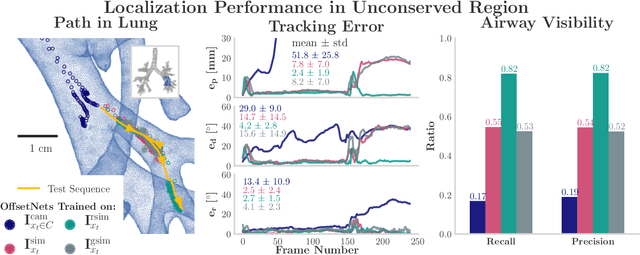

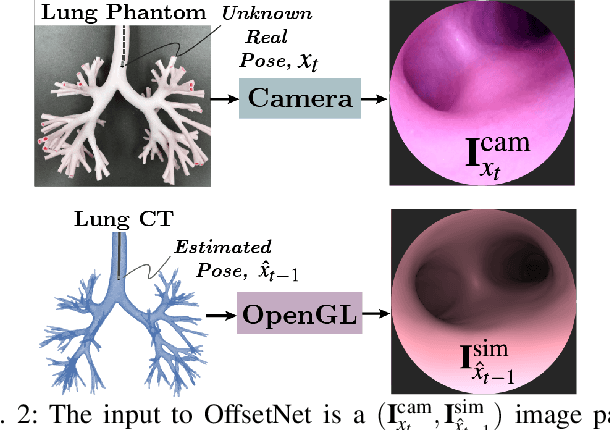

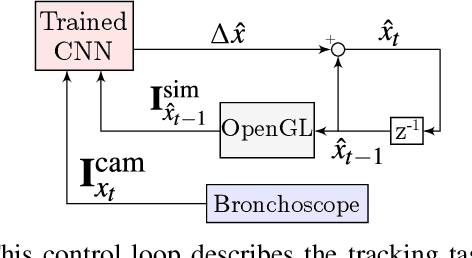

Abstract: Navigating surgical tools in the dynamic and tortuous anatomy of the lung's airways requires accurate, real-time localization of the tools with respect to the preoperative scan of the anatomy. Such localization can inform human operators or enable closed-loop control by autonomous agents, which would require accuracy not yet reported in the literature. In this paper, we introduce a deep learning architecture, called OffsetNet, to accurately localize a bronchoscope in the lung in real time. After training on only 30 minutes of recorded camera images in conserved regions of a lung phantom, OffsetNet tracks the bronchoscope's motion on a held-out recording through these same regions at an update rate of 47 Hz and an average position error of 1.4 mm. Because this model performs poorly in less conserved regions, we augment the training dataset with simulated images from these regions. To bridge the gap between camera and simulated domains, we implement domain randomization and a generative adversarial network (GAN). After training on simulated images, OffsetNet tracks the bronchoscope's motion in less conserved regions at an average position error of 2.4 mm, which meets conservative thresholds required for successful tracking.
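Domain randomization applies random appearance changes to simulated images so that a network trained on them does not overfit to the renderer's look. The abstract does not list the perturbations used. The sketch below assumes simple photometric changes to a grayscale rendered frame: brightness gain and offset, gamma, and additive Gaussian noise. The parameter ranges are hypothetical placeholders, and the GAN-based alternative is not shown.

```python
import random

def randomize_domain(image, rng):
    """Apply random photometric perturbations to a rendered grayscale image.

    image: list of rows of pixel intensities in [0, 1].
    rng: a random.Random instance, so augmentation is reproducible.
    Returns a new image; the input is left unchanged.
    """
    # Hypothetical ranges, drawn once per image.
    gain = rng.uniform(0.6, 1.4)        # brightness scale
    bias = rng.uniform(-0.1, 0.1)       # brightness offset
    gamma = rng.uniform(0.7, 1.5)       # nonlinear tone change
    noise_std = rng.uniform(0.0, 0.05)  # sensor-like noise level
    out = []
    for row in image:
        new_row = []
        for px in row:
            v = px ** gamma * gain + bias + rng.gauss(0.0, noise_std)
            new_row.append(min(1.0, max(0.0, v)))  # clip back to [0, 1]
        out.append(new_row)
    return out

rendered = [[0.0, 0.5, 1.0], [0.25, 0.75, 0.5]]
augmented = randomize_domain(rendered, random.Random(0))
```

Drawing the parameters once per image keeps each augmented frame internally consistent, like a single camera exposure, while still varying appearance across the training set.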
