Autonomous Driving in the Lung using Deep Learning for Localization

Jul 16, 2019

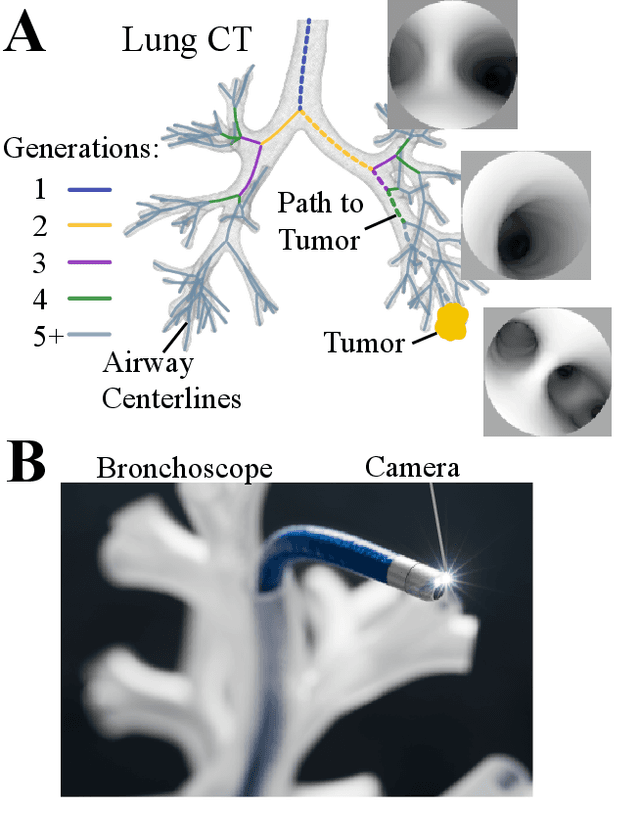

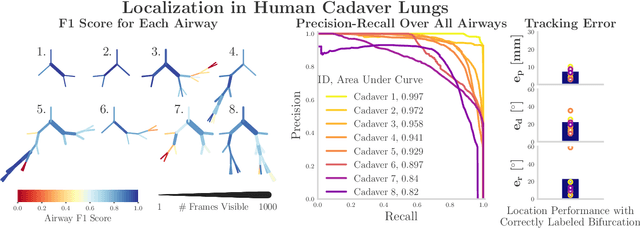

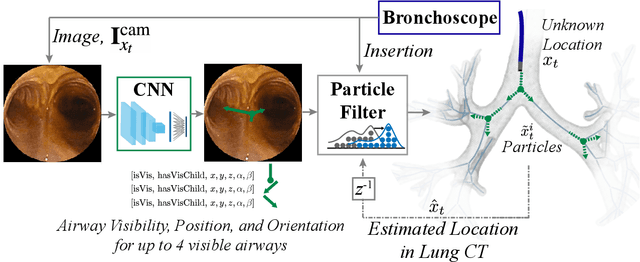

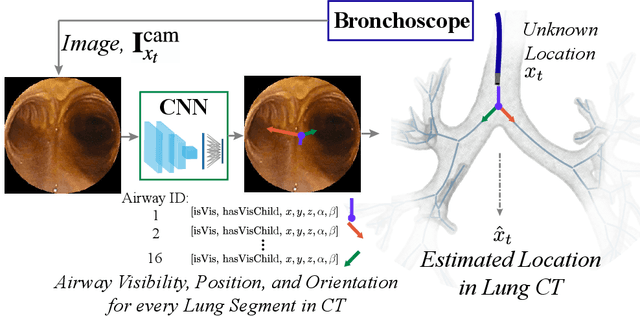

Lung cancer is the leading cause of cancer-related death worldwide, and early diagnosis is critical to improving patient outcomes. To diagnose cancer, a highly trained pulmonologist must navigate a flexible bronchoscope deep into the branched structure of the lung to take a biopsy. The biopsy fails to sample the target tissue in 26-33% of cases, largely because of poor registration with the preoperative CT map. To improve intraoperative registration, we develop two deep learning approaches, AirwayNet and BifurcationNet, that localize the bronchoscope in the preoperative CT map in real time from the bronchoscopic video. The networks are trained entirely on simulated images derived from the patient-specific CT. When evaluated on recorded bronchoscopy videos in a phantom lung, AirwayNet outperforms other deep learning localization algorithms, with an area under the precision-recall curve of 0.97. Using AirwayNet, we demonstrate autonomous driving in the phantom lung based on video feedback alone: the robot reaches four targets in the left and right lungs in 95% of the trials. On recorded videos in eight human cadaver lungs, AirwayNet achieves areas under the precision-recall curve ranging from 0.82 to 0.997.