OffsetNet: Deep Learning for Localization in the Lung using Rendered Images


Sep 15, 2018

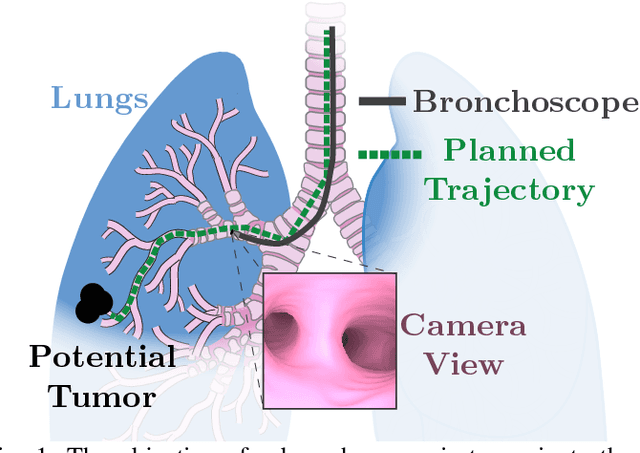

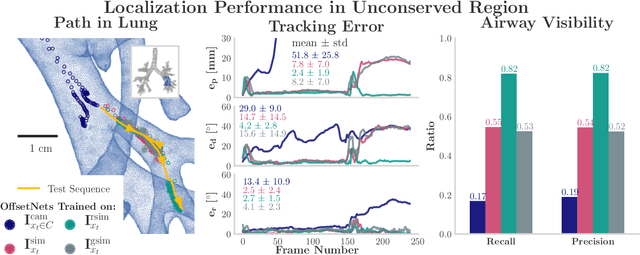

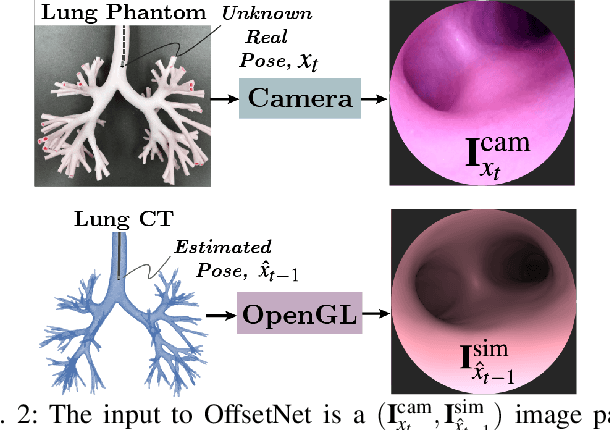

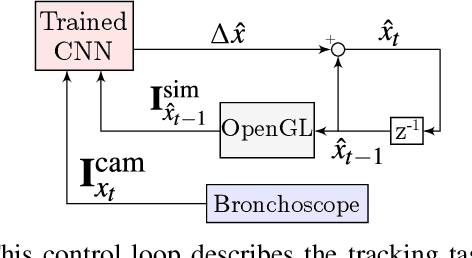

Navigating surgical tools in the dynamic and tortuous anatomy of the lung's airways requires accurate, real-time localization of the tools with respect to the preoperative scan of the anatomy. Such localization can inform human operators or enable closed-loop control by autonomous agents, which would require accuracy not yet reported in the literature. In this paper, we introduce a deep learning architecture, called OffsetNet, to accurately localize a bronchoscope in the lung in real time. After training on only 30 minutes of recorded camera images in conserved regions of a lung phantom, OffsetNet tracks the bronchoscope's motion on a held-out recording through these same regions at an update rate of 47 Hz with an average position error of 1.4 mm. Because this model performs poorly in less conserved regions, we augment the training dataset with simulated images from these regions. To bridge the gap between camera and simulated domains, we implement domain randomization and a generative adversarial network (GAN). After training on simulated images, OffsetNet tracks the bronchoscope's motion in less conserved regions with an average position error of 2.4 mm, which meets conservative thresholds required for successful tracking.
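The abstract reports tracking quality as an average position error but does not say how that figure is computed. The sketch below shows one plausible reading, assuming the error is the mean Euclidean distance between estimated and ground-truth bronchoscope positions, paired frame by frame and expressed in millimetres. The function name `mean_position_error` and the sample coordinates are hypothetical and do not come from the paper or its code.

```python
import math


def mean_position_error(estimated, ground_truth):
    """Mean Euclidean distance between paired 3D positions.

    Assumption: both sequences hold (x, y, z) tuples in the same units
    (e.g. millimetres) and are aligned frame by frame.
    """
    if len(estimated) != len(ground_truth):
        raise ValueError("trajectories must have the same number of frames")
    if not estimated:
        raise ValueError("trajectories must not be empty")
    # math.dist returns the Euclidean distance between two points.
    distances = [math.dist(e, g) for e, g in zip(estimated, ground_truth)]
    return sum(distances) / len(distances)


# Illustrative values only, not data from the paper.
estimated = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
ground_truth = [(0.0, 0.0, 3.0), (1.0, 4.0, 0.0)]
print(mean_position_error(estimated, ground_truth))
```

Under this assumption, a result of 1.4 would mean the estimated position lies on average 1.4 mm from the reference position across the recording.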
