Daniela Ushizima

Ascribe New Dimensions to Scientific Data Visualization with VR

Apr 18, 2025

Abstract:For over half a century, the computer mouse has been the primary tool for interacting with digital data, yet it remains a limiting factor in exploring complex, multi-scale scientific images. Traditional 2D visualization methods hinder intuitive analysis of inherently 3D structures. Virtual Reality (VR) offers a transformative alternative, providing immersive, interactive environments that enhance data comprehension. This article introduces ASCRIBE-VR, a VR platform of Autonomous Solutions for Computational Research with Immersive Browsing & Exploration, which integrates AI-driven algorithms with scientific images. ASCRIBE-VR enables multimodal analysis, structural assessments, and immersive visualization, supporting scientific visualization of advanced datasets such as X-ray CT, Magnetic Resonance, and synthetic 3D imaging. Our VR tools, compatible with Meta Quest, can consume the output of our AI-based segmentation and iterative feedback processes to enable seamless exploration of large-scale 3D images. By merging AI-generated results with VR visualization, ASCRIBE-VR enhances scientific discovery, bridging the gap between computational analysis and human intuition in materials research, connecting human-in-the-loop with digital twins.

A Review on Generative AI For Text-To-Image and Image-To-Image Generation and Implications To Scientific Images

Feb 28, 2025

Abstract:This review surveys the state-of-the-art in text-to-image and image-to-image generation within the scope of generative AI. We provide a comparative analysis of three prominent architectures: Variational Autoencoders, Generative Adversarial Networks and Diffusion Models. For each, we elucidate core concepts, architectural innovations, and practical strengths and limitations, particularly for scientific image understanding. Finally, we discuss critical open challenges and potential future research directions in this rapidly evolving field.

An Ensemble Approach for Brain Tumor Segmentation and Synthesis

Nov 26, 2024

Abstract:The integration of machine learning in magnetic resonance imaging (MRI), specifically in neuroimaging, is proving to be incredibly effective, leading to better diagnostic accuracy, accelerated image analysis, and data-driven insights, which can potentially transform patient care. Deep learning models utilize multiple layers of processing to capture intricate details of complex data, which can then be used on a variety of tasks, including brain tumor classification, segmentation, image synthesis, and registration. Previous research demonstrates high accuracy in tumor segmentation using various model architectures, including nn-UNet and Swin-UNet. U-Mamba, which uses state space modeling, also achieves high accuracy in medical image segmentation. To leverage these models, we propose a deep learning framework that ensembles these state-of-the-art architectures to achieve accurate segmentation and produce finely synthesized images.
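The abstract above does not say how the individual models are combined. As a minimal sketch of one common way to ensemble segmentation models, the snippet below averages per-pixel foreground probabilities from several models and thresholds the mean. The function name `ensemble_mask`, the equal weighting, and the 0.5 threshold are all illustrative assumptions, not the authors' method.

```python
from typing import List, Sequence

ProbMap = Sequence[Sequence[float]]  # 2D map of per-pixel foreground probabilities


def ensemble_mask(prob_maps: Sequence[ProbMap], threshold: float = 0.5) -> List[List[int]]:
    """Average probability maps from several models and binarize the mean.

    Assumption: every map has the same shape and each model is weighted equally.
    """
    if not prob_maps:
        raise ValueError("at least one probability map is required")
    n_models = len(prob_maps)
    rows, cols = len(prob_maps[0]), len(prob_maps[0][0])
    mask = []
    for r in range(rows):
        row = []
        for c in range(cols):
            mean_prob = sum(m[r][c] for m in prob_maps) / n_models
            row.append(1 if mean_prob >= threshold else 0)
        mask.append(row)
    return mask


# Hypothetical outputs of three models on a 1x2 image.
model_a = [[0.9, 0.2]]
model_b = [[0.6, 0.4]]
model_c = [[0.3, 0.3]]
combined = ensemble_mask([model_a, model_b, model_c])
```

Averaging probabilities rather than voting on hard labels keeps each model's confidence in play; weighted averaging or majority voting are equally plausible alternatives.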

Lithium Metal Battery Quality Control via Transformer-CNN Segmentation

Feb 09, 2023

Abstract:Lithium metal batteries (LMBs) have the potential to be the next-generation battery system because of their high theoretical energy density. However, defects known as dendrites are formed by heterogeneous lithium (Li) plating, which hinders the development and utilization of LMBs. Non-destructive techniques to observe the dendrite morphology often use computerized X-ray tomography (XCT) imaging to provide cross-sectional views. To retrieve three-dimensional structures inside a battery, image segmentation becomes essential to quantitatively analyze XCT images. This work proposes a new binary semantic segmentation approach using a transformer-based neural network (T-Net) model capable of segmenting out dendrites from XCT data. In addition, we compare the performance of the proposed T-Net with three other algorithms, U-Net, Y-Net, and E-Net, consisting of an Ensemble Network model for XCT analysis. Our results show the advantages of using T-Net in terms of object metrics, such as mean Intersection over Union (mIoU) and mean Dice Similarity Coefficient (mDSC), as well as qualitatively through several comparative visualizations.
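For readers unfamiliar with the two metrics named above, the following is a minimal sketch of Intersection over Union and the Dice Similarity Coefficient for binary masks. The helper names are hypothetical, and averaging the scores over a set of slices is only one of several conventions; the abstract does not state how the paper computes its means.

```python
from typing import Callable, Sequence, Tuple

Mask = Sequence[Sequence[int]]  # 2D binary mask: 1 = dendrite, 0 = background


def _counts(pred: Mask, truth: Mask) -> Tuple[int, int, int]:
    """Return (intersection, predicted foreground, true foreground) pixel counts."""
    inter = pred_sum = truth_sum = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            inter += 1 if (p and t) else 0
            pred_sum += 1 if p else 0
            truth_sum += 1 if t else 0
    return inter, pred_sum, truth_sum


def iou(pred: Mask, truth: Mask) -> float:
    """IoU = |A and B| / |A or B|; defined here as 1.0 when both masks are empty."""
    inter, pred_sum, truth_sum = _counts(pred, truth)
    union = pred_sum + truth_sum - inter
    return 1.0 if union == 0 else inter / union


def dice(pred: Mask, truth: Mask) -> float:
    """Dice = 2|A and B| / (|A| + |B|); defined here as 1.0 when both masks are empty."""
    inter, pred_sum, truth_sum = _counts(pred, truth)
    total = pred_sum + truth_sum
    return 1.0 if total == 0 else 2 * inter / total


def mean_metric(metric: Callable[[Mask, Mask], float],
                preds: Sequence[Mask], truths: Sequence[Mask]) -> float:
    """Average a per-image metric over paired predictions and ground truths."""
    scores = [metric(p, t) for p, t in zip(preds, truths)]
    return sum(scores) / len(scores)


# Toy 2x3 example: one overlapping pixel, two predicted, two true.
pred = [[1, 1, 0], [0, 0, 0]]
truth = [[1, 0, 0], [0, 1, 0]]
example_iou = iou(pred, truth)    # 1 / 3
example_dice = dice(pred, truth)  # 2 * 1 / 4 = 0.5
```

For a single pair of masks the two scores are related by Dice = 2·IoU / (1 + IoU), so they rank individual predictions identically even though their numeric values differ.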

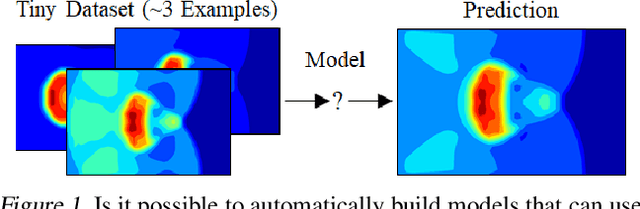

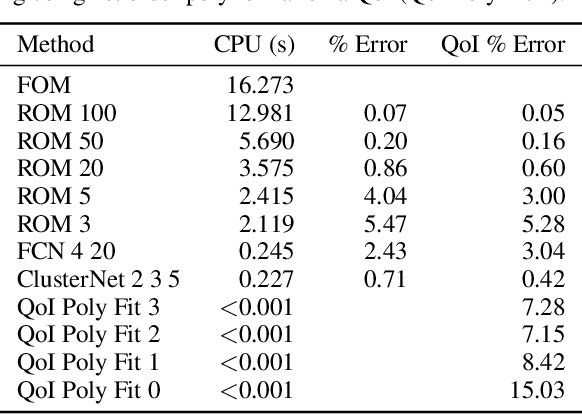

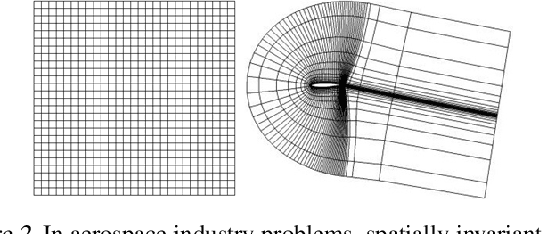

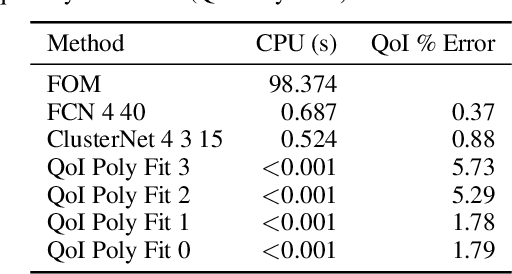

Neural Networks Predict Fluid Dynamics Solutions from Tiny Datasets

Feb 05, 2019

Abstract:In computational fluid dynamics, it often takes days or weeks to simulate the aerodynamic behavior of designs such as jets, spacecraft, or gas turbine engines. One of the biggest open problems in the field is how to simulate such systems much more quickly with sufficient accuracy. Many approaches have been tried; some involve models of the underlying physics, while others are model-free and make predictions based only on existing simulation data. However, all previous approaches have severe shortcomings or limitations. We present a novel approach: we reformulate the prediction problem to effectively increase the size of the otherwise tiny datasets, and we introduce a new neural network architecture (called a cluster network) with an inductive bias well-suited to fluid dynamics problems. Compared to state-of-the-art model-based approximations, we show that our approach is nearly as accurate, an order of magnitude faster and vastly easier to apply. Moreover, our method outperforms previous model-free approaches.
