Daniel Ostler

MITI: SLAM Benchmark for Laparoscopic Surgery

Feb 23, 2022

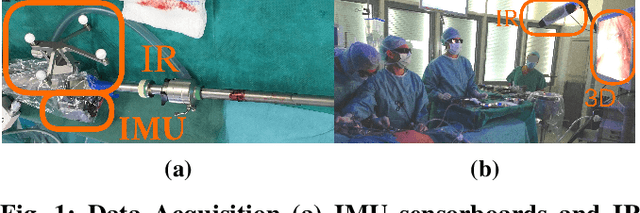

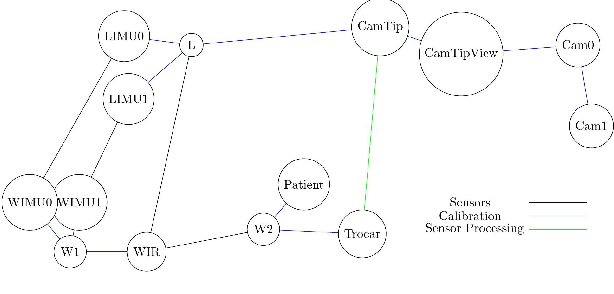

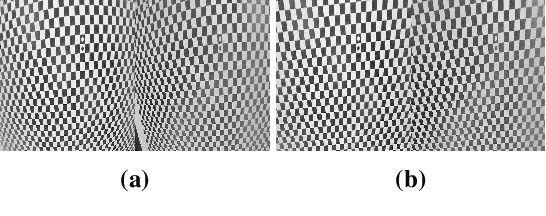

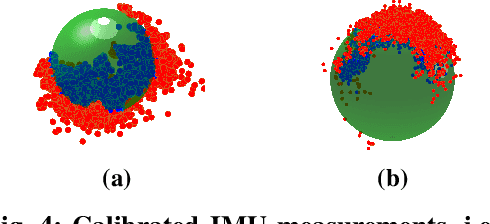

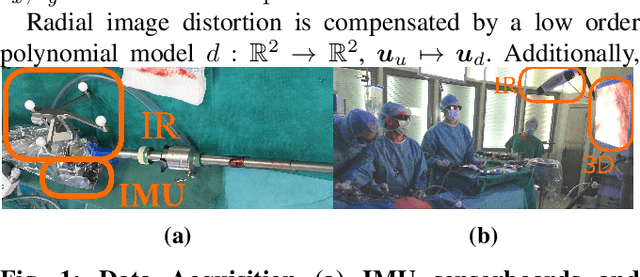

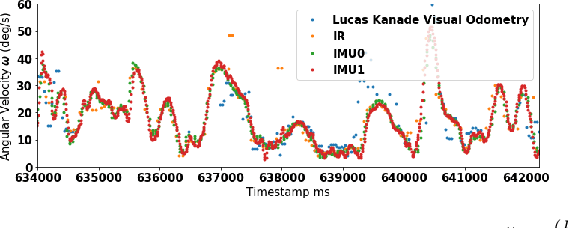

Abstract: We propose a new benchmark for evaluating stereoscopic visual-inertial computer vision algorithms (SLAM, SfM, 3D reconstruction, visual-inertial odometry) for minimally invasive surgical (MIS) interventions in the abdomen. Our MITI dataset, available at https://mediatum.ub.tum.de/1621941, provides all the necessary data through a complete recording of a handheld surgical intervention at Research Hospital Rechts der Isar of TUM. It contains multimodal sensor information from an IMU, stereoscopic video, and infrared (IR) tracking as ground truth for evaluation. Furthermore, calibration for the stereoscope, accelerometer, and magnetometer, the rigid transformations in the sensor setup, and the time offsets are available. We deliberately chose an intervention that contains very little cutting and tissue deformation and shows a full scan of the abdomen with a handheld camera, which makes it well suited for testing SLAM algorithms. To promote the progress of visual-inertial algorithms designed for MIS applications, we hope that our clinical training dataset helps researchers improve their algorithms.

Constrained Visual-Inertial Localization With Application And Benchmark in Laparoscopic Surgery

Feb 22, 2022

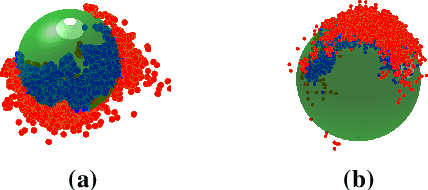

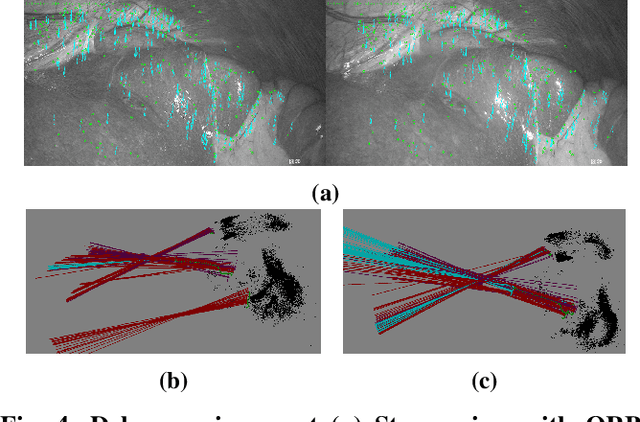

Abstract: We propose a novel method to tackle the visual-inertial localization problem for constrained camera movements. We use residuals from the different modalities to jointly optimize a global cost function. The residuals emerge from IMU measurements, stereoscopic feature points, and constraints on possible solutions in SE(3). In settings where dynamic disturbances are frequent, the residuals reduce the complexity of the problem and make localization feasible. We verify the advantages of our method in a suitable medical use case and produce a dataset capturing a minimally invasive surgery in the abdomen. Our novel clinical dataset MITI is comparable to state-of-the-art evaluation datasets, contains calibration and synchronization data, and is available at https://mediatum.ub.tum.de/1621941.
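
As a rough illustration of what jointly optimizing residuals from several modalities can look like, a generic weighted least-squares cost is sketched below. This is not the formulation from the paper: the symbols $X$ (the estimated states, such as camera poses in SE(3)), $r_{\mathrm{IMU},k}$, $r_{\mathrm{vis},j}$, $r_{\mathrm{con},i}$ (the three residual types named in the abstract), and the covariance weights $\Sigma$ are all assumed notation.

$$
\hat{X} = \arg\min_{X} \left( \sum_{k} \left\| r_{\mathrm{IMU},k}(X) \right\|^{2}_{\Sigma_{\mathrm{IMU},k}} + \sum_{j} \left\| r_{\mathrm{vis},j}(X) \right\|^{2}_{\Sigma_{\mathrm{vis},j}} + \sum_{i} \left\| r_{\mathrm{con},i}(X) \right\|^{2}_{\Sigma_{\mathrm{con},i}} \right)
$$

Here $\| r \|^{2}_{\Sigma} = r^{\top} \Sigma^{-1} r$ denotes the squared Mahalanobis norm, so each residual is weighted by the inverse of its assumed covariance.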

OperA: Attention-Regularized Transformers for Surgical Phase Recognition

Mar 05, 2021

Abstract: In this paper, we introduce OperA, a transformer-based model that accurately predicts surgical phases from long video sequences. A novel attention regularization loss encourages the model to focus on high-quality frames during training. Moreover, the attention weights are used to identify characteristic high-attention frames for each surgical phase, which could further be used for surgery summarization. OperA is thoroughly evaluated on two datasets of laparoscopic cholecystectomy videos, outperforming various state-of-the-art temporal refinement approaches.

The TUM LapChole dataset for the M2CAI 2016 workflow challenge

Aug 31, 2017

Abstract: In this technical report, we present our collected dataset of laparoscopic cholecystectomies (LapChole). Laparoscopic videos of a total of 20 surgeries were recorded and annotated with surgical phase labels; 15 of these were randomly assigned as training data, and the remaining 5 were used as test data. This dataset was later included as part of the M2CAI 2016 workflow detection challenge during MICCAI 2016 in Athens.
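
A minimal sketch of a random 15/5 split over 20 videos follows. The abstract does not describe the actual procedure, so the video identifiers, the seed, and the helper name `split_videos` are hypothetical and serve only to illustrate the idea.

```python
import random


def split_videos(video_ids, n_train, seed):
    """Randomly split video IDs into disjoint training and test sets."""
    rng = random.Random(seed)      # fixed seed keeps the split reproducible
    shuffled = list(video_ids)     # copy so the caller's list is untouched
    rng.shuffle(shuffled)
    return sorted(shuffled[:n_train]), sorted(shuffled[n_train:])


# Hypothetical identifiers; the real dataset may name its videos differently.
video_ids = [f"video_{i:02d}" for i in range(1, 21)]
train_ids, test_ids = split_videos(video_ids, n_train=15, seed=0)
print(len(train_ids), len(test_ids))
```

Fixing the split once, rather than reshuffling on every run, keeps results comparable across methods evaluated on the same data.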
