Daniel Kent

Michigan State University

Optical Lens Attack on Monocular Depth Estimation for Autonomous Driving

Oct 31, 2024

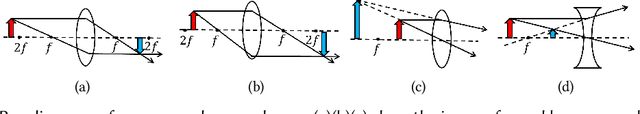

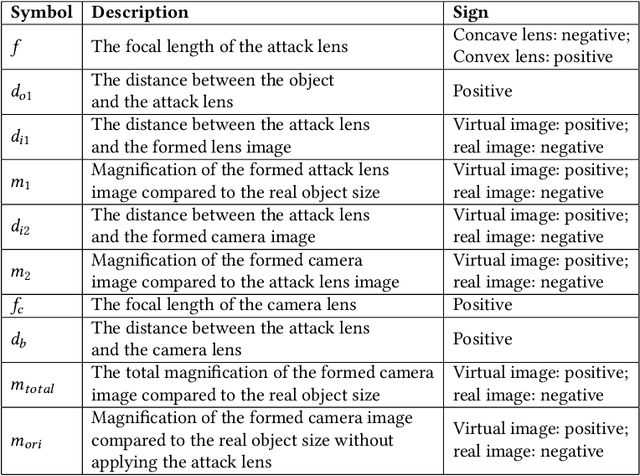

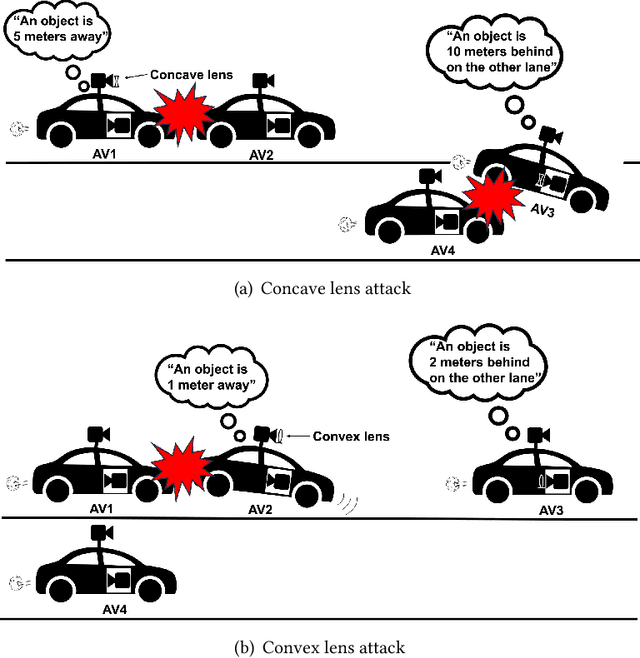

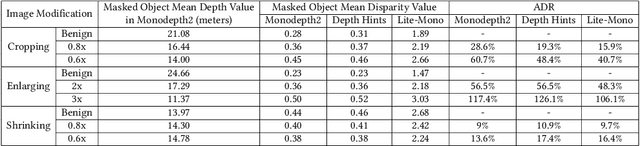

Abstract: Monocular Depth Estimation (MDE) is a pivotal component of vision-based Autonomous Driving (AD) systems, enabling vehicles to estimate the depth of surrounding objects using a single camera image. This estimation guides essential driving decisions, such as braking before an obstacle or changing lanes to avoid collisions. In this paper, we explore vulnerabilities of MDE algorithms in AD systems, presenting LensAttack, a novel physical attack that strategically places optical lenses on the camera of an autonomous vehicle to manipulate the perceived object depths. LensAttack encompasses two attack formats: concave lens attack and convex lens attack, each utilizing different optical lenses to induce false depth perception. We first develop a mathematical model that outlines the parameters of the attack, followed by simulations and real-world evaluations to assess its efficacy on state-of-the-art MDE models. Additionally, we adopt an attack optimization method to further enhance the attack success rate by optimizing the attack focal length. To better evaluate the implications of LensAttack on AD, we conduct comprehensive end-to-end system simulations using the CARLA platform. The results reveal that LensAttack can significantly disrupt the depth estimation processes in AD systems, posing a serious threat to their reliability and safety. Finally, we discuss some potential defense methods to mitigate the effects of the proposed attack.

Optical Lens Attack on Deep Learning Based Monocular Depth Estimation

Sep 25, 2024

Abstract: Monocular Depth Estimation (MDE) plays a crucial role in vision-based Autonomous Driving (AD) systems. It utilizes a single-camera image to determine the depth of objects, facilitating driving decisions such as braking a few meters in front of a detected obstacle or changing lanes to avoid collision. In this paper, we investigate the security risks associated with monocular vision-based depth estimation algorithms utilized by AD systems. By exploiting the vulnerabilities of MDE and the principles of optical lenses, we introduce LensAttack, a physical attack that involves strategically placing optical lenses on the camera of an autonomous vehicle to manipulate the perceived object depths. LensAttack encompasses two attack formats: concave lens attack and convex lens attack, each utilizing different optical lenses to induce false depth perception. We begin by constructing a mathematical model of our attack, incorporating various attack parameters. Subsequently, we simulate the attack and evaluate its real-world performance in driving scenarios to demonstrate its effect on state-of-the-art MDE models. The results highlight the significant impact of LensAttack on the accuracy of depth estimation in AD systems.

Using Quantum Solved Deep Boltzmann Machines to Increase the Data Efficiency of RL Agents

Aug 30, 2024

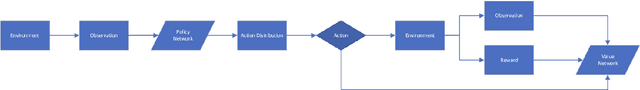

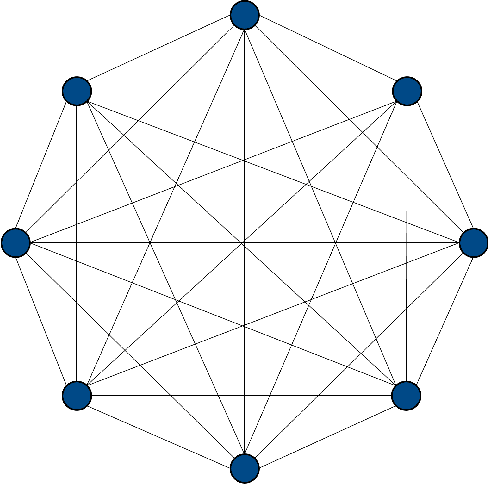

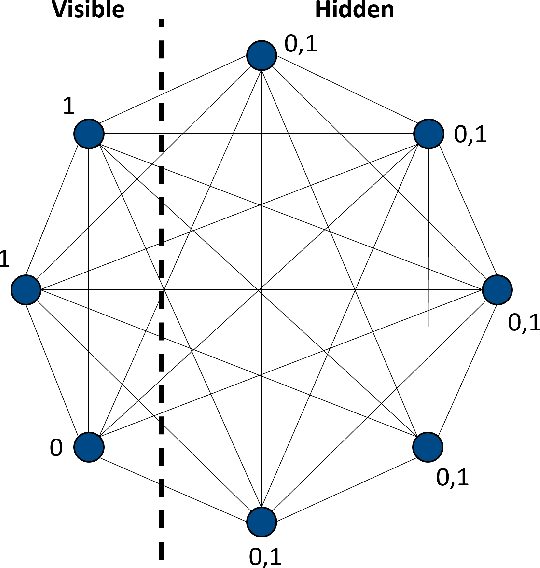

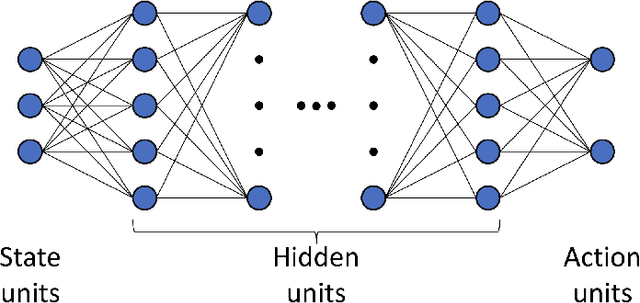

Abstract: Deep Learning algorithms, such as those used in Reinforcement Learning, often require large quantities of data to train effectively. In most cases, the availability of data is not a significant issue. However, for some contexts, such as in autonomous cyber defence, we require data-efficient methods. Recently, Quantum Machine Learning and Boltzmann Machines have been proposed as solutions to this challenge. In this work we build upon existing work to extend the use of Deep Boltzmann Machines to the cutting-edge algorithm Proximal Policy Optimisation in a Reinforcement Learning cyber defence environment. We show that this approach, when solved using a D-WAVE quantum annealer, can lead to a two-fold increase in data efficiency. We therefore expect it to be used by the machine learning and quantum communities who are hoping to capitalise on data-efficient Reinforcement Learning methods.

Performance of Three Slim Variants of The Long Short-Term Memory Layer

Jan 02, 2019

Abstract: The Long Short-Term Memory (LSTM) layer is an important advancement in the field of neural networks and machine learning, allowing for effective training and impressive inference performance. LSTM-based neural networks have been successfully employed in various applications such as speech processing and language translation. The LSTM layer can be simplified by removing certain components, potentially speeding up training and runtime with limited change in performance. In particular, the recently introduced variants, called SLIM LSTMs, have shown success in initial experiments to support this view. Here, we perform a computational analysis of the validation accuracy of a convolutional plus recurrent neural network architecture, comparing the standard LSTM layer with three SLIM LSTM layers. We have found that some realizations of the SLIM LSTM layers can potentially perform as well as the standard LSTM layer for our considered architecture.
