Daniel Gibert

Assessing the Impact of Packing on Machine Learning-Based Malware Detection and Classification Systems

Oct 31, 2024

Abstract: The proliferation of malware, particularly through the use of packing, presents a significant challenge to static analysis and signature-based malware detection techniques. The application of packing to the original executable code renders extracting meaningful features and signatures challenging. To deal with the increasing amount of malware in the wild, researchers and anti-malware companies started harnessing machine learning capabilities with very promising results. However, little is known about the effects of packing on static machine learning-based malware detection and classification systems. This work addresses this gap by investigating the impact of packing on the performance of static machine learning-based models used for malware detection and classification, with a particular focus on those using visualisation techniques. To this end, we present a comprehensive analysis of various packing techniques and their effects on the performance of machine learning-based detectors and classifiers. Our findings highlight the limitations of current static detection and classification systems and underscore the need to be proactive to effectively counteract the evolving tactics of malware authors.

Certified Adversarial Robustness of Machine Learning-based Malware Detectors via (De)Randomized Smoothing

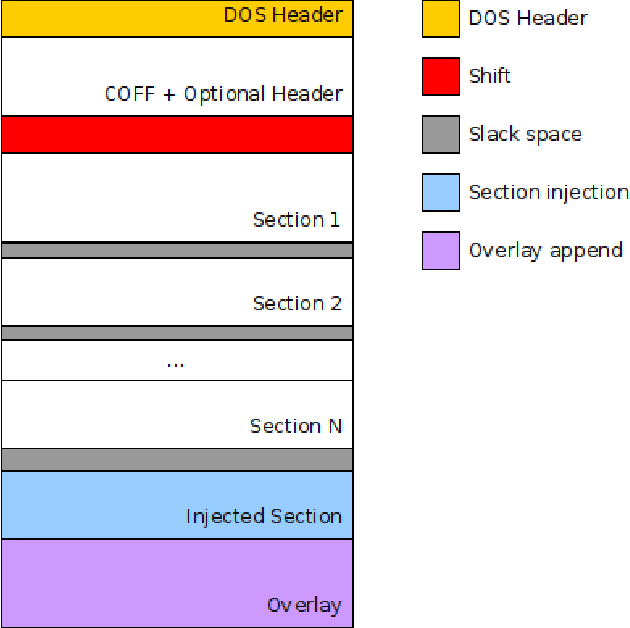

May 01, 2024

Abstract: Deep learning-based malware detection systems are vulnerable to adversarial EXEmples - carefully-crafted malicious programs that evade detection with minimal perturbation. As such, the community is dedicating effort to develop mechanisms to defend against adversarial EXEmples. However, current randomized smoothing-based defenses are still vulnerable to attacks that inject blocks of adversarial content. In this paper, we introduce a certifiable defense against patch attacks that guarantees, for a given executable and an adversarial patch size, that no adversarial EXEmple exists. Our method is inspired by (de)randomized smoothing, which provides deterministic robustness certificates. During training, a base classifier is trained using subsets of contiguous bytes. At inference time, our defense splits the executable into non-overlapping chunks, classifies each chunk independently, and computes the final prediction through majority voting to minimize the influence of injected content. Furthermore, we introduce a preprocessing step that fixes the size of the sections and headers to a multiple of the chunk size. As a consequence, the injected content is confined to an integer number of chunks without tampering with the other chunks containing the real bytes of the input examples, allowing us to extend our certified robustness guarantees to content insertion attacks. We perform an extensive ablation study, comparing our defense with randomized smoothing-based defenses against a plethora of content manipulation attacks and neural network architectures. Results show that our method exhibits unmatched robustness against strong content-insertion attacks, outperforming randomized smoothing-based defenses in the literature.
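The chunk-and-vote inference step described in this abstract can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function names, the zero-byte padding, the tie-breaking rule, and the toy base classifier are all assumptions made for illustration. The `is_certified` check uses a simplified, conservative condition (the vote margin must exceed twice the number of chunks an attacker can influence), which may differ from the certificate derived in the paper.

```python
from collections import Counter
from typing import Callable, Dict, List, Tuple


def pad_to_multiple(data: bytes, chunk_size: int, pad_byte: int = 0) -> bytes:
    """Pad data so its length is a multiple of chunk_size (padding value is an assumption)."""
    remainder = len(data) % chunk_size
    if remainder == 0:
        return data
    return data + bytes([pad_byte]) * (chunk_size - remainder)


def split_chunks(data: bytes, chunk_size: int) -> List[bytes]:
    """Split data into consecutive, non-overlapping chunks."""
    return [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]


def majority_vote(
    data: bytes, chunk_size: int, base_classifier: Callable[[bytes], int]
) -> Tuple[int, Dict[int, int]]:
    """Classify each chunk independently and return (winning label, vote counts)."""
    chunks = split_chunks(pad_to_multiple(data, chunk_size), chunk_size)
    votes = Counter(base_classifier(chunk) for chunk in chunks)
    # Deterministic tie-break (an assumption): highest count, then smallest label.
    label = sorted(votes.items(), key=lambda kv: (-kv[1], kv[0]))[0][0]
    return label, dict(votes)


def is_certified(votes: Dict[int, int], max_changed_chunks: int) -> bool:
    """Conservative check: the winner survives if at most max_changed_chunks votes flip."""
    ranked = sorted(votes.values(), reverse=True)
    runner_up = ranked[1] if len(ranked) > 1 else 0
    return ranked[0] - runner_up > 2 * max_changed_chunks


def toy_classifier(chunk: bytes) -> int:
    """Hypothetical stand-in for a trained model: 1 if the chunk contains 0xCC, else 0."""
    return int(0xCC in chunk)


label, votes = majority_vote(b"\xcc" * 8 + b"\x00" * 4, 4, toy_classifier)
```

Because each chunk is classified in isolation, injected content that is confined to a known number of chunks can only change that many votes, which is what makes a margin-based guarantee possible.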

Machine Learning for Windows Malware Detection and Classification: Methods, Challenges and Ongoing Research

Apr 29, 2024

Abstract: In this chapter, readers will explore how machine learning has been applied to build malware detection systems designed for the Windows operating system. This chapter starts by introducing the main components of a machine learning pipeline, highlighting the challenges of collecting and maintaining up-to-date datasets. Following this introduction, various state-of-the-art malware detectors are presented, encompassing both feature-based and deep learning-based detectors. Subsequent sections introduce the primary challenges encountered by machine learning-based malware detectors, including concept drift and adversarial attacks. Lastly, this chapter concludes by providing a brief overview of the ongoing research on adversarial defenses.

A Robust Defense against Adversarial Attacks on Deep Learning-based Malware Detectors via (De)Randomized Smoothing

Feb 26, 2024

Abstract: Deep learning-based malware detectors have been shown to be susceptible to adversarial malware examples, i.e. malware examples that have been deliberately manipulated in order to avoid detection. In light of the vulnerability of deep learning detectors to subtle input file modifications, we propose a practical defense against adversarial malware examples inspired by (de)randomized smoothing. In this work, we reduce the chances of sampling adversarial content injected by malware authors by selecting correlated subsets of bytes, rather than using Gaussian noise to randomize inputs as in the Computer Vision (CV) domain. During training, our ablation-based smoothing scheme trains a base classifier to make classifications on a subset of contiguous bytes or chunk of bytes. At test time, a large number of chunks are classified by the base classifier and the consensus among these classifications is reported as the final prediction. We propose two strategies to determine the location of the chunks used for classification: (1) randomly selecting the locations of the chunks and (2) selecting contiguous adjacent chunks. To showcase the effectiveness of our approach, we have trained two classifiers with our chunk-based ablation schemes on the BODMAS dataset. Our findings reveal that the chunk-based smoothing classifiers exhibit greater resilience against adversarial malware examples generated with state-of-the-art evasion attacks, outperforming a non-smoothed classifier and a randomized smoothing-based classifier by a great margin.
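The two chunk-location strategies named in this abstract can be sketched as functions that return chunk start offsets. This is one possible reading of the abstract, not the authors' code: the function names, the uniform sampling of start offsets, and the interpretation of "contiguous adjacent chunks" as back-to-back chunks starting at offset 0 are assumptions.

```python
import random
from typing import List


def random_chunk_starts(
    file_size: int, chunk_size: int, num_chunks: int, rng: random.Random
) -> List[int]:
    """Strategy (1): sample chunk start offsets uniformly at random (chunks may overlap)."""
    last_start = max(file_size - chunk_size, 0)
    return [rng.randint(0, last_start) for _ in range(num_chunks)]


def adjacent_chunk_starts(file_size: int, chunk_size: int) -> List[int]:
    """Strategy (2): back-to-back chunks that tile the file from offset 0."""
    return list(range(0, file_size, chunk_size))


random_starts = random_chunk_starts(10, 4, 5, random.Random(0))
adjacent_starts = adjacent_chunk_starts(10, 4)
```

Each offset would then be used to slice a chunk of `chunk_size` bytes from the executable and pass it to the base classifier.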

Towards a Practical Defense against Adversarial Attacks on Deep Learning-based Malware Detectors via Randomized Smoothing

Aug 17, 2023

Abstract: Malware detectors based on deep learning (DL) have been shown to be susceptible to malware examples that have been deliberately manipulated in order to evade detection, a.k.a. adversarial malware examples. More specifically, it has been shown that deep learning detectors are vulnerable to small changes to the input file. Given this vulnerability of deep learning detectors, we propose a practical defense against adversarial malware examples inspired by randomized smoothing. In our work, instead of employing Gaussian or Laplace noise when randomizing inputs, we propose a randomized ablation-based smoothing scheme that ablates a percentage of the bytes within an executable. During training, our randomized ablation-based smoothing scheme trains a base classifier based on ablated versions of the executable files. At test time, the final classification for a given input executable is taken as the class most commonly predicted by the classifier on a set of ablated versions of the original executable. To demonstrate the suitability of our approach, we have empirically evaluated the proposed ablation-based model against various state-of-the-art evasion attacks on the BODMAS dataset. Results show greater robustness and generalization capabilities to adversarial malware examples in comparison to a non-smoothed classifier.
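The test-time procedure described in this abstract (classify many ablated copies of the file, then take the most common prediction) can be sketched as follows. This is a minimal illustration under stated assumptions rather than the authors' implementation: the `ABLATED` token value of 256, the function names, the fixed seed, and the toy base classifier are all hypothetical.

```python
import random
from collections import Counter
from typing import Callable, List

ABLATED = 256  # hypothetical token outside the 0-255 byte range marking an ablated position


def ablate(data: bytes, ablation_rate: float, rng: random.Random) -> List[int]:
    """Replace a random fraction of byte positions with the ABLATED token."""
    n_ablate = int(len(data) * ablation_rate)
    positions = set(rng.sample(range(len(data)), n_ablate))
    return [ABLATED if i in positions else b for i, b in enumerate(data)]


def smoothed_predict(
    data: bytes,
    base_classifier: Callable[[List[int]], int],
    ablation_rate: float,
    num_samples: int,
    seed: int = 0,
) -> int:
    """Return the class most often predicted over num_samples ablated versions."""
    rng = random.Random(seed)
    votes = Counter(
        base_classifier(ablate(data, ablation_rate, rng)) for _ in range(num_samples)
    )
    return votes.most_common(1)[0][0]


def toy_classifier(tokens: List[int]) -> int:
    """Hypothetical stand-in for a trained model: 1 if any 0xCC byte survives ablation."""
    return int(0xCC in tokens)


sample = ablate(b"abcdefghij", 0.3, random.Random(0))
prediction = smoothed_predict(b"\xcc" * 20, toy_classifier, 0.5, 25)
```

In the scheme the abstract describes, the base classifier is also trained on ablated inputs, so it sees the same kind of input at training and test time.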
