Daniel G. McClement

Meta-Reinforcement Learning for Adaptive Control of Second Order Systems

Sep 19, 2022

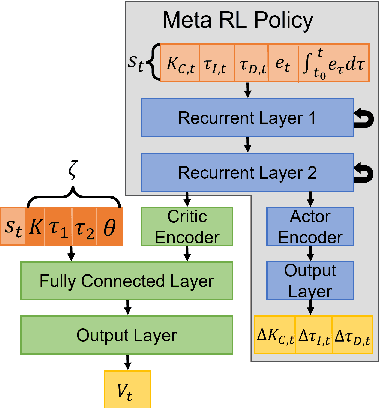

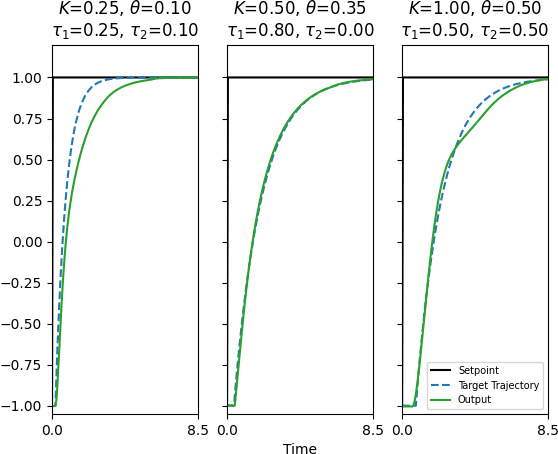

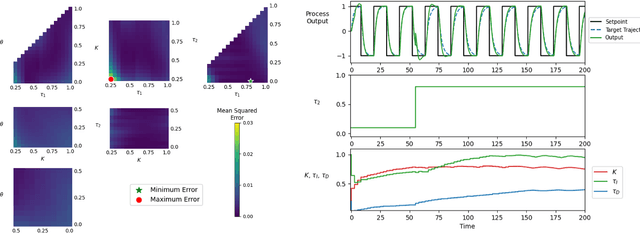

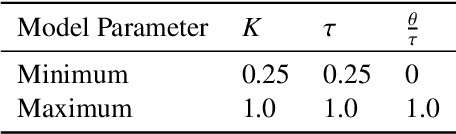

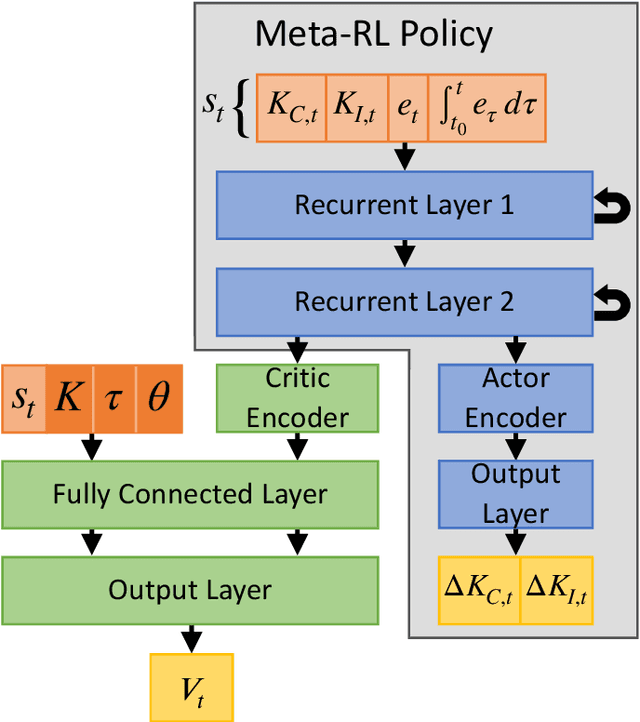

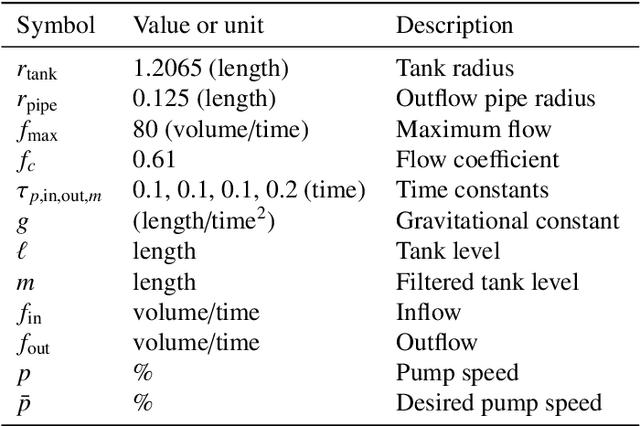

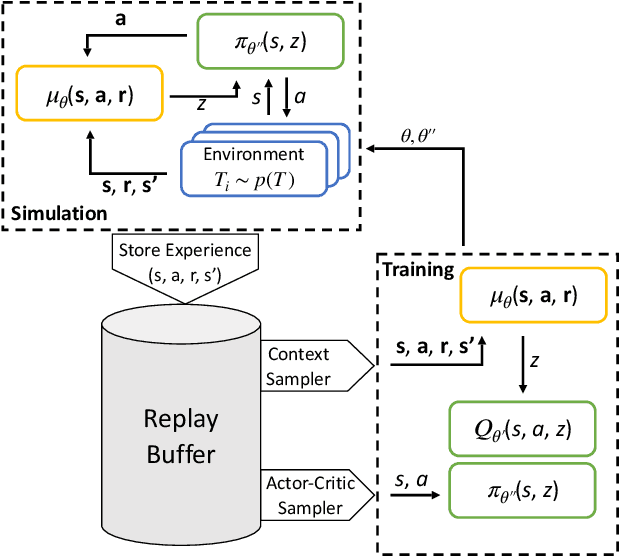

Abstract: Meta-learning is a branch of machine learning that aims to synthesize data from a distribution of related tasks to efficiently solve new ones. In process control, many systems have similar and well-understood dynamics, which suggests it is feasible to create a generalizable controller through meta-learning. In this work, we formulate a meta reinforcement learning (meta-RL) control strategy that takes advantage of known, offline information for training, such as a model structure. The meta-RL agent is trained over a distribution of model parameters, rather than a single model, enabling the agent to automatically adapt to changes in the process dynamics while maintaining performance. A key design element is the ability to leverage model-based information offline during training, while maintaining a model-free policy structure for interacting with new environments. Our previous work has demonstrated how this approach can be applied to the industrially relevant problem of tuning proportional-integral controllers to control first-order processes. In this work, we briefly reintroduce our methodology and demonstrate how it can be extended to proportional-integral-derivative controllers and second-order systems.

Meta Reinforcement Learning for Adaptive Control: An Offline Approach

Mar 17, 2022

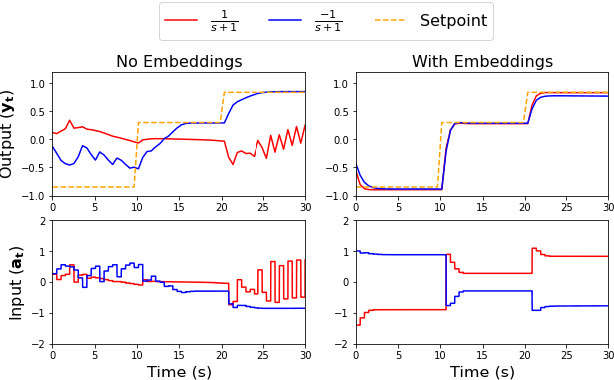

Abstract: Meta-learning is a branch of machine learning that trains neural network models to synthesize a wide variety of data in order to rapidly solve new problems. In process control, many systems have similar and well-understood dynamics, which suggests it is feasible to create a generalizable controller through meta-learning. In this work, we formulate a meta reinforcement learning (meta-RL) control strategy that takes advantage of known, offline information for training, such as the system gain or time constant, yet efficiently controls novel systems in a completely model-free fashion. Our meta-RL agent has a recurrent structure that accumulates "context" for its current dynamics through a hidden state variable. This end-to-end architecture enables the agent to automatically adapt to changes in the process dynamics. Moreover, the same agent can be deployed on systems with previously unseen nonlinearities and timescales. In tests reported here, the meta-RL agent was trained entirely offline, yet produced excellent results in novel settings. A key design element is the ability to leverage model-based information offline during training, while maintaining a model-free policy structure for interacting with novel environments. To illustrate the approach, we take the actions proposed by the meta-RL agent to be changes to gains of a proportional-integral controller, resulting in a generalized, adaptive, closed-loop tuning strategy. Meta-learning is a promising approach for constructing sample-efficient intelligent controllers.
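As a rough illustration of the setting this abstract describes, the sketch below samples several first-order processes (gain and time constant) and simulates a proportional-integral loop on each. It is not the authors' implementation: the parameter ranges, the helper names `sample_process` and `simulate_pi`, and the fixed gains `kc` and `ki` are assumptions made for this example. In the paper's approach a trained recurrent agent would propose changes to the gains, and that learned policy is not reproduced here.

```python
import random


def sample_process(rng):
    """Draw a hypothetical first-order process: gain K and time constant tau.

    The ranges are illustrative assumptions, not values from the paper.
    """
    return {"K": rng.uniform(0.5, 2.0), "tau": rng.uniform(1.0, 5.0)}


def simulate_pi(process, kc, ki, setpoint=1.0, dt=0.05, steps=2000):
    """Euler simulation of tau * dy/dt = -y + K * u under PI control.

    Returns the list of process outputs, one per time step.
    """
    y = 0.0
    integral = 0.0
    trajectory = []
    for _ in range(steps):
        error = setpoint - y
        integral += error * dt
        u = kc * error + ki * integral  # PI control law
        y += dt * (-y + process["K"] * u) / process["tau"]
        trajectory.append(y)
    return trajectory


# A small "task distribution": each task is one sampled process.
rng = random.Random(0)
tasks = [sample_process(rng) for _ in range(5)]

# Fixed gains stand in for the gains an adaptive tuner would propose.
final_outputs = [simulate_pi(p, kc=1.0, ki=0.5)[-1] for p in tasks]
```

With integral action, every sampled loop settles at the setpoint, but the transient differs from task to task. That variation across tasks is what a fixed pair of gains cannot account for and what an adaptive tuning strategy is meant to handle.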

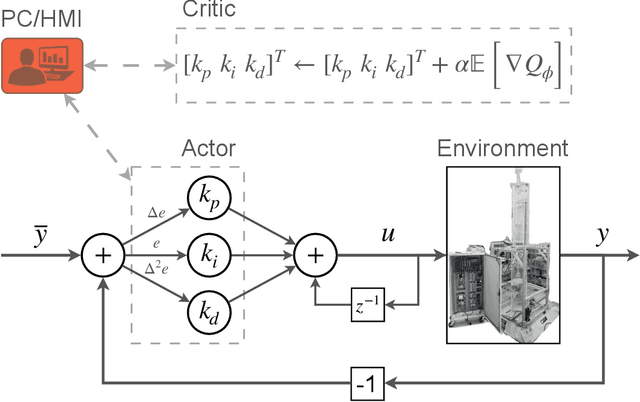

Deep Reinforcement Learning with Shallow Controllers: An Experimental Application to PID Tuning

Nov 13, 2021

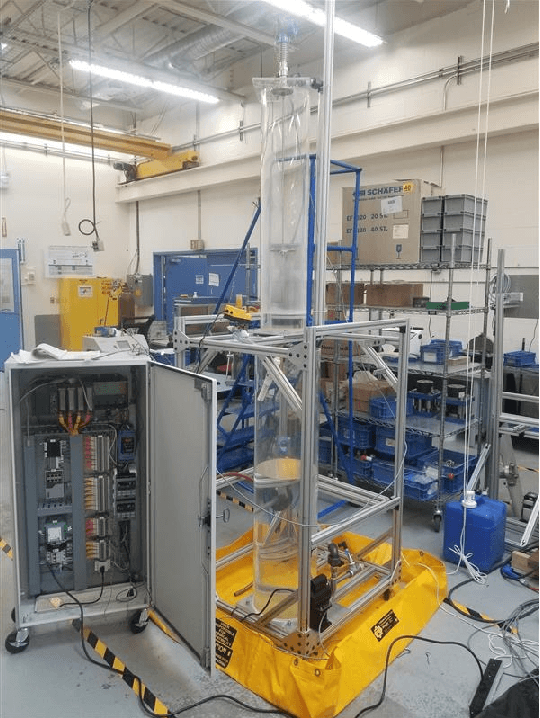

Abstract: Deep reinforcement learning (RL) is an optimization-driven framework for producing control strategies for general dynamical systems without explicit reliance on process models. Good results have been reported in simulation. Here we demonstrate the challenges in implementing a state-of-the-art deep RL algorithm on a real physical system. Aspects include the interplay between software and existing hardware; experiment design and sample efficiency; training subject to input constraints; and interpretability of the algorithm and control law. At the core of our approach is the use of a PID controller as the trainable RL policy. In addition to its simplicity, this approach has several appealing features: no additional hardware needs to be added to the control system, since a PID controller can easily be implemented through a standard programmable logic controller; the control law can easily be initialized in a "safe" region of the parameter space; and the final product, a well-tuned PID controller, has a form that practitioners can reason about and deploy with confidence.

A Meta-Reinforcement Learning Approach to Process Control

Mar 25, 2021

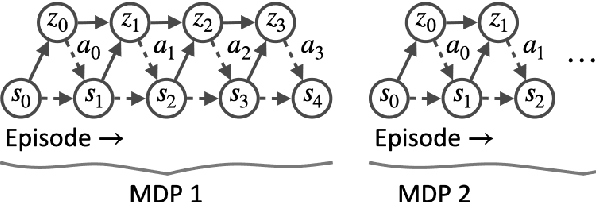

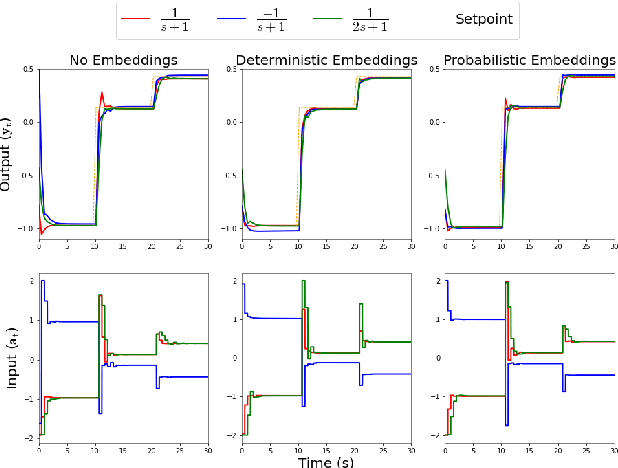

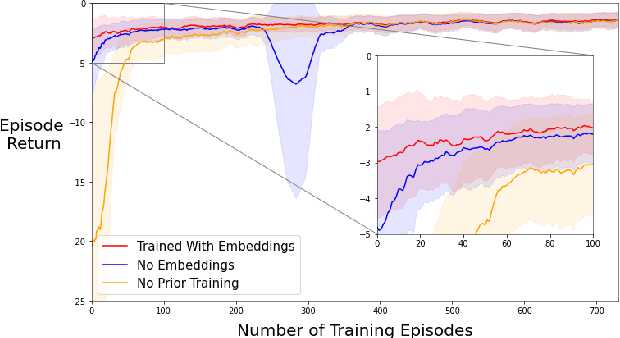

Abstract: Meta-learning is a branch of machine learning which aims to quickly adapt models, such as neural networks, to perform new tasks by learning an underlying structure across related tasks. In essence, models are being trained to learn new tasks effectively rather than master a single task. Meta-learning is appealing for process control applications because the perturbations to a process required to train an AI controller can be costly and unsafe. Additionally, the dynamics and control objectives are similar across many different processes, so it is feasible to create a generalizable controller through meta-learning capable of quickly adapting to different systems. In this work, we construct a deep reinforcement learning (DRL) based controller and meta-train the controller using a latent context variable through a separate embedding neural network. We test our meta-algorithm on its ability to adapt to new process dynamics as well as different control objectives on the same process. In both cases, our meta-learning algorithm adapts very quickly to new tasks, outperforming a regular DRL controller trained from scratch. Meta-learning appears to be a promising approach for constructing more intelligent and sample-efficient controllers.
