Daniel C. Brown

Neural Volumetric Reconstruction for Coherent Synthetic Aperture Sonar

Jun 16, 2023

Abstract: Synthetic aperture sonar (SAS) measures a scene from multiple views in order to increase the resolution of reconstructed imagery. Image reconstruction methods for SAS coherently combine measurements to focus acoustic energy onto the scene. However, image formation is typically under-constrained due to a limited number of measurements and bandlimited hardware, which limits the capabilities of existing reconstruction methods. To help meet these challenges, we design an analysis-by-synthesis optimization that leverages recent advances in neural rendering to perform coherent SAS imaging. Our optimization enables us to incorporate physics-based constraints and scene priors into the image formation process. We validate our method on simulation and experimental results captured in both air and water. We demonstrate both quantitatively and qualitatively that our method typically produces reconstructions superior to those of existing approaches. We share code and data for reproducibility.

Approximate Extraction of Late-Time Returns via Morphological Component Analysis

Aug 11, 2022

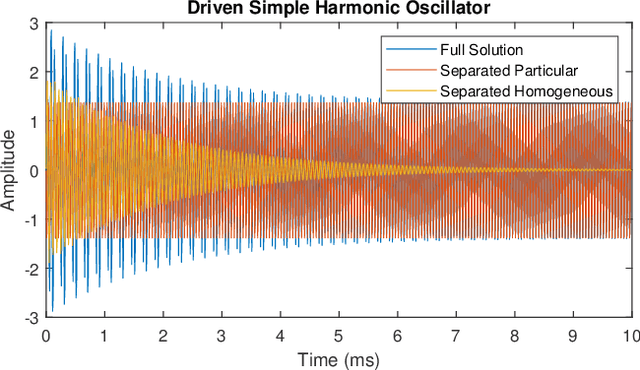

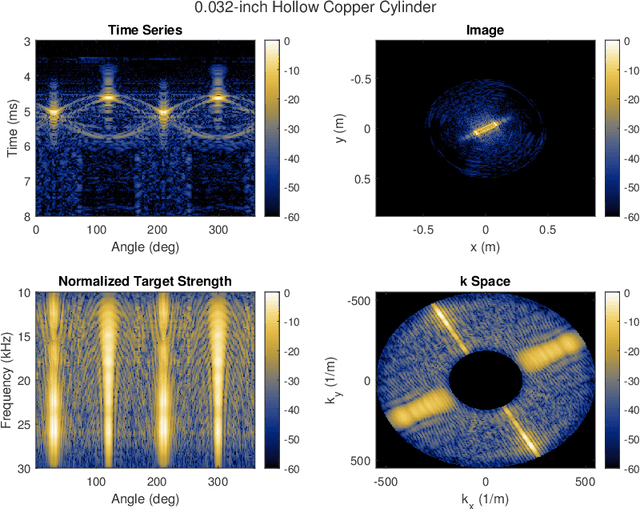

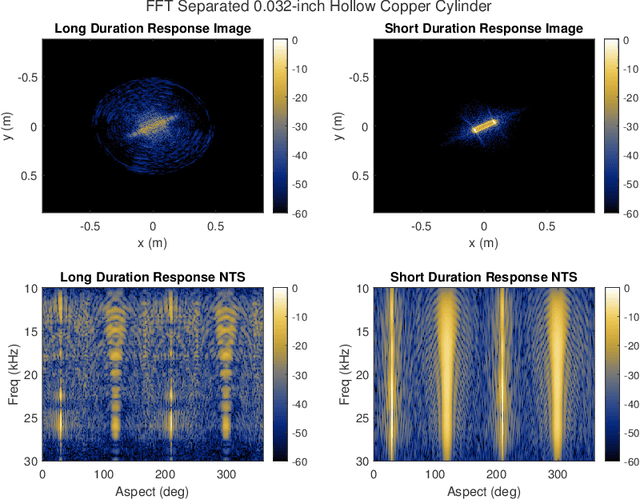

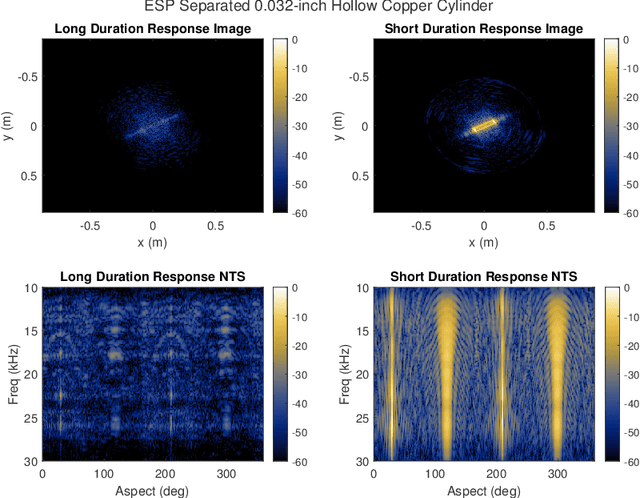

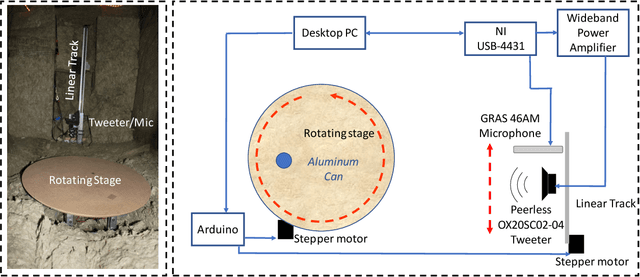

Abstract: A fundamental challenge in acoustic data processing is to separate a measured time series into relevant phenomenological components. A given measurement is typically assumed to be an additive mixture of myriad signals plus noise, whose separation forms an ill-posed inverse problem. In the setting of sensing elastic objects using active sonar, we wish to separate the early-time returns (e.g., returns from the object's exterior geometry) from late-time returns caused by elastic or compressional wave coupling. Under the framework of Morphological Component Analysis (MCA), we compare two separation models using the short-duration and long-duration responses as a proxy for early-time and late-time returns. Results are computed for Stanton's elastic cylinder model as well as on experimental data taken from an in-Air circular Synthetic Aperture Sonar (AirSAS) system, whose separated time series are formed into imagery. We find that MCA can be used to separate early-time and late-time responses in both cases without the use of time-gating. The separation process is demonstrated to be robust to noise and compatible with AirSAS image reconstruction. The best separation results are obtained with a flexible, but computationally intensive, frame-based signal model, while a faster Fourier-transform-based method is shown to have competitive performance.
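To make the separation idea concrete, here is a minimal sketch of MCA-style splitting of a signal into a short-duration and a long-duration part. It is not either of the paper's two models. As stand-in assumptions, it uses the identity basis for short-duration content, the unitary DFT basis for long-duration content, and plain ISTA (iterative soft thresholding) as the solver. The names `soft`, `objective`, and `mca_separate`, the weight `lam`, and the toy signal are all hypothetical.

```python
import numpy as np

def soft(z, thr):
    """Complex soft threshold: shrink magnitudes by thr, keep phases."""
    mag = np.abs(z)
    return z * np.maximum(1.0 - thr / np.maximum(mag, 1e-12), 0.0)

def objective(x, c_short, c_long, lam):
    """0.5 * ||residual||^2 plus an L1 penalty on both coefficient sets."""
    resid = x - c_short - np.fft.ifft(c_long, norm="ortho")
    l1 = np.sum(np.abs(c_short)) + np.sum(np.abs(c_long))
    return 0.5 * np.sum(np.abs(resid) ** 2) + lam * l1

def mca_separate(x, lam=0.1, n_iter=200):
    """Split x into spike-like and tone-like parts with ISTA.

    Assumed dictionaries: identity (short) and unitary DFT (long).
    """
    c_short = np.zeros(len(x), dtype=complex)
    c_long = np.zeros(len(x), dtype=complex)
    step = 0.5  # 1 / L, where L = 2 for two stacked orthonormal bases
    history = []
    for _ in range(n_iter):
        resid = x - c_short - np.fft.ifft(c_long, norm="ortho")
        # Gradient step on the data term, then shrinkage, for each dictionary.
        c_short = soft(c_short + step * resid, step * lam)
        c_long = soft(c_long + step * np.fft.fft(resid, norm="ortho"), step * lam)
        history.append(objective(x, c_short, c_long, lam))
    return c_short, np.fft.ifft(c_long, norm="ortho"), history

# Toy signal: one complex tone (long duration) plus two spikes (short duration).
n = 128
t = np.arange(n)
x = np.exp(2j * np.pi * 10 * t / n)
x[30] += 5.0
x[90] += 5.0

short, long_part, history = mca_separate(x)
top_two = sorted(int(i) for i in np.argsort(np.abs(short))[-2:])
print("largest short-duration samples at indices:", top_two)
```

The fixed step of 0.5 is the reciprocal of the Lipschitz constant for this pair of dictionaries, which is what keeps the objective from increasing between iterations. A frame-based model like the one the abstract describes would replace the two transforms with its own analysis and synthesis operators.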

SINR: Deconvolving Circular SAS Images Using Implicit Neural Representations

Apr 21, 2022

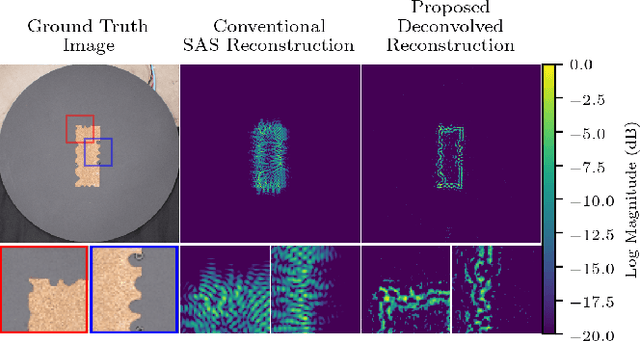

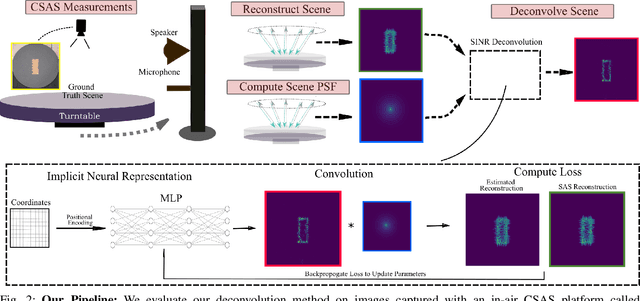

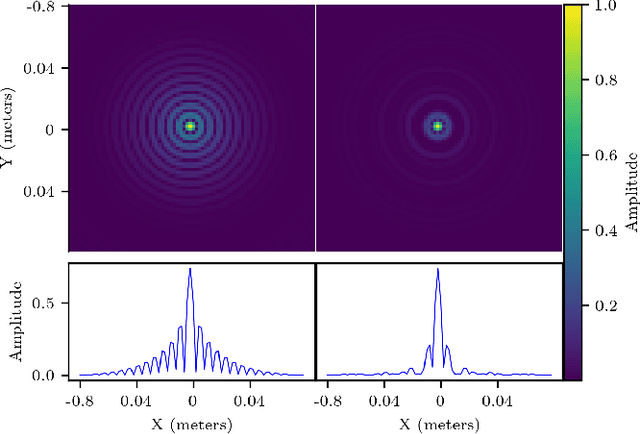

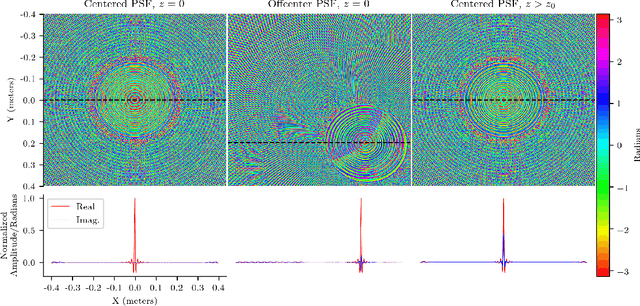

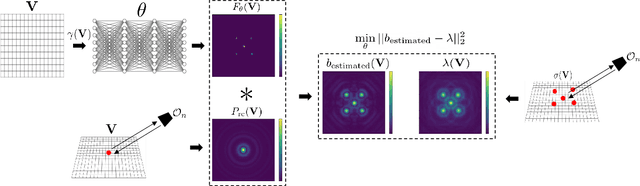

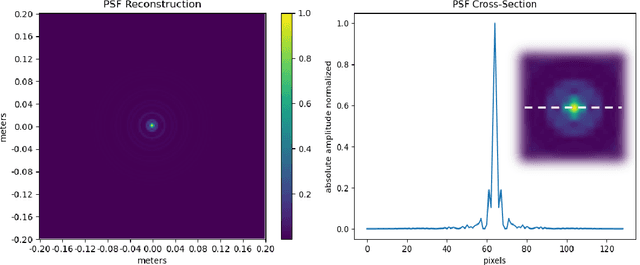

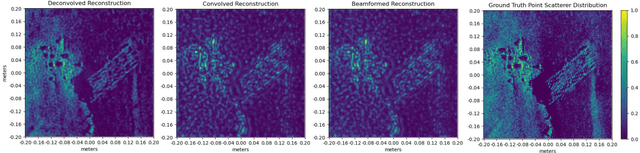

Abstract: Circular synthetic aperture sonars (CSAS) capture multiple observations of a scene to reconstruct high-resolution images. We can characterize resolution by modeling CSAS imaging as the convolution between a scene's underlying point scattering distribution and a system-dependent point spread function (PSF). The PSF is a function of the transmitted waveform's bandwidth and determines a fixed degree of blurring on reconstructed imagery. In theory, deconvolution overcomes bandwidth limitations by reversing the PSF-induced blur and recovering the scene's scattering distribution. However, deconvolution is an ill-posed inverse problem and sensitive to noise. We propose a self-supervised pipeline (one that does not require training data) that leverages an implicit neural representation (INR) for deconvolving CSAS images. We highlight the performance of our SAS INR pipeline, which we call SINR, by implementing and comparing against existing deconvolution methods. Additionally, prior SAS deconvolution methods assume a spatially-invariant PSF, which we demonstrate yields subpar performance in practice. We provide theory and methods to account for a spatially-varying CSAS PSF, and demonstrate that doing so enables SINR to achieve superior deconvolution performance on simulated and real acoustic SAS data. We provide code to encourage reproducibility of research.
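The convolution model and the noise sensitivity of deconvolution can be illustrated with a small sketch. It is not SINR. It assumes a spatially-invariant Gaussian PSF, a real-valued scene, and circular boundary conditions, and it compares a naive inverse filter with a classical Wiener-style regularized filter. The names `gaussian_psf`, `blur`, and `deconvolve`, and all parameter values, are hypothetical.

```python
import numpy as np

def gaussian_psf(n, sigma):
    """Gaussian PSF centered at pixel (0, 0) with circular wrap-around."""
    idx = np.fft.fftfreq(n) * n  # signed pixel offsets: 0, 1, ..., -2, -1
    xx, yy = np.meshgrid(idx, idx, indexing="ij")
    psf = np.exp(-(xx ** 2 + yy ** 2) / (2.0 * sigma ** 2))
    return psf / psf.sum()

def blur(scene, psf):
    """Imaging model: circular convolution of the scene with the PSF."""
    return np.real(np.fft.ifft2(np.fft.fft2(scene) * np.fft.fft2(psf)))

def deconvolve(image, psf, eps):
    """Regularized inverse filter; eps = 0 gives the naive inverse."""
    H = np.fft.fft2(psf)
    G = np.conj(H) / (np.abs(H) ** 2 + eps)
    return np.real(np.fft.ifft2(np.fft.fft2(image) * G))

n = 32
psf = gaussian_psf(n, sigma=1.0)

# Scene made of three point scatterers.
scene = np.zeros((n, n))
scene[8, 8] = scene[16, 20] = scene[25, 5] = 1.0

rng = np.random.default_rng(0)
noisy = blur(scene, psf) + 0.01 * rng.standard_normal((n, n))

err_naive = np.linalg.norm(deconvolve(noisy, psf, 0.0) - scene)
err_wiener = np.linalg.norm(deconvolve(noisy, psf, 1e-2) - scene)
print("naive inverse error:", err_naive)
print("regularized error:  ", err_wiener)
```

The naive inverse divides by PSF spectrum values that are close to zero at high spatial frequencies, so even a small amount of noise is strongly amplified there. The regularized filter trades some resolution for stability. A spatially-varying PSF, which the abstract argues for, cannot be expressed as a single FFT product like this.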

Enveloped Sinusoid Parseval Frames

Apr 18, 2022

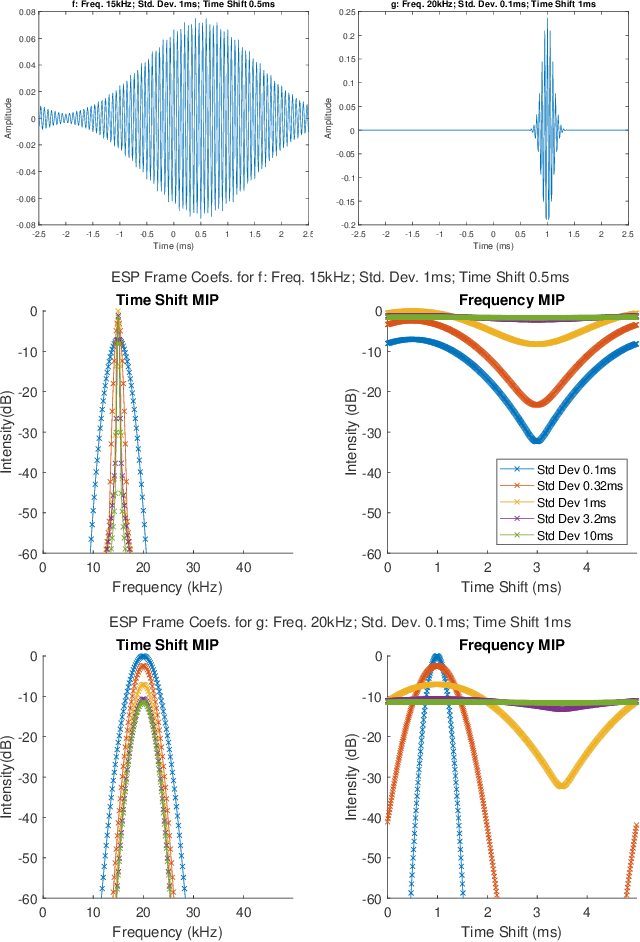

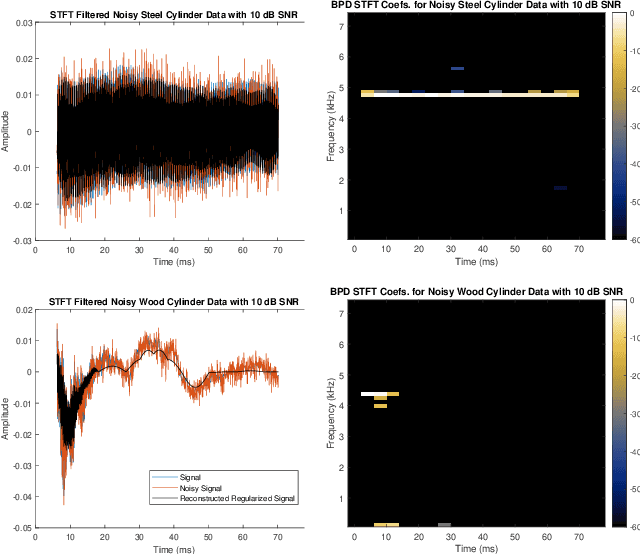

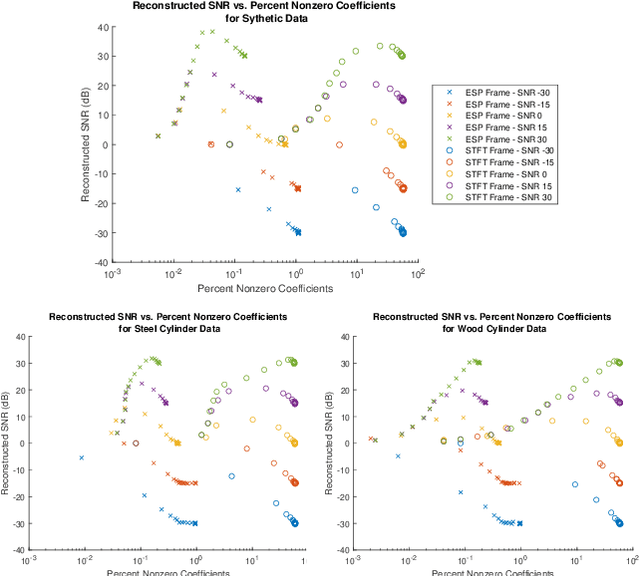

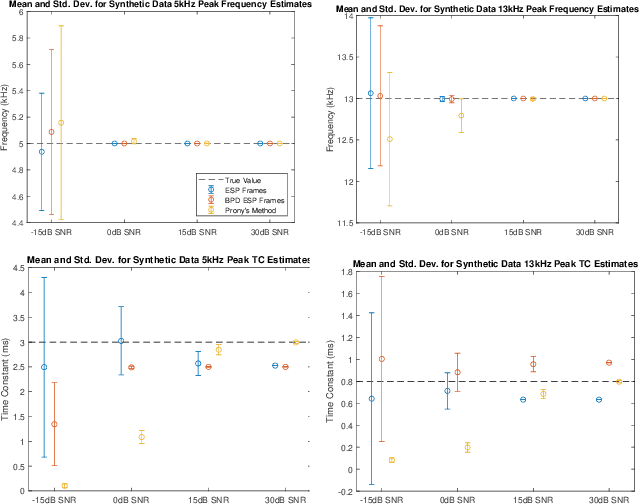

Abstract: This paper presents a method of constructing Parseval frames from any collection of complex envelopes. The resulting Enveloped Sinusoid Parseval (ESP) frames can represent a wide variety of signal types as specified by their physical morphology. Since the ESP frame retains its Parseval property even when generated from a variety of envelopes, it is compatible with large-scale and iterative optimization algorithms. ESP frames are constructed by applying time-shifted enveloping functions to the discrete Fourier Transform basis, and in this way are similar to the short-time Fourier Transform. This work provides examples of ESP frame generation for both synthetic and experimentally measured signals. Furthermore, the frame's compatibility with distributed sparse optimization frameworks is demonstrated, and efficient implementation details are provided. Numerical experiments on acoustic data reveal that the flexibility of this method allows it to be simultaneously competitive with the STFT in time-frequency processing and also with Prony's Method for time-constant parameter estimation, surpassing the shortcomings of each individual technique.
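The construction described above (time-shifted envelopes applied to the DFT basis) can be sketched as follows. This is one simple way to obtain a Parseval frame under stated assumptions, and it is not necessarily the paper's construction. It assumes every circular time shift is used, and a single global scale factor is applied to the envelopes. The two envelopes and the names `make_envelopes`, `frame_scale`, `analysis`, and `synthesis` are hypothetical.

```python
import numpy as np

def make_envelopes(n):
    """Two illustrative envelopes: a short Gaussian and a decaying exponential."""
    t = np.arange(n)
    gauss = np.exp(-0.5 * ((t - n // 2) / 2.0) ** 2)
    decay = np.exp(-t / 8.0)
    return [gauss, decay]

def frame_scale(envelopes):
    """Global normalization that makes the frame operator the identity."""
    return np.sqrt(sum(np.sum(np.abs(w) ** 2) for w in envelopes))

def analysis(x, envelopes):
    """Inner products of x with every shifted, modulated envelope."""
    n = len(x)
    scale = frame_scale(envelopes)
    coeffs = np.empty((len(envelopes), n, n), dtype=complex)
    for k, w in enumerate(envelopes):
        for m in range(n):
            w_shift = np.roll(w, m) / scale
            coeffs[k, m] = np.fft.fft(x * np.conj(w_shift)) / np.sqrt(n)
    return coeffs

def synthesis(coeffs, envelopes):
    """Adjoint of analysis; for a Parseval frame this inverts it exactly."""
    n = coeffs.shape[-1]
    scale = frame_scale(envelopes)
    x = np.zeros(n, dtype=complex)
    for k, w in enumerate(envelopes):
        for m in range(n):
            w_shift = np.roll(w, m) / scale
            x += w_shift * np.fft.ifft(coeffs[k, m]) * np.sqrt(n)
    return x

n = 32
envelopes = make_envelopes(n)
rng = np.random.default_rng(0)
x = rng.standard_normal(n) + 1j * rng.standard_normal(n)

coeffs = analysis(x, envelopes)
x_rec = synthesis(coeffs, envelopes)
print("coefficient energy:", np.sum(np.abs(coeffs) ** 2))
print("signal energy:     ", np.sum(np.abs(x) ** 2))
```

With all circular shifts included, the frame operator is diagonal with a constant entry equal to the summed envelope energies, so dividing by `frame_scale` yields energy preservation and perfect reconstruction by the adjoint. This property is what makes such a frame convenient inside iterative solvers, since no separate dual frame has to be computed.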

Implicit Neural Representations for Deconvolving SAS Images

Dec 16, 2021

Abstract: Synthetic aperture sonar (SAS) image resolution is constrained by waveform bandwidth and array geometry. Specifically, the waveform bandwidth determines a point spread function (PSF) that blurs the locations of point scatterers in the scene. In theory, deconvolving the reconstructed SAS image with the scene PSF restores the original distribution of scatterers and yields sharper reconstructions. However, deconvolution is an ill-posed operation that is highly sensitive to noise. In this work, we leverage implicit neural representations (INRs), shown to be strong priors for the natural image space, to deconvolve SAS images. Importantly, our method does not require training data, as we perform our deconvolution through an analysis-by-synthesis optimization in a self-supervised fashion. We validate our method on simulated SAS data created with a point scattering model and real data captured with an in-air circular SAS. This work is an important first step towards applying neural networks for SAS image deconvolution.

GPU Acceleration for Synthetic Aperture Sonar Image Reconstruction

Jan 14, 2021

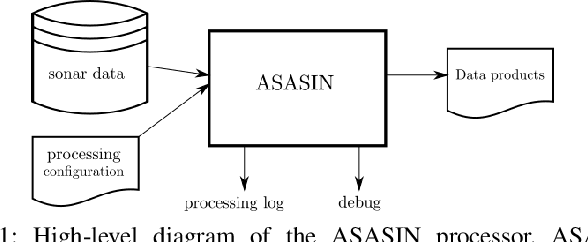

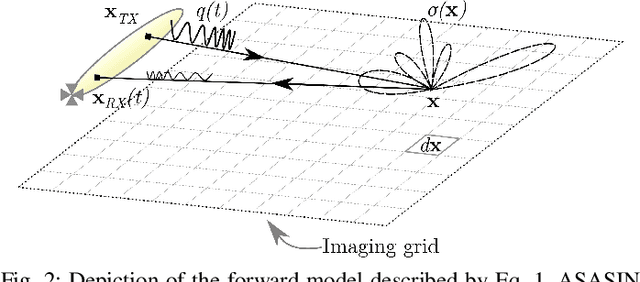

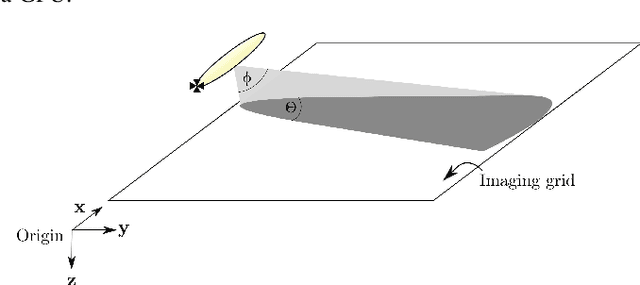

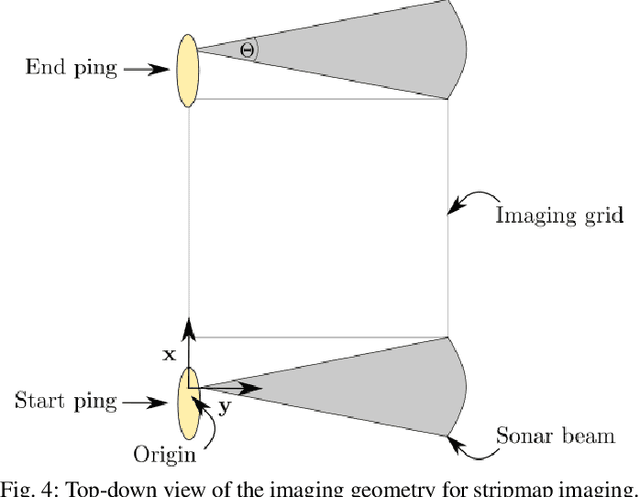

Abstract: Synthetic aperture sonar (SAS) image reconstruction, or beamforming as it is often referred to within the SAS community, comprises a class of computationally intensive algorithms for creating coherent high-resolution imagery from successive spatially varying sonar pings. Image reconstruction is usually performed topside because of the large compute burden necessitated by the procedure. Historically, image reconstruction required significant assumptions in order to produce real-time imagery within an unmanned underwater vehicle's (UUV's) size, weight, and power (SWaP) constraints. However, these assumptions result in reduced image quality. In this work, we describe ASASIN, the Advanced Synthetic Aperture Sonar Imagining eNgine. ASASIN is a time-domain backprojection image reconstruction suite utilizing graphics processing units (GPUs) allowing real-time operation on UUVs without sacrificing image quality. We describe several speedups employed in ASASIN allowing us to achieve this objective. Furthermore, ASASIN's signal processing chain is capable of producing 2D and 3D SAS imagery as we will demonstrate. Finally, we measure ASASIN's performance on a variety of GPUs and create a model capable of predicting performance. We demonstrate our model's usefulness in predicting run-time performance on desktop and embedded GPU hardware.
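For readers unfamiliar with time-domain backprojection, the following is a minimal CPU sketch of the general technique, not ASASIN's implementation. It assumes a 2D geometry, a co-located transmitter and receiver moving along the line y = 0, ideal complex-baseband echoes from a single point scatterer, and linear interpolation. Every constant and the names `simulate_pings` and `backproject` are hypothetical.

```python
import numpy as np

C = 1500.0   # assumed sound speed in water (m/s)
FC = 100e3   # assumed center frequency (Hz)
BW = 30e3    # assumed bandwidth (Hz), sets the envelope width
FS = 200e3   # assumed complex-baseband sample rate (Hz)

def simulate_pings(sensor_x, target, t):
    """Ideal basebanded echoes of one point scatterer, one row per ping."""
    r = np.hypot(target[0] - sensor_x, target[1])  # one-way range per ping
    tau = 2.0 * r / C                              # round-trip delay per ping
    env = np.exp(-0.5 * ((t[None, :] - tau[:, None]) * BW) ** 2)
    return env * np.exp(-2j * np.pi * FC * tau)[:, None]

def backproject(pings, sensor_x, t, xs, ys):
    """Coherently sum each ping's sample at every pixel's round-trip delay."""
    gx, gy = np.meshgrid(xs, ys, indexing="ij")
    image = np.zeros(gx.shape, dtype=complex)
    for sx, ping in zip(sensor_x, pings):
        tau = 2.0 * np.hypot(gx - sx, gy) / C
        # Interpolate real and imaginary parts at the pixel delays.
        samp = np.interp(tau, t, ping.real) + 1j * np.interp(tau, t, ping.imag)
        # Undo the carrier phase so returns from a true scatterer add in phase.
        image += samp * np.exp(2j * np.pi * FC * tau)
    return image

sensor_x = np.linspace(-1.0, 1.0, 41)        # ping positions along track (m)
t = np.arange(6.0e-3, 7.4e-3, 1.0 / FS)      # recorded time window (s)
xs = np.linspace(-0.2, 0.2, 41)              # image grid, along track (m)
ys = np.linspace(4.8, 5.2, 41)               # image grid, range (m)
target = (xs[20], ys[20])                    # scatterer placed on a grid pixel

pings = simulate_pings(sensor_x, target, t)
image = backproject(pings, sensor_x, t, xs, ys)
peak = np.unravel_index(np.argmax(np.abs(image)), image.shape)
print("brightest pixel index:", peak)
```

Each pixel's value is computed independently of every other pixel, which is the property that makes this algorithm a natural fit for GPUs: one thread per pixel can run the inner loop over pings.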
