Albert Reed

NeRF-enabled Analysis-Through-Synthesis for ISAR Imaging of Small Everyday Objects with Sparse and Noisy UWB Radar Data

Oct 14, 2024

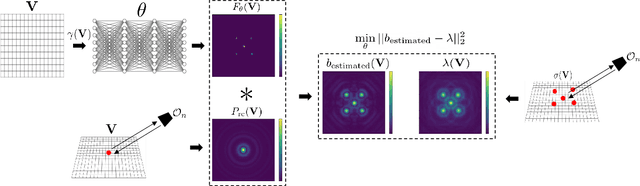

Abstract: Inverse Synthetic Aperture Radar (ISAR) imaging presents a formidable challenge when it comes to small everyday objects due to their limited Radar Cross-Section (RCS) and the inherent resolution constraints of radar systems. Existing ISAR reconstruction methods, including backprojection (BP), often require complex setups and controlled environments, rendering them impractical for many noisy real-world scenarios. In this paper, we propose a novel Analysis-through-Synthesis (ATS) framework enabled by Neural Radiance Fields (NeRF) for high-resolution coherent ISAR imaging of small objects using sparse and noisy Ultra-Wideband (UWB) radar data with an inexpensive and portable setup. Our end-to-end framework integrates UWB radar wave propagation, reflection characteristics, and scene priors, enabling efficient 2D scene reconstruction without the need for costly anechoic chambers or complex measurement test beds. With qualitative and quantitative comparisons, we demonstrate that the proposed method outperforms traditional techniques and generates ISAR images of complex scenes with multiple targets and complex structures in Non-Line-of-Sight (NLOS) and noisy scenarios, particularly with a limited number of views and sparse UWB radar scans. This work represents a significant step towards practical, cost-effective ISAR imaging of small everyday objects, with broad implications for robotics and mobile sensing applications.

SINR: Deconvolving Circular SAS Images Using Implicit Neural Representations

Apr 21, 2022

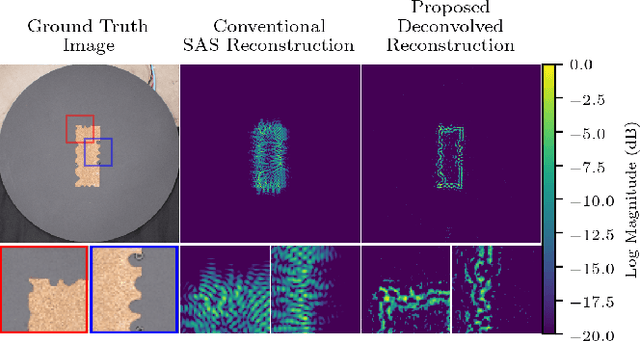

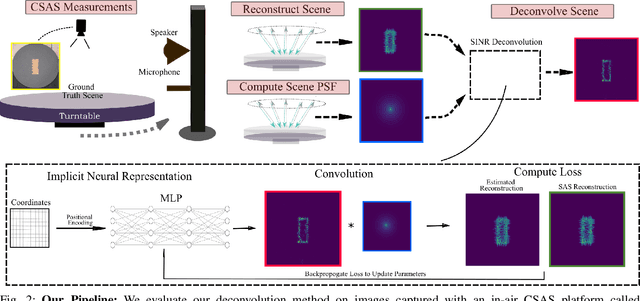

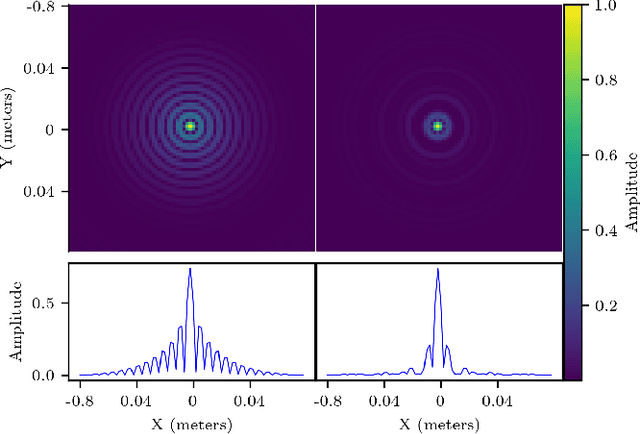

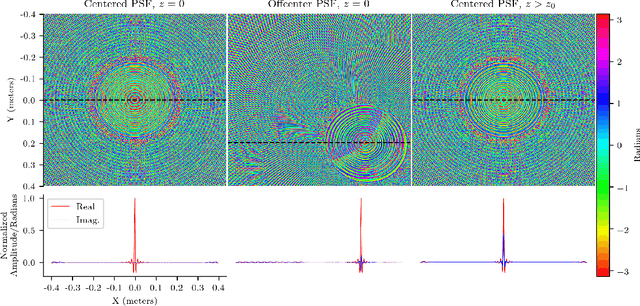

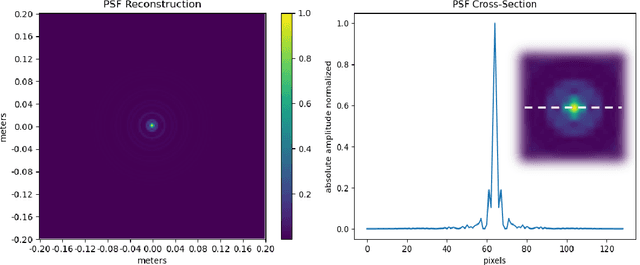

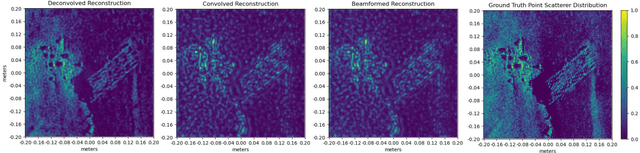

Abstract: Circular synthetic aperture sonars (CSAS) capture multiple observations of a scene to reconstruct high-resolution images. We can characterize resolution by modeling CSAS imaging as the convolution between a scene's underlying point scattering distribution and a system-dependent point spread function (PSF). The PSF is a function of the transmitted waveform's bandwidth and determines a fixed degree of blurring on reconstructed imagery. In theory, deconvolution overcomes bandwidth limitations by reversing the PSF-induced blur and recovering the scene's scattering distribution. However, deconvolution is an ill-posed inverse problem and is sensitive to noise. We propose a self-supervised pipeline (one that does not require training data) that leverages an implicit neural representation (INR) for deconvolving CSAS images. We highlight the performance of our SAS INR pipeline, which we call SINR, by implementing and comparing to existing deconvolution methods. Additionally, prior SAS deconvolution methods assume a spatially-invariant PSF, which we demonstrate yields subpar performance in practice. We provide theory and methods to account for a spatially-varying CSAS PSF, and demonstrate that doing so enables SINR to achieve superior deconvolution performance on simulated and real acoustic SAS data. We provide code to encourage reproducibility of research.
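The convolution model and the noise sensitivity of deconvolution described in this abstract can be illustrated with a small 1D toy example. This is only a sketch under stated assumptions, not the SINR pipeline: the Gaussian PSF, the spike positions, the noise level, and the names `gaussian_transfer` and `deconvolution_errors` are all hypothetical choices made for illustration, and the regularized (Wiener-style) inverse stands in for a generic classical baseline.

```python
import numpy as np

def gaussian_transfer(n, sigma):
    """Frequency response of a circular Gaussian blur (assumed PSF, std `sigma` samples)."""
    freqs = np.fft.fftfreq(n)  # frequencies in cycles per sample
    return np.exp(-2.0 * (np.pi * sigma * freqs) ** 2)

def deconvolution_errors(n=256, sigma=3.0, noise_std=0.01, eps=1e-2, seed=0):
    """Return RMSE of a naive inverse filter and of a regularized inverse filter."""
    rng = np.random.default_rng(seed)

    # Hypothetical scene: three unit-amplitude point scatterers.
    scene = np.zeros(n)
    scene[[40, 128, 200]] = 1.0

    # Forward model: measured = scene convolved with the PSF, plus noise.
    H = gaussian_transfer(n, sigma)
    blurred = np.fft.ifft(np.fft.fft(scene) * H).real
    measured = blurred + noise_std * rng.standard_normal(n)
    Y = np.fft.fft(measured)

    # Naive deconvolution divides by H, which is nearly zero at high frequencies,
    # so the noise in those bands is amplified enormously.
    naive = np.fft.ifft(Y / H).real

    # Regularized inverse: eps keeps the division bounded where H is tiny.
    regularized = np.fft.ifft(Y * H / (H ** 2 + eps)).real

    def rmse(estimate):
        return float(np.sqrt(np.mean((estimate - scene) ** 2)))

    return rmse(naive), rmse(regularized)

naive_err, regularized_err = deconvolution_errors()
print(f"naive RMSE: {naive_err:.3e}, regularized RMSE: {regularized_err:.3e}")
```

The naive error is dominated by amplified noise, which is the ill-posedness the abstract refers to. Any practical method needs some form of prior or regularization, and the abstract's proposal is to use an INR for that role.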

Implicit Neural Representations for Deconvolving SAS Images

Dec 16, 2021

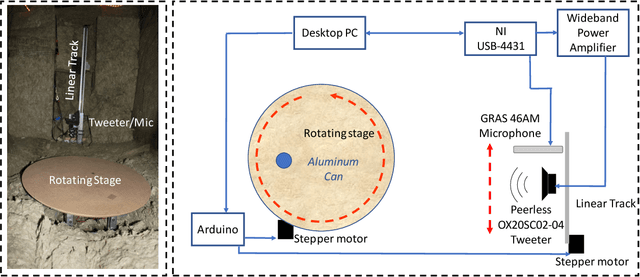

Abstract: Synthetic aperture sonar (SAS) image resolution is constrained by waveform bandwidth and array geometry. Specifically, the waveform bandwidth determines a point spread function (PSF) that blurs the locations of point scatterers in the scene. In theory, deconvolving the reconstructed SAS image with the scene PSF restores the original distribution of scatterers and yields sharper reconstructions. However, deconvolution is an ill-posed operation that is highly sensitive to noise. In this work, we leverage implicit neural representations (INRs), shown to be strong priors for the natural image space, to deconvolve SAS images. Importantly, our method does not require training data, as we perform our deconvolution through an analysis-by-synthesis optimization in a self-supervised fashion. We validate our method on simulated SAS data created with a point scattering model and real data captured with an in-air circular SAS. This work is an important first step towards applying neural networks for SAS image deconvolution.
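The analysis-by-synthesis idea in this abstract (estimate a scene, re-synthesize the measurement through the known PSF, and update the estimate to reduce the mismatch, using only the measurement itself) might be sketched as below. This is a simplified illustration, not the paper's method: the scene is optimized directly as a vector of samples rather than being parameterized by an INR, the Gaussian PSF is an assumption, and the names `gaussian_transfer`, `synthesize`, and `analysis_by_synthesis` are hypothetical.

```python
import numpy as np

def gaussian_transfer(n, sigma):
    """Frequency response of a circular Gaussian blur (assumed PSF)."""
    freqs = np.fft.fftfreq(n)
    return np.exp(-2.0 * (np.pi * sigma * freqs) ** 2)

def synthesize(x, H):
    """Forward model: circularly convolve scene estimate x with the PSF."""
    return np.fft.ifft(np.fft.fft(x) * H).real

def analysis_by_synthesis(y, H, steps=200, lr=1.0):
    """Gradient descent on 0.5 * ||synthesize(x) - y||^2, starting from zeros."""
    x = np.zeros_like(y)
    losses = []
    for _ in range(steps):
        residual = synthesize(x, H) - y
        losses.append(0.5 * float(np.sum(residual ** 2)))
        # H is real and even, so the blur operator is its own adjoint and the
        # gradient is the residual passed through the same blur.
        x -= lr * synthesize(residual, H)
    return x, losses

# Self-supervised use: only the blurred, noisy measurement is available.
rng = np.random.default_rng(0)
scene = np.zeros(256)
scene[[40, 128, 200]] = 1.0
H = gaussian_transfer(256, sigma=3.0)
measurement = synthesize(scene, H) + 0.01 * rng.standard_normal(256)

estimate, losses = analysis_by_synthesis(measurement, H)
print(f"loss: {losses[0]:.4f} -> {losses[-1]:.4f}")
```

Because the transfer function never exceeds 1, a step size of 1.0 keeps the loss from increasing. Stopping after a fixed number of steps acts as an implicit regularizer here, whereas the abstract relies on the INR as the prior.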

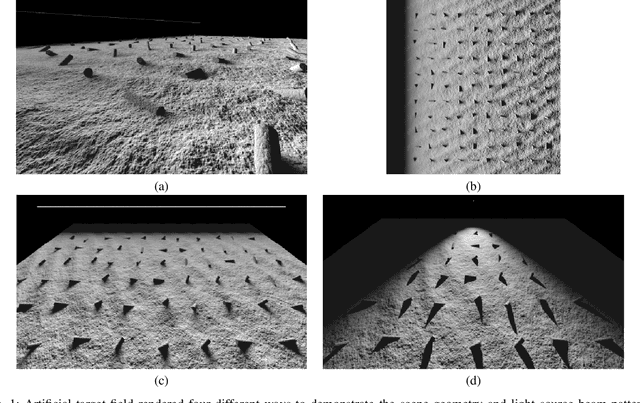

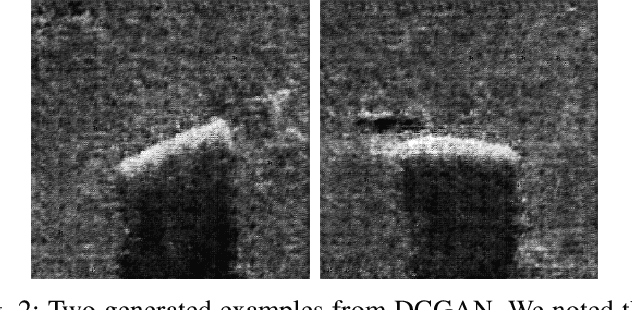

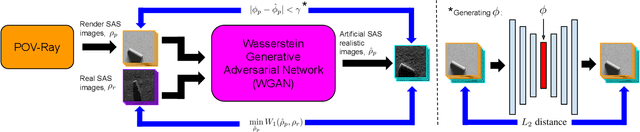

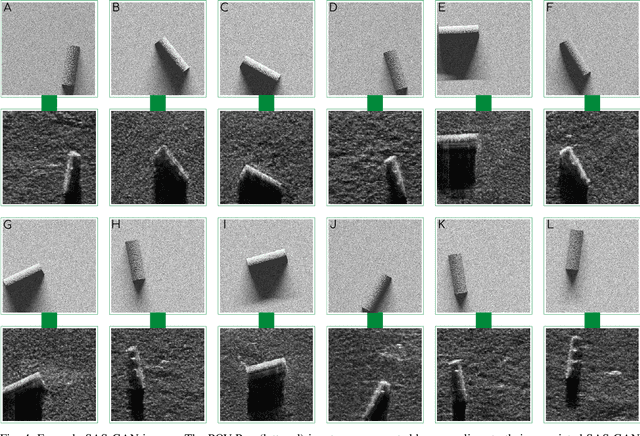

Coupling Rendering and Generative Adversarial Networks for Artificial SAS Image Generation

Oct 02, 2019

Abstract: Acquisition of Synthetic Aperture Sonar (SAS) datasets is bottlenecked by the costly deployment of SAS imaging systems, and even when data acquisition is possible, the data is often skewed towards containing barren seafloor rather than objects of interest. We present a novel pipeline, called SAS GAN, which couples an optical renderer with a generative adversarial network (GAN) to synthesize realistic SAS images of targets on the seafloor. This coupling enables high levels of SAS image realism while enabling control over image geometry and parameters. We demonstrate qualitative results by presenting examples of images created with our pipeline. We also present quantitative results through the use of t-SNE and the Fréchet Inception Distance to argue that our generated SAS imagery potentially augments SAS datasets more effectively than an off-the-shelf GAN.
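For reference, the Fréchet Inception Distance mentioned in this abstract is commonly defined as the Fréchet distance between two Gaussians fitted to Inception-network features of real and generated images. The symbols below are notation introduced here, not taken from the abstract: $\mu_r, \Sigma_r$ and $\mu_g, \Sigma_g$ denote the feature mean and covariance for real and generated images, respectively.

$$
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2 \left( \Sigma_r \Sigma_g \right)^{1/2} \right)
$$

Lower values indicate that the generated feature distribution is closer to the real one.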
