Thomas Blanford

Neural Volumetric Reconstruction for Coherent Synthetic Aperture Sonar

Jun 16, 2023

Abstract: Synthetic aperture sonar (SAS) measures a scene from multiple views in order to increase the resolution of reconstructed imagery. Image reconstruction methods for SAS coherently combine measurements to focus acoustic energy onto the scene. However, image formation is typically under-constrained due to a limited number of measurements and bandlimited hardware, which limits the capabilities of existing reconstruction methods. To help meet these challenges, we design an analysis-by-synthesis optimization that leverages recent advances in neural rendering to perform coherent SAS imaging. Our optimization enables us to incorporate physics-based constraints and scene priors into the image formation process. We validate our method on simulations and on experimental data captured in both air and water. We demonstrate both quantitatively and qualitatively that our method typically produces reconstructions superior to those of existing approaches. We share code and data for reproducibility.
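For reference, "coherently combining measurements" in conventional SAS image formation can be illustrated with a minimal delay-and-sum (backprojection) sketch. This is the classical baseline, not the neural method described above. It assumes a monostatic, stepped-frequency sensor, ideal point scatterers, straight-ray propagation at an in-air sound speed of 343 m/s, and a 90-view circular aperture; `simulate_returns` and `backproject` are hypothetical helper names.

```python
import numpy as np

SOUND_SPEED = 343.0  # m/s; assumed in-air propagation speed


def simulate_returns(views, scatterers, amps, freqs, c=SOUND_SPEED):
    """Toy monostatic, stepped-frequency echoes from ideal point scatterers (assumed model)."""
    # Range from every view position to every scatterer: shape (K, S)
    ranges = np.linalg.norm(views[:, None, :] - scatterers[None, :, :], axis=-1)
    # Round-trip phase delay for each view, scatterer and frequency: shape (K, S, F)
    echoes = np.exp(-2j * np.pi * freqs[None, None, :] * 2.0 * ranges[:, :, None] / c)
    # Weighted sum over scatterers: shape (K, F)
    return np.einsum("s,ksf->kf", amps, echoes)


def backproject(returns, views, pixels, freqs, c=SOUND_SPEED):
    """Delay-and-sum: undo each pixel's round-trip phase, then sum all views and frequencies."""
    ranges = np.linalg.norm(views[:, None, :] - pixels[None, :, :], axis=-1)  # (K, P)
    steering = np.exp(2j * np.pi * freqs[None, None, :] * 2.0 * ranges[:, :, None] / c)
    return np.einsum("kf,kpf->p", returns, steering)


# Assumed geometry: 90 views on a 1 m radius circle around the scene, 20-30 kHz band
angles = np.linspace(0.0, 2.0 * np.pi, 90, endpoint=False)
views = np.stack([np.cos(angles), np.sin(angles)], axis=-1)
freqs = np.linspace(20e3, 30e3, 24)

# 21 x 21 pixel grid (2 cm spacing) over a 0.4 m x 0.4 m patch
axis = np.linspace(-0.2, 0.2, 21)
gx, gy = np.meshgrid(axis, axis)
pixels = np.stack([gx.ravel(), gy.ravel()], axis=-1)

# One unit-strength scatterer placed exactly on a pixel
target = np.array([[axis[15], axis[6]]])
returns = simulate_returns(views, target, np.array([1.0]), freqs)
image = backproject(returns, views, pixels, freqs)
peak = pixels[np.argmax(np.abs(image))]
print("brightest pixel:", peak)
```

The brightest pixel should coincide with the simulated scatterer, where every view and frequency adds in phase. With only 90 views this band is angularly undersampled, so weaker aliasing artifacts can appear elsewhere in `image`.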

SINR: Deconvolving Circular SAS Images Using Implicit Neural Representations

Apr 21, 2022

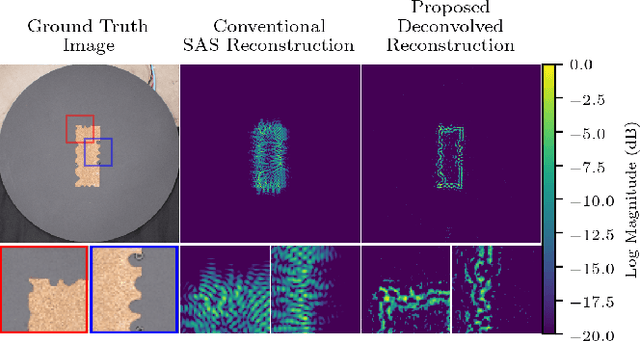

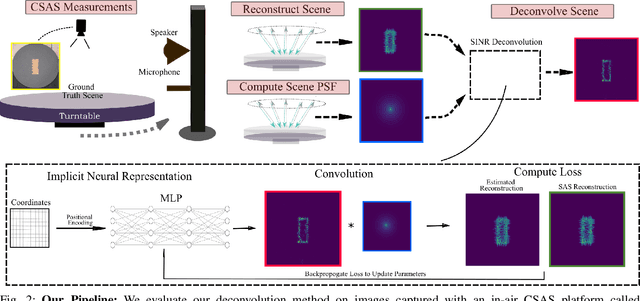

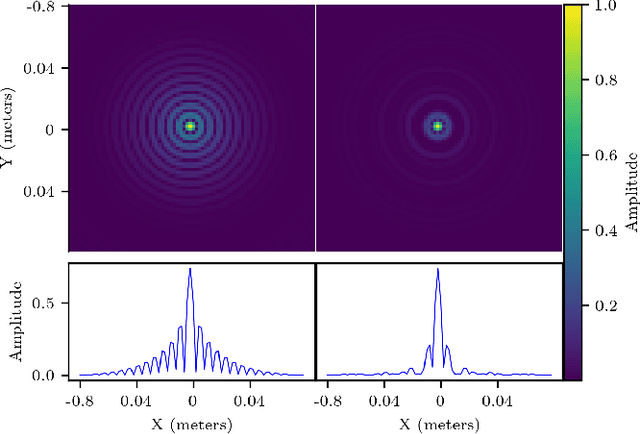

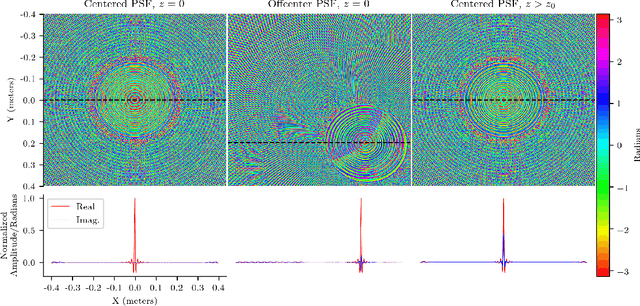

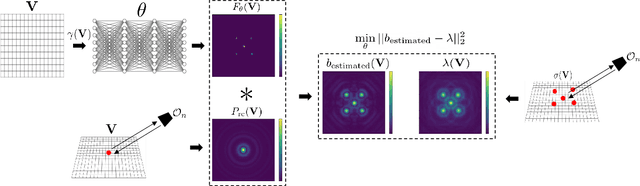

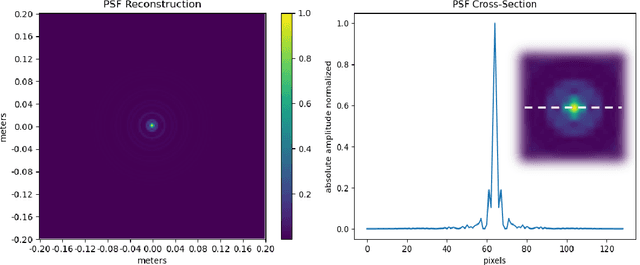

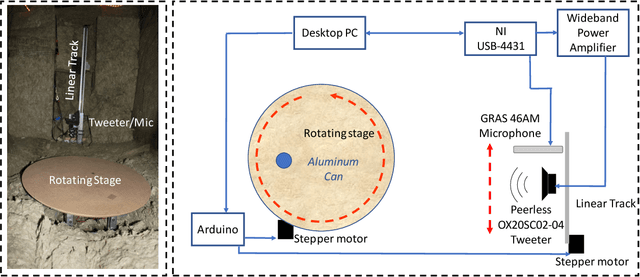

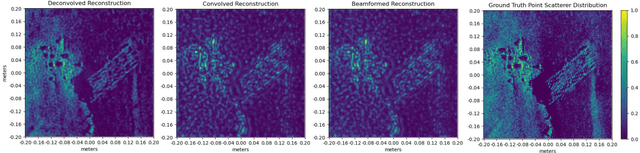

Abstract: Circular synthetic aperture sonars (CSAS) capture multiple observations of a scene to reconstruct high-resolution images. We can characterize resolution by modeling CSAS imaging as the convolution between a scene's underlying point scattering distribution and a system-dependent point spread function (PSF). The PSF is a function of the transmitted waveform's bandwidth and imposes a fixed degree of blurring on reconstructed imagery. In theory, deconvolution overcomes bandwidth limitations by reversing the PSF-induced blur and recovering the scene's scattering distribution. However, deconvolution is an ill-posed inverse problem that is sensitive to noise. We propose a self-supervised pipeline (one that does not require training data) that leverages an implicit neural representation (INR) for deconvolving CSAS images. We highlight the performance of our SAS INR pipeline, which we call SINR, by implementing existing deconvolution methods and comparing against them. Additionally, prior SAS deconvolution methods assume a spatially invariant PSF, which we demonstrate yields subpar performance in practice. We provide theory and methods to account for a spatially varying CSAS PSF, and demonstrate that doing so enables SINR to achieve superior deconvolution performance on simulated and real acoustic SAS data. We provide code to encourage reproducibility of research.
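The convolution model, and the reason deconvolution is ill-posed, can be illustrated with a 1D toy sketch. It assumes a spatially invariant Gaussian PSF as a stand-in for the bandwidth-limited CSAS PSF, circular (FFT-based) convolution, and additive Gaussian noise. It compares a naive inverse filter with classical Tikhonov-regularized deconvolution, not with SINR; all function names are hypothetical.

```python
import numpy as np


def gaussian_psf(n, sigma):
    """Circularly centered Gaussian kernel standing in for a bandlimited PSF (assumption)."""
    idx = np.arange(n)
    dist = np.minimum(idx, n - idx)  # circular distance to sample 0
    h = np.exp(-0.5 * (dist / sigma) ** 2)
    return h / h.sum()  # unit DC gain


def blur(x, H):
    """Spatially invariant model: image = PSF convolved with the scattering distribution."""
    return np.real(np.fft.ifft(H * np.fft.fft(x)))


def inverse_filter(y, H):
    """Naive deconvolution: divide by the PSF spectrum (amplifies noise where |H| is small)."""
    return np.real(np.fft.ifft(np.fft.fft(y) / H))


def tikhonov_deconv(y, H, lam):
    """Closed-form minimizer of ||h * x - y||^2 + lam * ||x||^2, computed per frequency bin."""
    Y = np.fft.fft(y)
    return np.real(np.fft.ifft(np.conj(H) * Y / (np.abs(H) ** 2 + lam)))


rng = np.random.default_rng(0)
n = 256
x_true = np.zeros(n)
x_true[[40, 120, 200]] = [1.0, 0.8, -0.6]  # sparse point scatterers (1D toy scene)
H = np.fft.fft(gaussian_psf(n, sigma=1.5))
y = blur(x_true, H) + 0.01 * rng.standard_normal(n)  # blurred, noisy observation


def rel_err(x_hat):
    """Relative L2 error against the known toy scene."""
    return np.linalg.norm(x_hat - x_true) / np.linalg.norm(x_true)


naive_err = rel_err(inverse_filter(y, H))
reg_err = rel_err(tikhonov_deconv(y, H, lam=1e-2))
print(f"inverse filter: {naive_err:.2e}   Tikhonov: {reg_err:.2e}")
```

With these settings the inverse filter's error should be orders of magnitude larger than the regularized one, because dividing by near-zero values of the PSF spectrum blows up the noise. A spatially varying PSF would replace the single `H` with a position-dependent kernel, which this sketch does not model.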

Implicit Neural Representations for Deconvolving SAS Images

Dec 16, 2021

Abstract: Synthetic aperture sonar (SAS) image resolution is constrained by waveform bandwidth and array geometry. Specifically, the waveform bandwidth determines a point spread function (PSF) that blurs the locations of point scatterers in the scene. In theory, deconvolving the reconstructed SAS image with the scene PSF restores the original distribution of scatterers and yields sharper reconstructions. However, deconvolution is an ill-posed operation that is highly sensitive to noise. In this work, we leverage implicit neural representations (INRs), which have been shown to be strong priors for natural images, to deconvolve SAS images. Importantly, our method does not require training data, as we perform deconvolution through an analysis-by-synthesis optimization in a self-supervised fashion. We validate our method on simulated SAS data created with a point scattering model and on real data captured with an in-air circular SAS. This work is an important first step towards applying neural networks to SAS image deconvolution.
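The analysis-by-synthesis loop (render a candidate scene through the forward model, compare it with the measured image, update the scene) can be sketched with plain proximal gradient descent. This sketch swaps the INR for a pixel-wise scene estimate with an L1 sparsity prior (ISTA), so it shows the shape of the self-supervised optimization rather than the paper's network; the Gaussian PSF, noise level, and parameter values are all assumptions, and `analysis_by_synthesis` is a hypothetical name.

```python
import numpy as np


def soft_threshold(v, t):
    """Proximal operator of t * ||v||_1 (shrinks every entry toward zero by t)."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)


def analysis_by_synthesis(y, H, lam=0.01, step=0.9, iters=300):
    """ISTA on 0.5 * ||h * x - y||^2 + lam * ||x||_1 (pixel-wise scene in place of an INR).

    step <= 1 / max|H|^2 guarantees the objective never increases.
    """
    def synthesize(x):  # forward model: blur the candidate scene with the PSF
        return np.real(np.fft.ifft(H * np.fft.fft(x)))

    def adjoint(r):  # transpose of the forward model (correlation with the PSF)
        return np.real(np.fft.ifft(np.conj(H) * np.fft.fft(r)))

    x = np.zeros_like(y)
    history = []
    for _ in range(iters):
        residual = synthesize(x) - y  # compare the rendered image with the measurement
        history.append(0.5 * residual @ residual + lam * np.abs(x).sum())
        x = soft_threshold(x - step * adjoint(residual), step * lam)
    return x, history


# Assumed toy setup: Gaussian PSF (sigma = 1.5 samples), three point scatterers, light noise
n = 256
dist = np.minimum(np.arange(n), n - np.arange(n))
psf = np.exp(-0.5 * (dist / 1.5) ** 2)
H = np.fft.fft(psf / psf.sum())
x_true = np.zeros(n)
x_true[[40, 120, 200]] = [1.0, 0.8, -0.6]
rng = np.random.default_rng(0)
y = np.real(np.fft.ifft(H * np.fft.fft(x_true))) + 0.01 * rng.standard_normal(n)

x_hat, history = analysis_by_synthesis(y, H)
print(f"objective: {history[0]:.4f} -> {history[-1]:.4f}")
```

In an INR-based version, `x` would instead be produced by a coordinate network and the update would act on the network weights through automatic differentiation; the loop stays self-supervised because the only target is the measured image `y`.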
