Isaac Gerg

Deep Autofocus for Synthetic Aperture Sonar

Oct 29, 2020

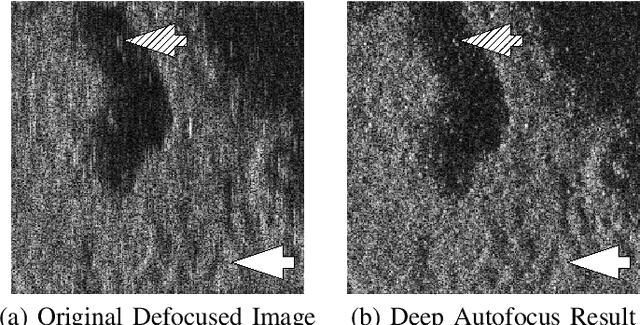

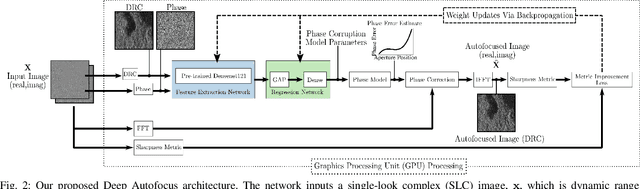

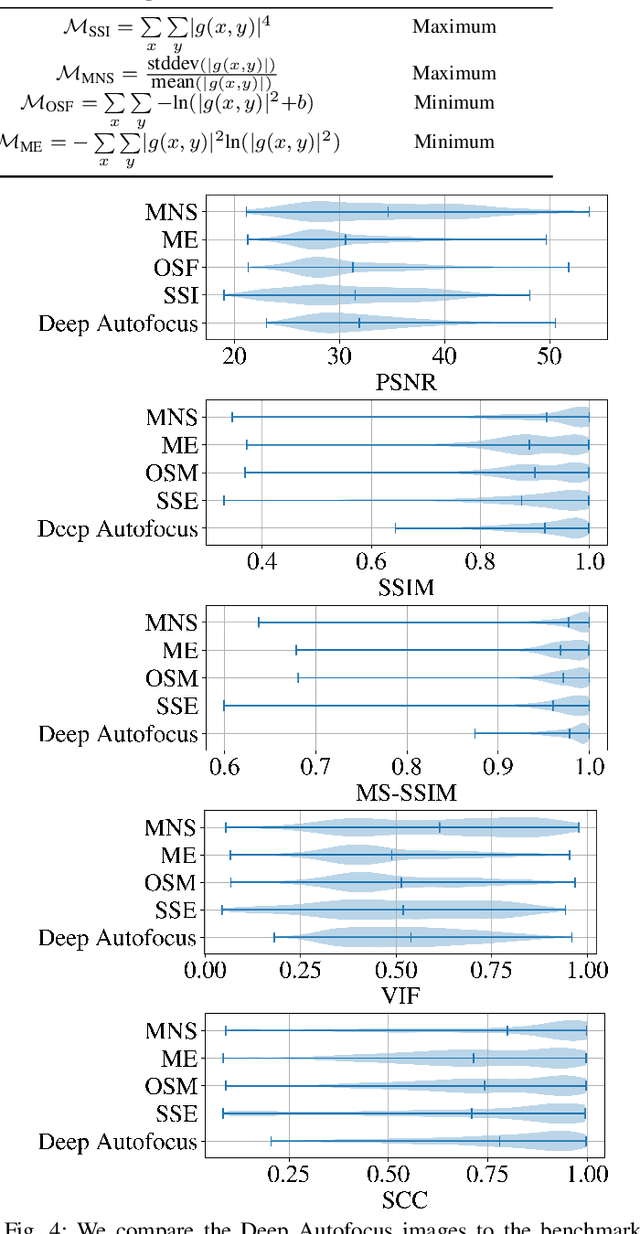

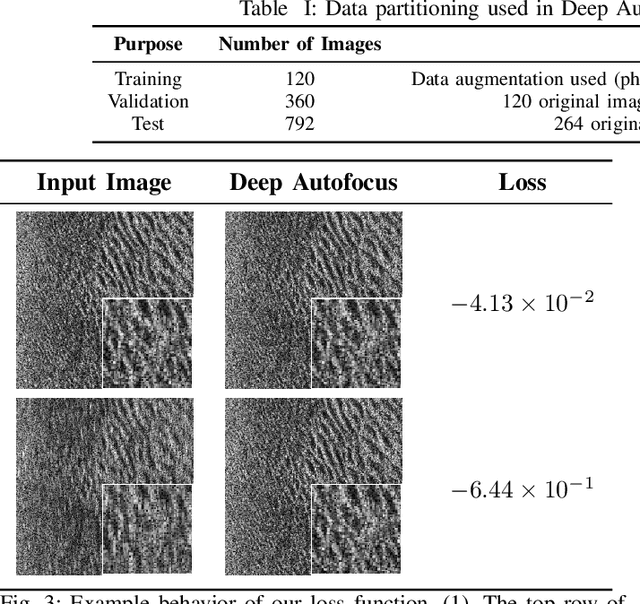

Abstract: Synthetic aperture sonar (SAS) requires precise positional and environmental information to produce well-focused output during the image reconstruction step. However, errors in these measurements are commonly present, resulting in defocused imagery. To overcome these issues, an *autofocus* algorithm is employed as a post-processing step after image reconstruction to improve image quality using the image content itself. These algorithms are usually iterative and metric-based in that they seek to optimize an image sharpness metric. In this letter, we demonstrate the potential of machine learning, specifically deep learning, to address the autofocus problem. We formulate the problem as a self-supervised phase error estimation task using a deep network we call Deep Autofocus. Our formulation has the advantages of being non-iterative (and thus fast) and of not requiring ground-truth focused-defocused image pairs, as often required by other deblurring deep learning methods. We compare our technique against a set of common sharpness metrics optimized using gradient descent over a real-world dataset. Our results demonstrate that Deep Autofocus can produce imagery that is perceptually as good as benchmark iterative techniques but at a substantially lower computational cost. We conclude that our proposed Deep Autofocus can provide a more favorable cost-quality trade-off than state-of-the-art alternatives, with significant potential for future research.
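To make the terms "phase error" and "sharpness metric" concrete, here is a minimal 1-D sketch. It is not the authors' implementation: it assumes the phase error acts multiplicatively on the signal's spectrum, and the quadratic error profile, the helper names (`dft`, `apply_phase`, `sharpness`, `centered`), and the sum-of-squared-intensity metric are illustrative choices only.

```python
import cmath
import math


def dft(x, inverse=False):
    """Naive O(n^2) discrete Fourier transform (adequate for a tiny demo)."""
    n = len(x)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        acc = 0j
        for t in range(n):
            acc += x[t] * cmath.exp(sign * 2j * math.pi * k * t / n)
        out.append(acc / n if inverse else acc)
    return out


def apply_phase(signal, phase):
    """Multiply the spectrum of `signal` by exp(j * phase[k]) and transform back."""
    spectrum = dft(signal)
    shifted = [s * cmath.exp(1j * p) for s, p in zip(spectrum, phase)]
    return dft(shifted, inverse=True)


def sharpness(signal):
    """Sum of squared intensities: larger when energy is concentrated."""
    return sum(abs(v) ** 4 for v in signal)


n = 32
focused = [0j] * n
focused[n // 2] = 1 + 0j  # a single point scatterer


def centered(k):
    """Map DFT bin k to a normalized frequency in [-1, 1)."""
    return ((k + n // 2) % n - n // 2) / (n / 2)


# Hypothetical quadratic phase error (assumption, for illustration only).
error = [4.0 * centered(k) ** 2 for k in range(n)]

defocused = apply_phase(focused, error)
# If the error were estimated perfectly, applying its negative refocuses.
refocused = apply_phase(defocused, [-p for p in error])

print(sharpness(focused), sharpness(defocused), sharpness(refocused))
```

In this toy setting an iterative method would search for the phase that maximizes `sharpness`, whereas a learned estimator would predict the phase in a single pass.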

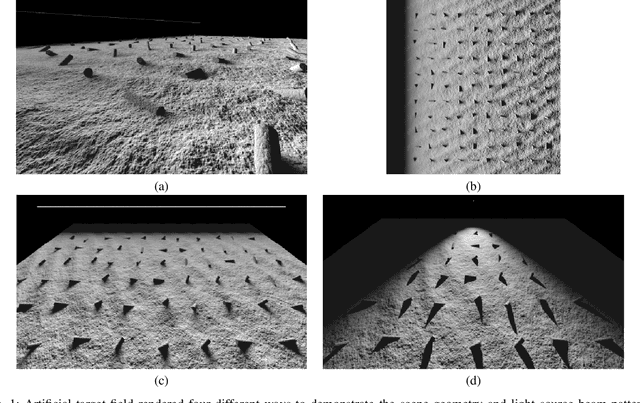

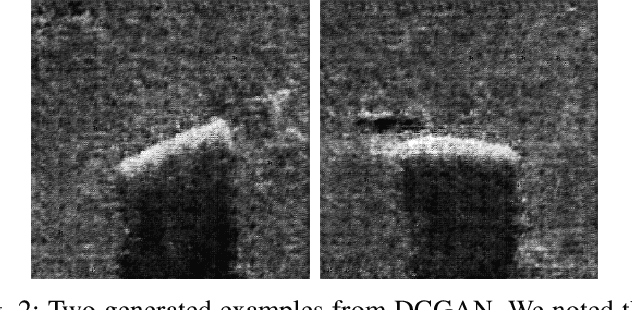

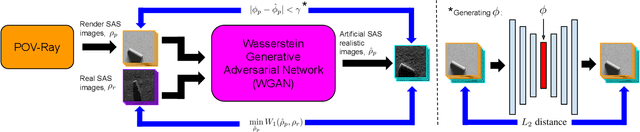

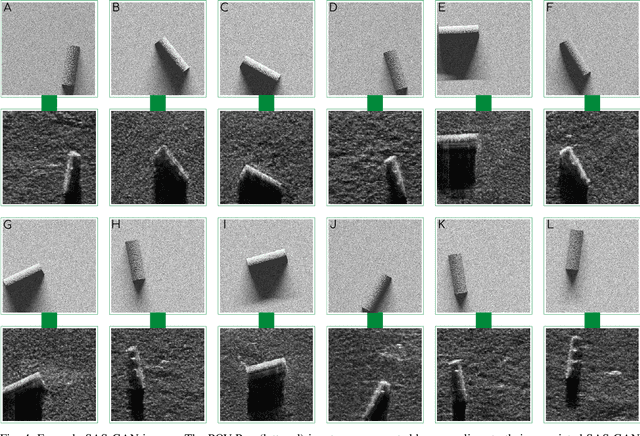

Coupling Rendering and Generative Adversarial Networks for Artificial SAS Image Generation

Oct 02, 2019

Abstract: Acquisition of Synthetic Aperture Sonar (SAS) datasets is bottlenecked by the costly deployment of SAS imaging systems, and even when data acquisition is possible, the data is often skewed towards containing barren seafloor rather than objects of interest. We present a novel pipeline, called SAS GAN, which couples an optical renderer with a generative adversarial network (GAN) to synthesize realistic SAS images of targets on the seafloor. This coupling enables high levels of SAS image realism while enabling control over image geometry and parameters. We demonstrate qualitative results by presenting examples of images created with our pipeline. We also present quantitative results through the use of t-SNE and the Fréchet Inception Distance to argue that our generated SAS imagery potentially augments SAS datasets more effectively than an off-the-shelf GAN.
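For reference, the Fréchet Inception Distance mentioned above is conventionally defined as the Fréchet distance between two Gaussians fitted to network features of real and generated images. The symbols below are notation introduced here, not taken from the abstract: $\mu_r, \Sigma_r$ are the feature mean and covariance for real images, and $\mu_g, \Sigma_g$ those for generated images.

$$
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2\,(\Sigma_r \Sigma_g)^{1/2} \right)
$$

Lower values indicate that the generated feature distribution is closer to the real one.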

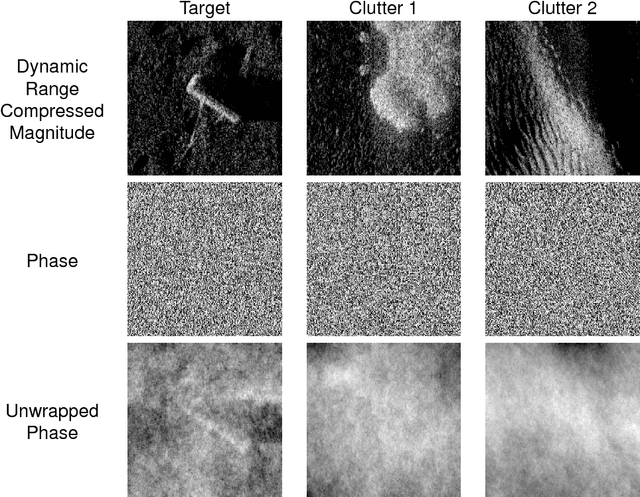

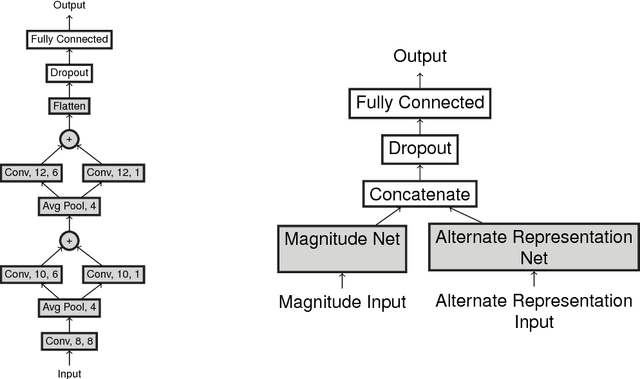

Additional Representations for Improving Synthetic Aperture Sonar Classification Using Convolutional Neural Networks

Oct 15, 2018

Abstract: Object classification in synthetic aperture sonar (SAS) imagery is usually a data-starved and class-imbalanced problem. There are few objects of interest present among much benign seafloor. Despite these problems, current classification techniques discard a large portion of the collected SAS information. In particular, a beamformed SAS image, which we call a single-look complex (SLC) image, contains complex pixels composed of real and imaginary parts. For human consumption, the SLC is converted to a magnitude-phase representation and the phase information is discarded. Even more problematic, the magnitude information usually exhibits a large dynamic range (>80 dB) and must be dynamic-range compressed for human display. Often it is this dynamic-range-compressed representation, originally designed for human consumption, which is fed into a classifier. Consequently, the classification process is entirely devoid of the phase information. In this work, we show improvements in classification performance using the phase information from the SLC as well as information from an alternate source: photographs. We perform statistical testing to demonstrate the validity of our results.
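The conversion described above can be sketched for a single complex pixel as follows. This is an illustrative assumption, not the paper's processing chain: the log-and-clip compression, the 40 dB display window, and the function names `magnitude_phase` and `compress_db` are all hypothetical choices.

```python
import cmath
import math


def magnitude_phase(pixel):
    """Split a complex SLC pixel into (magnitude, phase in radians)."""
    return abs(pixel), cmath.phase(pixel)


def compress_db(magnitude, dynamic_range_db=40.0, peak=1.0):
    """Log-compress a magnitude into [0, 1].

    Values more than `dynamic_range_db` below `peak` are clipped to 0,
    and values at or above `peak` map to 1.
    """
    floor = peak * 10 ** (-dynamic_range_db / 20.0)
    db = 20.0 * math.log10(max(magnitude, floor) / peak)
    return min(max(1.0 + db / dynamic_range_db, 0.0), 1.0)


pixel = 3 + 4j
mag, phase = magnitude_phase(pixel)
display_value = compress_db(mag, peak=5.0)  # brightest pixel maps to 1.0

# A classifier fed only `display_value` never sees `phase`.
print(mag, phase, display_value)
```

Keeping `phase` (or the raw real and imaginary parts) as an extra input channel is one way to retain the information that a display-oriented representation throws away.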

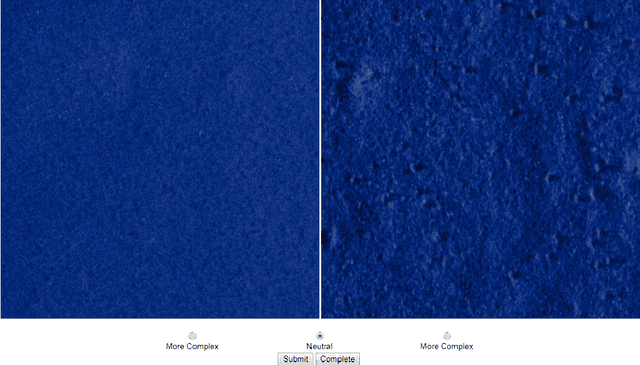

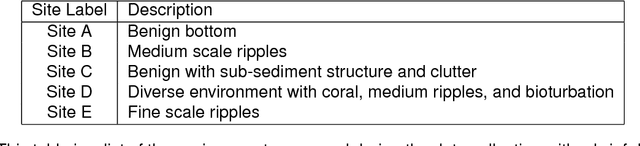

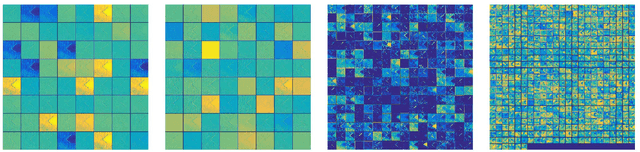

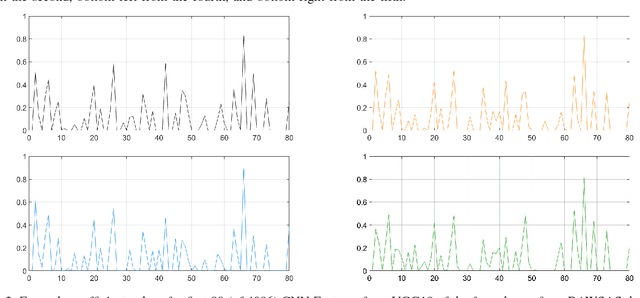

Measuring Human Assessed Complexity in Synthetic Aperture Sonar Imagery Using the Elo Rating System

Aug 15, 2018

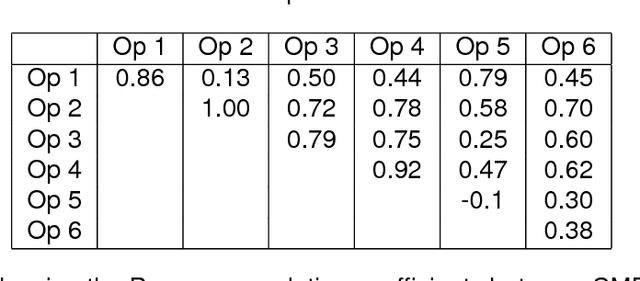

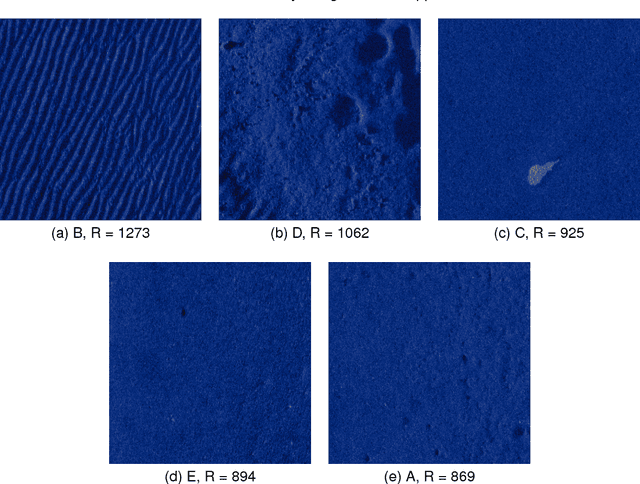

Abstract: Performance of automatic target recognition from synthetic aperture sonar data is heavily dependent on the complexity of the beamformed imagery. Several mechanisms can contribute to this, including unwanted vehicle dynamics, the bathymetry of the scene, and the presence of natural and manmade clutter. To understand the impact of environmental complexity on image perception, researchers have taken approaches rooted in information theory or heuristics. Despite these efforts, a quantitative measure for complexity has not been related to the phenomenology from which it is derived. By using subject matter experts (SMEs), we derive a complexity metric for a set of imagery which accounts for the underlying phenomenology. The goal of this work is to develop an understanding of how several common information-theoretic and heuristic measures are related to the SME-perceived complexity in synthetic aperture sonar imagery. To achieve this, an ensemble of 10-meter x 10-meter images was cropped from a high-frequency SAS data set that spans multiple environments. The SMEs were presented with pairs of images from which they could rate the relative image complexity. These comparisons were then converted into the desired sequential ranking using a method first developed by A. Elo for establishing rankings of chess players. The Elo method produced a plausible rank ordering across the broad dataset. The heuristic and information-theoretic metrics were then compared to the image rank from which they were derived. The metrics with the highest degree of correlation were those relating to spatial information, e.g., variations in pixel intensity, with an R-squared value of approximately 0.9. However, this agreement was dependent on the scale from which the spatial variation was measured. Results will also be presented for many other measures including lacunarity, image compression, and entropy.
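A minimal sketch of how pairwise judgments can be turned into a ranking with standard Elo updates is shown below. The image names, the starting rating of 1500, the K-factor of 32, and the number of passes are hypothetical; the abstract does not state the parameters the authors used.

```python
def expected_score(r_a, r_b):
    """Standard Elo expected score of A against B."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400.0))


def update(ratings, a, b, score_a, k=32.0):
    """Update ratings in place; score_a is 1.0 if A was judged more complex."""
    delta = k * (score_a - expected_score(ratings[a], ratings[b]))
    ratings[a] += delta
    ratings[b] -= delta


# Hypothetical images, all starting from the same rating.
ratings = {"img_sand": 1500.0, "img_ripples": 1500.0, "img_rocks": 1500.0}

# Each tuple is (judged more complex, judged less complex).
comparisons = [
    ("img_rocks", "img_ripples"),
    ("img_ripples", "img_sand"),
    ("img_rocks", "img_sand"),
]

for _ in range(10):  # replay the judgments several times
    for more_complex, less_complex in comparisons:
        update(ratings, more_complex, less_complex, 1.0)

ranking = sorted(ratings, key=ratings.get, reverse=True)
print(ranking)
```

Because each update adds to one rating exactly what it subtracts from the other, the total rating is conserved and only the relative ordering carries meaning.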

Bridging the Gap: Simultaneous Fine Tuning for Data Re-Balancing

Jan 08, 2018

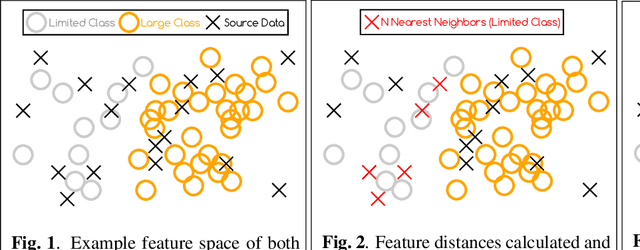

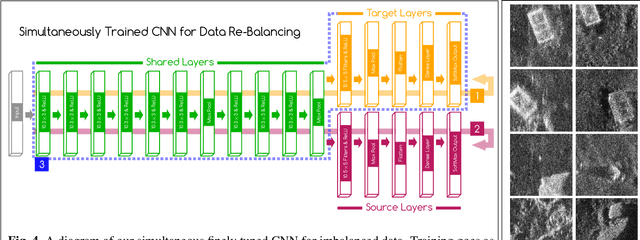

Abstract: There are many real-world classification problems wherein the issue of data imbalance (the case when a data set contains substantially more samples for one/many classes than the rest) is unavoidable. While under-sampling the problematic classes is a common solution, this is not a compelling option when the large data class is itself diverse and/or the limited data class is especially small. We suggest a strategy based on recent work concerning limited data problems which utilizes a supplemental set of images with similar properties to the limited data class to aid in the training of a neural network. We show results for our model against other typical methods on a real-world synthetic aperture sonar data set. Code can be found at github.com/JohnMcKay/dataImbalance.

What's Mine is Yours: Pretrained CNNs for Limited Training Sonar ATR

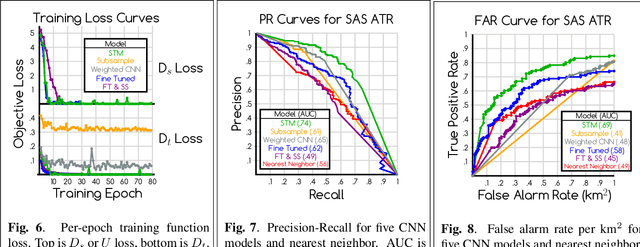

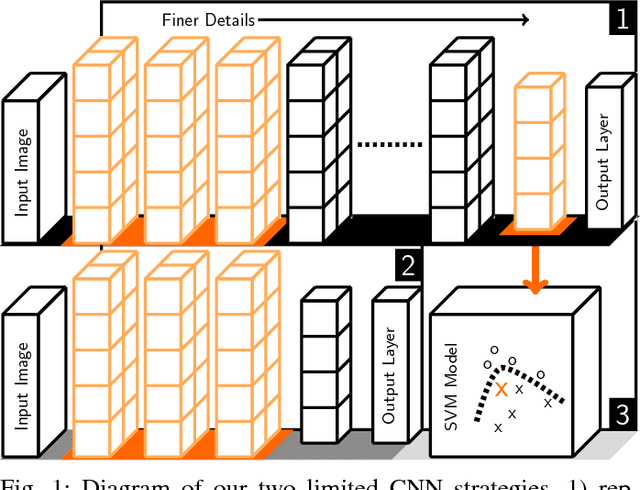

Jun 29, 2017

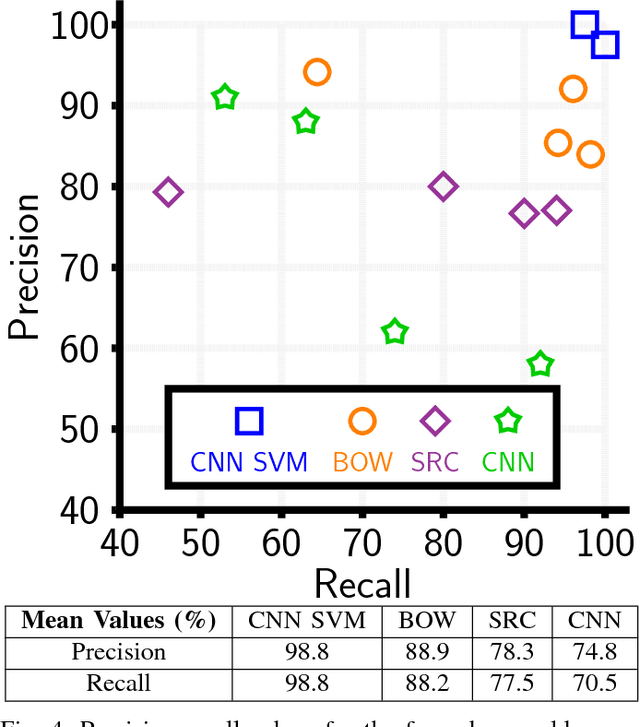

Abstract: Finding mines in Sonar imagery is a significant problem with a great deal of relevance for seafaring military and commercial endeavors. Unfortunately, the lack of enormous Sonar image data sets has prevented automatic target recognition (ATR) algorithms from achieving some of the same advances seen in other computer vision fields. Namely, the boom in convolutional neural nets (CNNs), which have been able to achieve incredible results - even surpassing human actors - has not been an easily feasible route for many practitioners of Sonar ATR. We demonstrate the power of one avenue for incorporating CNNs into Sonar ATR: transfer learning. We first show how well a straightforward, flexible CNN feature-extraction strategy can be used to obtain impressive if not state-of-the-art results. Secondly, we propose a way to utilize the powerful transfer learning approach towards multiple instance target detection and identification within a provided synthetic aperture Sonar data set.
