Daniel Bis

An Analysis on the Learning Rules of the Skip-Gram Model

Mar 18, 2020

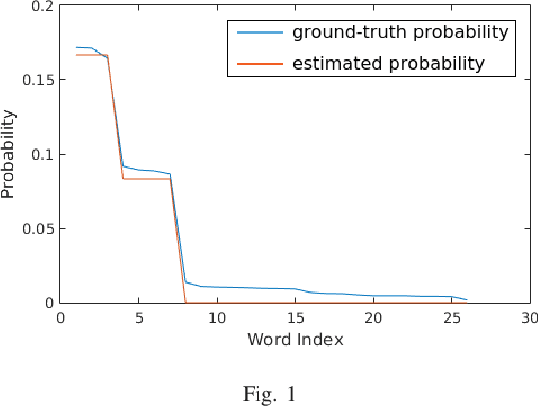

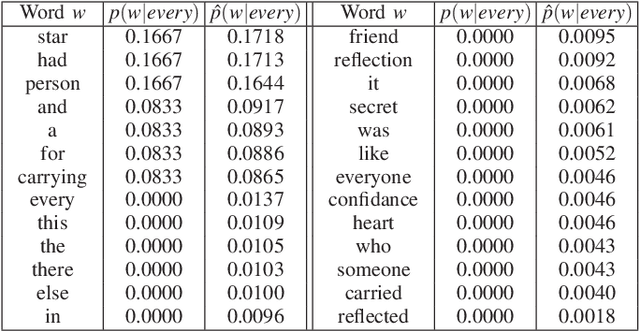

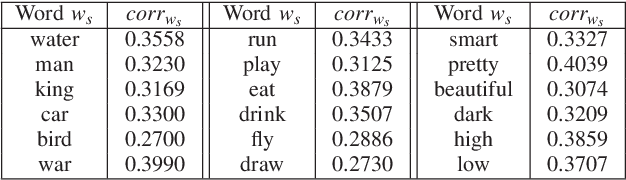

Abstract: To improve the generalization of the representations for natural language processing tasks, words are commonly represented using vectors, where distances among the vectors are related to the similarity of the words. While word2vec, the state-of-the-art implementation of the skip-gram model, is widely used and improves the performance of many natural language processing tasks, its mechanism is not yet well understood. In this work, we derive the learning rules for the skip-gram model and establish their close relationship to competitive learning. In addition, we provide the global optimal solution constraints for the skip-gram model and validate them by experimental results.

* Published at the 2019 International Joint Conference on Neural Networks

Enhancing Prediction Models for One-Year Mortality in Patients with Acute Myocardial Infarction and Post Myocardial Infarction Syndrome

Apr 28, 2019

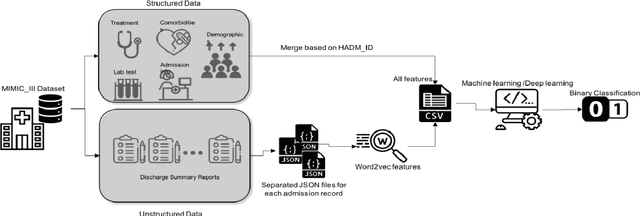

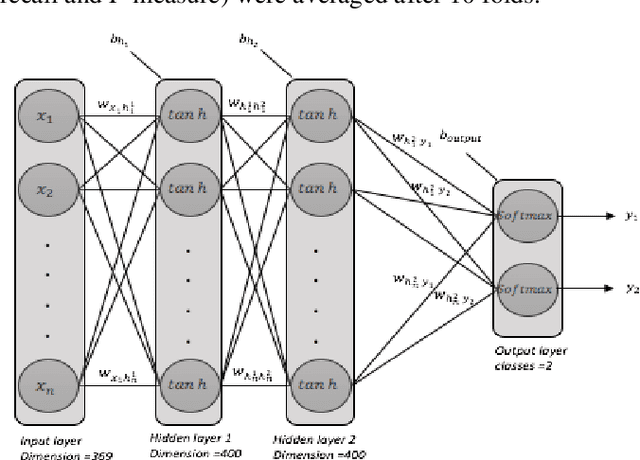

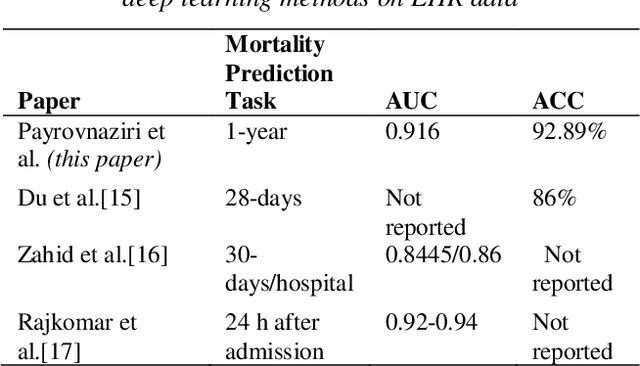

Abstract: Predicting the risk of mortality for patients with acute myocardial infarction (AMI) using electronic health record (EHR) data can help identify high-risk patients who might need more tailored care. In our previous work, we built computational models to predict one-year mortality of patients admitted to an intensive care unit (ICU) with AMI or post myocardial infarction syndrome. Our prior work only used the structured clinical data from MIMIC-III, a publicly available ICU clinical database. In this study, we enhanced our work by adding the word embedding features from free-text discharge summaries. Using a richer set of features resulted in significant improvement in the performance of our deep learning models. The average accuracy of our deep learning models was 92.89% and the average F-measure was 0.928. We further reported the impact of different combinations of features extracted from structured and/or unstructured data on the performance of the deep learning models.
