Daniel Arteaga

Room Impulse Response Generation Conditioned on Acoustic Parameters

Jul 16, 2025

Abstract: The generation of room impulse responses (RIRs) using deep neural networks has attracted growing research interest due to its applications in virtual and augmented reality, audio postproduction, and related fields. Most existing approaches condition generative models on physical descriptions of a room, such as its size, shape, and surface materials. However, this reliance on geometric information limits their usability in scenarios where the room layout is unknown or when perceptual realism (how a space sounds to a listener) is more important than strict physical accuracy. In this study, we propose an alternative strategy: conditioning RIR generation directly on a set of RIR acoustic parameters. These parameters include various measures of reverberation time and direct sound to reverberation ratio, both broadband and bandwise. By specifying how the space should sound instead of how it should look, our method enables more flexible and perceptually driven RIR generation. We explore both autoregressive and non-autoregressive generative models operating in the Descript Audio Codec domain, using either discrete token sequences or continuous embeddings. Specifically, we have selected four models to evaluate: an autoregressive transformer, the MaskGIT model, a flow matching model, and a classifier-based approach. Objective and subjective evaluations are performed to compare these methods with state-of-the-art alternatives. Results show that the proposed models match or outperform state-of-the-art alternatives, with the MaskGIT model achieving the best performance.
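The abstract above refers to reverberation time and direct sound to reverberation ratio as conditioning parameters. As a rough illustration only, the sketch below shows one common textbook way to estimate both from an RIR: Schroeder backward integration with a line fit for the reverberation time, and an energy ratio around the main peak for the direct-to-reverberant ratio. The function names, the -5 to -35 dB fit range, and the 2.5 ms direct-sound window are assumptions made here for illustration; the paper's exact parameter definitions are not given in this listing.

```python
import math


def energy_decay_curve_db(rir):
    """Schroeder backward integration: remaining energy at each sample, in dB."""
    energy = [x * x for x in rir]
    total = sum(energy)
    if total <= 0.0:
        raise ValueError("RIR has no energy")
    curve, remaining = [], total
    for e in energy:
        # Clamp so rounding residue near the tail never reaches log10(0).
        curve.append(10.0 * math.log10(max(remaining, 1e-300) / total))
        remaining -= e
    return curve


def reverberation_time(rir, fs, start_db=-5.0, end_db=-35.0):
    """Estimate RT60 in seconds from a least-squares line fitted to the decay
    curve between start_db and end_db, extrapolated to a 60 dB decay.
    The fit range is an illustrative assumption (a T30-style estimate)."""
    edc = energy_decay_curve_db(rir)
    points = [(n / fs, level) for n, level in enumerate(edc)
              if end_db <= level <= start_db]
    if len(points) < 2:
        raise ValueError("not enough decay range for a fit")
    mean_t = sum(t for t, _ in points) / len(points)
    mean_l = sum(l for _, l in points) / len(points)
    slope = (sum((t - mean_t) * (l - mean_l) for t, l in points)
             / sum((t - mean_t) ** 2 for t, _ in points))  # dB per second
    return -60.0 / slope


def direct_to_reverberant_ratio_db(rir, fs, window_s=0.0025):
    """Energy within +/- window_s of the main peak versus all other energy.
    The window length is an illustrative assumption."""
    peak = max(range(len(rir)), key=lambda n: abs(rir[n]))
    half = int(window_s * fs)
    lo, hi = max(0, peak - half), min(len(rir), peak + half + 1)
    direct = sum(x * x for x in rir[lo:hi])
    reverb = sum(x * x for x in rir[:lo]) + sum(x * x for x in rir[hi:])
    if reverb <= 0.0:
        raise ValueError("no reverberant energy outside the direct window")
    return 10.0 * math.log10(direct / reverb)
```

Bandwise values, which the abstract also mentions, would follow by band-pass filtering the RIR before calling these functions; that step is omitted here.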

Deep learning based spatial aliasing reduction in beamforming for audio capture

May 26, 2025

Abstract: Spatial aliasing affects spaced microphone arrays, causing directional ambiguity above certain frequencies and degrading the spatial and spectral accuracy of beamformers. Given the limitations of conventional signal processing and the scarcity of deep learning approaches to spatial aliasing mitigation, we propose a novel approach using a U-Net architecture to predict a signal-dependent de-aliasing filter, which reduces aliasing in conventional beamforming for spatial capture. Two types of multichannel filters are considered: one that treats the channels independently and one that models cross-channel dependencies. The proposed approach is evaluated in two common spatial capture scenarios: stereo and first-order Ambisonics. The results indicate a very significant improvement, both objective and perceptual, with respect to conventional beamforming. This work shows the potential of deep learning to reduce aliasing in beamforming, leading to improvements in multi-microphone setups.
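To give a sense of where the "certain frequencies" mentioned above lie, the following minimal sketch applies the standard half-wavelength rule for a pair of spaced microphones: aliasing can occur once the spacing exceeds half a wavelength. The function name and the 343 m/s speed of sound are assumptions made here, and the rule is a general textbook bound, not a result from the paper.

```python
SPEED_OF_SOUND = 343.0  # m/s, approximate value in air at about 20 °C (assumed)


def spatial_aliasing_frequency(spacing_m, c=SPEED_OF_SOUND):
    """Frequency in Hz above which two microphones `spacing_m` metres apart
    can show directional ambiguity (spacing larger than half a wavelength)."""
    if spacing_m <= 0:
        raise ValueError("spacing must be positive")
    return c / (2.0 * spacing_m)


if __name__ == "__main__":
    # Example spacings in metres, chosen only for illustration.
    for spacing in (0.02, 0.17, 0.50):
        print(f"{spacing:.2f} m -> {spatial_aliasing_frequency(spacing):.0f} Hz")
```

Wider spacings push the limit down into the range where most audio energy sits, which is why spaced capture setups are affected over much of the spectrum.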

Universal Spatial Audio Transcoder

May 07, 2024

Abstract: This paper addresses the challenges associated with both the conversion between different spatial audio formats and the decoding of a spatial audio format to a specific loudspeaker layout. Existing approaches often rely on layout remapping tools, which may not guarantee optimal conversion from a psychoacoustic perspective. To overcome these challenges, we present the Universal Spatial Audio Transcoder (USAT) method and its corresponding open source implementation. USAT generates an optimal decoder or transcoder for any input spatial audio format, adapting it to any output format or 2D/3D loudspeaker configuration. Drawing upon optimization techniques based on psychoacoustic principles, the algorithm maximizes the preservation of spatial information. We present examples of the decoding and transcoding of several audio formats, and show that the USAT approach is advantageous compared to the most common methods in the field.

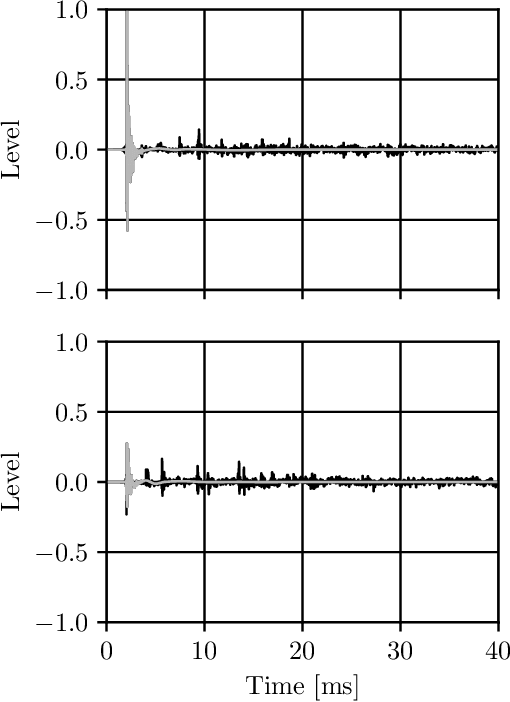

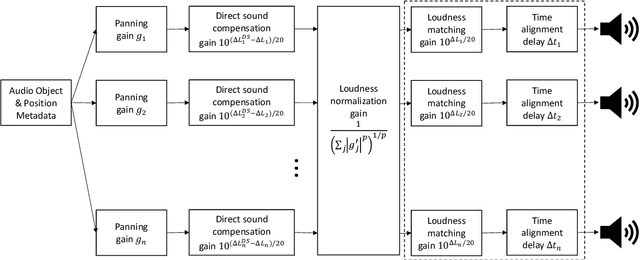

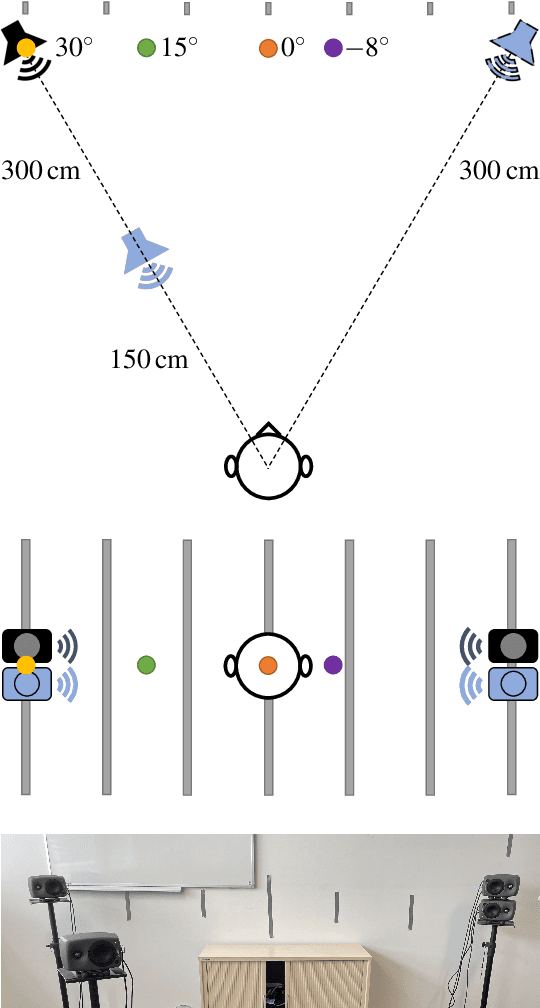

Improved Panning on Non-Equidistant Loudspeakers with Direct Sound Level Compensation

Oct 27, 2023

Abstract: Loudspeaker rendering techniques that create phantom sound sources often assume an equidistant loudspeaker layout. Typical home setups might not fulfill this condition as loudspeakers deviate from canonical positions, thus requiring a corresponding calibration. The standard approach is to compensate for delays and to match the loudness of each loudspeaker at the listener's location. It was found that a shift of the phantom image occurs when this calibration procedure is applied and one of a pair of loudspeakers is significantly closer to the listener than the other. In this paper, a novel approach to panning on non-equidistant loudspeaker layouts is presented whereby the panning position is governed by the direct sound and the perceived loudness is governed by the full impulse response. Subjective listening tests are presented that validate the approach and quantify the perceived effect of the compensation. In a setup where the standard calibration leads to an average error of 10 degrees, the proposed direct sound compensation largely returns the phantom source to its intended position.

* 10 pages. Accepted for presentation in AES Convention 155 (2023)
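As a simplified illustration of the calibration idea described in this abstract, the sketch below time-aligns loudspeakers at different distances and equalizes their direct sound level under a free-field inverse-distance assumption. The function name, the 343 m/s speed of sound, and the 1/r level model are assumptions made here; the paper's actual method, which separates direct sound level from full-response loudness, is not reproduced.

```python
SPEED_OF_SOUND = 343.0  # m/s, approximate value in air (assumed)


def align_loudspeakers(distances_m, c=SPEED_OF_SOUND):
    """Return (delays_s, gains) that align every loudspeaker with the farthest one.

    Closer loudspeakers are delayed so all wavefronts arrive together, and
    attenuated by distance / farthest_distance, which equalizes the direct
    sound level if level falls off as 1/r (free-field assumption).
    """
    if not distances_m or min(distances_m) <= 0:
        raise ValueError("distances must be positive")
    farthest = max(distances_m)
    delays_s = [(farthest - d) / c for d in distances_m]
    gains = [d / farthest for d in distances_m]
    return delays_s, gains


if __name__ == "__main__":
    # Hypothetical stereo pair: left at 2 m, right at 3 m from the listener.
    delays, gains = align_loudspeakers([2.0, 3.0])
    print([round(d * 1000, 2) for d in delays], [round(g, 3) for g in gains])
```

In a real room, reflections add to each loudspeaker's level at the listening position, so a gain derived from distance alone will generally differ from one that matches overall loudness.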

Mono-to-stereo through parametric stereo generation

Jun 26, 2023

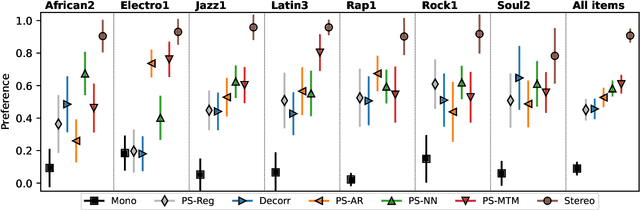

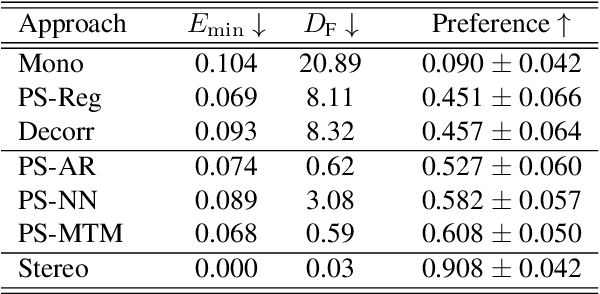

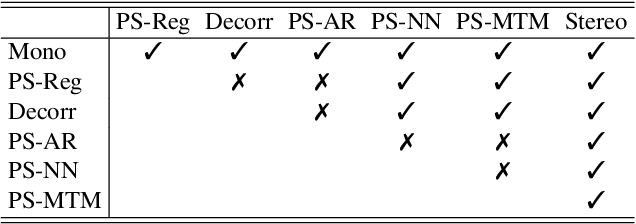

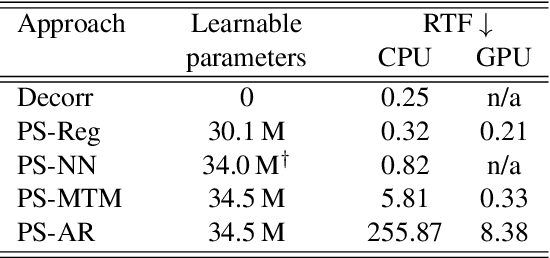

Abstract: Generating a stereophonic presentation from a monophonic audio signal is a challenging open task, especially if the goal is to obtain realistic spatial imaging with a specific panning of sound elements. In this work, we propose to convert mono to stereo by predicting parametric stereo (PS) parameters using both nearest neighbor and deep network approaches. In combination with PS, we also propose to model the task with generative approaches, which makes it possible to synthesize multiple, equally plausible stereo renditions from the same mono signal. To achieve this, we consider both autoregressive and masked token modelling approaches. We provide evidence that the proposed PS-based models outperform a competitive classical decorrelation baseline and that, within a PS prediction framework, modern generative models outshine equivalent non-generative counterparts. Overall, our work positions both PS and generative modelling as strong and appealing methodologies for mono-to-stereo upmixing. A discussion of the limitations of these approaches is also provided.

Upsampling layers for music source separation

Nov 23, 2021

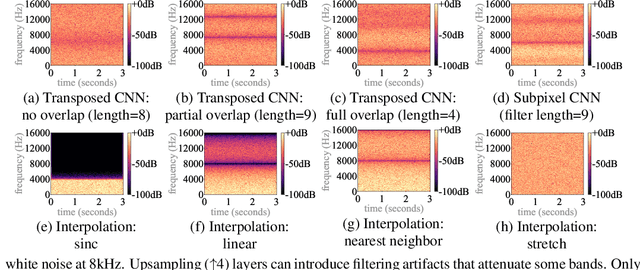

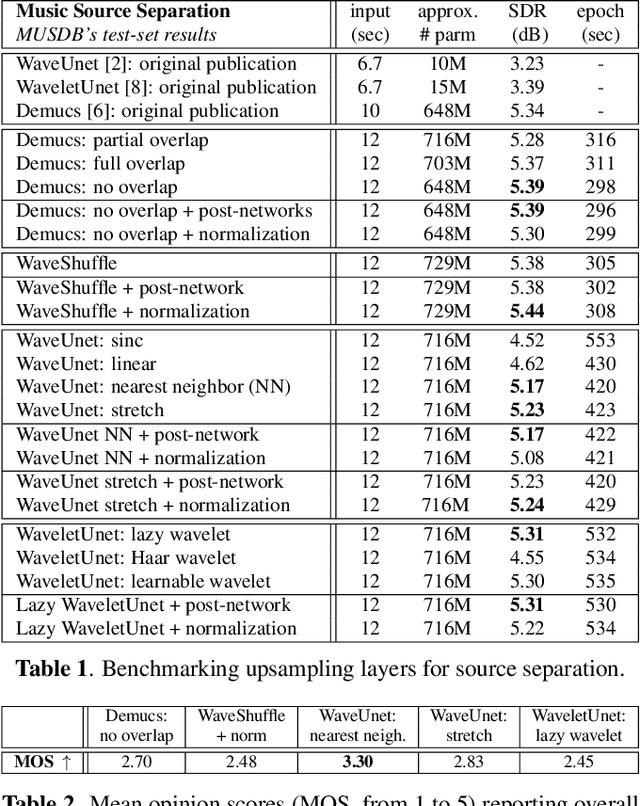

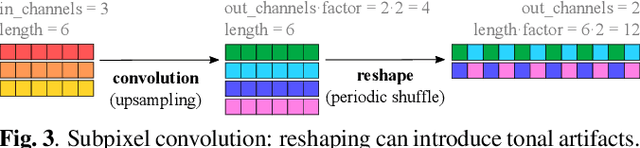

Abstract: Upsampling artifacts are caused by problematic upsampling layers and by the spectral replicas that emerge during upsampling. Depending on the upsampling layer used, such artifacts can be either tonal artifacts (additive high-frequency noise) or filtering artifacts (subtractive, attenuating some bands). In this work we investigate the practical implications of having upsampling artifacts in the resulting audio, by studying how different artifacts interact and assessing their impact on the models' performance. To that end, we benchmark a large set of upsampling layers for music source separation: different transposed and subpixel convolution setups, different interpolation upsamplers (including two novel layers based on stretch and sinc interpolation), and different wavelet-based upsamplers (including a novel learnable wavelet layer). Our results show that filtering artifacts, associated with interpolation upsamplers, are perceptually preferable, even if they tend to achieve worse objective scores.

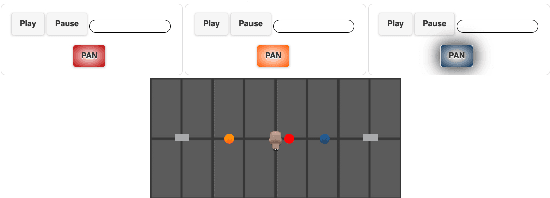

Multichannel-based learning for audio object extraction

Feb 11, 2021

Abstract: The current paradigm for creating and deploying immersive audio content is based on audio objects, which are composed of an audio track and position metadata. While rendering an object-based production into a multichannel mix is straightforward, the reverse process involves sound source separation and estimating the spatial trajectories of the extracted sources. Moreover, cinematic object-based productions are often composed of dozens of simultaneous audio objects, which poses a scalability challenge for audio object extraction. Here, we propose a novel deep learning approach to object extraction that learns from the multichannel renders of object-based productions, instead of directly learning from the audio objects themselves. This approach makes it possible to tackle the object scalability challenge and also offers the possibility to formulate the problem in a supervised or an unsupervised fashion. Since, to our knowledge, no other works have previously addressed this topic, we first define the task and propose an evaluation methodology, and then discuss under what circumstances our methods outperform the proposed baselines.
