Danhua Liu

Degradation Estimation Recurrent Neural Network with Local and Non-Local Priors for Compressive Spectral Imaging

Nov 15, 2023

Abstract: In coded aperture snapshot spectral imaging (CASSI) systems, a core problem is to recover the 3D hyperspectral image (HSI) from the 2D measurement. Current deep unfolding networks (DUNs) for HSI reconstruction mainly suffer from three issues. Firstly, in previous DUNs, the DNNs across different stages are unable to share the feature representations learned at different stages, leading to parameter sparsity, which in turn limits their reconstruction potential. Secondly, previous DUNs fail to estimate degradation-related parameters within a unified framework, including the degradation matrix in the data subproblem and the noise level in the prior subproblem. Consequently, the accuracy of solving either the data subproblem or the prior subproblem is compromised. Thirdly, exploiting both local and non-local priors for HSI reconstruction is crucial, and it remains a key issue to be addressed. In this paper, we first transform the DUN into a Recurrent Neural Network (RNN) by sharing parameters across stages, which allows the DNN in each stage to learn feature representations from different stages, enhancing the representativeness of the DUN. Secondly, we incorporate the Degradation Estimation Network into the RNN (DERNN), which simultaneously estimates the degradation matrix and the noise level by residual learning with reference to the sensing matrix. Thirdly, we propose a Local and Non-Local Transformer (LNLT) to effectively exploit both local and non-local priors in HSIs. By integrating the LNLT into the DERNN for solving the prior subproblem, we propose the DERNN-LNLT, which achieves state-of-the-art performance.
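The idea of reusing one set of parameters across all unfolding stages, alternating a data step and a prior step, can be illustrated with a minimal NumPy sketch. This is not the DERNN-LNLT implementation: it assumes a plain proximal-gradient iteration, uses soft-thresholding as a stand-in for the learned prior network, and treats a dense matrix `phi` as a stand-in for the CASSI sensing operator. The names `unfold_shared`, `soft_threshold`, `step`, and `tau` are hypothetical.

```python
import numpy as np


def soft_threshold(x, threshold):
    """Placeholder prior step (assumption: stands in for a learned denoiser)."""
    return np.sign(x) * np.maximum(np.abs(x) - threshold, 0.0)


def unfold_shared(y, phi, num_stages=50, step=None, tau=0.01):
    """Run `num_stages` unfolded stages that all reuse the same (step, tau).

    Sharing the parameters across stages is what makes the unrolled
    network behave like a recurrent one: every stage applies the same map.
    """
    if step is None:
        # 1 / (largest singular value)^2 keeps the gradient step stable.
        step = 1.0 / np.linalg.norm(phi, 2) ** 2
    x = np.zeros(phi.shape[1])
    for _ in range(num_stages):
        # Data subproblem: one gradient step on 0.5 * ||phi @ x - y||^2.
        x = x - step * (phi.T @ (phi @ x - y))
        # Prior subproblem: the same shared "denoiser" at every stage.
        x = soft_threshold(x, step * tau)
    return x
```

In a learned variant, `step` and the prior network's weights would be trained once and applied at every stage, rather than being fixed by hand as they are here.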

Knowledge-guided Semantic Computing Network

Sep 29, 2018

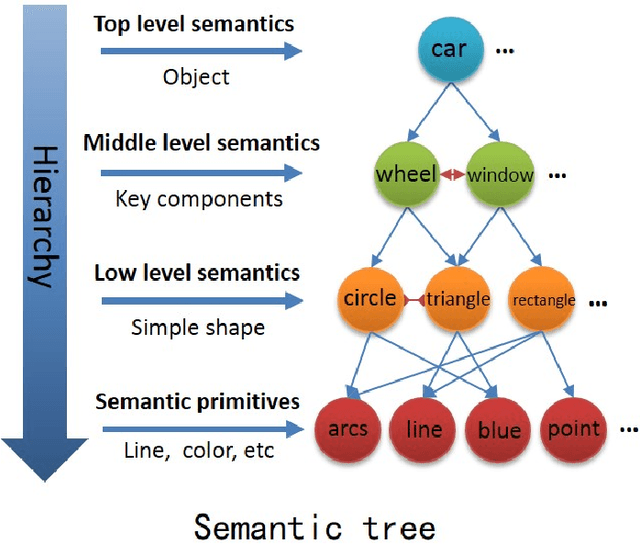

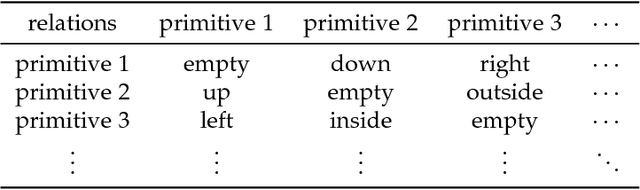

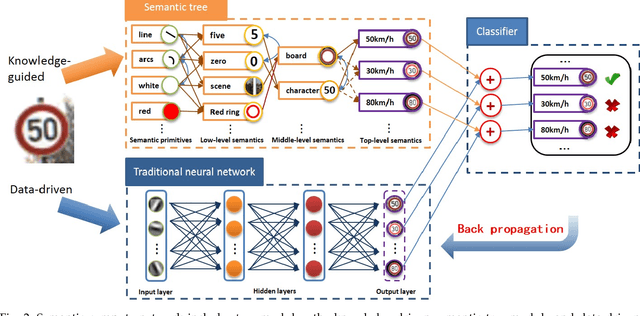

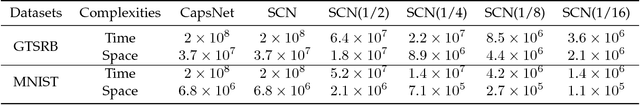

Abstract: Integrating human knowledge and experience into traditional neural networks is very useful for achieving faster learning, fewer training samples and better interpretability. However, because neural networks are opaque black-box models, it is very difficult to design their architecture, interpret their features and predict their performance. Inspired by the human visual cognition process, we propose a knowledge-guided semantic computing network that includes two modules: a knowledge-guided semantic tree and a data-driven neural network. The semantic tree is pre-defined to describe the spatial structural relations of different semantics, which corresponds directly to the tree-like description of objects based on human knowledge. Object recognition through the semantic tree needs only simple forward computation, without training. In addition, to enhance the recognition ability of the semantic tree with respect to diversity, randomness and variability, we use a traditional neural network to help the semantic tree learn some indescribable features. Training is needed only in this case. The experimental results on the MNIST and GTSRB datasets show that, compared with a traditional data-driven network, our proposed semantic computing network achieves better performance with fewer training samples and lower computational complexity. In particular, with the help of human knowledge, our model also has better adversarial robustness than traditional neural networks.
