Dang Huynh

Unveiling Comparative Sentiments in Vietnamese Product Reviews: A Sequential Classification Framework

Jan 02, 2024

Abstract: Comparative opinion mining is a specialized field of sentiment analysis that aims to identify and extract sentiments expressed comparatively. To address this task, we propose an approach that solves three sequential sub-tasks: (i) identifying comparative sentences, i.e., determining whether a sentence has a comparative meaning, (ii) extracting comparative elements, i.e., the comparison subjects, objects, aspects, and predicates, and (iii) classifying comparison types, which contributes to a deeper comprehension of user sentiments in Vietnamese product reviews. Our method ranked fifth at the Vietnamese Language and Speech Processing (VLSP) 2023 challenge on Comparative Opinion Mining (ComOM) from Vietnamese Product Reviews.

An Effective Leaf Recognition Using Convolutional Neural Networks Based Features

Aug 04, 2021

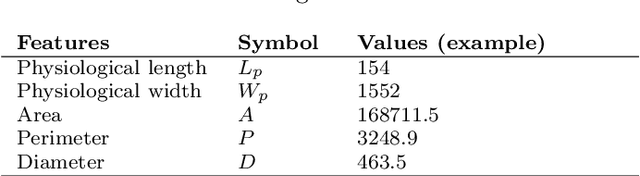

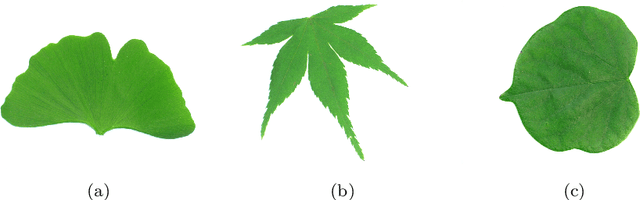

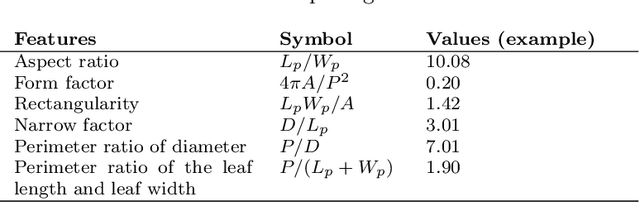

Abstract: The loss of plant habitats worldwide is a warning sign that calls for concerted efforts to conserve plant biodiversity. Plant species classification is therefore of crucial importance in addressing this environmental challenge. In recent years, there has been a considerable increase in the number of studies related to plant taxonomy. While some researchers try to improve recognition performance using novel approaches, others concentrate on computational optimization of their frameworks. In addition, a few studies focus on feature extraction to gain significantly in accuracy. In this paper, we propose an effective method for the leaf recognition problem. In our proposed approach, a leaf goes through pre-processing to extract its refined color image, vein image, xy-projection histogram, handcrafted shape and texture features, and Fourier descriptors. These attributes are then transformed into a better representation by neural network-based encoders before a support vector machine (SVM) model is used to classify different leaves. Overall, our approach achieves a state-of-the-art result on the Flavia leaf dataset, reaching an accuracy of 99.58% on test sets under random 10-fold cross-validation and surpassing previous methods. We also release our code (scripts are available at https://github.com/dinhvietcuong1996/LeafRecognition) to support the research community working on the leaf classification problem.
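As a rough illustration of one of the features named above, the sketch below computes an xy-projection histogram from a binary leaf mask. The abstract does not define the feature, so this assumes the common reading: count foreground pixels along each row and each column. The function names `xy_projection_histogram` and `normalize` and the toy mask are hypothetical and are not taken from the released scripts.

```python
def xy_projection_histogram(mask):
    """Count foreground pixels per row and per column of a binary mask.

    Assumption: `mask` is a rectangular list of rows holding 0 (background)
    or 1 (leaf). This is an illustrative definition, not the authors' code.
    """
    row_counts = [sum(row) for row in mask]         # projection onto the y-axis
    col_counts = [sum(col) for col in zip(*mask)]   # projection onto the x-axis
    return row_counts, col_counts


def normalize(counts):
    """Scale counts to sum to 1 so leaves of different sizes are comparable."""
    total = sum(counts)
    return [c / total for c in counts] if total else [0.0] * len(counts)


# Toy 4x5 mask of a diamond-like blob (hypothetical data).
toy_mask = [
    [0, 0, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 1, 0, 0],
]
rows, cols = xy_projection_histogram(toy_mask)
print(rows)  # [1, 3, 3, 1]
print(cols)  # [0, 2, 4, 2, 0]
```

In a pipeline like the one described, such histograms would be one of several inputs passed to an encoder before classification; how the authors bin or resize them is not stated here.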

Binarizing MobileNet via Evolution-based Searching

May 15, 2020

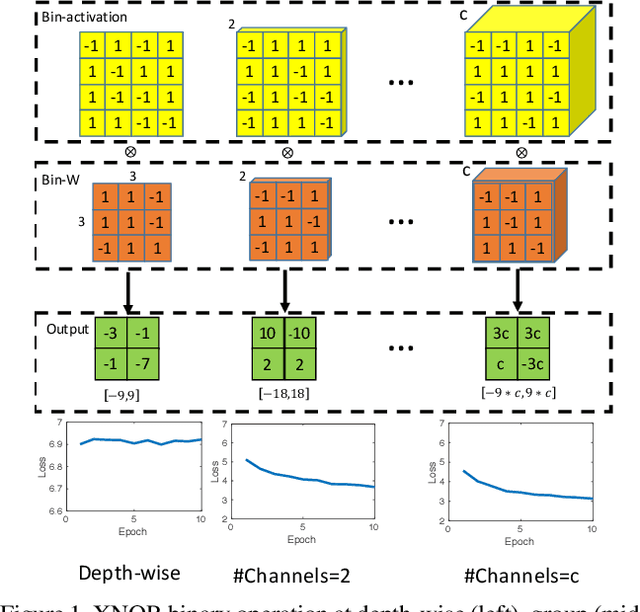

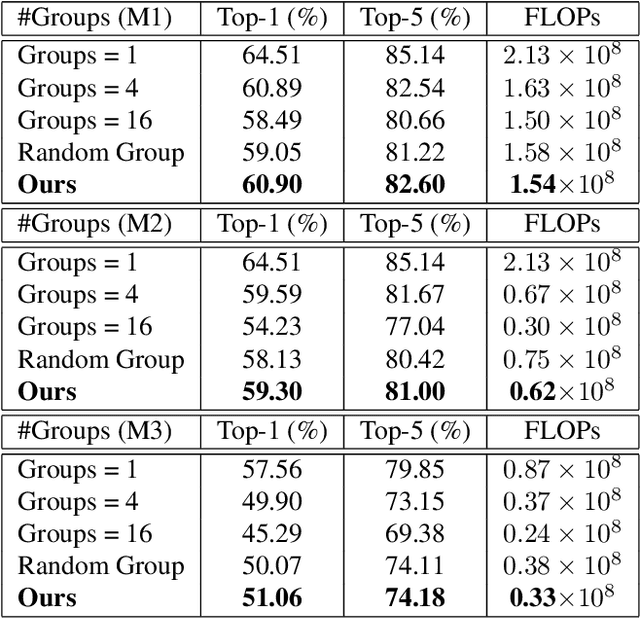

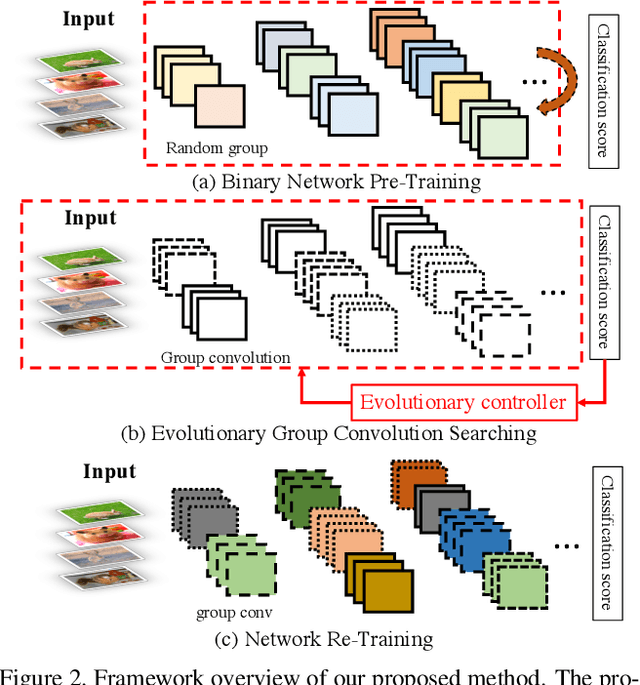

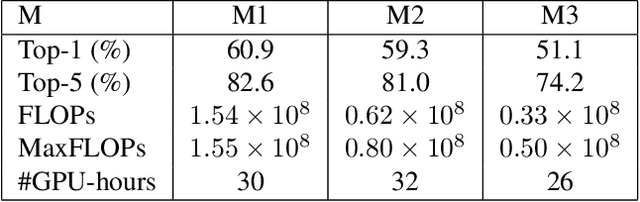

Abstract: Binary Neural Networks (BNNs), known to be one of the effectively compact network architectures, have achieved strong results in visual tasks. Designing efficient binary architectures is not trivial due to the binary nature of the network. In this paper, we propose the use of evolutionary search to facilitate the construction and training scheme when binarizing MobileNet, a compact network with depth-wise separable convolution. Inspired by one-shot architecture search frameworks, we exploit the idea of group convolution to design efficient 1-bit Convolutional Neural Networks (CNNs), assuming an approximately optimal trade-off between computational cost and model accuracy. Our objective is to come up with a tiny yet efficient binary neural architecture by exploring the best candidates for the group convolution while optimizing the model in terms of complexity and latency. The approach is threefold. First, we train strong baseline binary networks with a wide range of random group combinations at each convolutional layer. This set-up gives the binary neural networks the capability to preserve essential information through the layers. Second, to find a good set of hyperparameters for the group convolutions, we use evolutionary search, which supports the exploration of efficient 1-bit models. Lastly, these binary models are trained from scratch in the usual manner to obtain the final binary model. Various experiments on ImageNet show that, following our construction guideline, the final model achieves 60.09% Top-1 accuracy and outperforms the state-of-the-art CI-BCNN at the same computational cost.
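The second step above, searching over per-layer group settings, can be sketched as a generic evolutionary loop. This is a minimal illustration under stated assumptions, not the paper's procedure: the candidate group counts, the number of layers, the population settings, and especially `toy_fitness` (a made-up stand-in for evaluating a trained binary network) are all hypothetical.

```python
import random

GROUP_CHOICES = [1, 2, 4, 8]  # hypothetical candidate group counts per layer
NUM_LAYERS = 6                # hypothetical number of searchable conv layers


def toy_fitness(groups):
    """Made-up score: an accuracy proxy minus a cost penalty (not real data)."""
    cost = sum(1.0 / g for g in groups)  # more groups -> cheaper convolution
    accuracy_proxy = sum(1.0 / (1.0 + 0.1 * g) for g in groups)
    return accuracy_proxy - 0.5 * cost


def mutate(groups, rng, rate=0.2):
    """Resample each layer's group count with probability `rate`."""
    return [rng.choice(GROUP_CHOICES) if rng.random() < rate else g
            for g in groups]


def crossover(a, b, rng):
    """Pick each layer's group count from one of the two parents."""
    return [x if rng.random() < 0.5 else y for x, y in zip(a, b)]


def evolutionary_search(fitness, generations=20, population_size=16,
                        top_k=4, seed=0):
    """Keep the top_k candidates each generation and refill with offspring."""
    rng = random.Random(seed)
    population = [[rng.choice(GROUP_CHOICES) for _ in range(NUM_LAYERS)]
                  for _ in range(population_size)]
    for _ in range(generations):
        parents = sorted(population, key=fitness, reverse=True)[:top_k]
        children = []
        while len(children) < population_size - top_k:
            a, b = rng.sample(parents, 2)
            children.append(mutate(crossover(a, b, rng), rng))
        population = parents + children  # elitism: parents survive unchanged
    return max(population, key=fitness)


best = evolutionary_search(toy_fitness)
print(best, round(toy_fitness(best), 3))
```

In a real search the fitness call would evaluate a candidate configuration against accuracy and cost measurements, which is far more expensive than this toy function; the loop structure is the only part meant to carry over.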

MoBiNet: A Mobile Binary Network for Image Classification

Jul 31, 2019

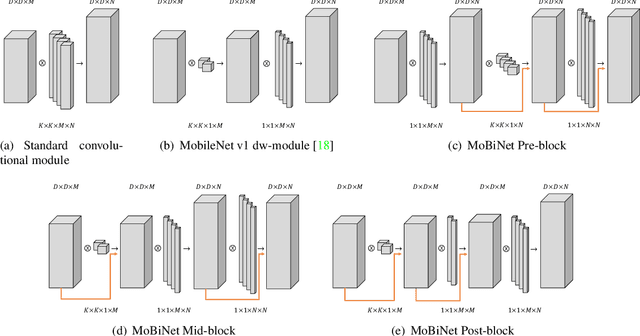

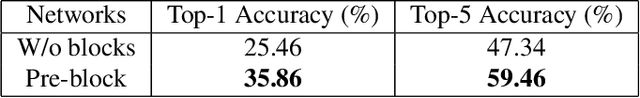

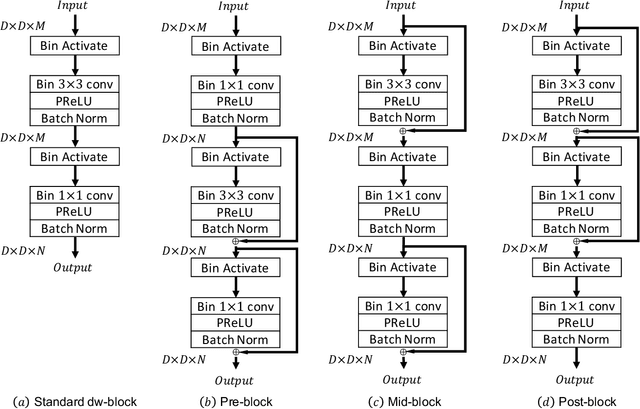

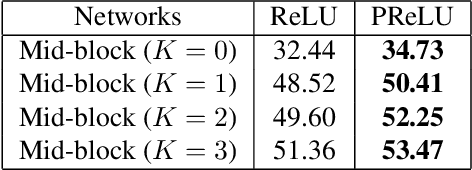

Abstract: MobileNet and Binary Neural Networks are two of the most widely used techniques for constructing deep learning models that perform a variety of tasks on mobile and embedded platforms. In this paper, we present a simple yet efficient scheme for binarizing both the activation function and the model weights of MobileNet. However, training a binary network from scratch with separable depth-wise and point-wise convolutions, as in MobileNet, is not trivial and is prone to divergence. To tackle this training issue, we propose a novel neural network architecture, namely MoBiNet (Mobile Binary Network), in which skip connections are used to prevent information loss and vanishing gradients, thus facilitating the training process. More importantly, while existing binary neural networks often rely on cumbersome backbones such as AlexNet, ResNet, and VGG-16 with float-type pre-trained weight initialization, our MoBiNet focuses on binarizing already-compressed neural networks like MobileNet without needing a pre-trained model to start with. Therefore, our proposal results in an effectively small model while keeping accuracy comparable to existing ones. Experiments on the ImageNet dataset show the potential of MoBiNet, as it achieves 54.40% top-1 accuracy and dramatically reduces the computational cost with binary operators.
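To make the cost saving from binary operators concrete, the sketch below shows the standard identity behind binary networks in general: once weights and activations are binarized to +1/-1 and packed into bits, a dot product reduces to an XNOR followed by a popcount. This is a generic illustration, not MoBiNet's implementation; the function names and sample values are hypothetical, and treating 0 as +1 in `binarize` is an arbitrary convention chosen here.

```python
def binarize(values):
    """Map real values to +1/-1 with the sign function (0 is treated as +1)."""
    return [1 if v >= 0 else -1 for v in values]


def pack_bits(signs):
    """Pack a +1/-1 vector into an int: bit i is 1 where signs[i] == +1."""
    word = 0
    for i, s in enumerate(signs):
        if s == 1:
            word |= 1 << i
    return word


def binary_dot(a_bits, b_bits, n):
    """Dot product of two packed +1/-1 vectors of length n.

    Matching signs contribute +1 and differing signs contribute -1,
    so the result is matches - (n - matches) = 2 * matches - n.
    """
    mask = (1 << n) - 1
    agree = ~(a_bits ^ b_bits) & mask   # XNOR: 1 where the signs match
    matches = bin(agree).count("1")     # popcount
    return 2 * matches - n


# Hypothetical real-valued weights and activations.
weights = [0.7, -1.2, 0.1, -0.3]
activations = [-0.5, -0.9, 2.0, 0.4]

w, a = binarize(weights), binarize(activations)
print(binary_dot(pack_bits(w), pack_bits(a), len(w)))  # 0
print(sum(x * y for x, y in zip(w, a)))                # 0, same value
```

On hardware, the XNOR and popcount operate on whole machine words at once, which is where the reduction in computational cost comes from.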
