Dan Shen

An Efficient Probabilistic Solution to Mapping Errors in LiDAR-Camera Fusion for Autonomous Vehicles

Nov 08, 2023

Abstract: LiDAR-camera fusion is one of the core processes in the perception system of current automated driving systems. The typical sensor fusion process consists of a series of coordinate transformation operations following system calibration. Although a significant amount of research has been done to improve fusion accuracy, inherent data mapping errors remain in practice, caused by system synchronization offsets, vehicle vibrations, small target sizes, and fast relative speeds. Moreover, increasingly complicated algorithms for improving fusion accuracy can overwhelm onboard computational resources, limiting their actual implementation. This study proposes a novel, low-cost probabilistic LiDAR-camera fusion method to alleviate these inherent mapping errors in scene reconstruction. By calculating shape similarity using KL-divergence and applying a RANSAC-regression-based trajectory smoother, the effects of LiDAR-camera mapping errors on object localization and distance estimation are minimized. Designed experiments are conducted to demonstrate the robustness and effectiveness of the proposed strategy.
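The abstract names two building blocks, KL-divergence as a shape-similarity score and RANSAC regression as a trajectory smoother, without giving their formulation. The following is a minimal Python sketch of both ideas, not the paper's implementation. It assumes shapes are summarized as histograms and that the smoothed quantity is object distance over time; the function names (`kl_divergence`, `ransac_line`), the threshold, and the sample data are all hypothetical.

```python
import math
import random


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two histograms of equal length (normalized internally)."""
    p_sum, q_sum = sum(p), sum(q)
    total = 0.0
    for pi, qi in zip(p, q):
        pi, qi = pi / p_sum, qi / q_sum
        if pi > 0:
            # eps guards against division by zero when q has an empty bin
            total += pi * math.log(pi / max(qi, eps))
    return total


def fit_line(points):
    """Ordinary least-squares fit of y = a * t + b."""
    n = len(points)
    mean_t = sum(t for t, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    var_t = sum((t - mean_t) ** 2 for t, _ in points)
    a = sum((t - mean_t) * (y - mean_y) for t, y in points) / var_t
    return a, mean_y - a * mean_t


def ransac_line(points, threshold=0.5, iterations=200, seed=0):
    """Fit a line while ignoring samples further than `threshold` from it."""
    rng = random.Random(seed)
    best_inliers = []
    for _ in range(iterations):
        (t1, y1), (t2, y2) = rng.sample(points, 2)
        if t1 == t2:
            continue
        a = (y2 - y1) / (t2 - t1)
        b = y1 - a * t1
        inliers = [(t, y) for t, y in points if abs(y - (a * t + b)) <= threshold]
        if len(inliers) > len(best_inliers):
            best_inliers = inliers
    # Refit on the largest consensus set found
    return fit_line(best_inliers), best_inliers


# Hypothetical distance track (metres) of an approaching object with two
# mis-mapped samples at t = 3 and t = 7.
track = [(float(t), 20.0 - float(t)) for t in range(10)]
track[3] = (3.0, 35.0)
track[7] = (7.0, 2.0)
(slope, intercept), inliers = ransac_line(track)
```

In this sketch, a lower KL value means the two histograms are more alike, and the RANSAC fit recovers the underlying distance trend while discarding the two outlying samples.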

Active Collision Avoidance System for E-Scooters in Pedestrian Environment

Nov 07, 2023

Abstract: In the dense fabric of urban areas, electric scooters have rapidly become a preferred mode of transportation. As they cater to modern mobility demands, they present significant safety challenges, especially when interacting with pedestrians. In general, e-scooters are suggested to be ridden in bike lanes/sidewalks or share the road with cars at the maximum speed of about 15-20 mph, which is more flexible and much faster than pedestrians and bicyclists. Accurate prediction of pedestrian movement, coupled with assistant motion control of scooters, is essential in minimizing collision risks and seamlessly integrating scooters in areas dense with pedestrians. Addressing these safety concerns, our research introduces a novel e-Scooter collision avoidance system (eCAS) with a method for predicting pedestrian trajectories, employing an advanced LSTM network integrated with a state refinement module. This proactive model is designed to ensure unobstructed movement in areas with substantial pedestrian traffic without collisions. Results are validated on two public datasets, ETH and UCY, providing encouraging outcomes. Our model demonstrated proficiency in anticipating pedestrian paths and augmented scooter path planning, allowing for heightened adaptability in densely populated locales. This study shows the potential of melding pedestrian trajectory prediction with scooter motion planning. With the ubiquity of electric scooters in urban environments, such advancements have become crucial to safeguard all participants in urban transit.
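To illustrate how a predicted pedestrian path can feed a scooter planner, here is a small Python sketch. It deliberately swaps the paper's LSTM predictor for a plain constant-velocity extrapolation, so it shows only the coupling between prediction and planning, not eCAS itself. The function names, the 1 m safety radius, and the sample coordinates are hypothetical.

```python
import math


def predict_constant_velocity(track, steps, dt=1.0):
    """Extrapolate future (x, y) positions from the last two observed points.

    Stand-in for a learned predictor such as an LSTM.
    """
    (x0, y0), (x1, y1) = track[-2], track[-1]
    vx, vy = (x1 - x0) / dt, (y1 - y0) / dt
    return [(x1 + vx * dt * k, y1 + vy * dt * k) for k in range(1, steps + 1)]


def min_separation(path_a, path_b):
    """Smallest distance between two paths sampled at the same time steps."""
    return min(math.dist(a, b) for a, b in zip(path_a, path_b))


def needs_avoidance(scooter_plan, pedestrian_track, safety_radius=1.0):
    """True if the planned scooter path comes within safety_radius of the
    predicted pedestrian path at any shared time step."""
    predicted = predict_constant_velocity(pedestrian_track, len(scooter_plan))
    return min_separation(scooter_plan, predicted) < safety_radius


# Pedestrian walking toward the origin along the y-axis at 1 m per step.
pedestrian = [(0.0, 3.0), (0.0, 2.0)]
crossing_plan = [(-1.0, 0.0), (0.0, 0.0), (1.0, 0.0)]
distant_plan = [(5.0, 0.0), (6.0, 0.0), (7.0, 0.0)]
```

Here `needs_avoidance(crossing_plan, pedestrian)` flags a conflict because both agents reach the origin at the second step, while the distant plan stays clear. A planner could use such a flag to slow down or re-route.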

A Wearable Data Collection System for Studying Micro-Level E-Scooter Behavior in Naturalistic Road Environment

Dec 22, 2022

Abstract: As one of the most popular micro-mobility options, e-scooters are spreading in hundreds of big cities and college towns in the US and worldwide. In the meantime, e-scooters are also posing new challenges to traffic safety. In general, e-scooters are suggested to be ridden in bike lanes/sidewalks or share the road with cars at the maximum speed of about 15-20 mph, which is more flexible and much faster than pedestrians and bicyclists. These features make e-scooters challenging for human drivers, pedestrians, vehicle active safety modules, and self-driving modules to see and interact with. To study this new mobility option and address the safety concerns of e-scooter riders and other road users, this paper proposes a wearable data collection system for investigating micro-level e-scooter motion behavior in a naturalistic road environment. An e-scooter-based data acquisition system has been developed by integrating LiDAR, cameras, and GPS using the Robot Operating System (ROS). Software frameworks are developed to support hardware interfaces, sensor operation, sensor synchronization, and data saving. The integrated system can collect data continuously for hours, meeting all requirements, including calibration accuracy and the capability to collect vehicle and e-scooter encounter data.
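Sensor synchronization is mentioned above without detail. One common approach, shown here as a plain-Python sketch rather than the system's actual ROS code, is to pair each LiDAR frame with the camera frame closest in time and drop pairs whose offset is too large. The function name `match_nearest`, the 50 ms tolerance, and the timestamps are assumptions for illustration.

```python
from bisect import bisect_left


def match_nearest(lidar_stamps, camera_stamps, max_offset=0.05):
    """Pair each LiDAR timestamp with the closest camera timestamp.

    Both lists must be sorted and in seconds. Pairs whose time offset
    exceeds max_offset are dropped.
    """
    pairs = []
    for t in lidar_stamps:
        i = bisect_left(camera_stamps, t)
        # The nearest camera stamp is either just before or just after t
        candidates = camera_stamps[max(i - 1, 0):i + 1]
        if not candidates:
            continue
        nearest = min(candidates, key=lambda c: abs(c - t))
        if abs(nearest - t) <= max_offset:
            pairs.append((t, nearest))
    return pairs


# Hypothetical timestamps: the last LiDAR frame has no camera frame nearby.
lidar = [0.0, 0.1, 0.2, 0.5]
camera = [0.01, 0.13, 0.19]
pairs = match_nearest(lidar, camera)
```

The binary search keeps the matching at O(n log m), which matters when recordings run for hours.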

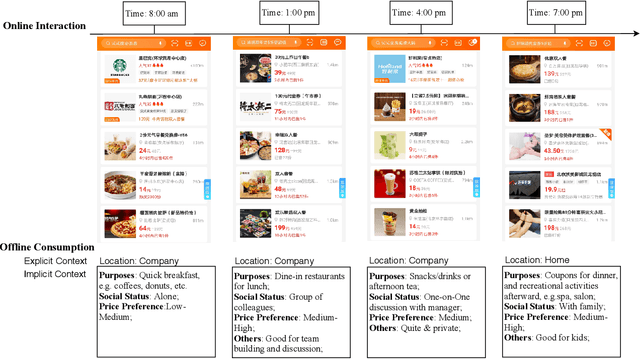

Infer Implicit Contexts in Real-time Online-to-Offline Recommendation

Jul 08, 2019

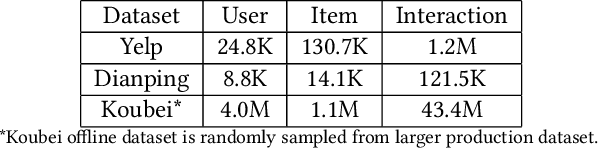

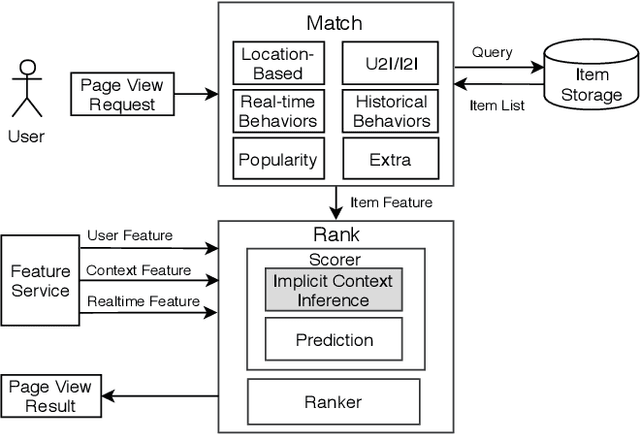

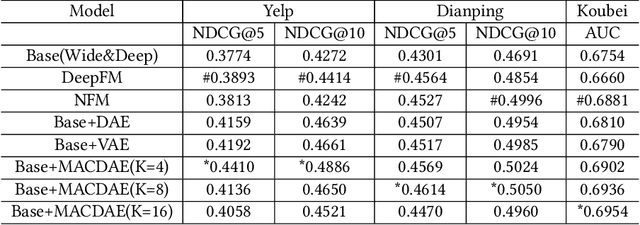

Abstract: Understanding users' context is essential for successful recommendations, especially for Online-to-Offline (O2O) recommendation on platforms such as Yelp, Groupon, and Koubei. Unlike traditional recommendation, where individual preference is mostly static, O2O recommendation should be dynamic to capture variation in users' purposes across time and location. However, precisely inferring users' real-time context information, especially implicit context, is extremely difficult and is a central challenge for O2O recommendation. In this paper, we propose a new approach, called Mixture Attentional Constrained Denoise AutoEncoder (MACDAE), to infer implicit contexts and consequently improve the quality of real-time O2O recommendation. In MACDAE, we first leverage the interaction among users, items, and explicit contexts to infer users' implicit contexts, then combine the learned implicit-context representation into an end-to-end model to make the recommendation. MACDAE works well in a real system. We conducted both offline and online evaluations of the proposed approach. Experiments on several real-world datasets (Yelp, Dianping, and Koubei) show that our approach achieves significant improvements over state-of-the-art methods. Furthermore, an online A/B test shows a 2.9% increase in click-through rate and a 5.6% improvement in conversion rate in real-world traffic. Our model has been deployed in the "Guess You Like" recommendation product in Koubei.
