Dale McConachie

Tracking Partially-Occluded Deformable Objects while Enforcing Geometric Constraints

Nov 01, 2020

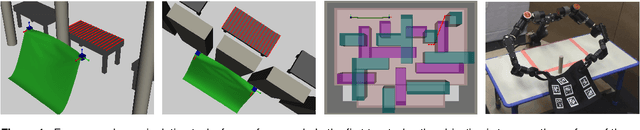

Abstract: To manipulate a deformable object, such as rope or cloth, in unstructured environments, robots need a way to estimate its current shape. However, tracking the shape of a deformable object can be challenging because of the object's high flexibility, (self-)occlusion, and interaction with obstacles. Building a high-fidelity physics simulation to aid in tracking is difficult for novel environments. Instead, we focus on tracking the object based on RGBD images, geometric motion estimates, and obstacles. Our key contributions over previous work in this vein are: 1) a better way to handle severe occlusion by using a motion model to regularize the tracking estimate; and 2) the formulation of *convex* geometric constraints, which allow us to prevent self-intersection and penetration into known obstacles via a post-processing step. These contributions allow us to outperform previous methods by a large margin in terms of accuracy in scenarios with severe occlusion and obstacles.
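To illustrate why convexity makes a post-processing step tractable, the sketch below projects a tracked point onto a single half-space, which is the simplest convex constraint and has a closed-form solution. Treating an obstacle surface as a half-space `dot(normal, x) >= offset` is an assumption made here for illustration; the abstract does not specify the form of the constraints, and the function name is hypothetical.

```python
def project_to_half_space(point, normal, offset):
    """Return the closest point to `point` that satisfies dot(normal, x) >= offset.

    Hypothetical helper: the half-space stands in for one convex
    non-penetration constraint (an assumption, not the paper's formulation).
    """
    norm_sq = sum(n * n for n in normal)
    if norm_sq == 0:
        raise ValueError("normal must be non-zero")
    # Positive violation means the point lies on the forbidden side.
    violation = offset - sum(n * p for n, p in zip(normal, point))
    if violation <= 0:
        return list(point)  # already feasible, leave the estimate unchanged
    scale = violation / norm_sq
    # Move along the normal by exactly the amount needed to reach the boundary.
    return [p + scale * n for p, n in zip(point, normal)]


# A tracked rope point estimated 0.5 below a table plane at z = 0.
corrected = project_to_half_space([0.2, 0.1, -0.5], [0.0, 0.0, 1.0], 0.0)
```

Because the feasible set is convex, the projection is unique, so the corrected estimate is the feasible point closest to the original one.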

Learning When to Trust a Dynamics Model for Planning in Reduced State Spaces

Jan 29, 2020

Abstract: When the dynamics of a system are difficult to model and/or time-consuming to evaluate, such as in deformable object manipulation tasks, motion planning algorithms struggle to find feasible plans efficiently. Such problems are often reduced to state spaces where the dynamics are straightforward to model and evaluate. However, such reductions usually discard information about the system for the benefit of computational efficiency, leading to cases where the true and reduced dynamics disagree on the result of an action. This paper presents a formulation for planning in reduced state spaces that uses a classifier to bias the planner away from state-action pairs that are not reliably feasible under the true dynamics. We present a method to generate and label data to train such a classifier, as well as an application of our framework to rope manipulation, where we use a Virtual Elastic Band (VEB) approximation to the true dynamics. Our experiments with rope manipulation demonstrate that the classifier significantly improves the success rate of our RRT-based planner in several difficult scenarios which are designed to cause the VEB to produce incorrect predictions in key parts of the environment.
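One simple way to bias a sampling-based planner away from unreliable state-action pairs is to accept each candidate tree extension with a probability given by the classifier's score. The sketch below shows that idea only; the acceptance rule, the `reliability` callable, and the function name are assumptions for illustration, not the paper's implementation.

```python
import random
from typing import Callable, Sequence

State = Sequence[float]
Action = Sequence[float]


def accept_transition(
    state: State,
    action: Action,
    reliability: Callable[[State, Action], float],
    rng: random.Random,
) -> bool:
    """Decide whether a planner should keep a candidate (state, action) edge.

    `reliability` is a hypothetical classifier returning the estimated
    probability that the reduced dynamics agree with the true dynamics.
    """
    score = min(1.0, max(0.0, reliability(state, action)))  # clamp to [0, 1]
    # Low-score edges are rejected more often, so the tree grows mostly
    # through regions where the reduced model is trusted.
    return rng.random() < score


# Usage with a stand-in classifier that distrusts large actions.
rng = random.Random(0)
toy_classifier = lambda s, a: 1.0 if max(abs(x) for x in a) < 0.5 else 0.1
keep = accept_transition([0.0, 0.0], [0.2, 0.1], toy_classifier, rng)
```

Probabilistic acceptance, rather than a hard threshold, keeps low-score edges reachable, which matters if the classifier is wrong about a region the plan must pass through.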

Manipulating Deformable Objects by Interleaving Prediction, Planning, and Control

Jan 27, 2020

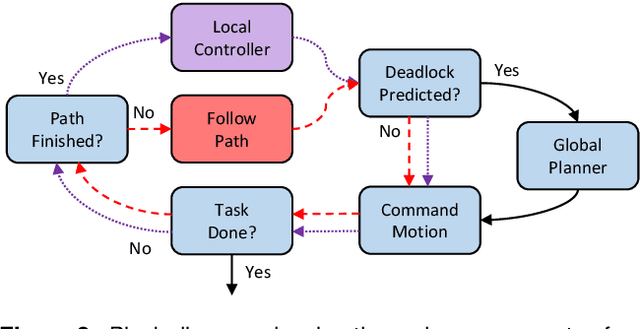

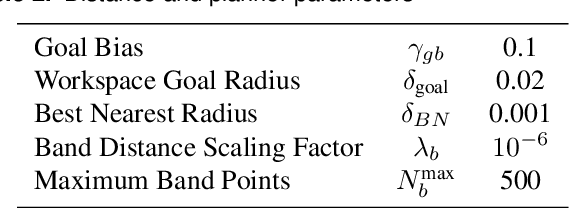

Abstract: We present a framework for deformable object manipulation that interleaves planning and control, enabling complex manipulation tasks without relying on high-fidelity modeling or simulation. The key question we address is when to use planning and when to use control to achieve the task. Planners are designed to find paths through complex configuration spaces, but for highly underactuated systems, such as deformable objects, achieving a specific configuration is very difficult even with high-fidelity models. Conversely, controllers can be designed to achieve specific configurations, but they can be trapped in undesirable local minima due to obstacles. Our approach consists of three components: (1) a global motion planner to generate gross motion of the deformable object; (2) a local controller for refinement of the configuration of the deformable object; and (3) a novel deadlock prediction algorithm to determine when to use planning versus control. By separating planning from control, we are able to use different representations of the deformable object, reducing overall complexity and enabling efficient computation of motion. We provide a detailed proof of probabilistic completeness for our planner, which is valid despite the fact that our system is underactuated and we do not have a steering function. We then demonstrate that our framework is able to successfully perform several manipulation tasks with rope and cloth in simulation which cannot be performed using either our controller or planner alone. These experiments suggest that our planner can generate paths efficiently, taking under a second on average to find a feasible path in three out of four scenarios. We also show that our framework is effective on a 16 DoF physical robot, where reachability and dual-arm constraints make the planning more difficult.
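The interplay of the three components can be sketched on a toy problem. The example below is a deliberately simplified stand-in, assuming a point robot on a small grid: a greedy controller, a deadlock predictor that rolls the controller forward a few steps, and a breadth-first-search planner. All names and the grid setting are hypothetical and are not the paper's algorithms.

```python
from collections import deque

MOVES = ((1, 0), (-1, 0), (0, 1), (0, -1))


def neighbors(cell, blocked, size):
    """Free, in-bounds 4-connected neighbors of `cell`."""
    for dx, dy in MOVES:
        nxt = (cell[0] + dx, cell[1] + dy)
        if 0 <= nxt[0] < size and 0 <= nxt[1] < size and nxt not in blocked:
            yield nxt


def manhattan(a, b):
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def greedy_step(pos, goal, blocked, size):
    """Local controller: take the neighbor that most reduces distance, else stay."""
    best = pos
    for nxt in neighbors(pos, blocked, size):
        if manhattan(nxt, goal) < manhattan(best, goal):
            best = nxt
    return best


def predicts_deadlock(pos, goal, blocked, size, horizon):
    """Roll the controller forward; report True if it stalls short of the goal."""
    for _ in range(horizon):
        if pos == goal:
            return False
        nxt = greedy_step(pos, goal, blocked, size)
        if nxt == pos:
            return True
        pos = nxt
    return False


def bfs_path(start, goal, blocked, size):
    """Global planner: shortest grid path from start to goal, or None."""
    parents = {start: None}
    queue = deque([start])
    while queue:
        cur = queue.popleft()
        if cur == goal:
            path = []
            while cur is not None:
                path.append(cur)
                cur = parents[cur]
            return path[::-1]
        for nxt in neighbors(cur, blocked, size):
            if nxt not in parents:
                parents[nxt] = cur
                queue.append(nxt)
    return None


def run_interleaved(start, goal, blocked, size, horizon=5, max_steps=100):
    """Use the controller by default; call the planner when deadlock is predicted."""
    pos, trace = start, [start]
    for _ in range(max_steps):
        if pos == goal:
            return trace
        if predicts_deadlock(pos, goal, blocked, size, horizon):
            path = bfs_path(pos, goal, blocked, size)
            if path is None:
                return None
            trace.extend(path[1:])
            pos = path[-1]
        else:
            pos = greedy_step(pos, goal, blocked, size)
            trace.append(pos)
    return trace if pos == goal else None


# A wall at x = 2 with a gap at y = 4 traps the greedy controller at (1, 0).
wall = {(2, y) for y in range(4)}
trace = run_interleaved((0, 0), (4, 0), wall, size=5)
```

In this toy setting the planner runs all the way to the goal; in the framework described above, the planner only produces gross motion and the controller then refines the configuration.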

Bandit-Based Model Selection for Deformable Object Manipulation

Mar 29, 2017

Abstract: We present a novel approach to deformable object manipulation that does not rely on highly-accurate modeling. The key contribution of this paper is to formulate the task as a Multi-Armed Bandit problem, with each arm representing a model of the deformable object. To "pull" an arm and evaluate its utility, we use the arm's model to generate a velocity command for the gripper(s) holding the object and execute it. As the task proceeds and the object deforms, the utility of each model can change. Our framework estimates these changes and balances exploration of the model set with exploitation of high-utility models. We also propose an approach based on Kalman Filtering for Non-stationary Multi-armed Normal Bandits (KF-MANB) to leverage the coupling between models to learn more from each arm pull. We demonstrate that our method outperforms previous methods on synthetic trials, and performs competitively on several manipulation tasks in simulation.
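The bandit formulation can be made concrete with a minimal sketch of a Kalman-filter bandit for non-stationary normal rewards, where each arm stands for one candidate model. This version treats arms as independent and selects by Thompson sampling; it does not include the coupling between models that the abstract proposes, and the class name, noise parameters, and priors are assumptions chosen for illustration.

```python
import random


class KalmanNormalBandit:
    """Independent-arm Kalman-filter bandit with Thompson sampling (illustrative)."""

    def __init__(self, n_arms, obs_var=1.0, trans_var=0.1,
                 prior_mean=0.0, prior_var=100.0, seed=None):
        self.means = [prior_mean] * n_arms
        self.variances = [prior_var] * n_arms
        self.obs_var = obs_var      # assumed variance of reward observations
        self.trans_var = trans_var  # assumed per-step drift of each arm's utility
        self.rng = random.Random(seed)

    def select_arm(self):
        """Sample a utility for each arm from its belief and pick the largest."""
        samples = [self.rng.gauss(m, v ** 0.5)
                   for m, v in zip(self.means, self.variances)]
        return max(range(len(samples)), key=samples.__getitem__)

    def update(self, arm, reward):
        """Kalman update for the pulled arm; other arms only grow more uncertain."""
        for i in range(len(self.means)):
            predicted_var = self.variances[i] + self.trans_var
            if i == arm:
                gain = predicted_var / (predicted_var + self.obs_var)
                self.means[i] += gain * (reward - self.means[i])
                self.variances[i] = (1.0 - gain) * predicted_var
            else:
                self.variances[i] = predicted_var


# Usage: two hypothetical models whose true utilities are 0.0 and 1.0.
bandit = KalmanNormalBandit(n_arms=2, seed=0)
env = random.Random(1)
for _ in range(50):
    arm = bandit.select_arm()
    bandit.update(arm, env.gauss([0.0, 1.0][arm], 1.0))
```

The growing variance of unpulled arms is what handles non-stationarity: a model that performed poorly earlier is eventually retried, since its utility may have changed as the object deformed.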
