Cyran Aouameur

Diff-A-Riff: Musical Accompaniment Co-creation via Latent Diffusion Models

Jun 12, 2024

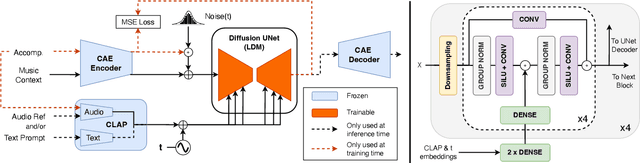

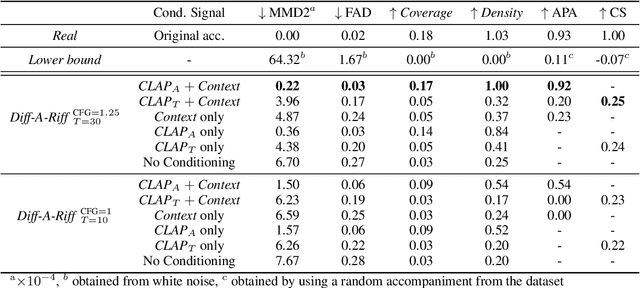

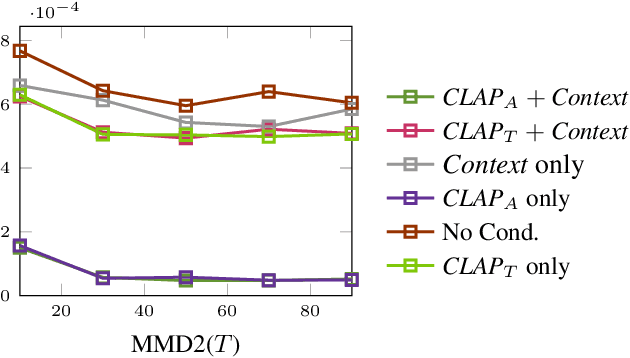

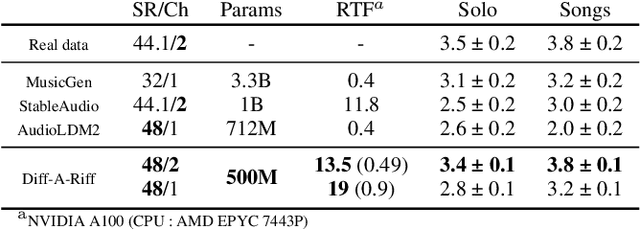

Abstract: Recent advancements in deep generative models present new opportunities for music production but also pose challenges, such as high computational demands and limited audio quality. Moreover, current systems frequently rely solely on text input and typically focus on producing complete musical pieces, which is incompatible with existing workflows in music production. To address these issues, we introduce "Diff-A-Riff," a Latent Diffusion Model designed to generate high-quality instrumental accompaniments adaptable to any musical context. This model offers control through audio references, text prompts, or both, and produces 48kHz pseudo-stereo audio while significantly reducing inference time and memory usage. We demonstrate the model's capabilities through objective metrics and subjective listening tests, with extensive examples available on the accompanying website: sonycslparis.github.io/diffariff-companion/

DrumGAN VST: A Plugin for Drum Sound Analysis/Synthesis With Autoencoding Generative Adversarial Networks

Jun 29, 2022

Abstract: In contemporary popular music production, drum sound design is commonly performed by cumbersome browsing and processing of pre-recorded samples in sound libraries. One can also use specialized synthesis hardware, typically controlled through low-level, musically meaningless parameters. Today, the field of Deep Learning offers methods to control the synthesis process via learned high-level features and allows generating a wide variety of sounds. In this paper, we present DrumGAN VST, a plugin for synthesizing drum sounds using a Generative Adversarial Network. DrumGAN VST operates on 44.1 kHz sample-rate audio, offers independent and continuous instrument class controls, and features an encoding neural network that maps sounds into the GAN's latent space, enabling resynthesis and manipulation of pre-existing drum sounds. We provide numerous sound examples and a demo of the proposed VST plugin.

VQCPC-GAN: Variable-length Adversarial Audio Synthesis using Vector-Quantized Contrastive Predictive Coding

May 04, 2021

Abstract: Influenced by the field of Computer Vision, Generative Adversarial Networks (GANs) are often adopted for the audio domain using fixed-size two-dimensional spectrogram representations as the "image data". However, in the (musical) audio domain, it is often desired to generate output of variable duration. This paper presents VQCPC-GAN, an adversarial framework for synthesizing variable-length audio by exploiting Vector-Quantized Contrastive Predictive Coding (VQCPC). A sequence of VQCPC tokens extracted from real audio data serves as conditional input to a GAN architecture, providing step-wise time-dependent features of the generated content. The input noise z (characteristic in adversarial architectures) remains fixed over time, ensuring temporal consistency of global features. We evaluate the proposed model by comparing a diverse set of metrics against various strong baselines. Results show that, even though the baselines score best, VQCPC-GAN achieves comparable performance even when generating variable-length audio. Numerous sound examples are provided in the accompanying website, and we release the code for reproducibility.

Neural Drum Machine : An Interactive System for Real-time Synthesis of Drum Sounds

Jul 04, 2019

Abstract: In this work, we introduce a system for real-time generation of drum sounds. This system is composed of two parts: a generative model for drum sounds together with a Max4Live plugin providing intuitive controls on the generative process. The generative model consists of a Conditional Wasserstein autoencoder (CWAE), which learns to generate Mel-scaled magnitude spectrograms of short percussion samples, coupled with a Multi-Head Convolutional Neural Network (MCNN) which estimates the corresponding audio signal from the magnitude spectrogram. The design of this model makes it lightweight, so that it allows one to perform real-time generation of novel drum sounds on an average CPU, removing the need for the users to possess dedicated hardware in order to use this system. We then present our Max4Live interface designed to interact with this generative model. With this setup, the system can be easily integrated into a studio-production environment and enhance the creative process. Finally, we discuss the advantages of our system and how the interaction of music producers with such tools could change the way drum tracks are composed.
