Craig Corcoran

Using Deep Networks and Transfer Learning to Address Disinformation

May 24, 2019

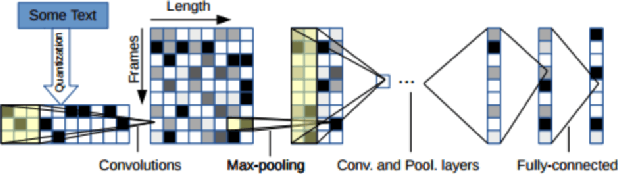

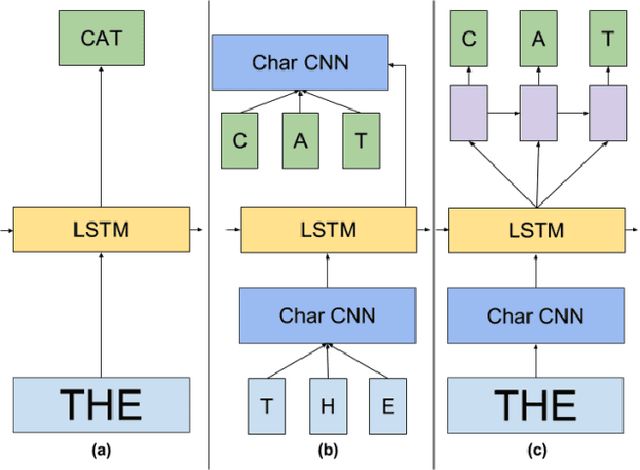

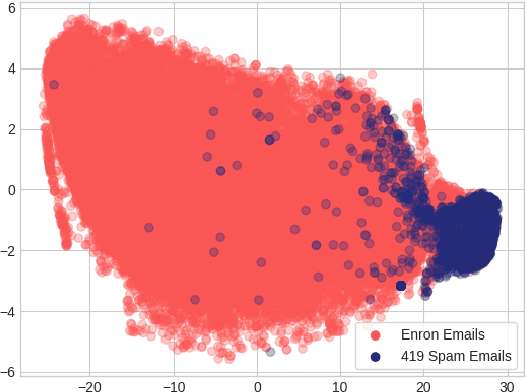

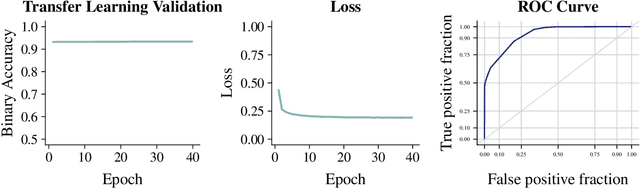

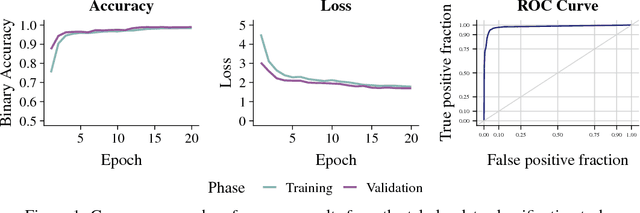

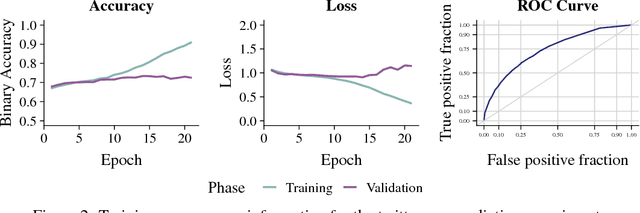

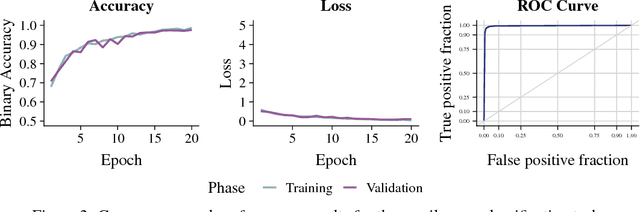

Abstract: We apply an ensemble pipeline composed of a character-level convolutional neural network (CNN) and a long short-term memory (LSTM) network as a general tool for addressing a range of disinformation problems. We also demonstrate the ability to use this architecture to transfer knowledge from labeled data in one domain to related (supervised and unsupervised) tasks. Character-level neural networks and transfer learning are particularly valuable tools in the disinformation space because of the messy nature of social media, lack of labeled data, and the multi-channel tactics of influence campaigns. We demonstrate their effectiveness in several tasks relevant for detecting disinformation: spam emails, review bombing, political sentiment, and conversation clustering.

Semantic Classification of Tabular Datasets via Character-Level Convolutional Neural Networks

Jan 24, 2019

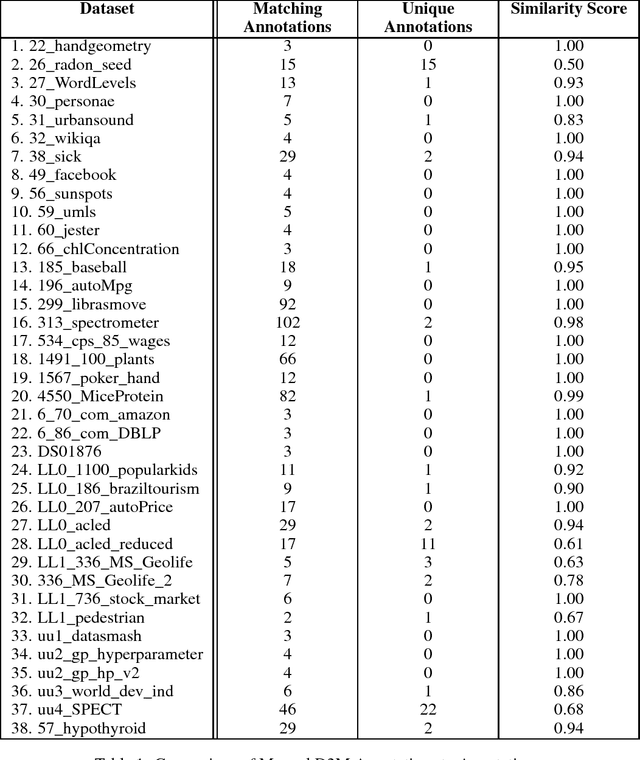

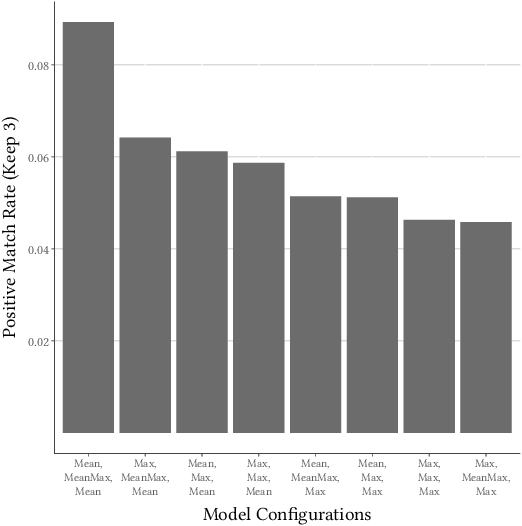

Abstract: A character-level convolutional neural network (CNN) motivated by applications in "automated machine learning" (AutoML) is proposed to semantically classify columns in tabular data. Simulated data containing a set of base classes is first used to learn an initial set of weights. Hand-labeled data from the CKAN repository is then used in a transfer-learning paradigm to adapt the initial weights to a more sophisticated representation of the problem (e.g., including more classes). In doing so, realistic data imperfections are learned and the set of classes handled can be expanded from the base set with reduced labeled data and computing power requirements. Results show the effectiveness and flexibility of this approach in three diverse domains: semantic classification of tabular data, age prediction from social media posts, and email spam classification. In addition to providing further evidence of the effectiveness of transfer learning in natural language processing (NLP), our experiments suggest that analyzing the semantic structure of language at the character level without additional metadata (i.e., network structure, headers, etc.) can produce competitive accuracy for type classification, spam classification, and social media age prediction. We present our open-source toolkit SIMON, an acronym for Semantic Inference for the Modeling of ONtologies, which implements this approach in a user-friendly and scalable/parallelizable fashion.
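As a rough illustration of what "character level" input means here, the sketch below turns a single table cell into a fixed-length sequence of integer character indices, the kind of array a character-level CNN typically consumes. The alphabet, the `max_len` default, and the names `ALPHABET`, `CHAR_TO_INDEX`, and `encode_cell` are assumptions made for this example; they are not taken from the paper or from the SIMON toolkit.

```python
import string

# Hypothetical alphabet; index 0 is reserved for padding and unknown characters.
ALPHABET = string.ascii_lowercase + string.digits + " .,-/:@"
CHAR_TO_INDEX = {ch: i + 1 for i, ch in enumerate(ALPHABET)}


def encode_cell(text, max_len=20):
    """Map a cell's text to a list of `max_len` integer character indices.

    Text is lowercased, truncated to `max_len` characters, and right-padded
    with zeros. Characters outside ALPHABET also map to 0.
    """
    indices = [CHAR_TO_INDEX.get(ch, 0) for ch in text.lower()[:max_len]]
    return indices + [0] * (max_len - len(indices))


# A column would be encoded cell by cell before being passed to a model.
column = ["2019-01-24", "alice@example.com", "Austin, TX"]
encoded_column = [encode_cell(cell) for cell in column]
```

Because every cell ends up with the same length, a column can be stacked into a rectangular array regardless of how messy the raw values are.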

Abstractive Tabular Dataset Summarization via Knowledge Base Semantic Embeddings

Apr 05, 2018

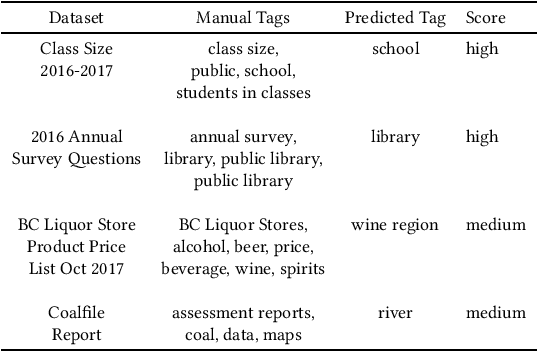

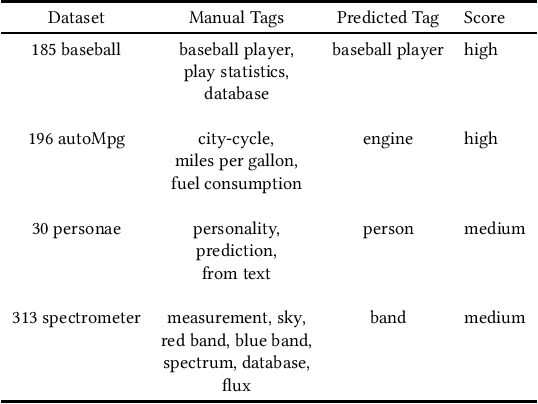

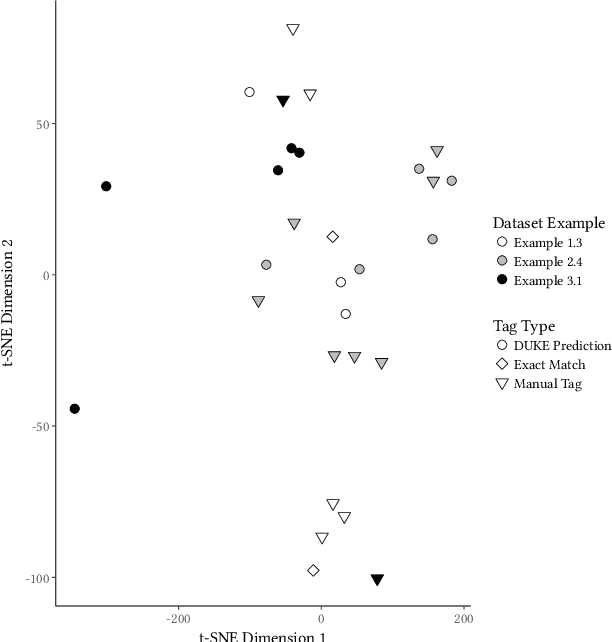

Abstract: This paper describes an abstractive summarization method for tabular data which employs a knowledge base semantic embedding to generate the summary. Assuming the dataset contains descriptive text in headers, columns and/or some augmenting metadata, the system employs the embedding to recommend a subject/type for each text segment. Recommendations are aggregated into a small collection of super types considered to be descriptive of the dataset by exploiting the hierarchy of types in a pre-specified ontology. Using February 2015 Wikipedia as the knowledge base, and a corresponding DBpedia ontology as types, we present experimental results on open data taken from several sources (OpenML, CKAN and data.world) to illustrate the effectiveness of the approach.
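The aggregation step described in this abstract can be pictured with a small sketch: each recommended type is walked up a type hierarchy to a coarse ancestor, and the most frequent ancestors serve as the summary. The toy `PARENT` map, the `Thing` root, and the functions `top_level_ancestor` and `summarize` are hypothetical and only illustrate the idea; the paper's actual aggregation rule and the real DBpedia hierarchy may differ.

```python
from collections import Counter

# Toy child -> parent hierarchy, invented for illustration only.
PARENT = {
    "SoccerPlayer": "Athlete",
    "Athlete": "Person",
    "Politician": "Person",
    "City": "Place",
    "Country": "Place",
    "Person": "Thing",
    "Place": "Thing",
}


def top_level_ancestor(type_name, parent=PARENT, root="Thing"):
    """Walk up the hierarchy and return the ancestor directly below `root`.

    Types missing from `parent` are treated as already top-level.
    """
    current = type_name
    while parent.get(current, root) != root:
        current = parent[current]
    return current


def summarize(recommended_types, k=2):
    """Return the `k` most common top-level ancestors of the recommendations."""
    counts = Counter(top_level_ancestor(t) for t in recommended_types)
    return [name for name, _ in counts.most_common(k)]


# One recommended type per text segment (header, column sample, metadata).
recommendations = ["SoccerPlayer", "Politician", "City", "Athlete"]
super_types = summarize(recommendations)
```

Here three of the four recommendations roll up to `Person` and one to `Place`, so the summary lists `Person` first.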

Switched linear encoding with rectified linear autoencoders

Jan 19, 2013

Abstract: Several recent results in machine learning have established formal connections between autoencoders (artificial neural network models that attempt to reproduce their inputs) and other coding models like sparse coding and K-means. This paper explores in depth an autoencoder model that is constructed using rectified linear activations on its hidden units. Our analysis builds on recent results to further unify the world of sparse linear coding models. We provide an intuitive interpretation of the behavior of these coding models and demonstrate this intuition using small, artificial datasets with known distributions.
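One way to read "switched linear encoding" is through a general property of rectified linear units: for a given input, the encoder `max(0, Wx + b)` behaves exactly like an affine map restricted to the units that are active, and the input determines which units are switched on. The sketch below checks this on a tiny example. The weights, bias, inputs, and function names are made up for illustration and are not taken from the paper.

```python
def relu_encode(W, b, x):
    """Hidden code h = max(0, W x + b) for a single input vector x."""
    pre = [sum(w * xj for w, xj in zip(row, x)) + bi for row, bi in zip(W, b)]
    return [max(0.0, p) for p in pre]


def active_set(W, b, x):
    """Indices of hidden units with strictly positive activation for x."""
    return [i for i, h in enumerate(relu_encode(W, b, x)) if h > 0.0]


def switched_linear_encode(W, b, x, active):
    """Apply only the rows listed in `active` as a plain affine map.

    Units outside `active` are switched off and output exactly 0.
    """
    out = [0.0] * len(W)
    for i in active:
        out[i] = sum(w * xj for w, xj in zip(W[i], x)) + b[i]
    return out


# Hypothetical 3-unit encoder on 2-dimensional inputs.
W = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
b = [0.0, 0.0, 0.5]

x1 = [0.3, -0.2]   # units 0 and 2 are active
x2 = [-0.4, 0.1]   # units 1 and 2 are active

s1 = active_set(W, b, x1)
s2 = active_set(W, b, x2)
```

Inputs that share an active set are encoded by the same affine map, so the encoder partitions input space into regions, each with its own linear code.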
