Colin Greatwood

Agile Reactive Navigation for A Non-Holonomic Mobile Robot Using A Pixel Processor Array

Sep 27, 2020

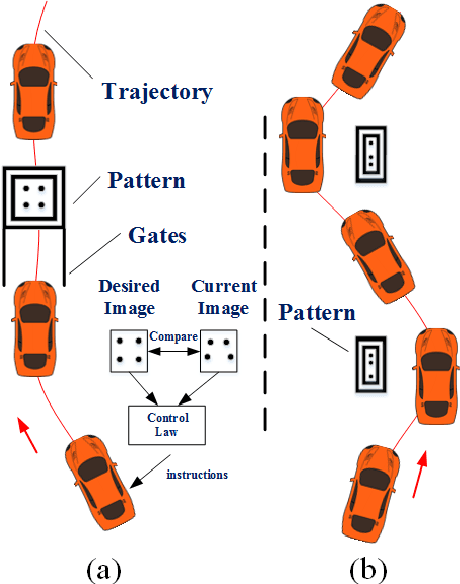

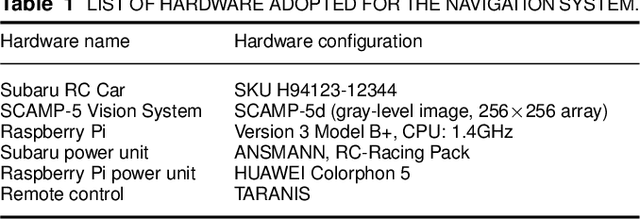

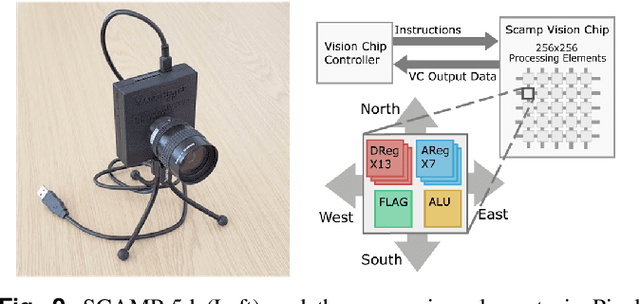

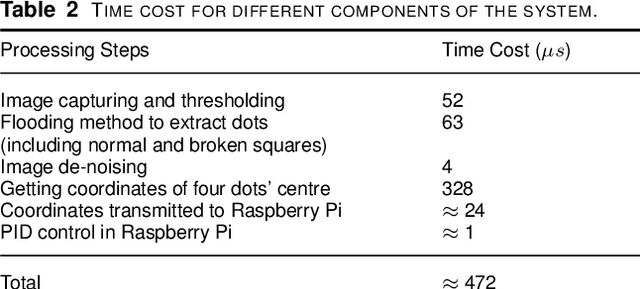

Abstract: This paper presents an agile reactive navigation strategy for driving a non-holonomic ground vehicle around a preset course of gates in a cluttered environment using a low-cost processor array sensor. The sensor enables machine vision tasks to be performed directly upon its image plane, rather than using a separate general-purpose computer. We demonstrate a small ground vehicle running through or avoiding multiple gates at high speed using minimal computational resources. To achieve this, target tracking algorithms are developed for the Pixel Processor Array, and captured images are processed directly on the vision sensor to acquire the target information used for controlling the ground vehicle. The algorithm can run at up to 2000 fps outdoors and 200 fps at indoor illumination levels. Conducting image processing at the sensor level avoids the bottleneck of image transfer encountered in conventional sensors. The real-time performance and robustness of the on-board image processing are validated through experiments. Experimental results demonstrate the algorithm's ability to enable a ground vehicle to navigate at an average speed of 2.20 m/s when passing through multiple gates and 3.88 m/s in a 'slalom' task, in an environment featuring significant visual clutter.

Aerial Animal Biometrics: Individual Friesian Cattle Recovery and Visual Identification via an Autonomous UAV with Onboard Deep Inference

Jul 11, 2019

Abstract: This paper describes a computationally-enhanced M100 UAV platform with an onboard deep learning inference system for integrated computer vision and navigation, able to autonomously find individual Holstein Friesian cattle in freely moving herds and visually identify them by coat pattern. We propose an approach that utilises three deep convolutional neural network architectures running live onboard the aircraft: a YoloV2-based species detector, a dual-stream CNN delivering exploratory agency, and an InceptionV3-based biometric LRCN for individual animal identification. We evaluate the performance of each of the components offline, and also online via real-world field tests comprising 146.7 minutes of autonomous low-altitude flight in a farm environment over a dispersed herd of 17 heifer dairy cows. We report error-free identification performance on this online experiment. The presented proof-of-concept system is the first of its kind and a successful step towards autonomous biometric identification of individual animals from the air in open pasture environments for tag-less AI support in farming and ecology.
