Chuike Sun

Appformer: A Novel Framework for Mobile App Usage Prediction Leveraging Progressive Multi-Modal Data Fusion and Feature Extraction

Jul 28, 2024

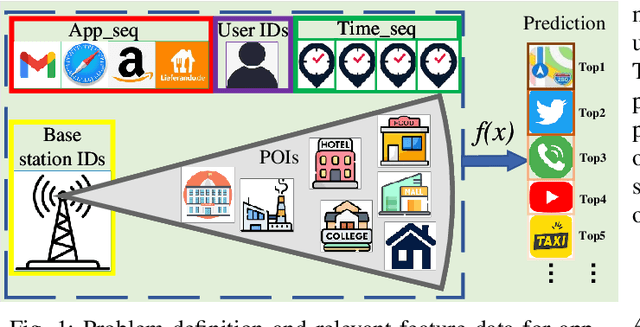

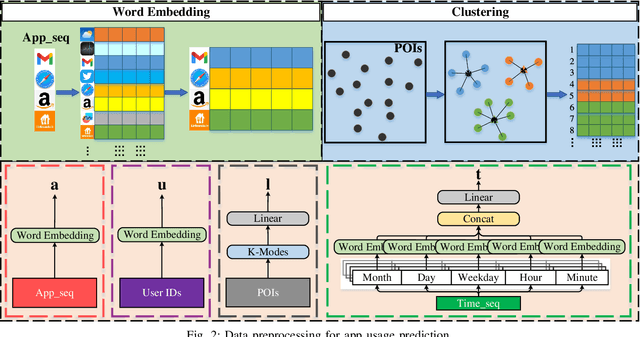

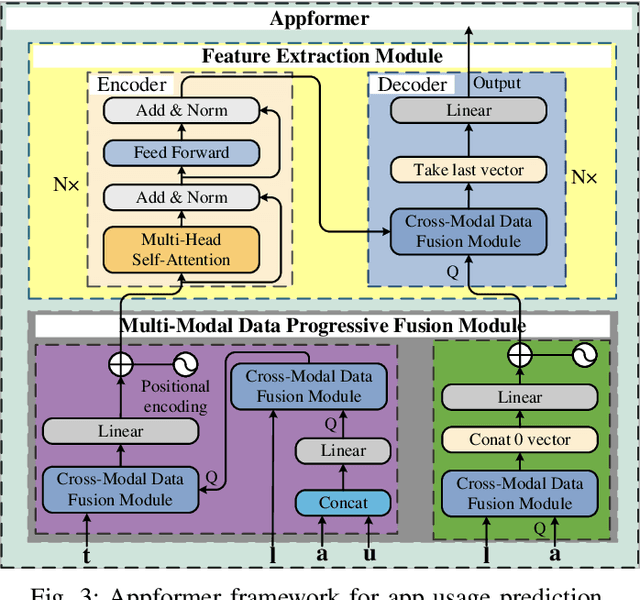

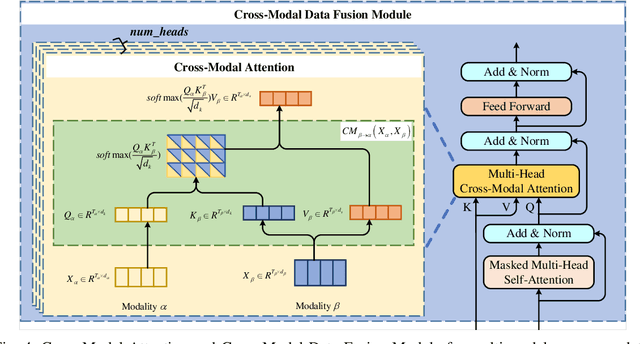

Abstract: This article presents Appformer, a mobile app usage prediction framework inspired by the efficiency with which Transformer-like architectures process sequential data through self-attention. Appformer combines a Multi-Modal Data Progressive Fusion Module with a Feature Extraction Module, drawing on multi-modal data fusion and data mining techniques while maintaining user privacy. The framework uses Points of Interest (POIs) associated with base stations and refines them through comparative experiments that identify the most effective clustering method. These refined inputs are integrated into the initial phases of cross-modal data fusion, where temporal units are encoded via word embeddings and subsequently merged in later stages. The Feature Extraction Module, built on Transformer-like architectures specialized for time series analysis, distils comprehensive features and fine-tunes the outputs of the fusion module to extract high-quality multi-modal features. Extensive experiments confirm Appformer's effectiveness: it attains state-of-the-art (SOTA) results in mobile app usage prediction.
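The pipeline described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation. The abstract gives no architectural details, so everything here is an assumption made for illustration: the names `make_embedding_table`, `fuse_step`, and `self_attention`, the embedding sizes, the choice to fuse app and POI-cluster embeddings by element-wise addition in the early stage, the choice to concatenate the time embedding in the later stage, and the use of a single attention head with no learned projections.

```python
import math
import random


def make_embedding_table(num_items, dim, seed):
    """Random lookup table standing in for learned word-style embeddings."""
    rng = random.Random(seed)
    return [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(num_items)]


def fuse_step(app_id, poi_cluster, hour, app_emb, poi_emb, hour_emb):
    """Progressive fusion for one usage record (assumed scheme, not the paper's)."""
    # Early stage: combine the app and POI-cluster embeddings by addition.
    early = [a + p for a, p in zip(app_emb[app_id], poi_emb[poi_cluster])]
    # Later stage: merge the temporal embedding by concatenation.
    return early + hour_emb[hour]


def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(seq):
    """Single-head scaled dot-product self-attention with Q = K = V = seq."""
    dim = len(seq[0])
    output = []
    for query in seq:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim) for key in seq
        ]
        weights = softmax(scores)
        output.append(
            [sum(w * value[i] for w, value in zip(weights, seq)) for i in range(dim)]
        )
    return output


# Hypothetical vocabulary sizes: 10 apps, 4 POI clusters, 24 hours of the day.
app_emb = make_embedding_table(10, 8, seed=0)
poi_emb = make_embedding_table(4, 8, seed=1)
hour_emb = make_embedding_table(24, 4, seed=2)

# Made-up usage history as (app_id, poi_cluster, hour) records.
history = [(3, 1, 9), (5, 0, 13), (3, 2, 20)]
fused = [fuse_step(a, p, h, app_emb, poi_emb, hour_emb) for a, p, h in history]
context = self_attention(fused)
```

Each row of `context` is a weighted mix of all fused records in the history. A real model would add learned query, key, and value projections, multiple heads, and a prediction layer over the app vocabulary.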
