Christopher Crick

A3: Active Adversarial Alignment for Source-Free Domain Adaptation

Sep 27, 2024

Abstract: Unsupervised domain adaptation (UDA) aims to transfer knowledge from a labeled source domain to an unlabeled target domain. Recent works have focused on source-free UDA, where only target data is available. This is challenging as models rely on noisy pseudo-labels and struggle with distribution shifts. We propose Active Adversarial Alignment (A3), a novel framework combining self-supervised learning, adversarial training, and active learning for robust source-free UDA. A3 actively samples informative and diverse data using an acquisition function for training. It adapts models via adversarial losses and consistency regularization, aligning distributions without source data access. A3 advances source-free UDA through its synergistic integration of active and adversarial learning for effective domain alignment and noise reduction.
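The abstract does not say which acquisition function A3 uses. As a minimal sketch of the general idea, assuming a plain predictive-entropy criterion (a common choice in active learning, not necessarily the authors'), the function names and the toy probabilities below are hypothetical. The sketch ranks samples by uncertainty only and leaves out the diversity term the abstract mentions.

```python
import math


def predictive_entropy(probs):
    """Shannon entropy (in nats) of one predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def select_most_uncertain(prob_rows, budget):
    """Return indices of the `budget` samples with the highest entropy.

    Assumption: uncertainty alone stands in for "informativeness";
    this is an illustrative criterion, not the one from the paper.
    """
    ranked = sorted(
        range(len(prob_rows)),
        key=lambda i: predictive_entropy(prob_rows[i]),
        reverse=True,
    )
    return ranked[:budget]


# Hypothetical softmax outputs for four unlabeled target samples.
target_probs = [
    [0.98, 0.01, 0.01],  # confident prediction
    [0.34, 0.33, 0.33],  # nearly uniform, most uncertain
    [0.70, 0.20, 0.10],
    [0.50, 0.49, 0.01],
]
picked = select_most_uncertain(target_probs, budget=2)
```

Here `picked` holds the indices of the two samples whose predictions are closest to uniform, which are the ones an uncertainty-driven learner would prioritize.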

Learning by Watching: A Review of Video-based Learning Approaches for Robot Manipulation

Feb 11, 2024

Abstract: Robot learning of manipulation skills is hindered by the scarcity of diverse, unbiased datasets. While curated datasets can help, challenges remain in generalizability and real-world transfer. Meanwhile, large-scale "in-the-wild" video datasets have driven progress in computer vision through self-supervised techniques. Translating this to robotics, recent works have explored learning manipulation skills by passively watching abundant videos sourced online. Showing promising results, such video-based learning paradigms provide scalable supervision while reducing dataset bias. This survey reviews foundations such as video feature representation learning techniques, object affordance understanding, 3D hand/body modeling, and large-scale robot resources, as well as emerging techniques for acquiring robot manipulation skills from uncontrolled video demonstrations. We discuss how learning only from observing large-scale human videos can enhance generalization and sample efficiency for robotic manipulation. The survey summarizes video-based learning approaches, analyzes their benefits over standard datasets, surveys metrics and benchmarks, and discusses open challenges and future directions in this nascent domain at the intersection of computer vision, natural language processing, and robot learning.

Brain-Inspired Visual Odometry: Balancing Speed and Interpretability through a System of Systems Approach

Dec 20, 2023

Abstract: In this study, we address the critical challenge of balancing speed and accuracy while maintaining interpretability in visual odometry (VO) systems, a pivotal aspect in the field of autonomous navigation and robotics. Traditional VO systems often face a trade-off between computational speed and the precision of pose estimation. To tackle this issue, we introduce an innovative system that synergistically combines traditional VO methods with a specifically tailored fully connected network (FCN). Our system is unique in its approach to handling each degree of freedom independently within the FCN, placing a strong emphasis on causal inference to enhance interpretability. This allows for a detailed and accurate assessment of relative pose error (RPE) across various degrees of freedom, providing a more comprehensive understanding of parameter variations and movement dynamics in different environments. Notably, our system demonstrates a remarkable improvement in processing speed without compromising accuracy. In certain scenarios, it achieves up to a 5% reduction in Root Mean Square Error (RMSE), showcasing its ability to effectively bridge the gap between speed and accuracy that has long been a limitation in VO research. This advancement represents a significant step forward in developing more efficient and reliable VO systems, with wide-ranging applications in real-time navigation and robotic systems.
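For reference, RMSE is conventionally defined as below. The symbols $N$ (number of evaluated samples) and $e_i$ (the error on sample $i$, for example the relative pose error along one degree of freedom) are notation assumed here, not taken from the paper.

$$\mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{i=1}^{N} \lVert e_i \rVert^{2}}$$

Under this definition, a 5% reduction means the reported RMSE is 0.95 times that of the baseline.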

Enhancing Human-robot Collaboration by Exploring Intuitive Augmented Reality Design Representations

Mar 09, 2023

Abstract: As the use of Augmented Reality (AR) to enhance interactions between human agents and robotic systems in a work environment continues to grow, robots must communicate their intents in informative yet straightforward ways. This improves the human agent's feeling of trust and safety in the work environment while also reducing task completion time. To this end, we discuss a set of guidelines for the systematic design of AR interfaces for Human-Robot Interaction (HRI) systems. Furthermore, we develop design frameworks that build on these guidelines and serve as a base for researchers seeking to explore this direction further. We develop a series of designs for visually representing the robot's planned path and reactions, which we evaluate by conducting a user survey involving 14 participants. Subjects were given different design representations to review and rate based on their intuitiveness and informativeness. The collated results showed that our design representations significantly improved the participants' ease of understanding the robot's intents over the baselines for the robot's proposed navigation path, planned arm trajectory, and reactions.

Hand Gesture Recognition through Reflected Infrared Light Wave Signals

Jan 14, 2023

Abstract: In this study, we present a wireless (non-contact) gesture recognition method using only incoherent light wave signals reflected from a human subject. In comparison to existing radar, light shadow, sound, and camera-based sensing systems, this technology uses a low-cost ubiquitous light source (e.g., infrared LED) to send light towards the subject's hand performing gestures, and the reflected light is collected by a light sensor (e.g., photodetector). This light wave sensing system recognizes different gestures from the variations of the received light intensity within a 20-35 cm range. The hand gesture recognition results demonstrate up to 96% accuracy on average. The developed system can be utilized in numerous Human-Computer Interaction (HCI) applications as a low-cost and non-contact gesture recognition technology.

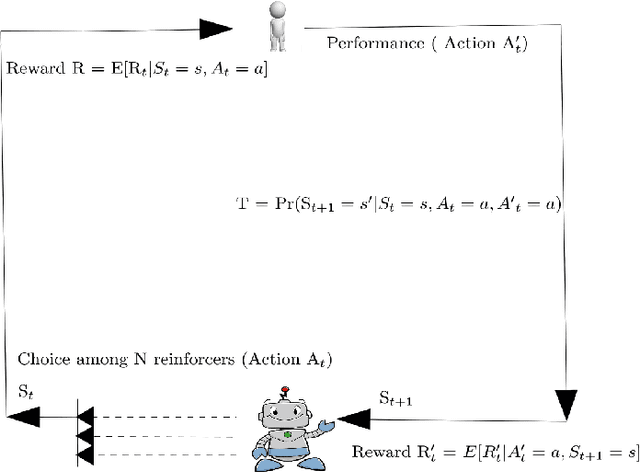

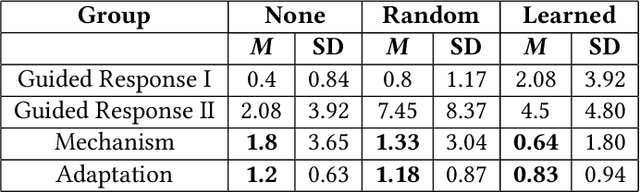

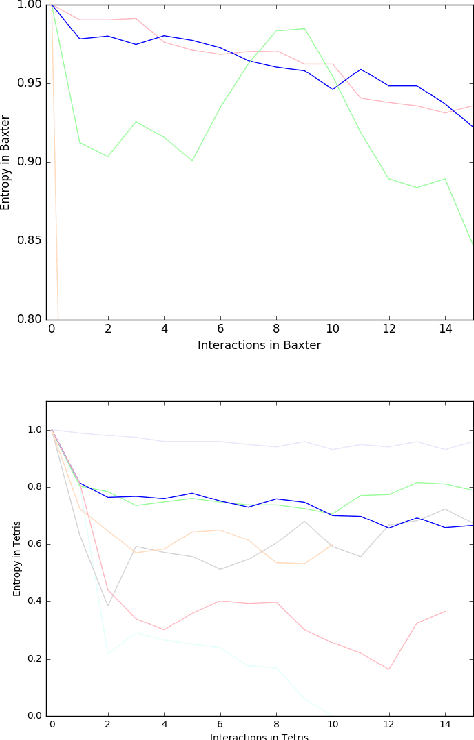

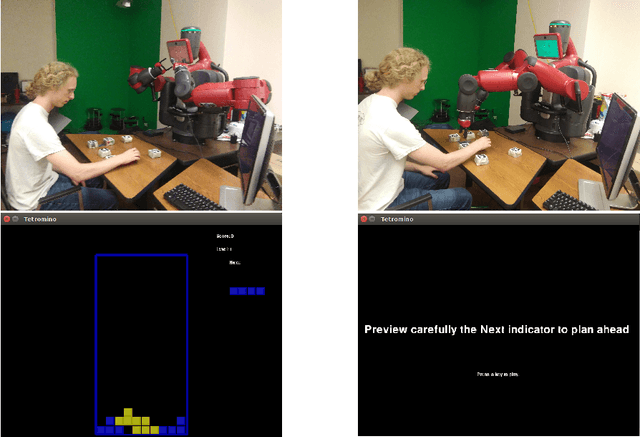

Mutual Reinforcement Learning

Aug 07, 2019

Abstract: Recently, collaborative robots have begun to train humans to achieve complex tasks, and the mutual information exchange between them can lead to successful robot-human collaborations. In this paper we demonstrate the application and effectiveness of a new approach called mutual reinforcement learning (MRL), where both humans and autonomous agents act as reinforcement learners in a skill transfer scenario over continuous communication and feedback. An autonomous agent initially acts as an instructor who can teach a novice human participant complex skills using the MRL strategy. While teaching skills in a physical (block-building) ($n=34$) or simulated (Tetris) environment ($n=31$), the expert tries to identify appropriate reward channels preferred by each individual and adapts itself accordingly using an exploration-exploitation strategy. These reward channel preferences can identify important behaviors of the human participants, because they may well exercise the same behaviors in similar situations later. In this way, skill transfer takes place between an expert system and a novice human operator. We divided the subject population into three groups and observed the skill transfer phenomenon, analyzing it with Simpson's psychometric model. 5-point Likert scales were also used to identify the cognitive models of the human participants. We obtained a shared cognitive model which not only improves human cognition but enhances the robot's cognitive strategy to understand the mental model of its human partners while building a successful robot-human collaborative framework.

Loopy Belief Propagation as a Basis for Communication in Sensor Networks

Oct 19, 2012

Abstract: Sensor networks are an exciting new kind of computer system. Consisting of a large number of tiny, cheap computational devices physically distributed in an environment, they gather and process data about the environment in real time. One of the central questions in sensor networks is what to do with the data, i.e., how to reason with it and how to communicate it. This paper argues that the lessons of the UAI community, in particular that one should produce and communicate beliefs rather than raw sensor values, are highly relevant to sensor networks. We contend that loopy belief propagation is particularly well suited to communicating beliefs in sensor networks, due to its compact implementation and distributed nature. We investigate the ability of loopy belief propagation to function under the stressful conditions likely to prevail in sensor networks. Our experiments show that it performs well and degrades gracefully. It converges to appropriate beliefs even in highly asynchronous settings where some nodes communicate far less frequently than others; it continues to function if some nodes fail to participate in the propagation process; and it can track changes in the environment that occur while beliefs are propagating. As a result, we believe that sensor networks present an important application opportunity for UAI.
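To make the idea of nodes exchanging beliefs rather than raw readings concrete, here is a minimal sketch of sum-product loopy belief propagation on a pairwise model with binary states. Everything in it is an assumption for illustration: the function name `loopy_bp`, the `agree` coupling, the three-sensor triangle, and the synchronous update schedule (the paper studies asynchronous settings, which this sketch does not model).

```python
def loopy_bp(unary, edges, agree=0.8, iters=50):
    """Sum-product loopy BP for binary variables on an undirected graph.

    unary[i][x] is node i's local evidence for state x.
    Neighboring nodes prefer equal states with strength `agree`
    (a hypothetical pairwise potential chosen for this sketch).
    """
    neighbors = {i: [] for i in range(len(unary))}
    for a, b in edges:
        neighbors[a].append(b)
        neighbors[b].append(a)

    # msgs[(i, j)][x_j]: what node i currently tells node j about j's state.
    msgs = {(i, j): [0.5, 0.5] for i in neighbors for j in neighbors[i]}

    def pair(xi, xj):
        return agree if xi == xj else 1.0 - agree

    for _ in range(iters):
        new_msgs = {}
        for (i, j) in msgs:
            out = []
            for xj in (0, 1):
                total = 0.0
                for xi in (0, 1):
                    # Product of messages into i from everyone except j.
                    incoming = 1.0
                    for k in neighbors[i]:
                        if k != j:
                            incoming *= msgs[(k, i)][xi]
                    total += unary[i][xi] * pair(xi, xj) * incoming
                out.append(total)
            z = out[0] + out[1]
            new_msgs[(i, j)] = [out[0] / z, out[1] / z]
        msgs = new_msgs  # synchronous update of all messages

    beliefs = []
    for i in neighbors:
        b = [unary[i][0], unary[i][1]]
        for k in neighbors[i]:
            for x in (0, 1):
                b[x] *= msgs[(k, i)][x]
        z = b[0] + b[1]
        beliefs.append([b[0] / z, b[1] / z])
    return beliefs


# Three sensors in a loop; only sensor 0 has informative local evidence.
unary = [[0.1, 0.9], [0.5, 0.5], [0.5, 0.5]]
edges = [(0, 1), (1, 2), (0, 2)]
beliefs = loopy_bp(unary, edges)
```

In this toy setup, sensors 1 and 2 have no evidence of their own, yet their beliefs shift toward state 1 purely through the messages received from their neighbors. Each node only needs its local evidence and its neighbors' latest messages, which is the distributed property the abstract highlights.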
