Emily Kieson

Emotion Recognition in Horses with Convolutional Neural Networks

May 25, 2021

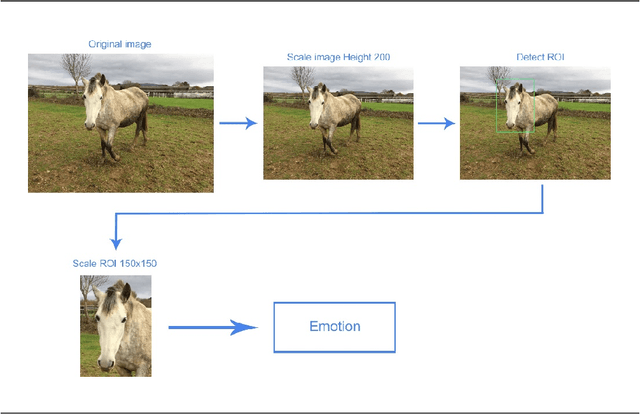

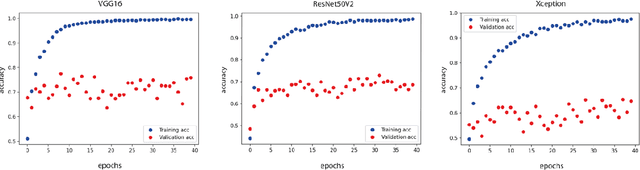

Abstract: Creating intelligent systems capable of recognizing emotions is a difficult task, especially when looking at emotions in animals. This paper describes the process of designing a "proof of concept" system to recognize emotions in horses. The system is formed by two elements, a detector and a model. The detector is a Faster Region-based Convolutional Neural Network (Faster R-CNN) that detects horses in an image. The second element, the model, is a convolutional neural network that predicts the emotion of those horses. Both networks were trained on multiple images of horses until they achieved high accuracy in their tasks, thereby creating the desired system. A total of 400 images of horses were used to train both the detector and the model, while 80 were used to validate the system. Once the two components were validated, they were combined into a testable system that detects equine emotions based on established behavioral ethograms indicating emotional affect through head, neck, ear, muzzle, and eye position. The system showed an accuracy between 69% and 74% on the validation set, demonstrating that it is possible to predict emotions in animals using autonomous intelligent systems. It is a first "proof of concept" approach that can be enhanced in many ways. Such a system has multiple applications, including further studies in the growing field of animal emotions and use in the veterinary field to assess the physical welfare of horses or other livestock.
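The two-stage design described above (a detector that localizes horses, followed by a classifier that labels each detection) can be sketched as a small composition function. This is a minimal, hypothetical sketch: the names `Box`, `crop`, and `recognize_emotions`, the 0.5 score threshold, and the list-of-rows image format are assumptions for illustration, and the trained Faster R-CNN and CNN are stood in for by plain callables rather than real models.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

# Hypothetical image format: a grayscale image stored as rows of pixel values.
Image = List[List[int]]


@dataclass
class Box:
    """A detection: pixel corners (x1, y1 exclusive) plus a confidence score."""
    x0: int
    y0: int
    x1: int
    y1: int
    score: float


def crop(image: Image, box: Box) -> Image:
    """Return the sub-image inside the box."""
    return [row[box.x0:box.x1] for row in image[box.y0:box.y1]]


def recognize_emotions(
    image: Image,
    detector: Callable[[Image], Sequence[Box]],  # stands in for the trained detector
    classifier: Callable[[Image], str],  # stands in for the emotion CNN
    min_score: float = 0.5,  # hypothetical confidence cut-off
) -> List[Tuple[Box, str]]:
    """Stage 1: detect horses. Stage 2: classify the emotion of each crop."""
    results: List[Tuple[Box, str]] = []
    for box in detector(image):
        if box.score < min_score:
            continue  # discard low-confidence detections
        results.append((box, classifier(crop(image, box))))
    return results
```

Keeping the detector and classifier as injectable callables reflects the abstract's point that the two components were trained and validated separately before being combined; any real detector or classifier with the same call shape could be passed in.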

Mutual Reinforcement Learning

Aug 07, 2019

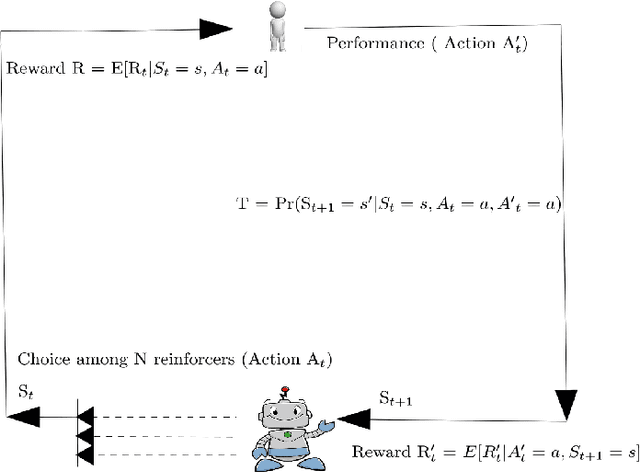

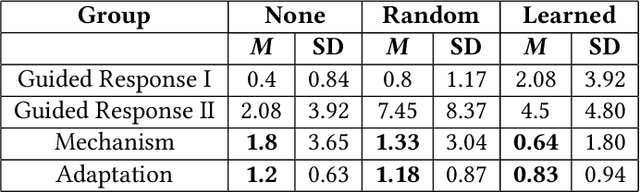

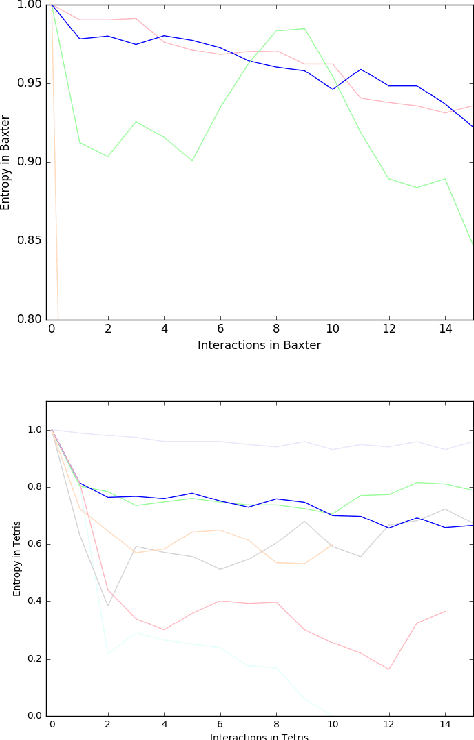

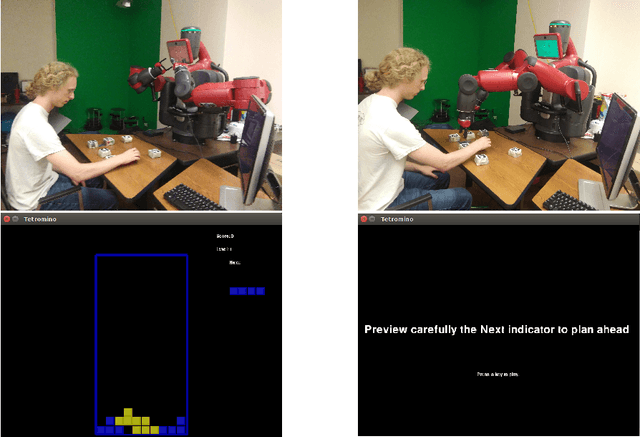

Abstract: Recently, collaborative robots have begun to train humans to achieve complex tasks, and the mutual information exchange between them can lead to successful robot-human collaborations. In this paper we demonstrate the application and effectiveness of a new approach called mutual reinforcement learning (MRL), in which both humans and autonomous agents act as reinforcement learners in a skill transfer scenario with continuous communication and feedback. An autonomous agent initially acts as an instructor who can teach a novice human participant complex skills using the MRL strategy. While teaching skills in a physical (block-building, $n=34$) or simulated (Tetris, $n=31$) environment, the expert tries to identify the reward channels preferred by each individual and adapts itself accordingly using an exploration-exploitation strategy. These reward channel preferences can identify important behaviors of the human participants, because participants are likely to exhibit the same behaviors in similar situations later. In this way, skill transfer takes place between an expert system and a novice human operator. We divided the subject population into three groups and observed the skill transfer phenomenon, analyzing it with Simpson's psychometric model. Five-point Likert scales were also used to identify the cognitive models of the human participants. We obtained a shared cognitive model that not only improves human cognition but also enhances the robot's cognitive strategy for understanding the mental models of its human partners, while building a successful robot-human collaborative framework.
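The abstract does not say which exploration-exploitation strategy the agent uses to find each participant's preferred reward channel. As one common choice, an epsilon-greedy bandit over feedback channels can be sketched as follows. This is a hypothetical illustration, not the paper's method: the class name, the channel names, the value of epsilon, and the reward scale are all assumptions.

```python
import random
from typing import Dict, List


class RewardChannelSelector:
    """Epsilon-greedy choice among feedback channels (hypothetical sketch;
    the paper's actual exploration-exploitation strategy is not specified)."""

    def __init__(self, channels: List[str], epsilon: float = 0.1, seed: int = 0):
        self.channels = list(channels)
        self.epsilon = epsilon  # assumed probability of exploring
        self.rng = random.Random(seed)
        self.counts: Dict[str, int] = {c: 0 for c in self.channels}
        self.values: Dict[str, float] = {c: 0.0 for c in self.channels}

    def choose(self) -> str:
        # Explore a random channel with probability epsilon; otherwise exploit
        # the channel with the highest estimated reward (ties go to list order).
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.channels)
        return max(self.channels, key=lambda c: self.values[c])

    def update(self, channel: str, reward: float) -> None:
        # Incremental mean of the rewards observed on this channel.
        self.counts[channel] += 1
        n = self.counts[channel]
        self.values[channel] += (reward - self.values[channel]) / n
```

For example, with hypothetical channels `["speech", "gesture", "display"]` and a simulated participant who responds best to `"gesture"`, repeatedly calling `choose()` and feeding the observed response back through `update()` lets the selector settle on `"gesture"` while still occasionally probing the other channels.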

Intent Communication between Autonomous Vehicles and Pedestrians

Aug 23, 2017

Abstract: When pedestrians encounter vehicles, they typically stop and wait for a signal from the driver to either cross or wait. What happens when the car is autonomous and there is no human driver to signal them? This paper addresses this issue with an intent communication system (ICS) that acts in place of a human driver. The ICS has been designed to take into account the psychology behind what pedestrians are familiar with and what they expect from machines, and it integrates those expectations into the design of its physical systems and mathematical algorithms. The goal of the system is to ensure that communication is simple yet effective, without leaving pedestrians with a sense of distrust in autonomous vehicles. To validate the ICS, two types of experiments were run: field tests with an autonomous vehicle to determine how humans actually interact with the ICS, and simulations to account for multiple potential behaviors. The results from both experiments show that humans react positively and more predictably when the intent of the vehicle is communicated than when it is unknown. In particular, the simulation results showed a 142 percent difference in pedestrian trust in the vehicle's actions between two conditions: the ICS enabled with the pedestrian having prior knowledge of the vehicle, and the ICS disabled with the pedestrian having no prior knowledge of the vehicle.

Can Co-robots Learn to Teach?

Nov 22, 2016

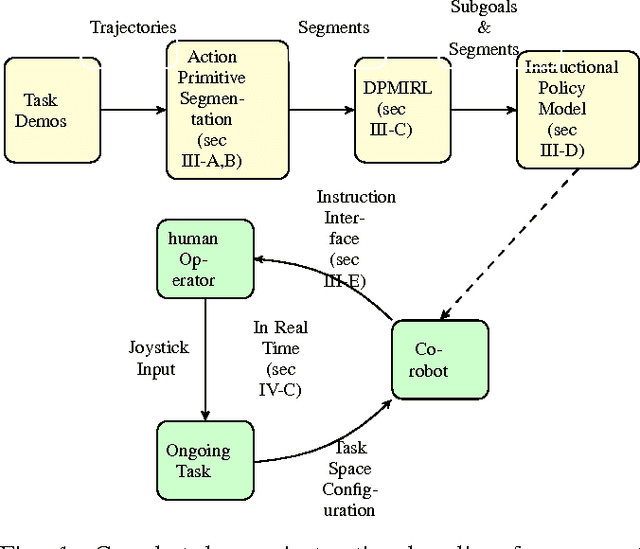

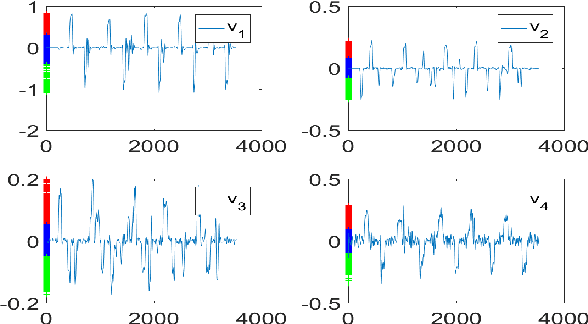

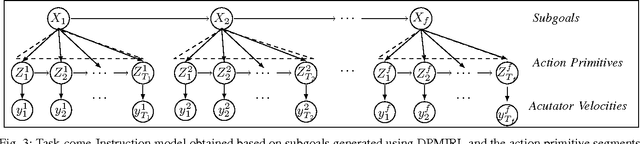

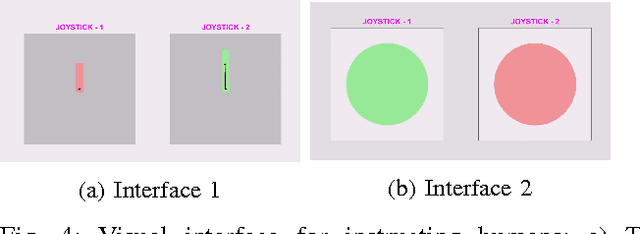

Abstract: We explore beyond existing work on learning from demonstration by asking the question: can robots learn to teach? That is, can a robot autonomously learn an instructional policy from expert demonstration and use it to instruct or collaborate with humans in executing complex tasks in uncertain environments? In this paper we pursue a solution to this problem by leveraging the idea that humans often implicitly decompose a higher-level task into several subgoals whose execution brings the task closer to completion. We propose a Dirichlet process-based non-parametric Inverse Reinforcement Learning (DPMIRL) approach for reward-based unsupervised clustering of the task space into subgoals. This approach is shown to capture the latent subgoals that a human teacher would have used to train a novice. The notion of an action primitive is introduced as a means to communicate the instructional policy to humans in the least complicated manner, and as a computationally efficient tool for segmenting demonstration data. We evaluate our approach through experiments on a hydraulically actuated scale model of an excavator and compare the different teaching strategies used by the robot.
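A Dirichlet process lets the number of subgoals grow with the data instead of being fixed in advance. The partition prior it induces can be illustrated with the Chinese restaurant process, in which each new demonstration segment joins an existing subgoal with probability proportional to that subgoal's size, or opens a new one with probability proportional to a concentration parameter `alpha`. This is a hedged sketch of that prior only: the function name and parameters are hypothetical, and it omits the reward-based IRL likelihood that DPMIRL uses to score each subgoal.

```python
import random
from typing import List


def crp_assignments(n_segments: int, alpha: float, seed: int = 0) -> List[int]:
    """Sample subgoal labels for n segments from a Chinese restaurant process.

    Prior only (hypothetical sketch): no reward likelihood is involved.
    """
    rng = random.Random(seed)
    labels: List[int] = []
    sizes: List[int] = []  # sizes[k] = number of segments assigned to subgoal k
    for _ in range(n_segments):
        # Existing subgoal k has weight sizes[k]; a new subgoal has weight alpha.
        # random.choices normalizes the weights, giving n_k / (i + alpha)
        # and alpha / (i + alpha) for segment i.
        weights = sizes + [alpha]
        k = rng.choices(range(len(weights)), weights=weights)[0]
        if k == len(sizes):
            sizes.append(1)  # open a new subgoal
        else:
            sizes[k] += 1
        labels.append(k)
    return labels
```

Larger values of `alpha` tend to produce more, smaller subgoals; in a full DP mixture model, these prior weights would be multiplied by how well each subgoal's reward explains the segment before sampling.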