Charles Abramson

Mutual Reinforcement Learning

Aug 07, 2019

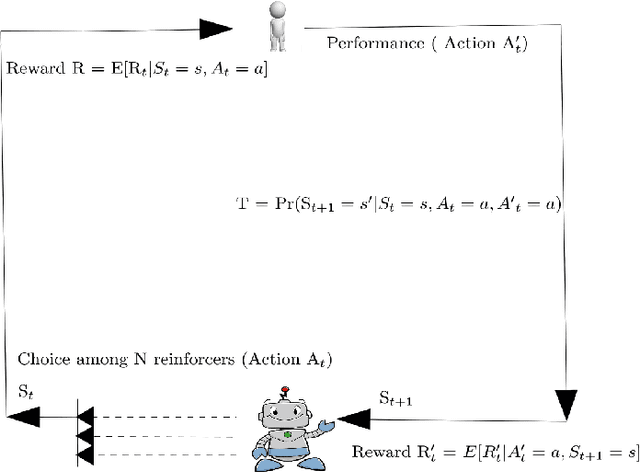

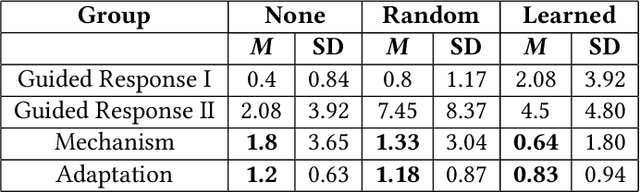

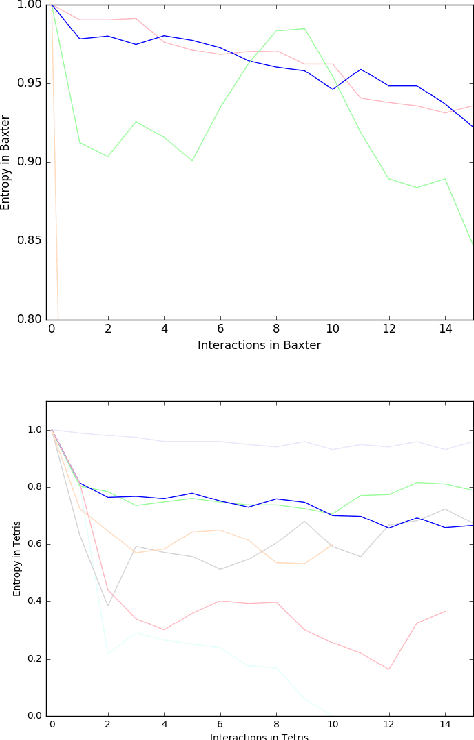

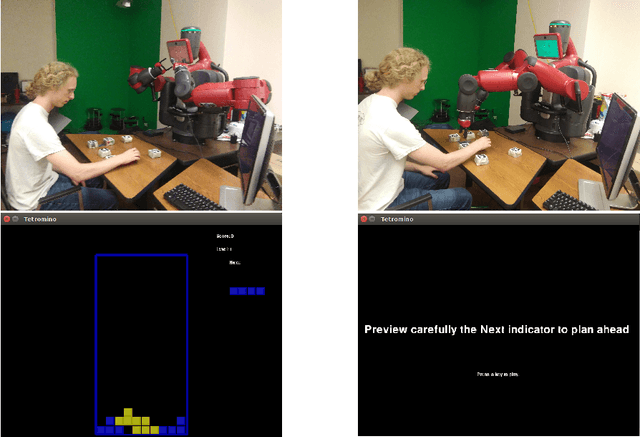

Abstract:Recently, collaborative robots have begun to train humans to achieve complex tasks, and the mutual information exchange between them can lead to successful robot-human collaborations. In this paper we demonstrate the application and effectiveness of a new approach called mutual reinforcement learning (MRL), where both humans and autonomous agents act as reinforcement learners in a skill transfer scenario over continuous communication and feedback. An autonomous agent initially acts as an instructor who can teach a novice human participant complex skills using the MRL strategy. While teaching skills in a physical (block-building) ($n=34$) or simulated (Tetris) environment ($n=31$), the expert tries to identify appropriate reward channels preferred by each individual and adapts itself accordingly using an exploration-exploitation strategy. These reward channel preferences can identify important behaviors of the human participants, because they may well exercise the same behaviors in similar situations later. In this way, skill transfer takes place between an expert system and a novice human operator. We divided the subject population into three groups and observed the skill transfer phenomenon, analyzing it with Simpson"s psychometric model. 5-point Likert scales were also used to identify the cognitive models of the human participants. We obtained a shared cognitive model which not only improves human cognition but enhances the robot's cognitive strategy to understand the mental model of its human partners while building a successful robot-human collaborative framework.

Can Co-robots Learn to Teach?

Nov 22, 2016

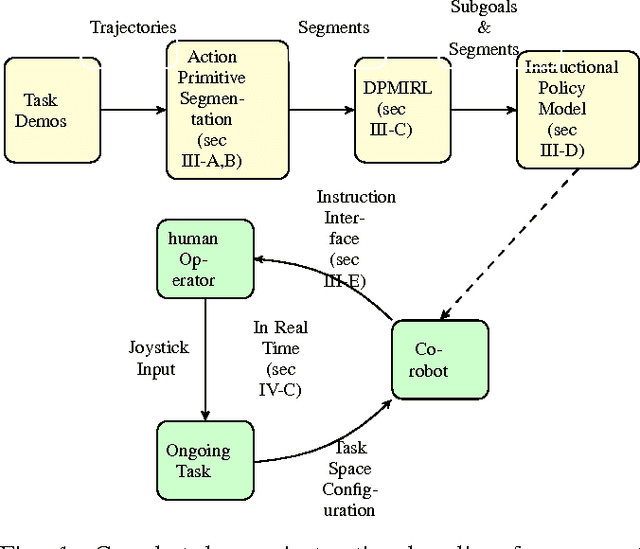

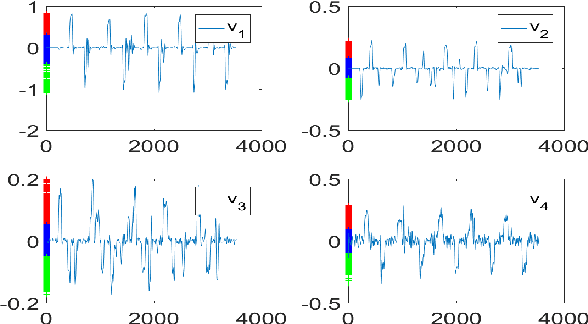

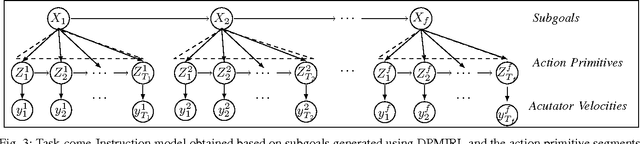

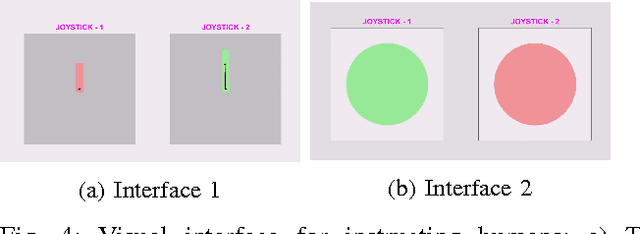

Abstract:We explore beyond existing work on learning from demonstration by asking the question: Can robots learn to teach?, that is, can a robot autonomously learn an instructional policy from expert demonstration and use it to instruct or collaborate with humans in executing complex tasks in uncertain environments? In this paper we pursue a solution to this problem by leveraging the idea that humans often implicitly decompose a higher level task into several subgoals whose execution brings the task closer to completion. We propose Dirichlet process based non-parametric Inverse Reinforcement Learning (DPMIRL) approach for reward based unsupervised clustering of task space into subgoals. This approach is shown to capture the latent subgoals that a human teacher would have utilized to train a novice. The notion of action primitive is introduced as the means to communicate instruction policy to humans in the least complicated manner, and as a computationally efficient tool to segment demonstration data. We evaluate our approach through experiments on hydraulic actuated scaled model of an excavator and evaluate and compare different teaching strategies utilized by the robot.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge