Christian J. Mahoney

Application of Deep Learning in Recognizing Bates Numbers and Confidentiality Stamping from Images

Feb 05, 2021

Abstract: In eDiscovery, it is critical to ensure that each page produced in legal proceedings conforms with the requirements of court or government agency production requests. Errors in productions could have severe consequences in a case, putting a party in an adverse position. The volume of pages produced continues to increase, and tremendous time and effort have been spent on quality control of document productions. This has historically been a manual and laborious process. This paper demonstrates a novel automated production quality control application that leverages deep learning-based image recognition technology to extract Bates Number and Confidentiality Stamping from legal case production images and validate their correctness. The effectiveness of the method is verified with an experiment using real-world production data.
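The validation step mentioned in the abstract can be illustrated with a minimal sketch. It assumes the Bates stamps have already been extracted from the page images as strings, and that a stamp is an uppercase prefix followed by a number that increases by one per page. The stamp format and the names `parse_bates` and `find_bates_errors` are hypothetical and are not taken from the paper.

```python
import re
from typing import List, Optional, Tuple

# Assumed stamp format: uppercase prefix, optional separator, digits (e.g. "ABC000123").
BATES_PATTERN = re.compile(r"^([A-Z]+)[-_ ]?(\d+)$")


def parse_bates(stamp: str) -> Optional[Tuple[str, int]]:
    """Split a stamp into (prefix, number); return None if it does not match."""
    match = BATES_PATTERN.match(stamp.strip())
    if match is None:
        return None
    return match.group(1), int(match.group(2))


def find_bates_errors(stamps: List[str]) -> List[Tuple[int, str]]:
    """Return (page, problem) pairs for stamps that break the expected sequence."""
    errors: List[Tuple[int, str]] = []
    previous: Optional[Tuple[str, int]] = None
    for page, stamp in enumerate(stamps, start=1):
        parsed = parse_bates(stamp)
        if parsed is None:
            errors.append((page, "unreadable or malformed stamp"))
            previous = None  # cannot check the next page against this one
            continue
        if previous is not None:
            prefix, number = parsed
            if prefix != previous[0]:
                errors.append((page, "prefix changed"))
            elif number != previous[1] + 1:
                errors.append((page, "number out of sequence"))
        previous = parsed
    return errors


# Hypothetical extracted stamps, one per page.
stamps = ["ABC000001", "ABC000002", "ABC000004", "??", "ABC000006"]
errors = find_bates_errors(stamps)
```

In this sketch a malformed stamp resets the sequence check, so one unreadable page is reported once rather than also flagging the page that follows it.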

A Framework for Explainable Text Classification in Legal Document Review

Dec 19, 2019

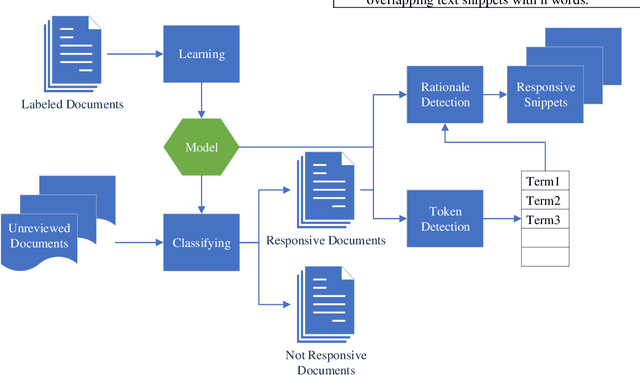

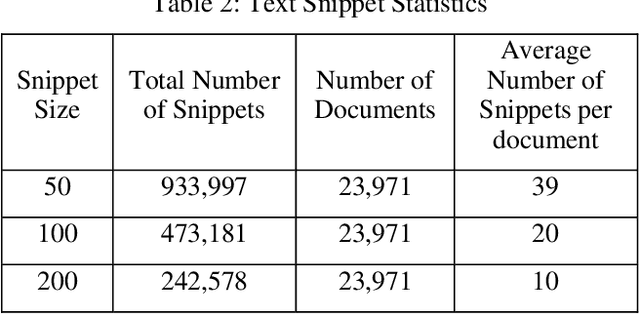

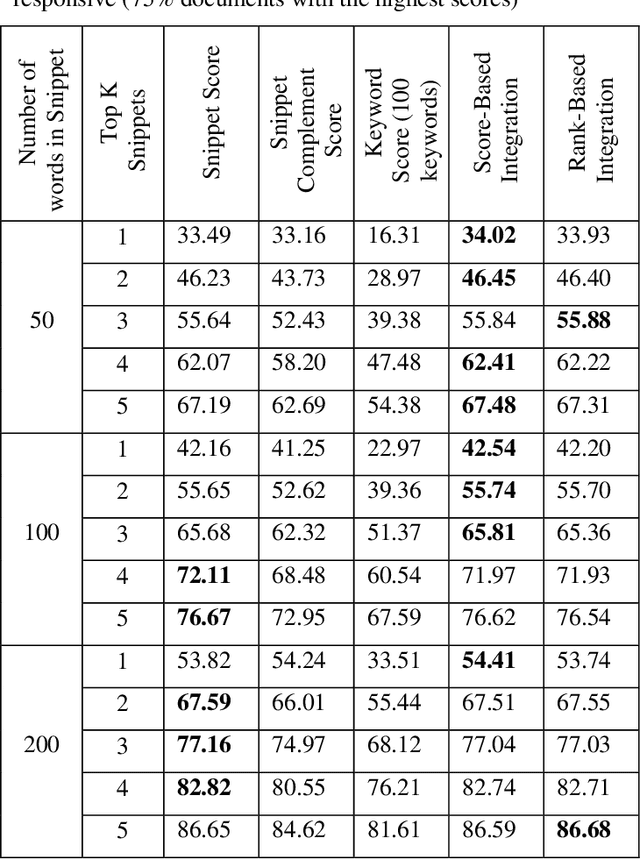

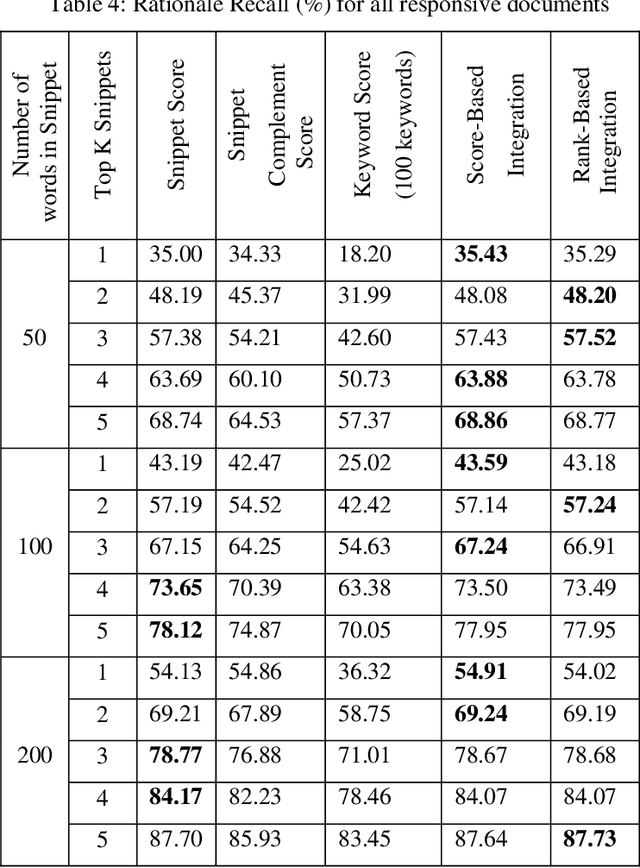

Abstract: Companies regularly spend millions of dollars producing electronically-stored documents in legal matters. Recently, parties on both sides of the 'legal aisle' have been accepting the use of machine learning techniques like text classification to cull massive volumes of data and to identify responsive documents for use in these matters. While text classification is regularly used to reduce discovery costs in legal matters, it also faces a peculiar perception challenge: amongst lawyers, this technology is sometimes looked upon as a "black box", with little information provided for attorneys to understand why documents are classified as responsive. In recent years, a group of AI and ML researchers have been actively researching Explainable AI, in which actions or decisions are human-understandable. In legal document review scenarios, a document can be identified as responsive if one or more of its text snippets are deemed responsive. In these scenarios, if text classification can be used to locate these snippets, then attorneys could easily evaluate the model's classification decision. When deployed with defined and explainable results, text classification can drastically enhance the overall quality and speed of the review process by reducing review time. Moreover, explainable predictive coding provides lawyers with greater confidence in the results of that supervised learning task. This paper describes a framework for explainable text classification as a valuable tool in legal services: for enhancing the quality and efficiency of legal document review and for assisting in locating responsive snippets within responsive documents. This framework has been implemented in our legal analytics product, which has been used in hundreds of legal matters. We also report our experimental results using the data from an actual legal matter that used this type of document review.
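The snippet-level idea in the abstract (a document is responsive if at least one of its snippets is responsive) can be sketched as follows. This is an illustration under stated assumptions, not the framework described in the paper: the fixed-size word windows, the 0.5 threshold, and the keyword-based `toy_scorer` are all hypothetical stand-ins for a trained snippet classifier.

```python
from typing import Callable, List, Tuple


def split_into_snippets(text: str, size: int = 8) -> List[str]:
    """Cut a document into consecutive windows of `size` words (assumed scheme)."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def explain_document(
    text: str,
    score_snippet: Callable[[str], float],
    threshold: float = 0.5,
) -> Tuple[bool, List[Tuple[float, str]]]:
    """Return (is_responsive, evidence), where evidence lists the snippets
    scoring at or above the threshold, highest score first."""
    scored = [(score_snippet(s), s) for s in split_into_snippets(text)]
    evidence = [(score, s) for score, s in scored if score >= threshold]
    evidence.sort(key=lambda pair: pair[0], reverse=True)
    # The document is responsive if any single snippet is responsive.
    return bool(evidence), evidence


def toy_scorer(snippet: str) -> float:
    """Hypothetical stand-in for a trained model: 1.0 if a key term appears."""
    terms = {"merger", "pricing"}
    words = {w.strip(".,").lower() for w in snippet.split()}
    return 1.0 if words & terms else 0.0


text = (
    "Lunch is at noon on Friday in the cafeteria. "
    "The merger pricing terms were discussed privately yesterday."
)
is_responsive, evidence = explain_document(text, toy_scorer)
```

Returning the matching snippets alongside the document-level decision is what lets a reviewer check why a document was flagged instead of reading it in full.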

Evaluation of Seed Set Selection Approaches and Active Learning Strategies in Predictive Coding

Jun 11, 2019

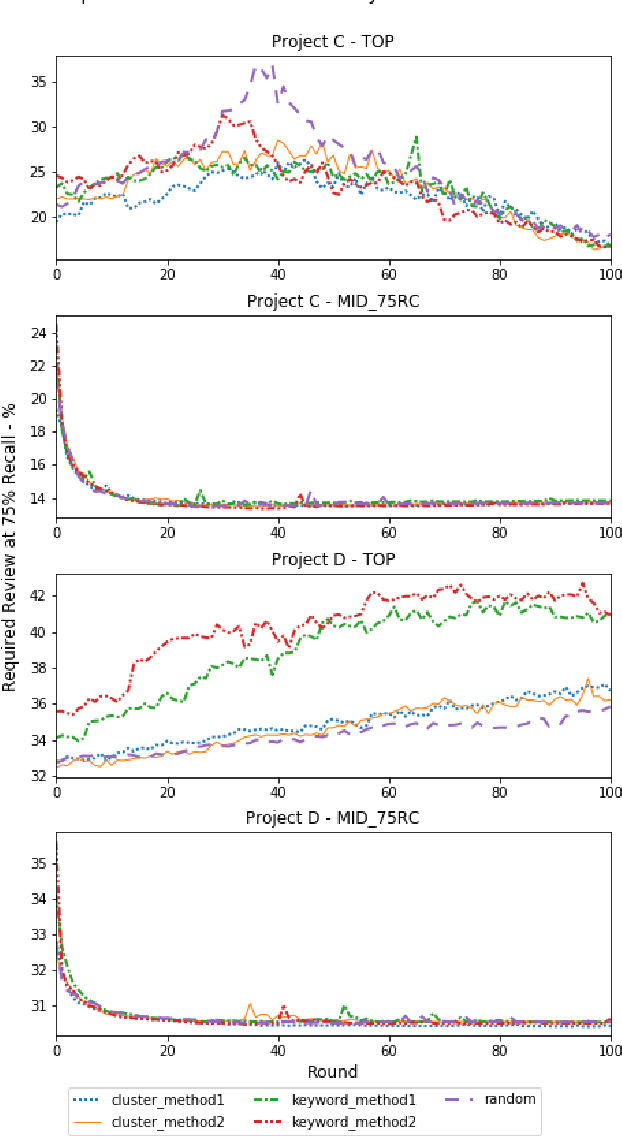

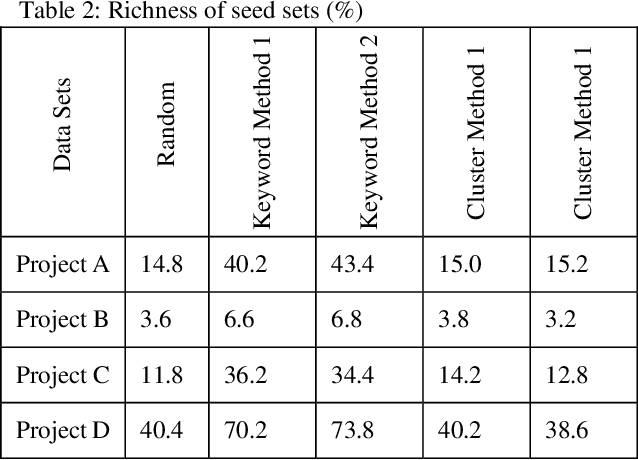

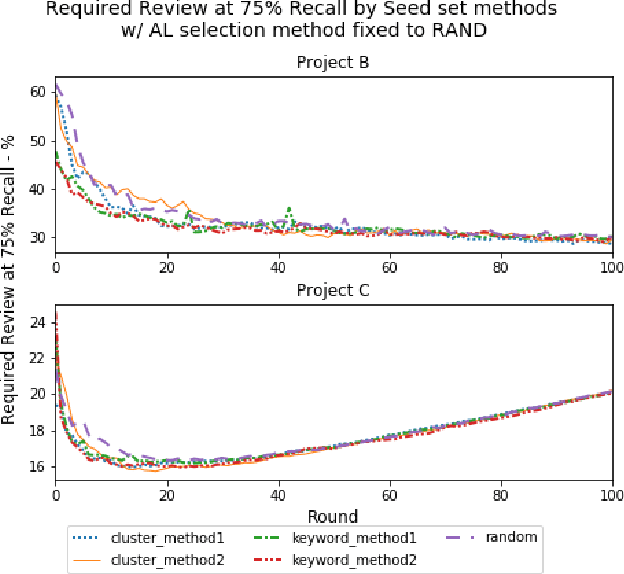

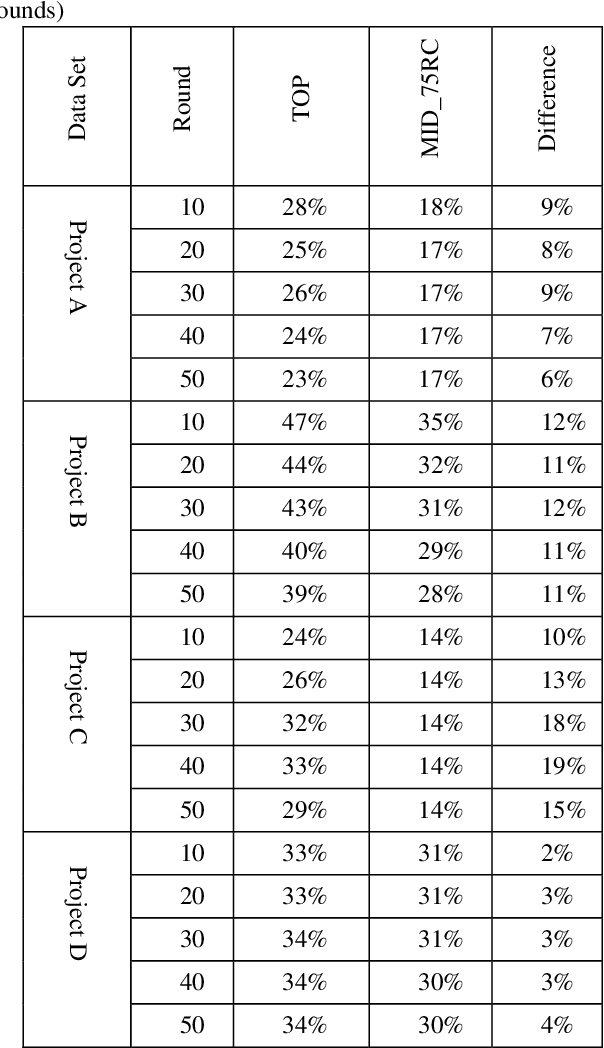

Abstract: Active learning is a popular methodology in text classification - known in the legal domain as "predictive coding" or "Technology Assisted Review" or "TAR" - due to its potential to minimize the review effort required to build effective classifiers. In this study, we use extensive experimentation to examine the impact of popular seed set selection strategies in active learning, within a predictive coding exercise, and evaluate different active learning strategies against well-researched continuous active learning strategies for the purpose of determining efficient training methods for classifying large populations quickly and precisely. We study how random sampling, keyword models, and clustering-based seed set selection strategies, combined with top-ranked, uncertain, random, recall-inspired, and hybrid active learning document selection strategies, affect the performance of active learning for predictive coding. We use the percentage of documents requiring review to reach 75% recall as the "benchmark" metric to evaluate and compare our approaches. In most cases we find that seed set selection methods have a minor impact, though they do show significant impact in lower richness data sets or when choosing a top-ranked active learning selection strategy. Our results also show that active learning selection strategies based on uncertainty, random, or 75% recall selection have the potential to reach the optimum active learning round much earlier than the popular continuous active learning approach (top-ranked selection). The results of our research shed light on the impact of active learning seed set selection strategies and also on the effectiveness of the selection strategies for the following learning rounds. Legal practitioners can use the results of this study to enhance the efficiency, precision, and simplicity of their predictive coding process.
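The benchmark metric named in the abstract, the percentage of documents requiring review to reach 75% recall, can be computed from a ranked list as in the sketch below. It assumes documents are reviewed in descending order of model score and that ground-truth labels are available for every document. The function name and the sample data are hypothetical, and the paper's exact evaluation procedure may differ.

```python
import math
from typing import Sequence


def review_fraction_at_recall(
    scores: Sequence[float],
    labels: Sequence[int],
    target_recall: float = 0.75,
) -> float:
    """Fraction of the population reviewed, in score order, to reach target recall.

    `labels` holds 1 for responsive and 0 for non-responsive documents.
    """
    total_responsive = sum(labels)
    if total_responsive == 0:
        raise ValueError("labels contain no responsive documents")
    # Number of responsive documents that must be found to hit the target.
    needed = math.ceil(target_recall * total_responsive)
    # Review order: highest model score first.
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    found = 0
    for depth, (_, label) in enumerate(ranked, start=1):
        found += label
        if found >= needed:
            return depth / len(ranked)
    return 1.0


# Hypothetical scores and labels for eight documents, four of them responsive.
scores = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2]
labels = [1, 0, 1, 1, 0, 0, 1, 0]
fraction = review_fraction_at_recall(scores, labels)
```

A lower value means less review effort for the same recall, which makes the figure usable for comparing seed set and selection strategies on the same population.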

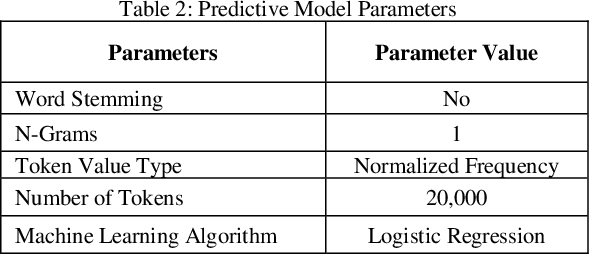

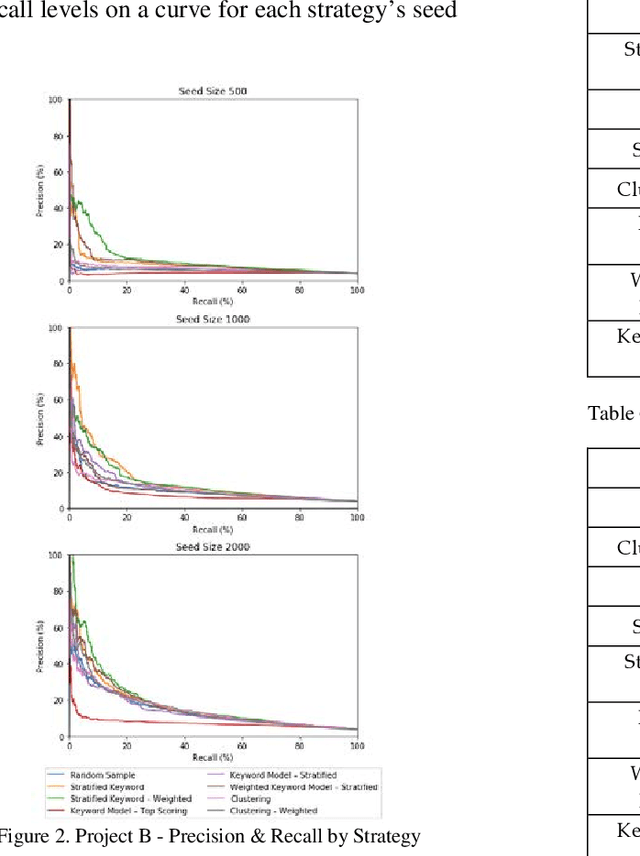

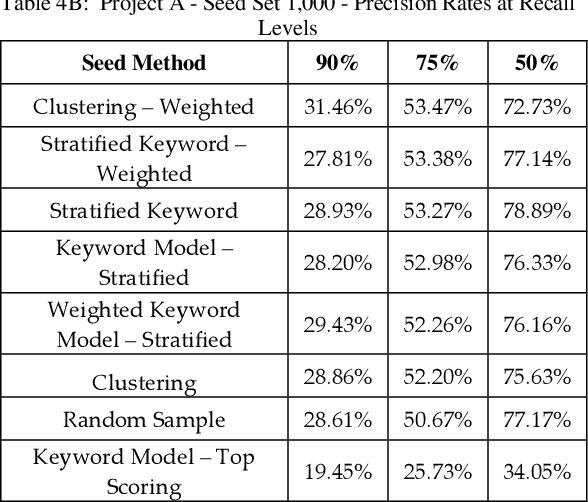

Empirical Evaluations of Seed Set Selection Strategies for Predictive Coding

Mar 21, 2019

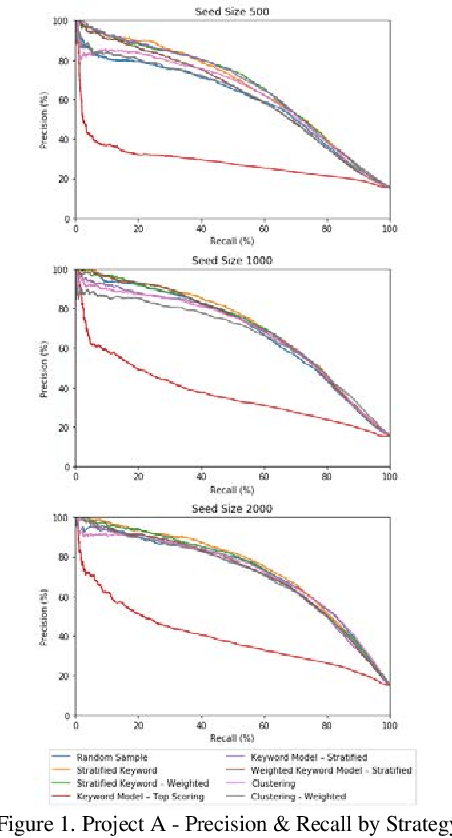

Abstract: Training documents have a significant impact on the performance of predictive models in the legal domain. Yet, there is limited research that explores the effectiveness of the training document selection strategy - in particular, the strategy used to select the seed set, or the set of documents an attorney reviews first to establish an initial model. Since there is limited research on this important component of predictive coding, the authors of this paper set out to identify strategies that consistently perform well. Our research demonstrated that the seed set selection strategy can have a significant impact on the precision of a predictive model. Equipping attorneys with the results of this study will allow them to initiate the most effective predictive modeling process to comb through the terabytes of data typically present in modern litigation. This study used documents from four actual legal cases to evaluate eight different seed set selection strategies. Attorneys can use the results contained within this paper to enhance their approach to predictive coding.
