Chengzhi Zhang

Quantifying the Knowledge Proximity Between Academic and Industry Research: An Entity and Semantic Perspective

Feb 05, 2026 Abstract: Academia and industry are characterized by a reciprocal shaping and dynamic feedback mechanism. Despite distinct institutional logics, they have adapted closely in collaborative publishing and talent mobility, demonstrating tension between institutional divergence and intensive collaboration. Existing studies on their knowledge proximity mainly rely on macro indicators such as the number of collaborative papers or patents, lacking an analysis of knowledge units in the literature. This has led to an insufficient grasp of fine-grained knowledge proximity between industry and academia, potentially undermining collaboration frameworks and resource allocation efficiency. To remedy this limitation, this study quantifies the trajectory of academia-industry co-evolution through fine-grained entities and semantic space. In the entity measurement part, we extract fine-grained knowledge entities via pre-trained models, measure sequence overlaps using cosine similarity, and analyze topological features through complex network analysis. At the semantic level, we employ unsupervised contrastive learning to quantify convergence in semantic spaces by measuring cross-institutional textual similarities. Finally, we use citation distribution patterns to examine correlations between bidirectional knowledge flows and similarity. The analysis reveals that knowledge proximity between academia and industry rises, particularly following technological change. This provides textual evidence of bidirectional adaptation in co-evolution. Additionally, academia's knowledge dominance weakens during technological paradigm shifts. The dataset and code for this paper can be accessed at https://github.com/tinierZhao/Academic-Industrial-associations.
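
The entity-overlap step in the abstract above (cosine similarity over extracted entities) can be illustrated with a minimal sketch. It assumes each side is represented as a bag of entity mentions; the entity strings and the `cosine_similarity` helper are hypothetical and are not taken from the paper's repository.

```python
import math
from collections import Counter


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse entity-count vectors."""
    # Only entities present on both sides contribute to the dot product.
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Hypothetical entity mentions extracted from each side's papers.
academia = Counter(["transformer", "bert", "attention", "bert"])
industry = Counter(["transformer", "bert", "quantization"])

similarity = cosine_similarity(academia, industry)
print(f"entity-level proximity: {similarity:.4f}")
```

Computing this value per year would give one possible proximity trajectory; how the paper actually builds its vectors may differ.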

Enhancing Academic Paper Recommendations Using Fine-Grained Knowledge Entities and Multifaceted Document Embeddings

Jan 27, 2026 Abstract: In the era of explosive growth in academic literature, the burden of literature review on scholars is increasing. Proactively recommending academic papers that align with scholars' literature needs in the research process has become one of the crucial pathways to enhance research efficiency and stimulate innovative thinking. Current academic paper recommendation systems primarily focus on broad and coarse-grained suggestions based on general topic or field similarities. While these systems effectively identify related literature, they fall short in addressing scholars' more specific and fine-grained needs, such as locating papers that utilize particular research methods or tackle distinct research tasks within the same topic. To meet the diverse and specific literature needs of scholars in the research process, this paper proposes a novel academic paper recommendation method. This approach embeds multidimensional information by integrating new types of fine-grained knowledge entities, document titles and abstracts, and citation data. Recommendations are then generated by calculating the similarity between combined paper vectors. The proposed recommendation method was evaluated using the STM-KG dataset, a knowledge graph that incorporates scientific concepts derived from papers across ten distinct domains. The experimental results indicate that our method outperforms baseline models, achieving an average precision of 27.3% among the top 50 recommendations. This represents an improvement of 6.7% over existing approaches.
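
As a rough illustration of ranking by similarity between combined paper vectors and scoring the top-k list, the sketch below concatenates three L2-normalized facet vectors and computes precision at k. Concatenation is an assumption (the abstract does not say how the facets are combined), and all vectors, paper IDs, and function names are hypothetical.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length; zero vectors are returned unchanged."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else list(vec)


def combine(entity_vec, text_vec, citation_vec):
    """Assumed combination: concatenate the normalized facet vectors."""
    return (l2_normalize(entity_vec)
            + l2_normalize(text_vec)
            + l2_normalize(citation_vec))


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def recommend(query_vec, candidates, k):
    """Return the k candidate IDs most similar to the query vector."""
    ranked = sorted(candidates,
                    key=lambda pid: cosine(query_vec, candidates[pid]),
                    reverse=True)
    return ranked[:k]


def precision_at_k(recommended, relevant, k):
    """Fraction of the top-k recommendations that are relevant."""
    return sum(1 for pid in recommended[:k] if pid in relevant) / k


# Hypothetical two-dimensional facets for a query paper and three candidates.
query = combine([1, 0], [1, 0], [1, 0])
candidates = {
    "p1": combine([1, 0], [1, 0], [1, 0]),
    "p2": combine([1, 0], [0, 1], [1, 0]),
    "p3": combine([0, 1], [0, 1], [0, 1]),
}
top = recommend(query, candidates, k=2)
score = precision_at_k(top, relevant={"p1", "p3"}, k=2)
print(top, score)
```

Normalizing each facet before concatenation keeps any single facet from dominating the cosine score; weighting the facets differently would be an equally plausible design.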

The Effect of Gender Diversity on Scientific Team Impact: A Team Roles Perspective

Dec 29, 2025 Abstract: The influence of gender diversity on the success of scientific teams is of great interest to academia. However, prior findings remain inconsistent, and most studies operationalize diversity in aggregate terms, overlooking internal role differentiation. This limitation obscures a more nuanced understanding of how gender diversity shapes team impact. In particular, the effect of gender diversity across different team roles remains poorly understood. To this end, we define a scientific team as all coauthors of a paper and measure team impact through five-year citation counts. Using author contribution statements, we classify members into leadership and support roles. Drawing on more than 130,000 papers from PLOS journals, most of which are in biomedical-related disciplines, we employ multivariable regression to examine the association between gender diversity in these roles and team impact. Furthermore, we apply a threshold regression model to investigate how team size moderates this relationship. The results show that (1) the relationship between gender diversity and team impact follows an inverted U-shape for both leadership and support groups; (2) teams with an all-female leadership group and an all-male support group achieve higher impact than other team types. Interestingly, (3) the effect of leadership-group gender diversity is significantly negative for small teams but becomes positive and statistically insignificant in large teams. In contrast, the estimates for support-group gender diversity remain significant and positive, regardless of team size.

Automated Generation of Research Workflows from Academic Papers: A Full-text Mining Framework

Sep 16, 2025 Abstract: The automated generation of research workflows is essential for improving the reproducibility of research and accelerating the paradigm of "AI for Science". However, existing methods typically extract merely fragmented procedural components and thus fail to capture complete research workflows. To address this gap, we propose an end-to-end framework that generates comprehensive, structured research workflows by mining full-text academic papers. As a case study in the Natural Language Processing (NLP) domain, our paragraph-centric approach first employs Positive-Unlabeled (PU) Learning with SciBERT to identify workflow-descriptive paragraphs, achieving an F1-score of 0.9772. Subsequently, we utilize Flan-T5 with prompt learning to generate workflow phrases from these paragraphs, yielding ROUGE-1, ROUGE-2, and ROUGE-L scores of 0.4543, 0.2877, and 0.4427, respectively. These phrases are then systematically categorized into data preparation, data processing, and data analysis stages using ChatGPT with few-shot learning, achieving a classification precision of 0.958. By mapping categorized phrases to their locations in the documents, we finally generate readable visual flowcharts of the entire research workflows. This approach facilitates the analysis of workflows derived from an NLP corpus and reveals key methodological shifts over the past two decades, including the increasing emphasis on data analysis and the transition from feature engineering to ablation studies. Our work offers a validated technical framework for automated workflow generation, along with a novel, process-oriented perspective for the empirical investigation of evolving scientific paradigms. Source code and data are available at: https://github.com/ZH-heng/research_workflow.
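
For readers unfamiliar with the ROUGE-1 figure quoted above, the following is a simplified sketch of the metric as clipped unigram overlap. It assumes whitespace tokenization with no stemming, so it will not reproduce scores from a full ROUGE implementation; the example phrases and the `rouge1` function are hypothetical.

```python
from collections import Counter


def rouge1(reference: str, candidate: str) -> dict:
    """Simplified ROUGE-1: clipped unigram overlap, no stemming."""
    ref_counts = Counter(reference.lower().split())
    cand_counts = Counter(candidate.lower().split())
    # Counter intersection keeps the minimum count per token (clipping).
    overlap = sum((ref_counts & cand_counts).values())
    precision = overlap / max(sum(cand_counts.values()), 1)
    recall = overlap / max(sum(ref_counts.values()), 1)
    f1 = 2 * precision * recall / (precision + recall) if overlap else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


# Hypothetical reference and generated workflow phrases.
scores = rouge1(
    reference="fine tune scibert on labeled paragraphs",
    candidate="fine tune scibert on paragraphs",
)
print(scores)
```

ROUGE-2 follows the same pattern over bigrams, and ROUGE-L replaces the overlap count with the longest common subsequence length.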

SciNLP: A Domain-Specific Benchmark for Full-Text Scientific Entity and Relation Extraction in NLP

Sep 10, 2025 Abstract: Structured information extraction from scientific literature is crucial for capturing core concepts and emerging trends in specialized fields. While existing datasets aid model development, most focus on specific publication sections due to domain complexity and the high cost of annotating scientific texts. To address this limitation, we introduce SciNLP, a specialized benchmark for full-text entity and relation extraction in the Natural Language Processing (NLP) domain. The dataset comprises 60 manually annotated full-text NLP publications, covering 7,072 entities and 1,826 relations. Compared to existing research, SciNLP is the first dataset providing full-text annotations of entities and their relationships in the NLP domain. To validate the effectiveness of SciNLP, we conducted comparative experiments with similar datasets and evaluated the performance of state-of-the-art supervised models on this dataset. Results reveal varying extraction capabilities of existing models across academic texts of different lengths. Cross-comparisons with existing datasets show that SciNLP achieves significant performance improvements on certain baseline models. Using models trained on SciNLP, we implemented automatic construction of a fine-grained knowledge graph for the NLP domain. Our KG has an average node degree of 3.2 per entity, indicating rich semantic topological information that enhances downstream applications. The dataset is publicly available at https://github.com/AKADDC/SciNLP.
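
The average node degree mentioned above can be computed from extracted triples along the following lines. This is a sketch under assumptions: the graph is treated as undirected, repeated links between the same pair of entities are counted once, and the triples and relation labels are hypothetical rather than drawn from SciNLP.

```python
from collections import defaultdict


def average_degree(triples):
    """Mean number of distinct neighbors per entity in an undirected graph."""
    neighbors = defaultdict(set)
    for head, _relation, tail in triples:
        # Each relation links both endpoints, regardless of direction.
        neighbors[head].add(tail)
        neighbors[tail].add(head)
    if not neighbors:
        return 0.0
    return sum(len(n) for n in neighbors.values()) / len(neighbors)


# Hypothetical (head, relation, tail) triples from an extraction model.
triples = [
    ("BERT", "used-for", "NER"),
    ("BERT", "evaluated-on", "CoNLL-2003"),
    ("NER", "evaluated-on", "CoNLL-2003"),
]
avg = average_degree(triples)
print(f"average node degree: {avg:.1f}")
```

Whether the reported 3.2 counts parallel relations or edge direction is not stated in the abstract, so the figure may not be directly comparable to this definition.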

SurveyGen: Quality-Aware Scientific Survey Generation with Large Language Models

Aug 25, 2025 Abstract: Automatic survey generation has emerged as a key task in scientific document processing. While large language models (LLMs) have shown promise in generating survey texts, the lack of standardized evaluation datasets critically hampers rigorous assessment of their performance against human-written surveys. In this work, we present SurveyGen, a large-scale dataset comprising over 4,200 human-written surveys across diverse scientific domains, along with 242,143 cited references and extensive quality-related metadata for both the surveys and the cited papers. Leveraging this resource, we build QUAL-SG, a novel quality-aware framework for survey generation that enhances the standard Retrieval-Augmented Generation (RAG) pipeline by incorporating quality-aware indicators into literature retrieval to assess and select higher-quality source papers. Using this dataset and framework, we systematically evaluate state-of-the-art LLMs under varying levels of human involvement, from fully automatic generation to human-guided writing. Experimental results and human evaluations show that while semi-automatic pipelines can achieve partially competitive outcomes, fully automatic survey generation still suffers from low citation quality and limited critical analysis.

SC4ANM: Identifying Optimal Section Combinations for Automated Novelty Prediction in Academic Papers

May 22, 2025 Abstract: Novelty is a core component of academic papers, and there are multiple perspectives on the assessment of novelty. Existing methods often focus on word or entity combinations, which provide limited insights. The content related to a paper's novelty is typically distributed across different core sections, e.g., Introduction, Methodology and Results. Therefore, exploring the optimal combination of sections for evaluating the novelty of a paper is important for advancing automated novelty assessment. In this paper, we utilize different combinations of sections from academic papers as inputs to drive language models to predict novelty scores. We then analyze the results to determine the optimal section combinations for novelty score prediction. We first employ natural language processing techniques to identify the sectional structure of academic papers, categorizing them into introduction, methods, results, and discussion (IMRaD). Subsequently, we use different combinations of these sections (e.g., introduction and methods) as inputs for pretrained language models (PLMs) and large language models (LLMs), employing novelty scores provided by human expert reviewers as ground truth labels to obtain prediction results. The results indicate that using introduction, results and discussion is most appropriate for assessing the novelty of a paper, while the use of the entire text does not yield significant results. Furthermore, based on the results of the PLMs and LLMs, the introduction and results appear to be the most important sections for the task of novelty score prediction. The code and dataset for this paper can be accessed at https://github.com/njust-winchy/SC4ANM.

Are the confidence scores of reviewers consistent with the review content? Evidence from top conference proceedings in AI

May 21, 2025 Abstract: Peer review is vital in academia for evaluating research quality. Top AI conferences use reviewer confidence scores to ensure review reliability, but existing studies lack fine-grained analysis of text-score consistency, potentially missing key details. This work assesses consistency at word, sentence, and aspect levels using deep learning and NLP conference review data. We employ deep learning to detect hedge sentences and aspects, then analyze report length, hedge word/sentence frequency, aspect mentions, and sentiment to evaluate text-score alignment. Correlation, significance, and regression tests examine confidence scores' impact on paper outcomes. Results show high text-score consistency across all levels, with regression revealing higher confidence scores correlate with paper rejection, validating expert assessments and peer review fairness.

Enhancing Abstractive Summarization of Scientific Papers Using Structure Information

May 20, 2025

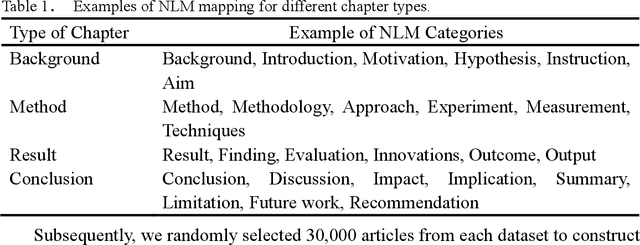

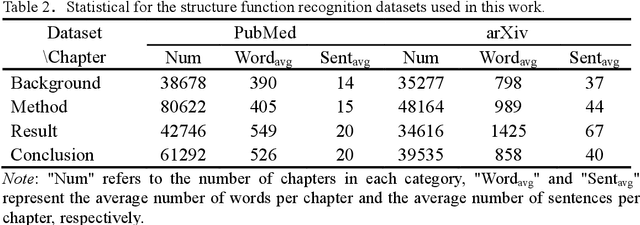

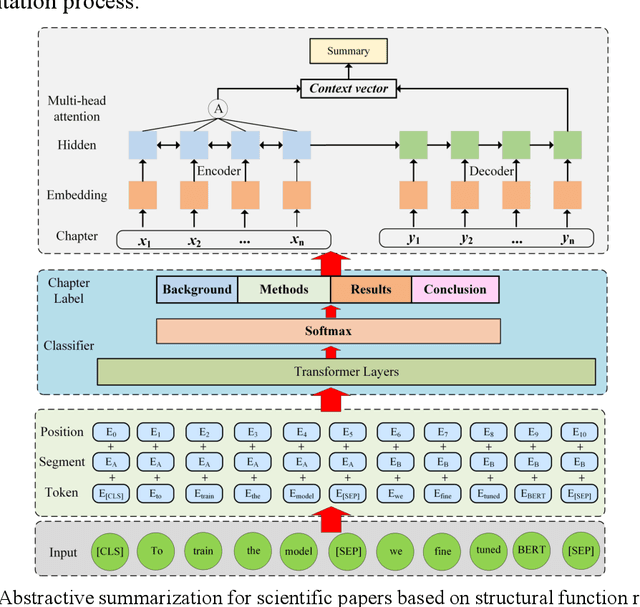

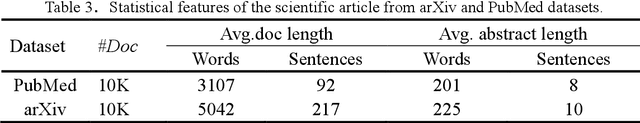

Abstract: Abstractive summarization of scientific papers has always been a research focus, yet existing methods face two main challenges. First, most summarization models rely on Encoder-Decoder architectures that treat papers as sequences of words and thus fail to fully capture the structured information inherent in scientific papers. Second, existing research often uses keyword mapping or feature engineering to identify the structural information, but these methods struggle with the structural flexibility of scientific papers and lack robustness across different disciplines. To address these challenges, we propose a two-stage abstractive summarization framework that leverages automatic recognition of structural functions within scientific papers. In the first stage, we standardize chapter titles from numerous scientific papers and construct a large-scale dataset for structural function recognition. A classifier is then trained to automatically identify the key structural components (e.g., Background, Methods, Results, Discussion), which provides a foundation for generating more balanced summaries. In the second stage, we employ Longformer to capture rich contextual relationships across sections and generate context-aware summaries. Experiments conducted on two domain-specific scientific paper summarization datasets demonstrate that our method outperforms advanced baselines and generates more comprehensive summaries. The code and dataset can be accessed at https://github.com/tongbao96/code-for-SFR-AS.

Examining Linguistic Shifts in Academic Writing Before and After the Launch of ChatGPT: A Study on Preprint Papers

May 18, 2025 Abstract: Large Language Models (LLMs), such as ChatGPT, have prompted academic concerns about their impact on academic writing. Existing studies have primarily examined LLM usage in academic writing through quantitative approaches, such as word frequency statistics and probability-based analyses. However, few have systematically examined the potential impact of LLMs on the linguistic characteristics of academic writing. To address this gap, we conducted a large-scale analysis across 823,798 abstracts published in the last decade from the arXiv dataset. Through the linguistic analysis of features such as the frequency of LLM-preferred words, lexical complexity, syntactic complexity, cohesion, readability and sentiment, the results indicate a significant increase in the proportion of LLM-preferred words in abstracts, revealing the widespread influence of LLMs on academic writing. Additionally, we observed an increase in lexical complexity and sentiment in the abstracts, but a decrease in syntactic complexity, suggesting that LLMs introduce more new vocabulary and simplify sentence structure. However, the significant decrease in cohesion and readability indicates that abstracts have fewer connecting words and are becoming more difficult to read. Moreover, our analysis reveals that scholars with weaker English proficiency were more likely to use LLMs for academic writing, and focused on improving the overall logic and fluency of the abstracts. Finally, at the discipline level, we found that scholars in Computer Science showed more pronounced changes in writing style, while the changes in Mathematics were minimal.
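
One of the features named above, the proportion of LLM-preferred words in an abstract, could be measured roughly as follows. The word list here is a hypothetical placeholder (the abstract does not enumerate the study's vocabulary), and the tokenizer is a simple lowercase alphabetic split.

```python
import re

# Hypothetical placeholder list; the study's actual vocabulary is not given here.
LLM_PREFERRED = {"delve", "intricate", "showcase"}


def preferred_word_share(text: str, vocabulary=LLM_PREFERRED) -> float:
    """Share of tokens in the text that belong to the given vocabulary."""
    tokens = re.findall(r"[a-z]+", text.lower())
    if not tokens:
        return 0.0
    return sum(1 for token in tokens if token in vocabulary) / len(tokens)


share = preferred_word_share("We delve into the intricate dynamics of networks.")
print(f"LLM-preferred share: {share:.2f}")
```

Averaging this share over abstracts grouped by submission month would give a simple before-and-after comparison around the ChatGPT launch date.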
