Chenchu Xu

TransCC: Transformer Network for Coronary Artery CCTA Segmentation

Oct 07, 2023

Abstract: The accurate segmentation of Coronary Computed Tomography Angiography (CCTA) images holds substantial clinical value for the early detection and treatment of Coronary Heart Disease (CHD). The Transformer, utilizing a self-attention mechanism, has demonstrated commendable performance in the realm of medical image processing. However, challenges persist in coronary segmentation tasks due to (1) the damage to target local structures caused by fixed-size image patch embedding, and (2) the critical role of both global and local features in medical image segmentation tasks. To address these challenges, we propose a deep learning framework, TransCC, that effectively amalgamates the Transformer and convolutional neural networks for CCTA segmentation. Firstly, we introduce a Feature Interaction Extraction (FIE) module designed to capture the characteristics of image patches, thereby circumventing the loss of semantic information inherent in the original method. Secondly, we devise a Multilayer Enhanced Perceptron (MEP) to augment attention to local information within spatial dimensions, serving as a complement to the self-attention mechanism. Experimental results indicate that TransCC outperforms existing methods in segmentation performance, boasting an average Dice coefficient of 0.730 and an average Intersection over Union (IoU) of 0.582. These results underscore the effectiveness of TransCC in CCTA image segmentation.
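The Dice coefficient and IoU reported above are standard overlap metrics for binary segmentation masks. The following is a minimal sketch of how they are commonly computed for a single predicted mask and its ground truth; the function name `dice_and_iou`, the nested-list mask format, and the empty-mask convention are assumptions made for illustration, not the evaluation code used for TransCC.

```python
def dice_and_iou(pred, target):
    """Return (Dice, IoU) for two binary masks given as nested rows of 0/1.

    Hypothetical helper for illustration only.
    """
    # Flatten both masks into 1-D lists of ints.
    p = [int(v) for row in pred for v in row]
    t = [int(v) for row in target for v in row]
    if len(p) != len(t):
        raise ValueError("masks must contain the same number of pixels")

    intersection = sum(a & b for a, b in zip(p, t))  # pixels set in both masks
    total = sum(p) + sum(t)                          # |P| + |T|
    union = total - intersection                     # |P ∪ T|

    if total == 0:
        # Assumed convention: two empty masks count as a perfect match.
        return 1.0, 1.0
    return 2 * intersection / total, intersection / union


pred = [[1, 1, 0],
        [0, 1, 0]]
target = [[1, 0, 0],
          [0, 1, 1]]
dice, iou = dice_and_iou(pred, target)
print(f"Dice={dice:.3f}, IoU={iou:.3f}")
```

For a single pair of masks the two metrics are tied by IoU = Dice / (2 - Dice). Averages taken over many images, such as those quoted in the abstract, need not satisfy this identity exactly.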

Direct detection of pixel-level myocardial infarction areas via a deep-learning algorithm

Jun 10, 2017

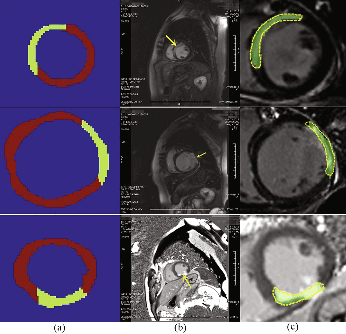

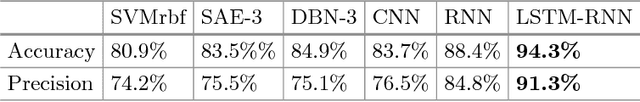

Abstract: Accurate detection of the myocardial infarction (MI) area is crucial for early diagnosis planning and follow-up management. In this study, we propose an end-to-end deep-learning algorithm framework (OF-RNN) to accurately detect the MI area at the pixel level. Our OF-RNN consists of three different function layers: the heart localization layers, which can accurately and automatically crop the region-of-interest (ROI) sequences, including the left ventricle, using the whole cardiac magnetic resonance image sequences; the motion statistical layers, which are used to build a time-series architecture to capture two types of motion features (at the pixel level) by integrating the local motion features generated by long short-term memory-recurrent neural networks and the global motion features generated by deep optical flows from the whole ROI sequence, which can effectively characterize myocardial physiologic function; and the fully connected discriminate layers, which use stacked auto-encoders to further learn these features and a softmax classifier to build the correspondences from the motion features to the tissue identities (infarction or not) for each pixel. Through the seamless connection of each layer, our OF-RNN can obtain the area, position, and shape of the MI for each patient. Our proposed framework yielded an overall classification accuracy of 94.35% at the pixel level, from 114 clinical subjects. These results indicate the potential of our proposed method in aiding standardized MI assessments.
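As a rough illustration of the final step described above, the sketch below applies a softmax to two-class scores for each pixel, takes the most probable class as the tissue label (0 = normal, 1 = infarction), and measures pixel-level accuracy against reference labels. The names `softmax` and `pixel_accuracy`, the class encoding, and the toy scores are assumptions for illustration; this is not the OF-RNN implementation, which learns its features with stacked auto-encoders before classification.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of class scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def pixel_accuracy(pixel_scores, labels):
    """Fraction of pixels whose most probable class matches the label.

    pixel_scores: one [score_normal, score_infarct] pair per pixel (assumed layout).
    labels: one 0/1 reference label per pixel.
    """
    if len(pixel_scores) != len(labels):
        raise ValueError("need exactly one label per pixel")
    correct = 0
    for scores, label in zip(pixel_scores, labels):
        probs = softmax(scores)
        predicted = probs.index(max(probs))  # argmax over the two classes
        correct += int(predicted == label)
    return correct / len(labels)


# Toy example: four pixels with made-up scores and reference labels.
scores = [[2.0, 0.5], [0.1, 1.2], [0.3, 0.2], [-1.0, 1.0]]
labels = [0, 1, 1, 1]
print(pixel_accuracy(scores, labels))
```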
