Changzhen Ji

A Neural Conversation Generation Model via Equivalent Shared Memory Investigation

Aug 20, 2021

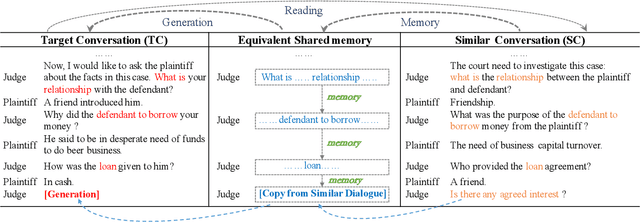

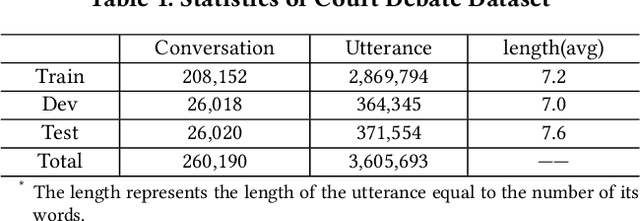

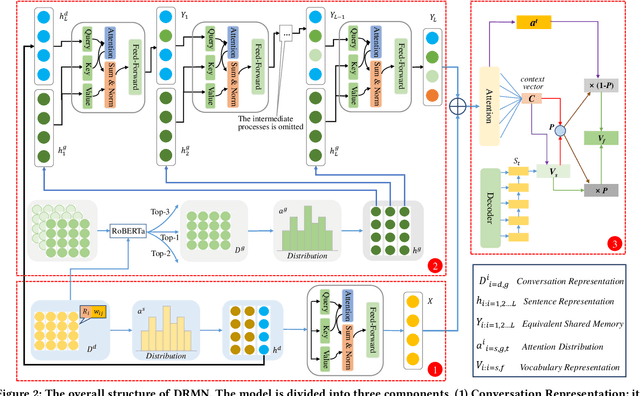

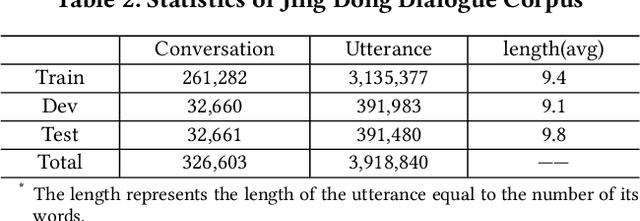

Abstract: Conversation generation, a challenging task in Natural Language Generation (NLG), has attracted increasing attention in recent years. A number of recent works have adopted sequence-to-sequence structures along with external knowledge, which successfully enhanced the quality of generated conversations. Nevertheless, few works have utilized the knowledge extracted from similar conversations for utterance generation. Taking conversations in the customer service and court debate domains as examples, essential entities and phrases, as well as their associated logic and inter-relationships, can be extracted and borrowed from similar conversation instances. Such information could provide useful signals for improving conversation generation. In this paper, we propose a novel reading and memory framework called Deep Reading Memory Network (DRMN), which is capable of remembering useful information from similar conversations to improve utterance generation. We apply our model to two large-scale conversation datasets from the justice and e-commerce fields. Experiments show that the proposed model outperforms state-of-the-art approaches.

AI-lead Court Debate Case Investigation

Oct 22, 2020

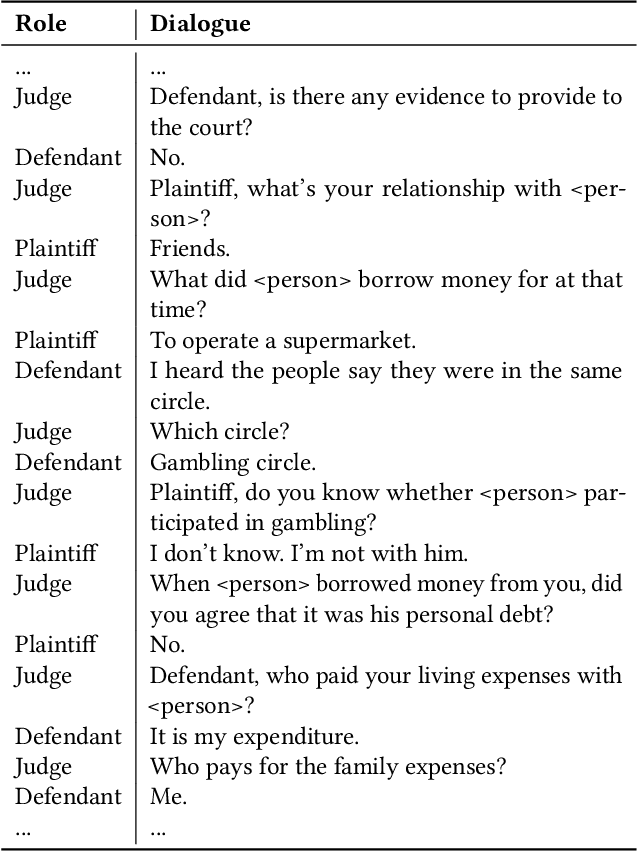

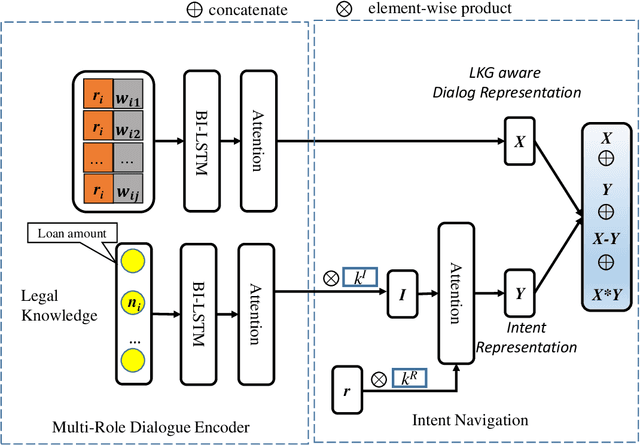

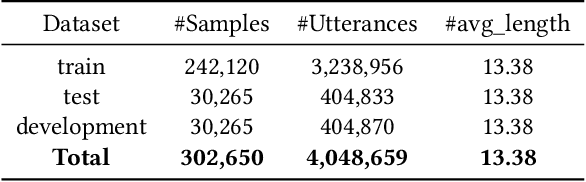

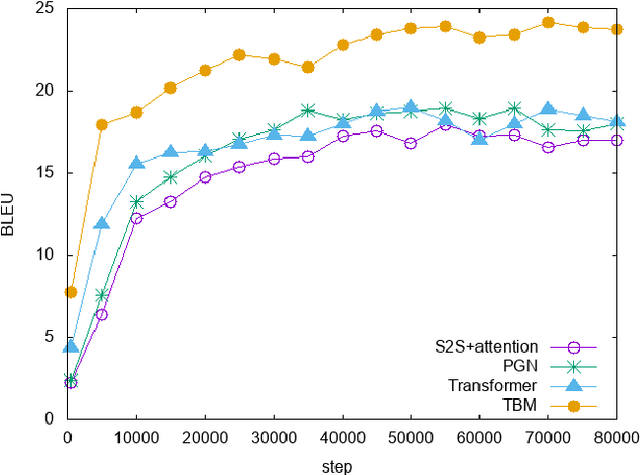

Abstract: The multi-role judicial debate among the plaintiff, defendant, and judge is an important part of a judicial trial. Unlike other types of dialogue, questions are raised by the judge, and the plaintiff, the plaintiff's agent, the defendant, and the defendant's agent then debate so that the trial can proceed in an orderly manner. Question generation is an important task in Natural Language Generation. In a judicial trial, it can help the judge raise efficient questions and thereby gain a clearer understanding of the case. In this work, we propose an innovative end-to-end question generation model, the Trial Brain Model (TBM), to build a Trial Brain that can generate the questions the judge wants to ask from the historical dialogue between the plaintiff and the defendant. Unlike prior efforts in natural language generation, our model can learn the judge's questioning intention through predefined knowledge. We conduct experiments on real-world datasets, and the results show that our model can provide more accurate questions in the multi-role court debate scene.

Cross Copy Network for Dialogue Generation

Oct 22, 2020

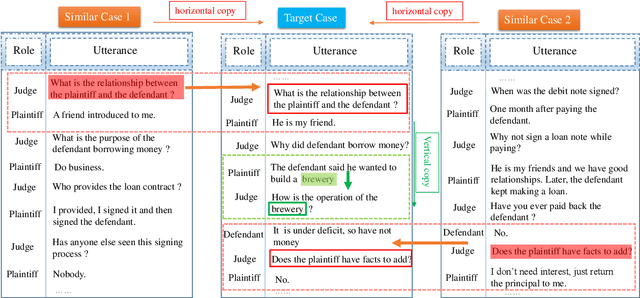

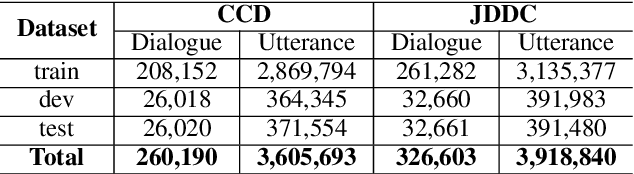

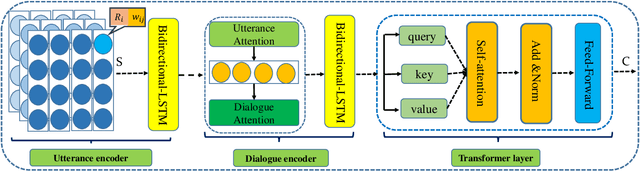

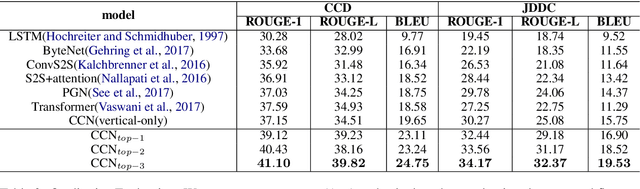

Abstract: In the past few years, audiences from different fields have witnessed the achievements of sequence-to-sequence models (e.g., LSTM+attention, Pointer Generator Networks, and Transformer) in enhancing dialogue content generation. While content fluency and accuracy often serve as the major indicators for model training, dialogue logic, which carries critical information in some particular domains, is often ignored. Taking customer service and court debate dialogue as examples, compatible logic can be observed across different dialogue instances, and this information can provide vital evidence for utterance generation. In this paper, we propose a novel network architecture, Cross Copy Networks (CCN), to explore the current dialogue context and the logical structure of similar dialogue instances simultaneously. Experiments on two tasks, court debate and customer service content generation, show that the proposed algorithm is superior to existing state-of-the-art content generation models.
