Changyu Diao

Improving Densification in 3D Gaussian Splatting for High-Fidelity Rendering

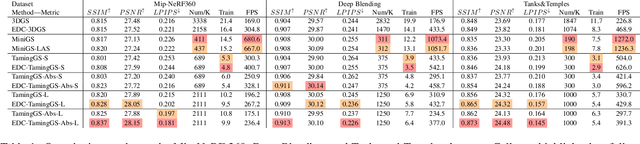

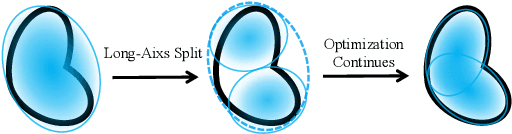

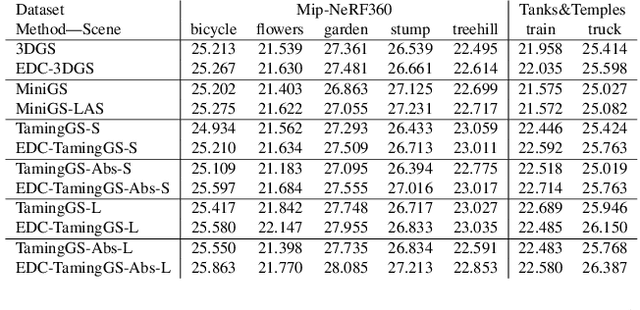

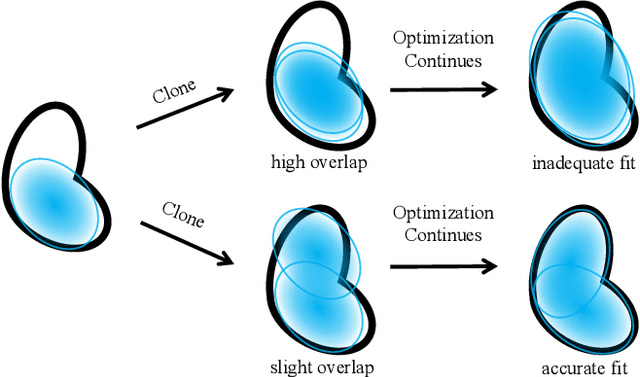

Aug 17, 2025

Abstract: Although 3D Gaussian Splatting (3DGS) has achieved impressive performance in real-time rendering, its densification strategy often results in suboptimal reconstruction quality. In this work, we present a comprehensive improvement to the densification pipeline of 3DGS from three perspectives: when to densify, how to densify, and how to mitigate overfitting. Specifically, we propose an Edge-Aware Score to effectively select candidate Gaussians for splitting. We further introduce a Long-Axis Split strategy that reduces geometric distortions introduced by clone and split operations. To address overfitting, we design a set of techniques, including Recovery-Aware Pruning, Multi-step Update, and Growth Control. Our method enhances rendering fidelity without introducing additional training or inference overhead, achieving state-of-the-art performance with fewer Gaussians.

Efficient Density Control for 3D Gaussian Splatting

Nov 15, 2024

Abstract: 3D Gaussian Splatting (3DGS) excels in novel view synthesis, balancing advanced rendering quality with real-time performance. However, in trained scenes, a large number of Gaussians with low opacity significantly increase rendering costs. This issue arises due to flaws in the split and clone operations during the densification process, which lead to extensive Gaussian overlap and subsequent opacity reduction. To enhance the efficiency of Gaussian utilization, we improve the adaptive density control of 3DGS. First, we introduce a more efficient long-axis split operation to replace the original clone and split, which mitigates Gaussian overlap and improves densification efficiency. Second, we propose a simple adaptive pruning technique to reduce the number of low-opacity Gaussians. Finally, by dynamically lowering the splitting threshold and applying importance weighting, the efficiency of Gaussian utilization is further improved. We evaluate our proposed method on various challenging real-world datasets. Experimental results show that our Efficient Density Control (EDC) can enhance both the rendering speed and quality.
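
The long-axis split mentioned in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the function name `long_axis_split`, the `offset` and `shrink` parameters, and their default values are hypothetical placeholders chosen for illustration, and opacity handling is omitted because the abstract does not specify it. The sketch only shows the general idea of replacing one anisotropic Gaussian with two children placed along its longest principal axis.

```python
import numpy as np

def long_axis_split(mean, rotation, scales, offset=0.5, shrink=0.5):
    """Split one Gaussian into two children along its longest axis.

    mean:     (3,) center of the Gaussian
    rotation: (3, 3) matrix whose columns are the principal axes
    scales:   (3,) standard deviations along those axes
    offset, shrink: illustrative values (assumptions, not from the paper)
    """
    mean = np.asarray(mean, dtype=float)
    rotation = np.asarray(rotation, dtype=float)
    scales = np.asarray(scales, dtype=float)

    k = int(np.argmax(scales))             # index of the longest axis
    shift = offset * scales[k] * rotation[:, k]

    child_scales = scales.copy()
    child_scales[k] *= shrink              # shorten only the long axis

    # Both children keep the parent's orientation.
    return [
        (mean - shift, rotation, child_scales.copy()),
        (mean + shift, rotation, child_scales.copy()),
    ]

# Usage: an axis-aligned Gaussian that is elongated along y.
children = long_axis_split([0.0, 0.0, 0.0], np.eye(3), [1.0, 4.0, 2.0])
for child_mean, _, child_scale in children:
    print(child_mean, child_scale)
```

Because the children are displaced only along the long axis and shrunk only in that direction, they overlap less than two clones of the parent would, which is the kind of overlap the abstract attributes to the original clone and split operations.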

Multi-View Photometric Stereo: A Robust Solution and Benchmark Dataset for Spatially Varying Isotropic Materials

Jan 18, 2020

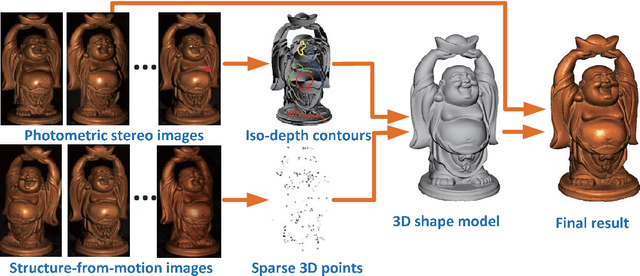

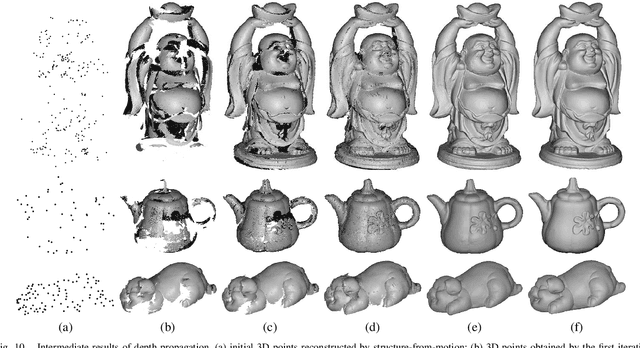

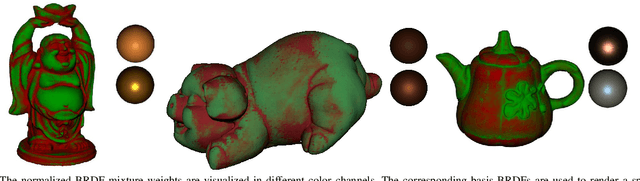

Abstract: We present a method to capture both 3D shape and spatially varying reflectance with a multi-view photometric stereo (MVPS) technique that works for general isotropic materials. Our algorithm is suitable for perspective cameras and nearby point light sources. Our data capture setup is simple, consisting of only a digital camera, some LED lights, and an optional automatic turntable. From a single viewpoint, we use a set of photometric stereo images to identify surface points with the same distance to the camera. We collect this information from multiple viewpoints and combine it with structure-from-motion to obtain a precise reconstruction of the complete 3D shape. The spatially varying isotropic bidirectional reflectance distribution function (BRDF) is captured by simultaneously inferring a set of basis BRDFs and their mixing weights at each surface point. In experiments, we demonstrate our algorithm with two different setups: a studio setup for highest precision and a desktop setup for best usability. According to our experiments, under the studio setting, the captured shapes are accurate to 0.5 millimeters and the captured reflectance has a relative root-mean-square error (RMSE) of 9%. We also quantitatively evaluate state-of-the-art MVPS on a newly collected benchmark dataset, which is publicly available to inspire future research.
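
The basis-BRDF representation described in the abstract can be written as a per-point mixture. The notation below is an assumption introduced here for illustration (the symbols $K$, $w_{p,k}$, and $\rho_k$ do not appear in the abstract): the reflectance at surface point $p$ is a weighted sum of $K$ basis BRDFs shared across the whole surface.

```latex
\rho_p(\omega_i, \omega_o) \;=\; \sum_{k=1}^{K} w_{p,k}\, \rho_k(\omega_i, \omega_o)
```

Here $\omega_i$ and $\omega_o$ are the incoming and outgoing directions, $\rho_k$ is the $k$-th basis BRDF, and $w_{p,k}$ is its mixing weight at point $p$. Under this reading, capturing the spatially varying reflectance means estimating the shared $\rho_k$ and the per-point $w_{p,k}$ together.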
