Cedomir Stefanovic

Link-Aware Energy-Frugal Continual Learning for Fault Detection in IoT Networks

Dec 15, 2025

Abstract: The use of lightweight machine learning (ML) models in Internet of Things (IoT) networks enables resource-constrained IoT devices to perform on-device inference for several critical applications. However, inference accuracy deteriorates due to the non-stationarity of the IoT environment and the limited initial training data. To counteract this, the deployed models can be updated occasionally with newly observed data samples. However, this approach consumes additional energy, which is undesirable for energy-constrained IoT devices. This letter introduces an event-driven communication framework that strategically integrates continual learning (CL) in IoT networks for energy-efficient fault detection. Our framework enables the IoT device and the edge server (ES) to collaboratively update the lightweight ML model by adapting to the wireless link conditions for communication and the available energy budget. Evaluations on real-world datasets show that the proposed approach can outperform both periodic sampling and non-adaptive CL in terms of inference recall, achieving up to a 42.8% improvement even under tight energy and bandwidth constraints.

A Deep Reinforcement Learning Approach for Improving Age of Information in Mission-Critical IoT

Nov 23, 2023

Abstract: The emerging mission-critical Internet of Things (IoT) plays a vital role in remote healthcare, haptic interaction, and industrial automation, where timely delivery of status updates is crucial. The Age of Information (AoI) is an effective metric to capture and evaluate information freshness at the destination. A system design based solely on the optimization of the average AoI might not be adequate to capture the requirements of mission-critical applications, since averaging eliminates the effects of extreme events. In this paper, we introduce a Deep Reinforcement Learning (DRL)-based algorithm to improve AoI in mission-critical IoT applications. The objective is to minimize an AoI-based metric consisting of the weighted sum of the average AoI and the probability of exceeding an AoI threshold. We use the actor-critic method to train the algorithm and obtain an optimized scheduling policy that solves the formulated problem. The performance of our proposed method is evaluated in a simulated setup, and the results show a significant improvement in terms of the average AoI and the AoI violation probability compared to related work.
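The weighted AoI objective described in this abstract can be illustrated with a minimal sketch. The abstract does not give the exact weighting or the AoI model, so everything below is an assumption made for illustration: a discrete-time age that resets to 1 in a delivery slot, a convex combination with a hypothetical `weight` parameter, and the hypothetical function names `aoi_trace` and `aoi_cost`.

```python
from typing import Iterable, List


def aoi_trace(delivery_slots: Iterable[int], horizon: int, initial_age: int = 1) -> List[int]:
    """Return the age observed at the end of each slot (assumed discrete-time model).

    The age resets to 1 in a slot where an update is delivered and grows by 1 otherwise.
    """
    deliveries = set(delivery_slots)
    age = initial_age
    trace = []
    for slot in range(horizon):
        age = 1 if slot in deliveries else age + 1
        trace.append(age)
    return trace


def aoi_cost(trace: List[int], threshold: int, weight: float) -> float:
    """Weighted sum of the average AoI and the empirical threshold-violation probability.

    The convex combination (weight, 1 - weight) is an assumption, not the paper's definition.
    """
    average_age = sum(trace) / len(trace)
    violation_prob = sum(1 for age in trace if age > threshold) / len(trace)
    return weight * average_age + (1.0 - weight) * violation_prob


if __name__ == "__main__":
    trace = aoi_trace(delivery_slots=[0, 3], horizon=6)
    print(trace)
    print(aoi_cost(trace, threshold=2, weight=0.5))
```

A scheduling policy, learned or otherwise, would be compared by the value of `aoi_cost` it produces; lowering `weight` puts more emphasis on rare threshold violations than on the average.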

AA-DL: AoI-Aware Deep Learning Approach for D2D-Assisted Industrial IoT

Nov 22, 2023

Abstract: In real-time Industrial Internet of Things (IIoT), e.g., monitoring and control scenarios, the freshness of data is crucial to maintain the system functionality and stability. In this paper, we propose an AoI-Aware Deep Learning (AA-DL) approach to minimize the Peak Age of Information (PAoI) in D2D-assisted IIoT networks. In particular, we analyze the success probability and the average PAoI via stochastic geometry, and formulate an optimization problem whose objective is to find the scheduling policy that minimizes the PAoI. To solve the non-convex scheduling problem, we develop a Neural Network (NN) structure that exploits the Geographic Location Information (GLI) along with feedback stages to perform unsupervised learning over randomly deployed networks. Our motivation is based on the observation that in various transmission contexts, the wireless channel intensity is mainly influenced by distance-dependent path loss, which can be calculated using the GLI of each link. The performance of the AA-DL method is evaluated via numerical results that demonstrate the effectiveness of our proposed method in improving the PAoI performance compared to a recent benchmark, while maintaining lower complexity than the conventional iterative optimization method.
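The observation that channel intensity can be derived from location information can be sketched as follows. This is only an illustration under stated assumptions: a power-law path-loss model, a hypothetical exponent of 3, a minimum-distance clamp, and the hypothetical helper names `path_loss_gain` and `gain_matrix`. The abstract does not specify the model used in the paper.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def path_loss_gain(tx: Point, rx: Point, exponent: float = 3.0, min_distance: float = 1.0) -> float:
    """Distance-dependent gain d^(-exponent), with the distance clamped from below.

    The power-law form and the default exponent are assumptions for illustration.
    """
    distance = max(math.dist(tx, rx), min_distance)
    return distance ** (-exponent)


def gain_matrix(transmitters: Sequence[Point], receivers: Sequence[Point],
                exponent: float = 3.0) -> List[List[float]]:
    """Entry [i][j] is the gain from transmitter i to receiver j.

    Diagonal entries are the desired links; off-diagonal entries are interference links.
    """
    return [[path_loss_gain(tx, rx, exponent) for rx in receivers] for tx in transmitters]


if __name__ == "__main__":
    txs = [(0.0, 0.0), (10.0, 0.0)]
    rxs = [(3.0, 4.0), (10.0, 5.0)]
    for row in gain_matrix(txs, rxs, exponent=2.0):
        print(row)
```

A matrix like this, computed from coordinates alone, is the kind of input a location-driven scheduler could consume without instantaneous channel estimates.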

Timely and Efficient Information Delivery in Real-Time Industrial IoT Networks

Nov 22, 2023

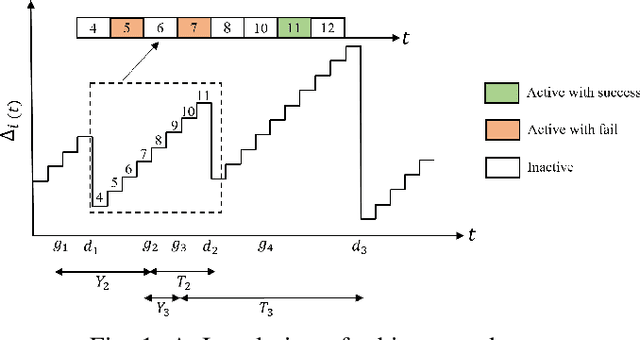

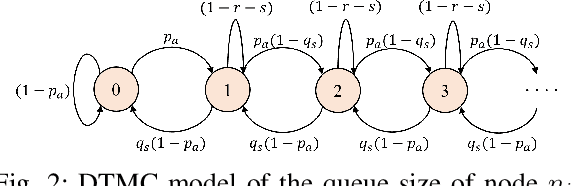

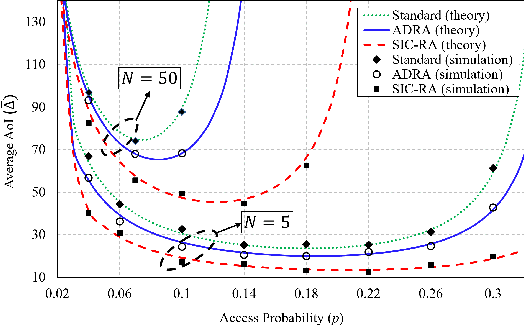

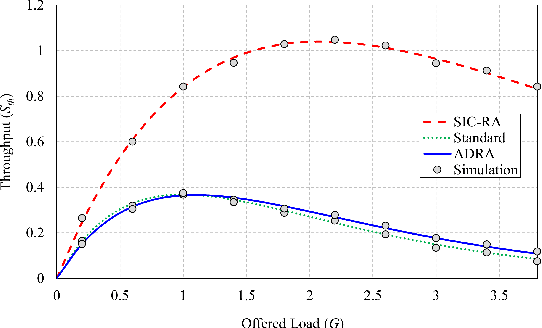

Abstract: Enabling real-time communication in Industrial Internet of Things (IIoT) networks is crucial to support autonomous, self-organized and re-configurable industrial automation for Industry 4.0 and the forthcoming Industry 5.0. In this paper, we consider a SIC-assisted real-time IIoT network, in which sensor nodes generate reports according to an event-generation probability that is specific to the monitored phenomenon. The reports are delivered over a block-fading channel in slotted ALOHA fashion to a common Access Point (AP), which leverages the imbalances in the received powers among the contending users and applies successive interference cancellation (SIC) to decode user packets from collisions. We provide an extensive analytical treatment of the setup, deriving the Age of Information (AoI), throughput and deadline violation probability when the AP has access to perfect as well as imperfect channel-state information. We show that adopting SIC improves all the performance parameters with respect to standard slotted ALOHA, as well as to an age-dependent access method. The analytical results agree with the simulation-based ones, demonstrating that investing in SIC capability at the receiver enables this simple access method to support timely and efficient information delivery in IIoT networks.
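The SIC decoding idea in this abstract can be sketched for a single slot. This is a simplified illustration, not the paper's analysis: it assumes a threshold-based SINR capture model, perfect cancellation of decoded packets, and known received powers. The function names `sic_decode` and `plain_aloha_decode` are hypothetical.

```python
from typing import Sequence


def sic_decode(received_powers: Sequence[float], noise_power: float, sinr_threshold: float) -> int:
    """Count the packets decoded in one slot with successive interference cancellation.

    Assumptions: the strongest remaining packet is decoded if its SINR meets the
    threshold, and a decoded packet is removed perfectly from the received signal.
    """
    remaining = sorted(received_powers, reverse=True)
    decoded = 0
    while remaining:
        strongest = remaining[0]
        interference = sum(remaining[1:])
        if strongest / (interference + noise_power) < sinr_threshold:
            break  # the strongest packet fails, so the weaker ones fail as well
        remaining.pop(0)
        decoded += 1
    return decoded


def plain_aloha_decode(received_powers: Sequence[float], noise_power: float,
                       sinr_threshold: float) -> int:
    """Baseline without SIC or capture: only a collision-free slot can yield a packet."""
    if len(received_powers) != 1:
        return 0
    return 1 if received_powers[0] / noise_power >= sinr_threshold else 0


if __name__ == "__main__":
    powers = [10.0, 2.0, 0.5]
    print(sic_decode(powers, noise_power=0.1, sinr_threshold=2.0))
    print(plain_aloha_decode(powers, noise_power=0.1, sinr_threshold=2.0))
```

With sufficiently imbalanced received powers, a collision slot that is wasted under the baseline can deliver several packets under SIC, which is the mechanism behind the reported gains.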

Multi-Objective Provisioning of Network Slices using Deep Reinforcement Learning

Aug 14, 2022

Abstract: Network Slicing (NS) is crucial for efficiently enabling divergent network applications in next generation networks. Nonetheless, the complex Quality of Service (QoS) requirements and the heterogeneity of network services entail high computational time for Network Slice Provisioning (NSP) optimization. Legacy optimization methods struggle to meet the low-latency and high-reliability requirements of network applications. To this end, we model the real-time NSP as an Online Network Slice Provisioning (ONSP) problem. Specifically, we formulate the ONSP problem as an online Multi-Objective Integer Programming Optimization (MOIPO) problem. Then, we approximate the solution of the MOIPO problem by applying the Proximal Policy Optimization (PPO) method to the traffic demand prediction. Our simulation results show the effectiveness of the proposed method compared to the state-of-the-art MOIPO solvers, with a lower SLA violation rate and network operation cost.

Analysis and Optimization of the Latency Budget in Wireless Systems with Mobile Edge Computing

Jan 27, 2022

Abstract: We present a framework to analyse the latency budget in wireless systems with Mobile Edge Computing (MEC). Our focus is on teleoperation and telerobotics, as use cases that are representative of mission-critical uplink-intensive IoT systems with requirements on low latency and high reliability. The study is motivated by a general question: What is the optimal compression strategy in reliability- and latency-constrained systems? We address this question by studying the latency of an uplink connection from a multi-sensor IoT device to the base station. This is a critical link tasked with a timely and reliable transfer of a potentially significant amount of data from the multitude of sensors. We introduce a comprehensive model for the latency budget, incorporating data compression and data transmission. The uplink latency is a random variable whose distribution depends on the computational capabilities of the device and on the properties of the wireless link. We formulate two optimization problems corresponding to two transmission strategies: (1) outage-constrained, and (2) latency-constrained. We derive the optimal system parameters under a reliability criterion. We show that the obtained results are superior to the ones based on the optimization of the expected latency.

Distributed Estimation of the Operating State of a Single-Bus DC MicroGrid without an External Communication Interface

Sep 14, 2016

Abstract: We propose a decentralized Maximum Likelihood solution for estimating the stochastic renewable power generation and demand in single-bus Direct Current (DC) MicroGrids (MGs) with high penetration of droop-controlled power electronic converters. The solution relies on the fact that the primary control parameters are set in accordance with the local power generation status of the generators. Therefore, the steady-state voltage is inherently dependent on the generation capacities and the load, through a non-linear parametric model, which can be estimated. To have a well-conditioned estimation problem, our solution avoids the use of an external communication interface and utilizes controlled voltage disturbances to perform distributed training. Using this tool, we develop an efficient, decentralized Maximum Likelihood Estimator (MLE) and formulate the sufficient condition for the existence of the globally optimal solution. The numerical results illustrate the promising performance of our MLE algorithm.

Search Process and Probabilistic Bifix Approach

Aug 23, 2005

Abstract: An analytical approach to a search process is a mathematical prerequisite for digital synchronization acquisition analysis and optimization. A search is performed for an arbitrary set of sequences within random but not equiprobable L-ary data. This paper derives in detail an expression for the probability distribution function, from which other statistical parameters (expected value and variance) can be obtained. The probabilistic nature of (cross-) bifix indicators is shown and application examples are outlined, ranging beyond the usual telecommunication field.
