Hossam Farag

Balancing AoI and Rate for Mission-Critical and eMBB Coexistence with Puncturing, NOMA, and RSMA in Cellular Uplink

Aug 23, 2024

Abstract: Through the lens of average and peak age-of-information (AoI), this paper takes a fresh look at uplink medium access solutions for mission-critical (MC) communication coexisting with enhanced mobile broadband (eMBB) service. Considering stochastic packet arrivals from an MC user, we study three access schemes: orthogonal multiple access (OMA) with eMBB preemption (puncturing), non-orthogonal multiple access (NOMA), and rate-splitting multiple access (RSMA), the latter two with concurrent eMBB transmissions. Puncturing is found to reduce both the average AoI and the peak AoI (PAoI) violation probability, but at the expense of decreased eMBB user rates and increased signaling complexity. Conversely, NOMA and RSMA offer higher eMBB rates but may lead to MC packet loss and AoI degradation. The paper systematically investigates the conditions under which NOMA or RSMA can closely match the average AoI and PAoI violation performance of puncturing while maintaining data rate gains. Closed-form expressions for the average AoI and the PAoI violation probability are derived, and conditions on the difference between the eMBB and MC channel gains with respect to the base station are analyzed. Additionally, the optimal power and rate splitting factors in RSMA are determined through an exhaustive search to minimize the MC outage probability. Notably, our results indicate that, with a small loss in the average AoI and PAoI violation probability, the eMBB rate in NOMA and RSMA can be approximately five times higher than that achieved through puncturing.

A Deep Reinforcement Learning Approach for Improving Age of Information in Mission-Critical IoT

Nov 23, 2023

Abstract: The emerging mission-critical Internet of Things (IoT) plays a vital role in remote healthcare, haptic interaction, and industrial automation, where timely delivery of status updates is crucial. The Age of Information (AoI) is an effective metric to capture and evaluate information freshness at the destination. A system design based solely on optimizing the average AoI might not be adequate to capture the requirements of mission-critical applications, since averaging eliminates the effects of extreme events. In this paper, we introduce a Deep Reinforcement Learning (DRL)-based algorithm to improve AoI in mission-critical IoT applications. The objective is to minimize an AoI-based metric consisting of the weighted sum of the average AoI and the probability of exceeding an AoI threshold. We use the actor-critic method to train the algorithm to obtain an optimized scheduling policy that solves the formulated problem. The performance of the proposed method is evaluated in a simulated setup, and the results show a significant improvement in the average AoI and the AoI violation probability compared to related work.
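As a rough illustration of the objective described in this abstract, the sketch below computes a weighted sum of the average AoI and the threshold-violation probability from a slotted AoI trace. It is not taken from the paper: the slotted age model (age resets to 1 on delivery), the convex weighting `weight * avg + (1 - weight) * violation`, and the names `aoi_trace` and `aoi_metric` are all assumptions made for this example.

```python
def aoi_trace(deliveries):
    """Build a slotted AoI trace (assumed model, not the paper's).

    The age grows by 1 in every slot without a delivery and
    resets to 1 in a slot where an update is delivered.
    """
    age, trace = 1, []
    for delivered in deliveries:
        age = 1 if delivered else age + 1
        trace.append(age)
    return trace


def aoi_metric(trace, threshold, weight):
    """Return (metric, average AoI, violation probability).

    The metric is an assumed convex combination of the empirical
    average AoI and the fraction of slots with AoI above the threshold.
    """
    avg = sum(trace) / len(trace)
    violation = sum(a > threshold for a in trace) / len(trace)
    return weight * avg + (1 - weight) * violation, avg, violation


# Hypothetical delivery pattern over five slots.
trace = aoi_trace([True, False, False, True, False])
metric, avg, violation = aoi_metric(trace, threshold=2, weight=0.5)
print(trace, avg, violation, metric)
```

A scheduling policy would be scored by generating such a trace under that policy; a larger `weight` emphasizes the average, a smaller one emphasizes the tail event.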

Timely and Efficient Information Delivery in Real-Time Industrial IoT Networks

Nov 22, 2023

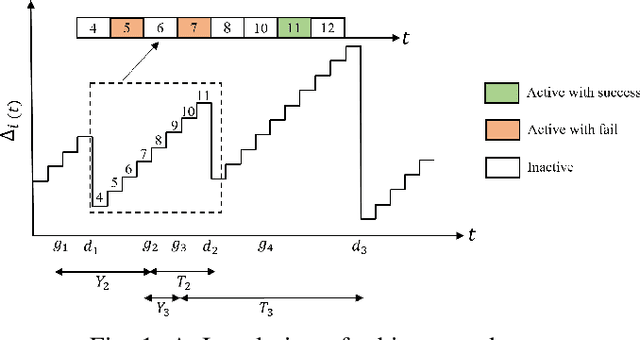

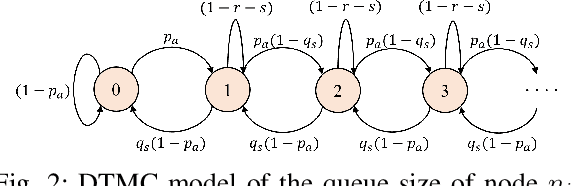

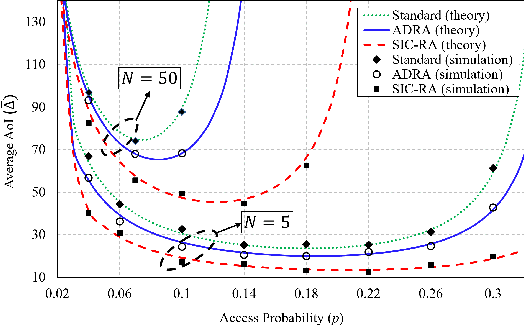

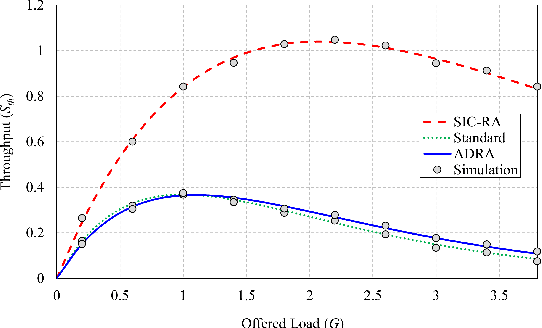

Abstract: Enabling real-time communication in Industrial Internet of Things (IIoT) networks is crucial to support autonomous, self-organized, and reconfigurable industrial automation for Industry 4.0 and the forthcoming Industry 5.0. In this paper, we consider a real-time IIoT network assisted by successive interference cancellation (SIC), in which sensor nodes generate reports according to an event-generation probability that is specific to the monitored phenomenon. The reports are delivered over a block-fading channel to a common Access Point (AP) in slotted ALOHA fashion; the AP leverages the imbalances in the received powers among the contending users and applies SIC to decode user packets from collisions. We provide an extensive analytical treatment of the setup, deriving the Age of Information (AoI), throughput, and deadline violation probability for the cases in which the AP has access to perfect and to imperfect channel-state information. We show that adopting SIC improves all the performance parameters with respect to standard slotted ALOHA, as well as to an age-dependent access method. The analytical results agree with the simulation-based ones, demonstrating that investing in SIC capability at the receiver enables this simple access method to support timely and efficient information delivery in IIoT networks.
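The idea of decoding packets out of a collision by exploiting received-power imbalance can be sketched as follows. This is a generic textbook-style SIC loop, not the paper's model: it assumes perfect cancellation of each decoded packet, a single SINR threshold for all users, and the hypothetical function name `sic_decode`.

```python
def sic_decode(powers, noise, sinr_threshold):
    """Decode colliding packets strongest-first with ideal SIC.

    powers: received powers of the packets colliding in one slot.
    Returns the powers of the packets that were decoded, in order.
    Assumes perfect cancellation (no residual interference).
    """
    remaining = sorted(powers, reverse=True)
    decoded = []
    while remaining:
        strongest = remaining[0]
        interference = sum(remaining[1:])
        # Stop as soon as the strongest remaining packet fails the SINR test.
        if strongest / (interference + noise) < sinr_threshold:
            break
        decoded.append(strongest)
        remaining.pop(0)  # cancel the decoded packet from the collision
    return decoded


# Hypothetical collision of three packets with unequal received powers.
print(sic_decode([2.0, 8.0, 1.0], noise=1.0, sinr_threshold=2.0))
print(sic_decode([2.0, 8.0, 1.0], noise=1.0, sinr_threshold=1.0))
```

With the higher threshold only the strongest packet survives; with the lower one all three are recovered in sequence, which is the gain over plain slotted ALOHA, where any collision is a loss.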

AA-DL: AoI-Aware Deep Learning Approach for D2D-Assisted Industrial IoT

Nov 22, 2023

Abstract: In real-time Industrial Internet of Things (IIoT), e.g., monitoring and control scenarios, the freshness of data is crucial to maintain system functionality and stability. In this paper, we propose an AoI-Aware Deep Learning (AA-DL) approach to minimize the Peak Age of Information (PAoI) in D2D-assisted IIoT networks. In particular, we analyze the success probability and the average PAoI via stochastic geometry, and formulate an optimization problem whose objective is to find the scheduling policy that minimizes the PAoI. To solve the non-convex scheduling problem, we develop a Neural Network (NN) structure that exploits the Geographic Location Information (GLI) along with feedback stages to perform unsupervised learning over randomly deployed networks. Our motivation is based on the observation that, in various transmission contexts, the wireless channel intensity is mainly influenced by distance-dependent path loss, which can be calculated using the GLI of each link. The performance of the AA-DL method is evaluated via numerical results that demonstrate the effectiveness of the proposed method in improving the PAoI performance compared to a recent benchmark while maintaining lower complexity than the conventional iterative optimization method.
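The observation that channel intensity can be approximated from link geometry can be illustrated with a simple power-law path-loss model. This is only a sketch under stated assumptions: the model `d ** (-alpha)`, the default exponent `alpha=3.0`, and the function name `path_loss_gain` are illustrative choices, not the paper's formulation.

```python
import math


def path_loss_gain(tx, rx, alpha=3.0):
    """Distance-dependent channel gain from node coordinates.

    tx, rx: (x, y) positions of transmitter and receiver, i.e. the
    geographic location information of one link.
    alpha: assumed path-loss exponent.
    """
    distance = math.dist(tx, rx)  # Euclidean distance (Python 3.8+)
    return distance ** (-alpha)


# Hypothetical link: transmitter at the origin, receiver 5 units away.
print(path_loss_gain((0.0, 0.0), (3.0, 4.0), alpha=2.0))
```

Gains computed this way for every transmitter-receiver pair could serve as the location-derived input features of a scheduler, in place of measured channel state.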
