Catherine Higgitt

Adversarial Deep-Unfolding Network for MA-XRF Super-Resolution on Old Master Paintings Using Minimal Training Data

Sep 14, 2024

Abstract: High-quality element distribution maps enable precise analysis of the material composition and condition of Old Master paintings. These maps are typically produced from data acquired through Macro X-ray fluorescence (MA-XRF) scanning, a non-invasive technique that collects spectral information. However, MA-XRF is often limited by a trade-off between acquisition time and resolution. Achieving higher resolution requires longer scanning times, which can be impractical for detailed analysis of large artworks. Super-resolution MA-XRF provides an alternative solution by enhancing the quality of MA-XRF scans while reducing the need for extended scanning sessions. This paper introduces a tailored super-resolution approach to improve MA-XRF analysis of Old Master paintings. Our method proposes a novel adversarial neural network architecture for MA-XRF, inspired by the Learned Iterative Shrinkage-Thresholding Algorithm. It is specifically designed to work in an unsupervised manner, making efficient use of the limited available data. This design avoids the need for extensive datasets or pre-trained networks, allowing it to be trained using just a single high-resolution RGB image alongside low-resolution MA-XRF data. Numerical results demonstrate that our method outperforms existing state-of-the-art super-resolution techniques for MA-XRF scans of Old Master paintings.

A Fast Automatic Method for Deconvoluting Macro X-ray Fluorescence Data Collected from Easel Paintings

Oct 31, 2022

Abstract: Macro X-ray Fluorescence (MA-XRF) scanning is increasingly widely used by researchers in heritage science to analyse easel paintings as one of a suite of non-invasive imaging techniques. The task of processing the resulting MA-XRF datacube to produce individual chemical element maps is called MA-XRF deconvolution. While several methods have been proposed for MA-XRF deconvolution, they require a degree of manual intervention from the user that can affect the final results. The state-of-the-art AFRID approach can automatically deconvolute the datacube without user input, but it has a long processing time and does not exploit spatial dependency. In this paper, we propose two versions of a fast automatic deconvolution (FAD) method for MA-XRF datacubes collected from easel paintings, one based on ADMM (alternating direction method of multipliers) and one based on FISTA (fast iterative shrinkage-thresholding algorithm). The proposed FAD method not only automatically analyses the datacube and produces high-quality element distribution maps that account for spatial dependency, but also significantly reduces the running time. The results generated on the MA-XRF datacubes collected from two easel paintings from the National Gallery, London, verify the performance of the proposed FAD method.
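For readers unfamiliar with FISTA, the following is a minimal, generic sketch of the algorithm applied to an L1-regularised least-squares problem, minimising 0.5·||Ax − y||² + λ·||x||₁. It is not the FAD method from the paper: the abstract does not state the paper's objective, so the problem form, the function names (`fista`, `soft_threshold`), and the parameters `lam` and `step` are all assumptions chosen for illustration. The step size is assumed to be no larger than the reciprocal of the largest eigenvalue of AᵀA.

```python
import math


def soft_threshold(v, t):
    """Elementwise shrinkage: the proximal operator of t * ||.||_1."""
    return [math.copysign(max(abs(x) - t, 0.0), x) for x in v]


def matvec(A, x):
    """Compute A x for a matrix stored as a list of rows."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def rmatvec(A, y):
    """Compute A^T y for a matrix stored as a list of rows."""
    return [sum(A[i][j] * y[i] for i in range(len(A))) for j in range(len(A[0]))]


def fista(A, y, lam, step, n_iter=200):
    """Illustrative FISTA for 0.5 * ||A x - y||^2 + lam * ||x||_1.

    Assumes step <= 1 / L, where L is the largest eigenvalue of A^T A.
    """
    n = len(A[0])
    x = [0.0] * n      # current estimate
    z = list(x)        # extrapolated (momentum) point
    t = 1.0            # momentum parameter
    for _ in range(n_iter):
        # Gradient of the smooth data-fit term, evaluated at z.
        residual = [p - q for p, q in zip(matvec(A, z), y)]
        grad = rmatvec(A, residual)
        # Gradient step followed by shrinkage.
        x_new = soft_threshold(
            [zi - step * g for zi, g in zip(z, grad)], step * lam
        )
        # Momentum update that distinguishes FISTA from plain ISTA.
        t_new = (1.0 + math.sqrt(1.0 + 4.0 * t * t)) / 2.0
        z = [xn + ((t - 1.0) / t_new) * (xn - xo) for xn, xo in zip(x_new, x)]
        x, t = x_new, t_new
    return x
```

With an identity matrix for `A` and a unit step, the minimiser is simply the soft-thresholded input, which makes a convenient sanity check for the sketch.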

Mixed X-Ray Image Separation for Artworks with Concealed Designs

Jan 23, 2022

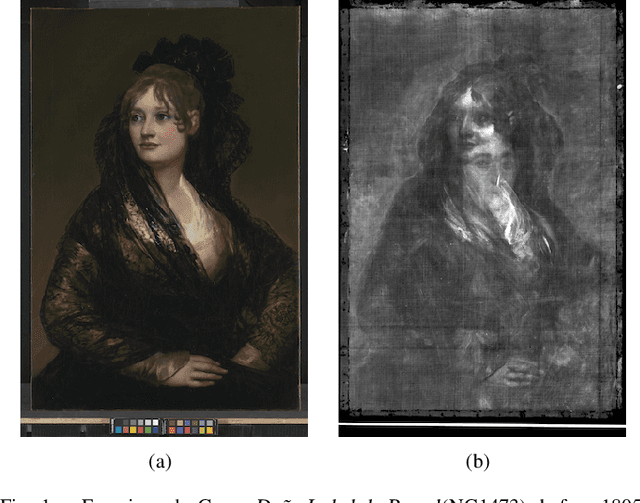

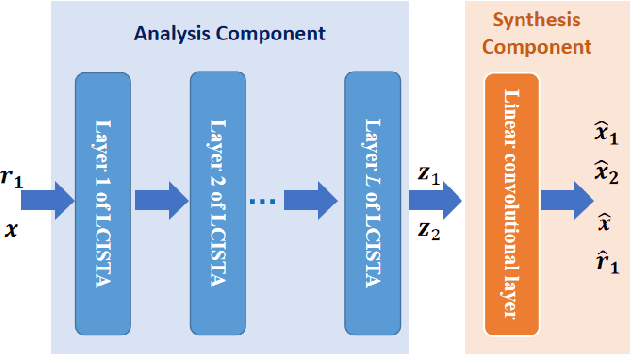

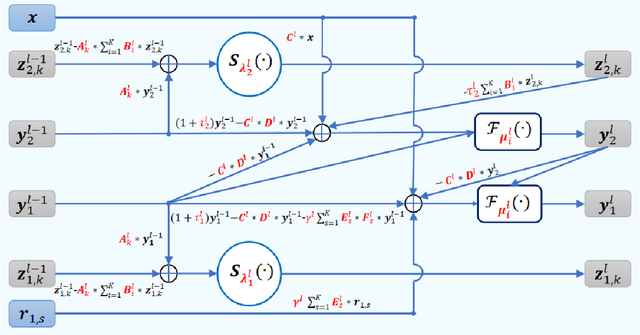

Abstract: In this paper, we focus on X-ray images of paintings with concealed sub-surface designs (e.g., deriving from reuse of the painting support or revision of a composition by the artist), which include contributions from both the surface painting and the concealed features. In particular, we propose a self-supervised deep learning-based image separation approach that can be applied to the X-ray images from such paintings to separate them into two hypothetical X-ray images. One of these reconstructed images is related to the X-ray image of the concealed painting, while the second one contains only information related to the X-ray of the visible painting. The proposed separation network consists of two components: the analysis and the synthesis sub-networks. The analysis sub-network is based on learned coupled iterative shrinkage thresholding algorithms (LCISTA) designed using algorithm unrolling techniques, and the synthesis sub-network consists of several linear mappings. The learning algorithm operates in a totally self-supervised fashion without requiring a sample set that contains both the mixed X-ray images and the separated ones. The proposed method is demonstrated on a real painting with concealed content, Doña Isabel de Porcel by Francisco de Goya, to show its effectiveness.

Image Separation with Side Information: A Connected Auto-Encoders Based Approach

Sep 16, 2020

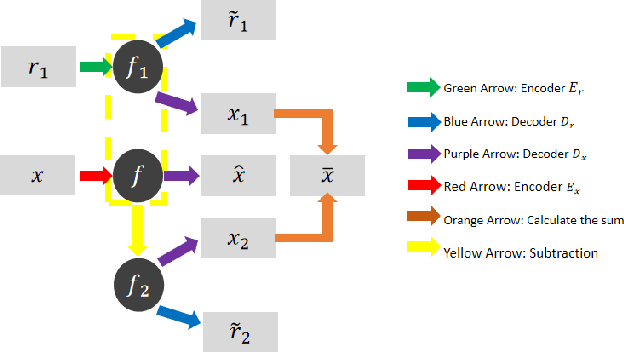

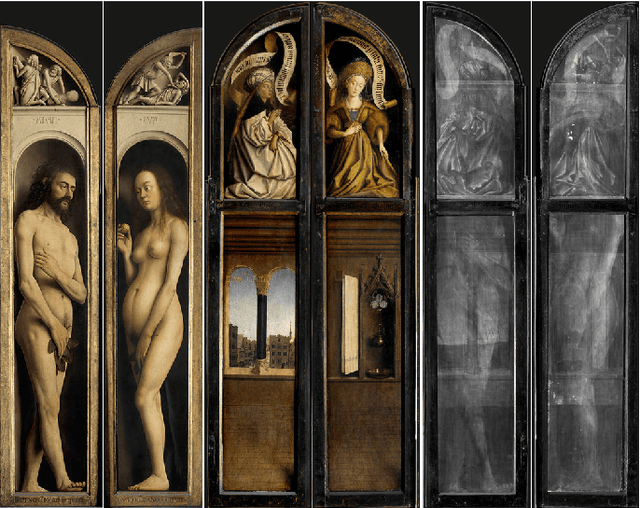

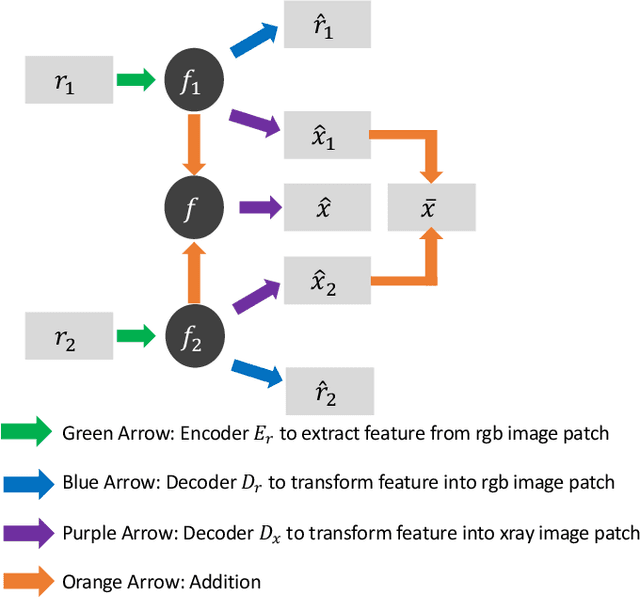

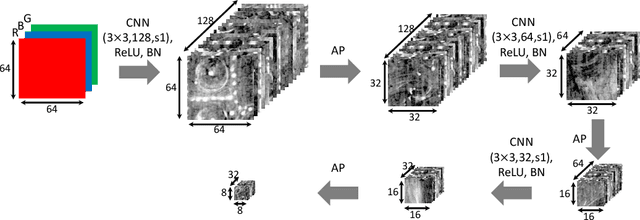

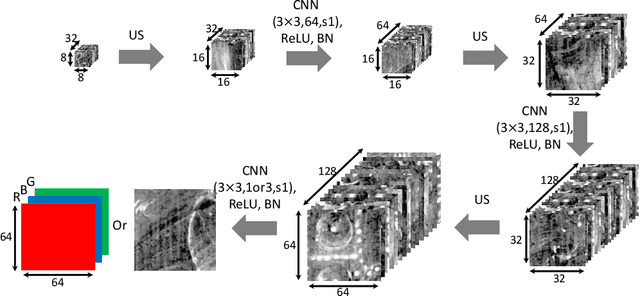

Abstract: X-radiography (X-ray imaging) is a widely used imaging technique in art investigation. It can provide information about the condition of a painting as well as insights into an artist's techniques and working methods, often revealing hidden information invisible to the naked eye. In this paper, we deal with the problem of separating mixed X-ray images originating from the radiography of double-sided paintings. Using the visible color images (RGB images) from each side of the painting, we propose a new neural network architecture, based upon 'connected' auto-encoders, designed to separate the mixed X-ray image into two simulated X-ray images corresponding to each side. In this proposed architecture, the convolutional auto-encoders extract features from the RGB images. These features are then used to (1) reproduce both of the original RGB images, (2) reconstruct the hypothetical separated X-ray images, and (3) regenerate the mixed X-ray image. The algorithm operates in a totally self-supervised fashion without requiring a sample set that contains both the mixed X-ray images and the separated ones. The methodology was tested on images from the double-sided wing panels of the Ghent Altarpiece, painted in 1432 by the brothers Hubert and Jan van Eyck. These tests show that the proposed approach outperforms other state-of-the-art X-ray image separation methods for art investigation applications.
