Caroline Favart

FastEstimator: A Deep Learning Library for Fast Prototyping and Productization

Nov 18, 2019

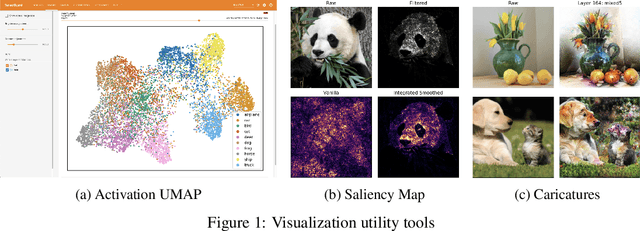

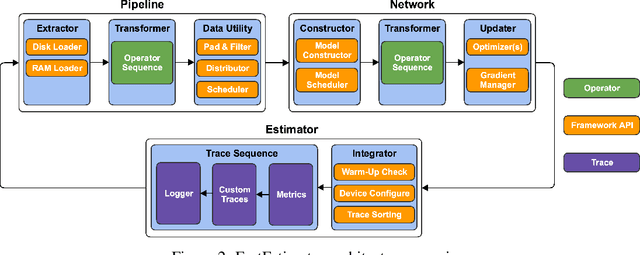

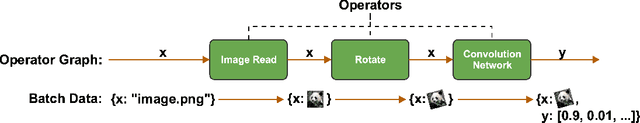

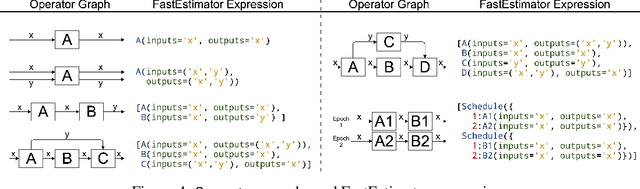

Abstract: As the complexity of state-of-the-art deep learning models increases by the month, implementation, interpretation, and traceability become ever more burdensome challenges for AI practitioners around the world. Several AI frameworks have risen in an effort to stem this tide, but the steady advance of the field has begun to test the bounds of their flexibility, expressiveness, and ease of use. To address these concerns, we introduce a radically flexible high-level open source deep learning framework for both research and industry. We introduce FastEstimator.

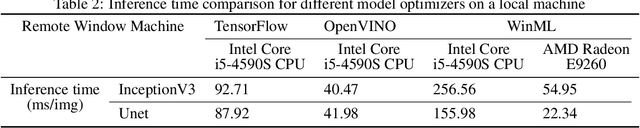

Impact of Inference Accelerators on Hardware Selection

Oct 07, 2019

Abstract: As opportunities for AI-assisted healthcare grow steadily, model deployment faces challenges due to the specific characteristics of the industry. The configuration chosen for a production device can affect model performance while also influencing operational costs. Moreover, some healthcare situations might require fast, but not real-time, inference. We study different configurations and conduct a cost-performance analysis to determine the optimal hardware for deploying a model subject to healthcare domain constraints. We observe that a naive performance comparison may not lead to an optimal configuration selection. In fact, given realistic domain constraints, CPU execution might be preferable to GPU accelerators. Hence, defining precise expectations for model deployment beforehand is crucial.
