Carol Sadek

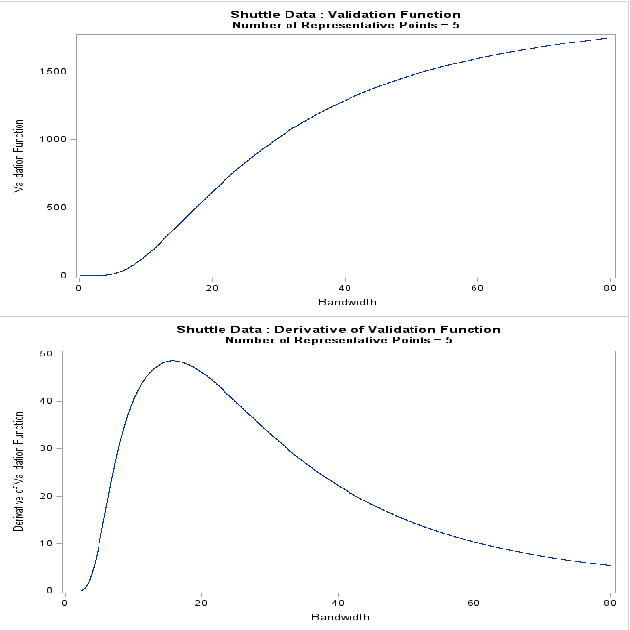

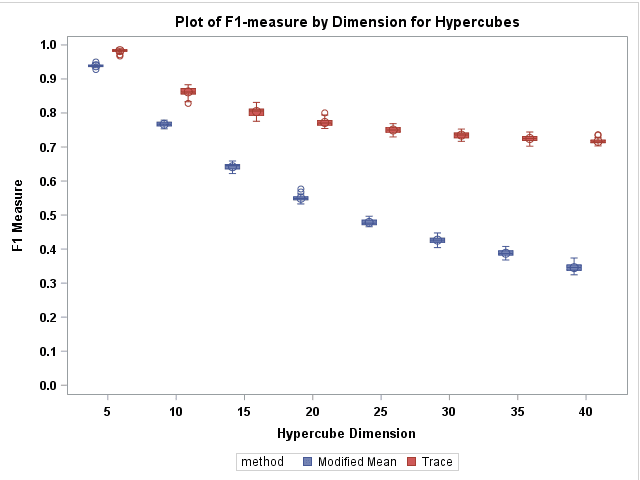

The Trace Criterion for Kernel Bandwidth Selection for Support Vector Data Description

Nov 15, 2018

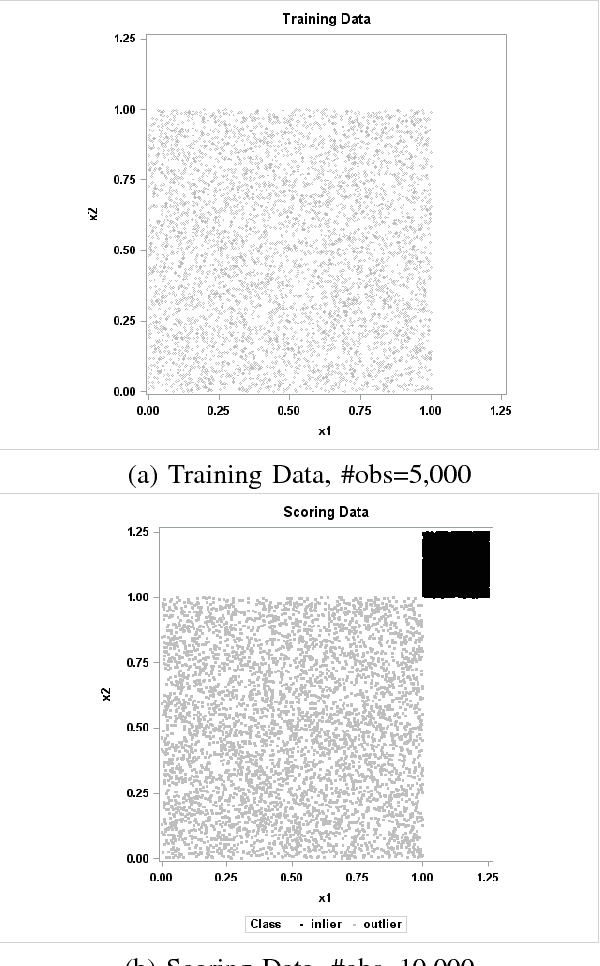

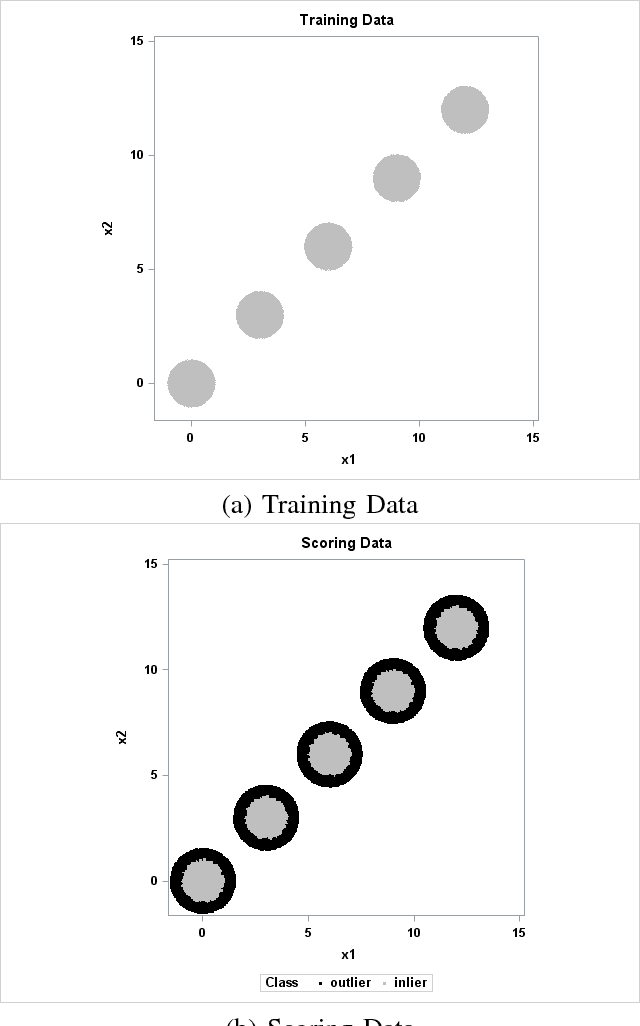

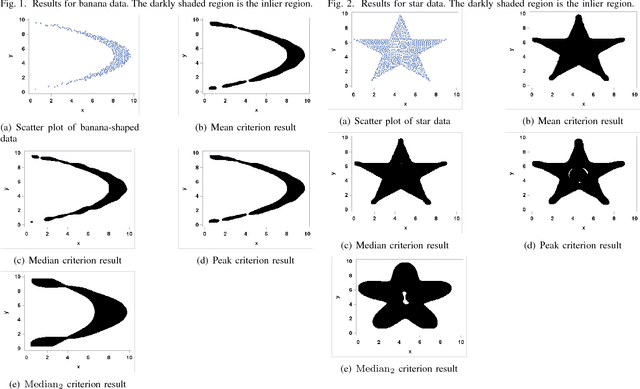

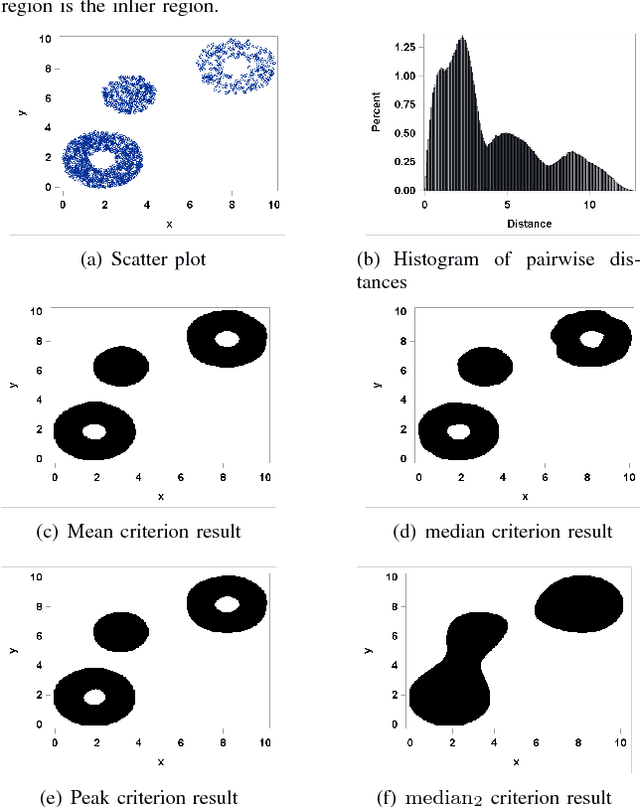

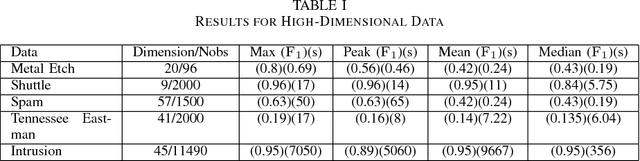

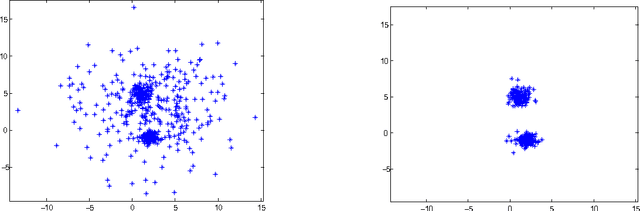

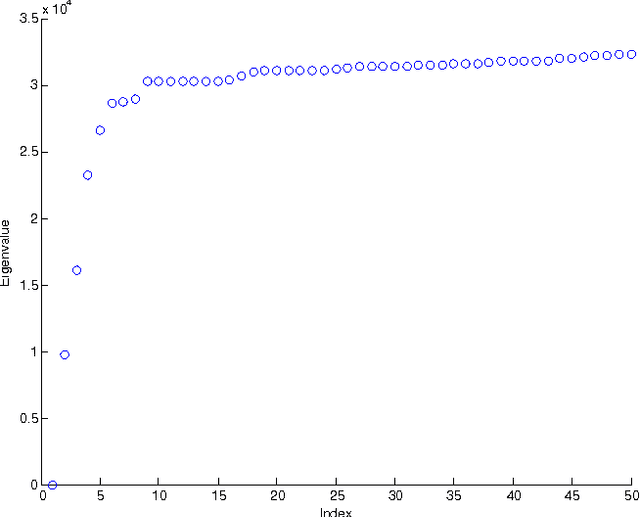

Abstract:Support vector data description (SVDD) is a popular anomaly detection technique. The SVDD classifier partitions the whole data space into an $\textit{inlier}$ region, which consists of the region $\textit{near}$ the training data, and an $\textit{outlier}$ region, which consists of points $\textit{away}$ from the training data. The computation of the SVDD classifier requires a kernel function, for which the Gaussian kernel is a common choice. The Gaussian kernel has a bandwidth parameter, and it is important to set the value of this parameter correctly for good results. A small bandwidth leads to overfitting such that the resulting SVDD classifier overestimates the number of anomalies, whereas a large bandwidth leads to underfitting and an inability to detect many anomalies. In this paper, we present a new unsupervised method for selecting the Gaussian kernel bandwidth. Our method, which exploits the low-rank representation of the kernel matrix to suggest a kernel bandwidth value, is competitive with existing bandwidth selection methods.

The Mean and Median Criterion for Automatic Kernel Bandwidth Selection for Support Vector Data Description

Aug 21, 2017

Abstract:Support vector data description (SVDD) is a popular technique for detecting anomalies. The SVDD classifier partitions the whole space into an inlier region, which consists of the region near the training data, and an outlier region, which consists of points away from the training data. The computation of the SVDD classifier requires a kernel function, and the Gaussian kernel is a common choice for the kernel function. The Gaussian kernel has a bandwidth parameter, whose value is important for good results. A small bandwidth leads to overfitting, and the resulting SVDD classifier overestimates the number of anomalies. A large bandwidth leads to underfitting, and the classifier fails to detect many anomalies. In this paper we present a new automatic, unsupervised method for selecting the Gaussian kernel bandwidth. The selected value can be computed quickly, and it is competitive with existing bandwidth selection methods.

A Case Study in Text Mining: Interpreting Twitter Data From World Cup Tweets

Aug 21, 2014

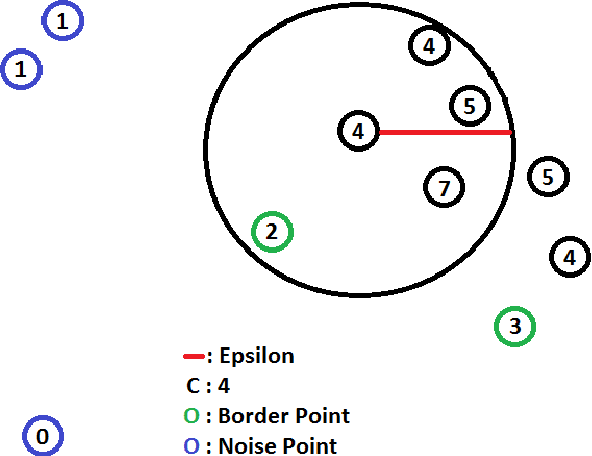

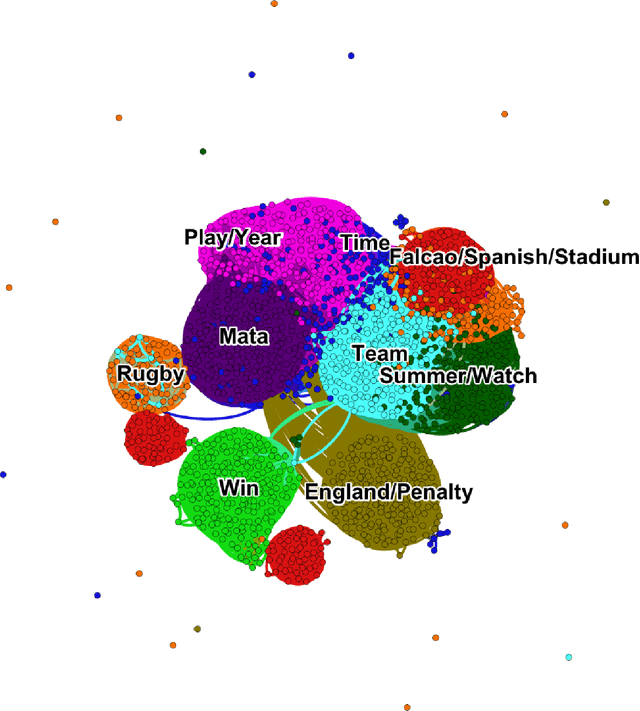

Abstract:Cluster analysis is a field of data analysis that extracts underlying patterns in data. One application of cluster analysis is in text-mining, the analysis of large collections of text to find similarities between documents. We used a collection of about 30,000 tweets extracted from Twitter just before the World Cup started. A common problem with real world text data is the presence of linguistic noise. In our case it would be extraneous tweets that are unrelated to dominant themes. To combat this problem, we created an algorithm that combined the DBSCAN algorithm and a consensus matrix. This way we are left with the tweets that are related to those dominant themes. We then used cluster analysis to find those topics that the tweets describe. We clustered the tweets using k-means, a commonly used clustering algorithm, and Non-Negative Matrix Factorization (NMF) and compared the results. The two algorithms gave similar results, but NMF proved to be faster and provided more easily interpreted results. We explored our results using two visualization tools, Gephi and Wordle.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge