Carlos Cotta

Epoch-based Application of Problem-Aware Operators in a Multiobjective Memetic Algorithm for Portfolio Optimization

Dec 05, 2024

Abstract: We consider the issue of intensification/diversification balance in the context of a memetic algorithm (MA) for the multiobjective optimization of investment portfolios with cardinality constraints. We approach this issue by considering the selective application of knowledge-augmented operators (local search and a memory of elite solutions) based on the search epoch in which the algorithm finds itself, hence alternating between unbiased search (guided uniquely by the built-in search mechanics of the algorithm) and focused search (intensified by the use of the problem-aware operators). These operators exploit the Sharpe index (a measure of the relationship between return and risk) as a source of problem knowledge. We have conducted a sensitivity analysis to determine in which phases of the search the application of these operators leads to better results. Our findings indicate that the resulting algorithm is quite robust in terms of parameterization from the point of view of this problem-specific indicator. Furthermore, it is shown that not only can non-memetic counterparts be outperformed, but that there is a range of parameters in which the MA is also competitive, if not better, in terms of standard multiobjective performance indicators.

* 13 pages, 2 figures
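
The abstract above names the Sharpe index as the source of problem knowledge but does not give its formula. As a minimal sketch, assuming the standard textbook definition (excess expected return divided by the standard deviation of the portfolio return), it could be computed as follows. The function name, parameters, and sample figures are illustrative assumptions, not taken from the paper.

```python
import math

def sharpe_index(weights, mean_returns, covariance, risk_free_rate=0.0):
    """Sharpe index of a portfolio under the standard definition (assumed here).

    weights        -- fraction of capital invested in each asset
    mean_returns   -- expected return of each asset
    covariance     -- covariance matrix of asset returns (list of lists)
    risk_free_rate -- return of the riskless reference asset
    """
    n = len(weights)
    # Expected portfolio return: weighted sum of the asset returns.
    expected_return = sum(w * r for w, r in zip(weights, mean_returns))
    # Portfolio variance: quadratic form w^T * Sigma * w.
    variance = sum(
        weights[i] * covariance[i][j] * weights[j]
        for i in range(n)
        for j in range(n)
    )
    if variance <= 0:
        raise ValueError("portfolio variance must be positive")
    return (expected_return - risk_free_rate) / math.sqrt(variance)

# Hypothetical two-asset portfolio with uncorrelated returns.
example = sharpe_index(
    weights=[0.5, 0.5],
    mean_returns=[0.10, 0.06],
    covariance=[[0.04, 0.0], [0.0, 0.01]],
    risk_free_rate=0.02,
)
```

A higher value means more excess return per unit of risk, which is what makes the index usable as a scalar guide for local search over portfolios that are otherwise compared on two objectives.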

Metaheuristics for the Template Design Problem: Encoding, Symmetry and Hybridisation

Nov 05, 2024

Abstract: The template design problem (TDP) is a hard combinatorial problem with a high number of symmetries, which makes solving it more complicated. A number of techniques have been proposed in the literature to optimise its resolution, ranging from complete methods to stochastic ones. However, although metaheuristics are considered efficient methods that can find solutions of sufficient quality at a reasonable computational cost, these techniques have not proven efficient enough to deal with this problem. This paper explores and analyses a wide range of metaheuristics with the aim of assessing their suitability for finding template designs. Their implementation is guided by a number of issues such as problem formulation, solution encoding, the symmetrical nature of the problem, and distinct forms of hybridisation. For the TDP, we also propose a slot-based alternative problem formulation (distinct from other slot-based proposals), which offers an alternative to the classical variation-based formulation of the problem. An empirical analysis assessing the performance of all the metaheuristics (i.e., basic, integrative and collaborative algorithms working on different search spaces and with/without symmetry breaking) shows that some of our proposals can be considered the state of the art when applied to specific problem instances.

Memetic collaborative approaches for finding balanced incomplete block designs

Nov 04, 2024

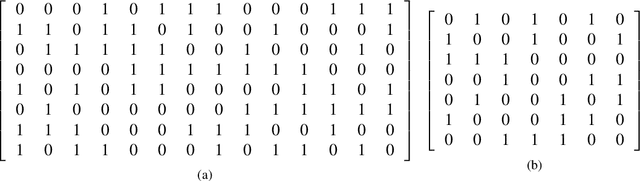

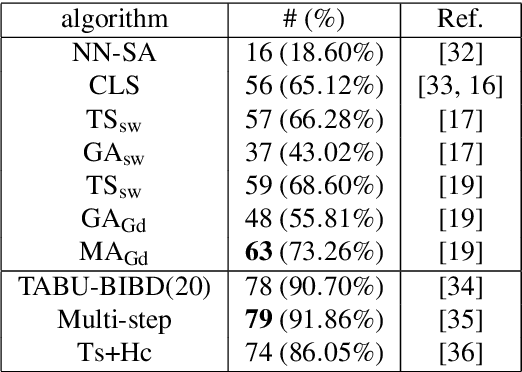

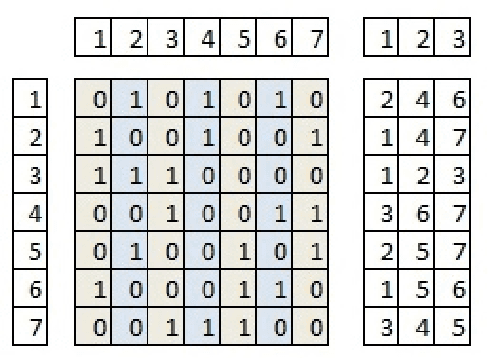

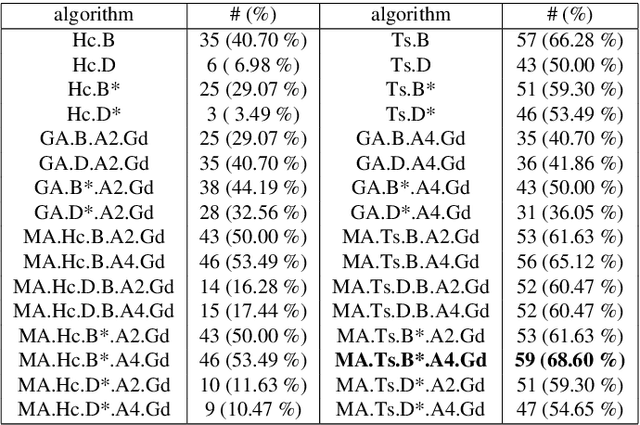

Abstract: The balanced incomplete block design (BIBD) problem is a difficult combinatorial problem with a large number of symmetries, which add complexity to its resolution. In this paper, we propose a dual (integer) problem representation that serves as an alternative to the classical binary formulation of the problem. We attack this problem incrementally: firstly, we propose basic algorithms (i.e. local search techniques and genetic algorithms) intended to work separately on the two different search spaces (i.e. binary and integer); secondly, we propose two hybrid schemes: an integrative approach (i.e. a memetic algorithm) and a collaborative model in which the previous methods work in parallel, occasionally exchanging information. Three distinct two-dimensional structures are proposed as communication topology among the algorithms involved in the collaborative model, as well as a number of migration and acceptance criteria for sending and receiving data. An empirical analysis comparing a large number of instances of our schemes (with algorithms possibly working on different search spaces and with/without symmetry breaking methods) shows that some of these algorithms can be considered the state of the art of the metaheuristic methods applied to finding BIBDs. Moreover, our cooperative proposal is a general scheme from which distinct algorithmic variants can be instantiated to handle symmetrical optimisation problems. For this reason, we have also analysed its key parameters, thereby providing general guidelines for the design of efficient/robust cooperative algorithms devised from our proposal.

* 16 pages, 7 figures

Deep memetic models for combinatorial optimization problems: application to the tool switching problem

Nov 04, 2024

Abstract: Memetic algorithms are techniques that orchestrate the interplay between population-based and trajectory-based algorithmic components. In particular, under this broad interpretation some memetic models can be regarded as a group of autonomous basic optimization algorithms that interact with one another cooperatively in order to deal with a specific optimization problem, aiming to obtain better results than the constituent algorithms would obtain separately. Going one step beyond this traditional view of cooperative optimization algorithms, this work tackles deep meta-cooperation, namely the use of cooperative optimization algorithms in which some components can in turn be cooperative methods themselves, thus exhibiting a deep algorithmic architecture. The objective of this paper is to demonstrate that such models can be considered an efficient alternative to other traditional forms of cooperative algorithms. To validate this claim, different structural parameters have been analyzed, such as the communication topology between the agents and the parameter that influences the depth of the cooperative effort (the depth of meta-cooperation). To this end, a comparison has been performed with state-of-the-art cooperative methods for solving a specific combinatorial problem, the Tool Switching Problem. Results show that deep models are effective at solving this problem, outperforming metaheuristics proposed in the literature.

* 32 pages, 5 figures

Optimizing Hearthstone Agents using an Evolutionary Algorithm

Oct 25, 2024

Abstract: Digital collectible card games are not only a growing part of the video game industry, but also an interesting research area for the field of computational intelligence. This game genre allows researchers to deal with hidden information, uncertainty and planning, among other aspects. This paper proposes the use of evolutionary algorithms (EAs) to develop agents that play a card game, Hearthstone, by optimizing a data-driven decision-making mechanism that takes into account all the elements currently in play. Agents feature self-learning by means of a competitive coevolutionary training approach, whereby no external sparring element defined by the user is required for the optimization process. One of the agents developed through the proposed approach was runner-up (best 6%) in an international Hearthstone Artificial Intelligence (AI) competition. Our proposal performed remarkably well, even when it faced state-of-the-art techniques that attempted to take into account future game states, such as Monte Carlo tree search. This outcome shows how evolutionary computation could represent a considerable advantage in developing AIs for collectible card games such as Hearthstone.

* 43 pages, 11 figures

New perspectives on the optimal placement of detectors for suicide bombers using metaheuristics

May 29, 2024

Abstract: We consider an operational model of suicide bombing attacks, an increasingly prevalent form of terrorism, against specific targets, and the use of protective countermeasures based on the deployment of detectors over the area under threat. These detectors have to be carefully located in order to minimize the expected number of casualties or the economic damage suffered, resulting in a hard optimization problem for which different metaheuristics have been proposed. Rather than assuming random decisions by the attacker, the problem is approached by considering different models of the attacker, who makes informed decisions about which objective to target and which path to take to reach it, based on knowledge of the importance or value of the objectives or of the defensive strategy of the defender (a scenario that can be regarded as an adversarial game). We consider four different algorithms, namely a greedy heuristic, a hill climber, tabu search and an evolutionary algorithm, and study their performance on a broad collection of problem instances that try to resemble different realistic settings such as a coastal area, a modern urban area, and the historic core of an old town. It is shown that the adversarial scenario is harder for all techniques, and that the evolutionary algorithm seems to adapt better to the complexity of the resulting search landscape.

Evolutionary Algorithms for Optimizing Emergency Exit Placement in Indoor Environments

May 28, 2024

Abstract: The problem of finding the optimal placement of emergency exits in an indoor environment to facilitate the rapid and orderly evacuation of crowds is addressed in this work. A cellular-automaton model is used to simulate the behavior of pedestrians in such scenarios, taking into account factors such as the environment, the pedestrians themselves, and the interactions among them. A metric is proposed to determine how successful or satisfactory an evacuation was. Subsequently, two metaheuristic algorithms, namely an iterated greedy heuristic and an evolutionary algorithm (EA), are proposed to solve the optimization problem. A comparative analysis shows that the proposed EA is able to find effective solutions for different scenarios, and that an island-based version of it outperforms the other two algorithms in terms of solution quality.

Metaheuristic approaches to the placement of suicide bomber detectors

May 28, 2024

Abstract: Suicide bombing is an infamous form of terrorism that is becoming increasingly prevalent in the current era of global terror warfare. We consider the case of targeted attacks of this kind, and the use of detectors distributed over the area under threat as a protective countermeasure. Such detectors are not fully reliable, and must be strategically placed in order to maximize the chances of detecting the attack, hence minimizing the expected number of casualties. To this end, different metaheuristic approaches based on local search and on population-based search are considered and benchmarked against a powerful greedy heuristic from the literature. We conduct an extensive empirical evaluation on synthetic instances featuring very diverse properties. Most metaheuristics outperform the greedy algorithm, and a hill-climber is shown to be superior to the remaining approaches. This hill-climber is subsequently subjected to a sensitivity analysis to determine which problem features make it stand above the greedy approach, and is finally deployed on a number of problem instances modeled on realistic scenarios, corroborating the good performance of the heuristic.

Solving Weighted Constraint Satisfaction Problems with Memetic/Exact Hybrid Algorithms

Jan 15, 2014

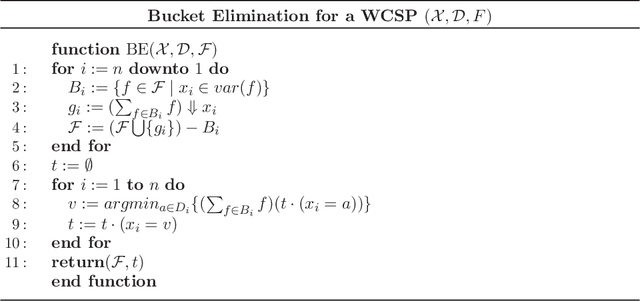

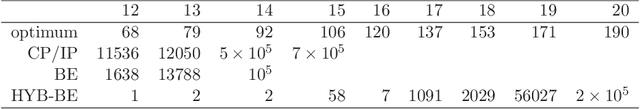

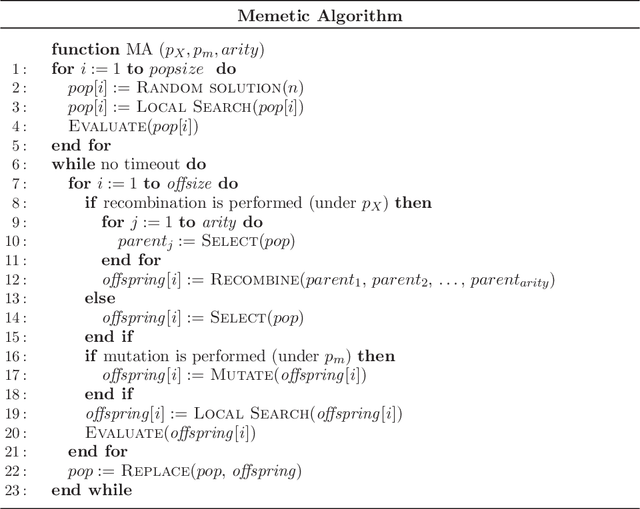

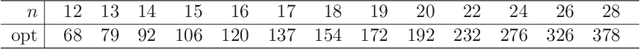

Abstract: A weighted constraint satisfaction problem (WCSP) is a constraint satisfaction problem in which preferences among solutions can be expressed. Bucket elimination is a complete technique commonly used to solve this kind of constraint satisfaction problem. When the memory required to apply bucket elimination is too high, a heuristic method based on it (called mini-buckets) can be used to calculate bounds for the optimal solution. Nevertheless, the curse of dimensionality makes these techniques impractical on large-scale problems. In response to this situation, we present a memetic algorithm for WCSPs in which bucket elimination is used as a mechanism for recombining solutions, providing the best possible child from the parental set. Subsequently, a multi-level model in which this exact/metaheuristic hybrid is further hybridized with branch-and-bound techniques and mini-buckets is studied. As a case study, we have applied these algorithms to the resolution of the maximum density still life problem, a hard constraint optimization problem based on Conway's Game of Life. The resulting algorithm consistently finds optimal patterns for the instances solved to date in less time than current approaches. Moreover, it is shown that this proposal provides new best known solutions for very large instances.

* arXiv admin note: substantial text overlap with arXiv:0812.4170

An experimental study of exhaustive solutions for the Mastermind puzzle

Jul 05, 2012

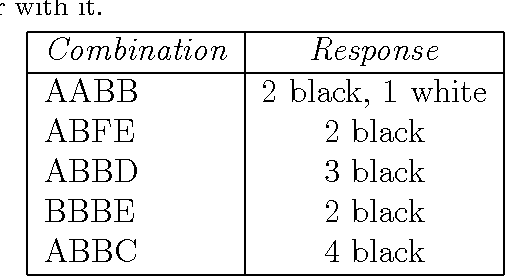

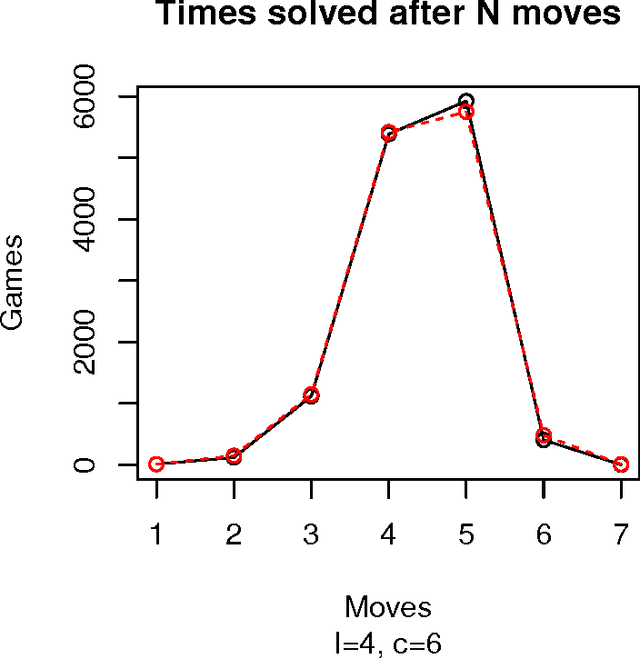

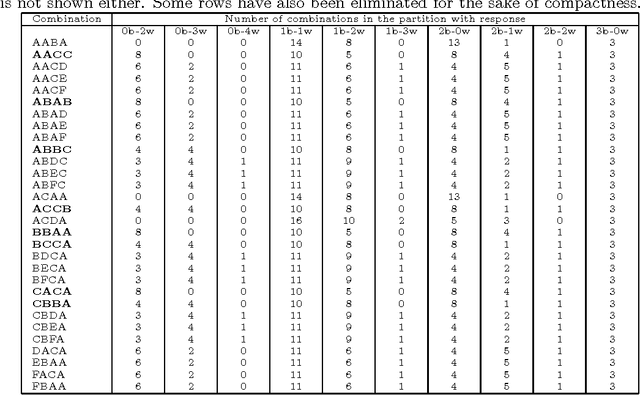

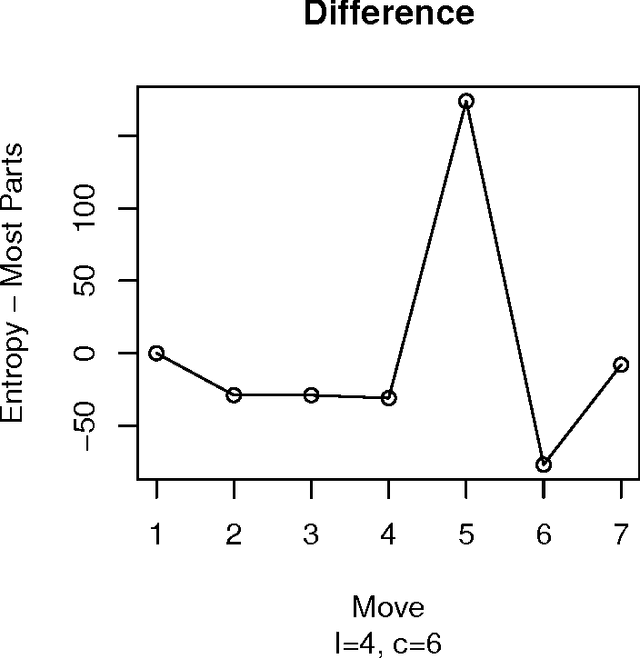

Abstract: Mastermind is in essence a search problem in which a string of symbols that is kept secret must be found by sequentially playing strings that use the same alphabet, using as hints the responses that indicate how close those strings are to the secret one. Although it is commercialized as a game, it is a combinatorial problem of high complexity, with applications in fields that range from computer security to genomics. As with problems of this kind, there are no exact solutions; even exhaustive search methods rely on heuristics to choose, at every step, the string that will yield the best possible hint. These methods mostly try to play the move that offers the best reduction in search space size in the next step; this move is chosen according to an empirical score. However, in this paper we examine several state-of-the-art exhaustive search methods and show that another factor also plays a very important role: the presence of the actual solution among the candidate moves or, in other words, the fact that the actual solution has the highest score. Building on that, we propose new exhaustive search approaches that obtain results comparable to the classic ones and that are, in addition, better suited as a basis for non-exhaustive search strategies such as evolutionary algorithms, since they behave better than the classical algorithms on a series of key indicators.
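
To make the notions of "response" and "reduction in search space" in the abstract above concrete, here is a minimal sketch assuming the usual Mastermind rules (black pegs for a correct symbol in the correct position, white pegs for a correct symbol in the wrong position). The function names and the tiny two-symbol alphabet are illustrative assumptions; this is not the scoring method studied in the paper.

```python
from collections import Counter
from itertools import product

def feedback(secret, guess):
    """Return (black, white) pegs for a guess under the usual rules."""
    # Black pegs: same symbol in the same position.
    black = sum(s == g for s, g in zip(secret, guess))
    # Symbols shared regardless of position (multiset intersection).
    shared = sum((Counter(secret) & Counter(guess)).values())
    # White pegs: shared symbols that are not already counted as black.
    return black, shared - black

def consistent(candidates, guess, response):
    """Keep the candidates that would have produced this response."""
    return [c for c in candidates if feedback(c, guess) == response]

# Hypothetical toy game: strings of length 2 over the alphabet {A, B}.
candidates = ["".join(p) for p in product("AB", repeat=2)]
secret = "AB"
response = feedback(secret, "AA")                    # (1, 0)
remaining = consistent(candidates, "AA", response)   # ["AB", "BA"]
```

An exhaustive method in this style scores every possible guess by how small it is expected to leave `remaining`. The extra factor highlighted in the abstract is whether the guess is itself one of the remaining candidates.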
