Carleton Coffrin

High-quality Thermal Gibbs Sampling with Quantum Annealing Hardware

Sep 03, 2021

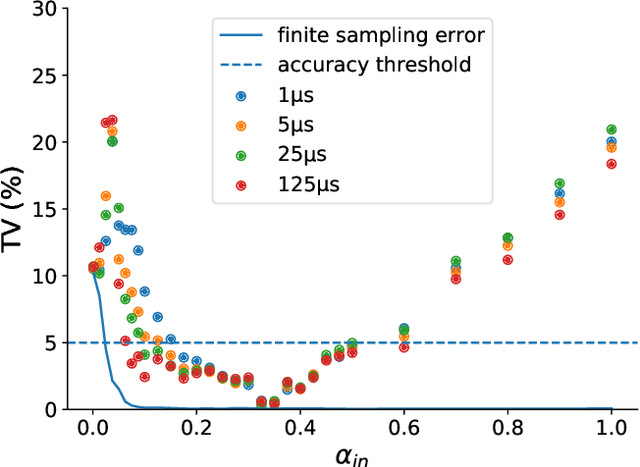

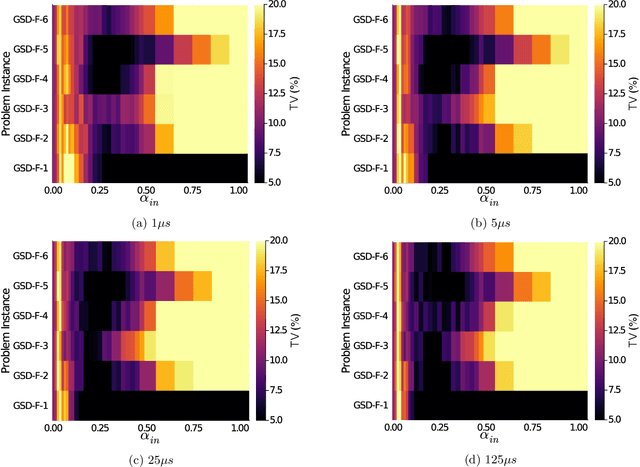

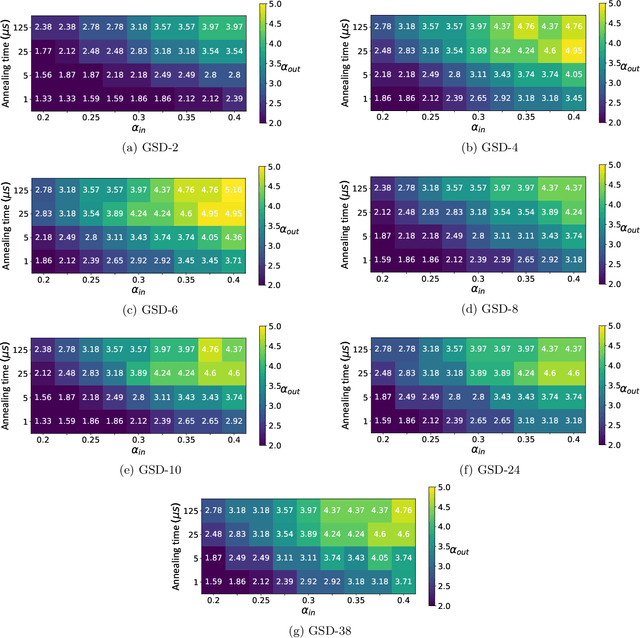

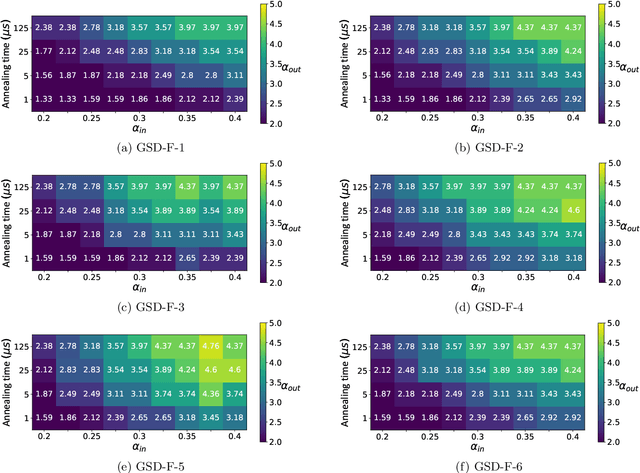

Abstract: Quantum Annealing (QA) was originally intended for accelerating the solution of combinatorial optimization tasks that have natural encodings as Ising models. However, recent experiments on QA hardware platforms have demonstrated that, in the operating regime corresponding to weak interactions, the QA hardware behaves like a noisy Gibbs sampler at a hardware-specific effective temperature. This work builds on those insights, identifies a class of small hardware-native Ising models that are robust to noise effects, and proposes a novel procedure for executing these models on QA hardware to maximize Gibbs sampling performance. Experimental results indicate that the proposed protocol yields high-quality Gibbs samples from a hardware-specific effective temperature and that the QA annealing time can be used to adjust the effective temperature of the output distribution. The procedure proposed in this work provides a new approach to using QA hardware for Ising model sampling, presenting potential new opportunities for applications in machine learning and physics simulation.
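As a rough illustration of what "Gibbs sampling from an Ising model at an effective temperature" means, the sketch below enumerates the exact Gibbs distribution of a tiny Ising model. It is not the paper's protocol. The energy sign convention, the function names, and the inverse-temperature parameter `beta` are assumptions made for this example, and brute-force enumeration is only practical for a handful of spins.

```python
import itertools
import math


def ising_energy(spins, h, J):
    """Energy of one spin configuration.

    Assumed convention: E(s) = sum_i h[i]*s[i] + sum_{(i,j)} J[(i,j)]*s[i]*s[j],
    with each spin s[i] in {-1, +1}.
    """
    field = sum(h[i] * s for i, s in enumerate(spins))
    coupling = sum(Jij * spins[i] * spins[j] for (i, j), Jij in J.items())
    return field + coupling


def gibbs_distribution(h, J, beta):
    """Exact Gibbs distribution P(s) = exp(-beta * E(s)) / Z by enumeration."""
    states = list(itertools.product([-1, 1], repeat=len(h)))
    weights = [math.exp(-beta * ising_energy(s, h, J)) for s in states]
    Z = sum(weights)  # partition function
    return {s: w / Z for s, w in zip(states, weights)}


# Two spins with a ferromagnetic coupling (negative J favors aligned spins
# under the assumed sign convention) and no local fields.
h = [0.0, 0.0]
J = {(0, 1): -1.0}
dist = gibbs_distribution(h, J, beta=1.0)
```

Raising `beta` (lowering the temperature) concentrates probability on the lowest-energy configurations, which is the knob the abstract refers to when it says the annealing time can adjust the effective temperature of the output distribution.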

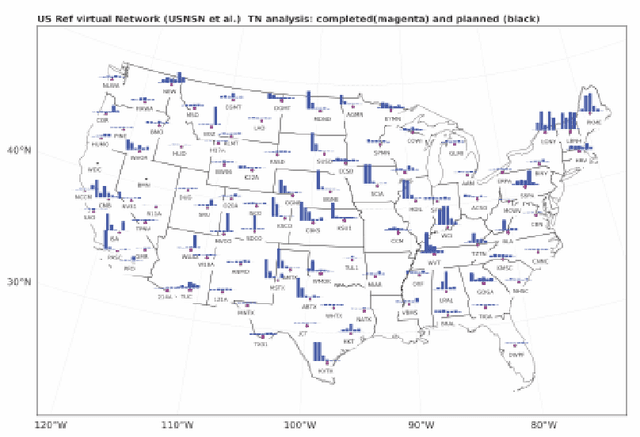

Single-Qubit Fidelity Assessment of Quantum Annealing Hardware

Apr 07, 2021

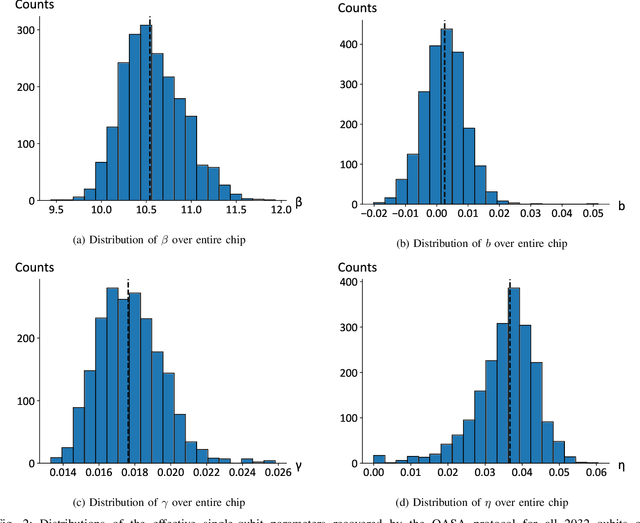

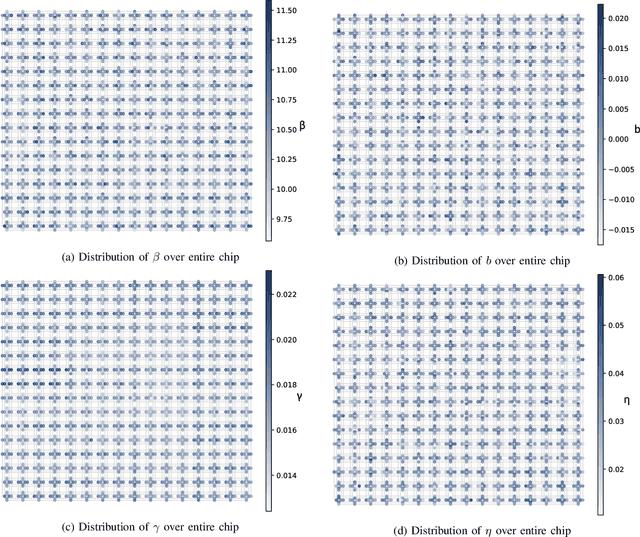

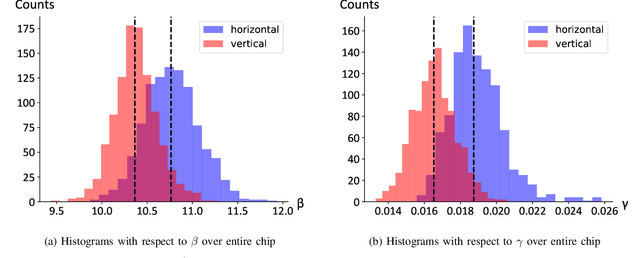

Abstract: As a wide variety of quantum computing platforms become available, methods for assessing and comparing the performance of these devices are of increasing interest and importance. Inspired by the success of single-qubit error rate computations for tracking the progress of gate-based quantum computers, this work proposes a Quantum Annealing Single-qubit Assessment (QASA) protocol for quantifying the performance of individual qubits in quantum annealing computers. The proposed protocol scales to large quantum annealers with thousands of qubits and provides unique insights into the distribution of qubit properties within a particular hardware device. The efficacy of the QASA protocol is demonstrated by analyzing the properties of a D-Wave 2000Q system, revealing unanticipated correlations in the qubit performance of that device. A study repeating the QASA protocol at different annealing times highlights how the method can be utilized to understand the impact of annealing parameters on qubit performance. Overall, the proposed QASA protocol provides a useful tool for assessing the performance of current and emerging quantum annealing devices.

Programmable Quantum Annealers as Noisy Gibbs Samplers

Dec 16, 2020

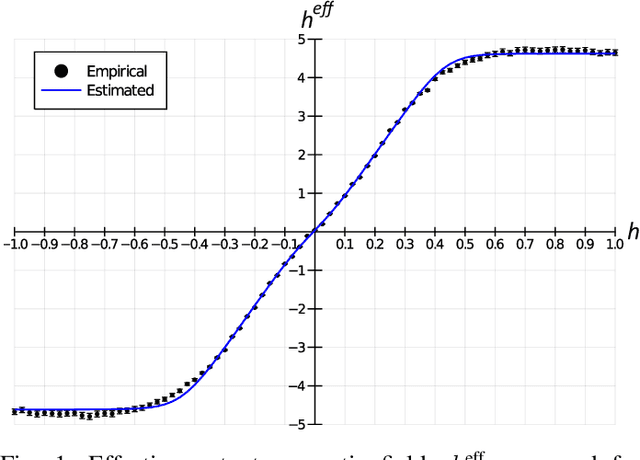

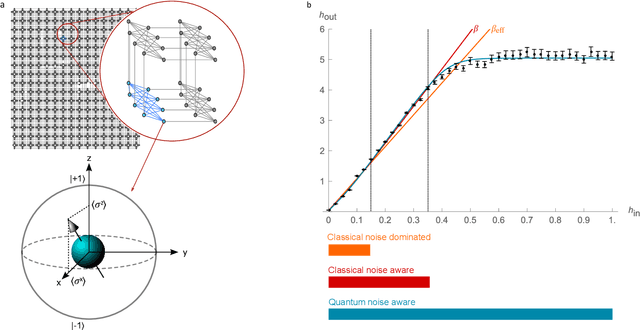

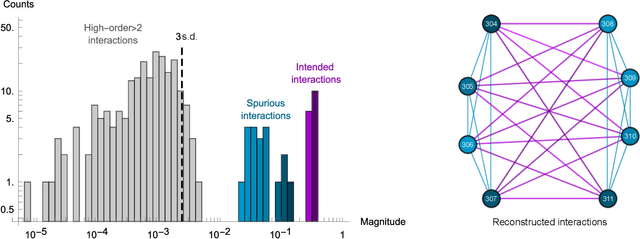

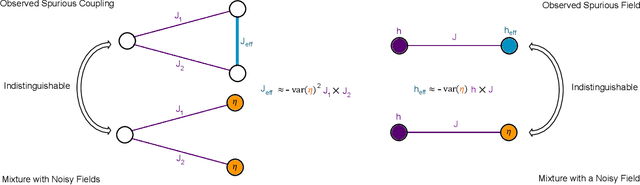

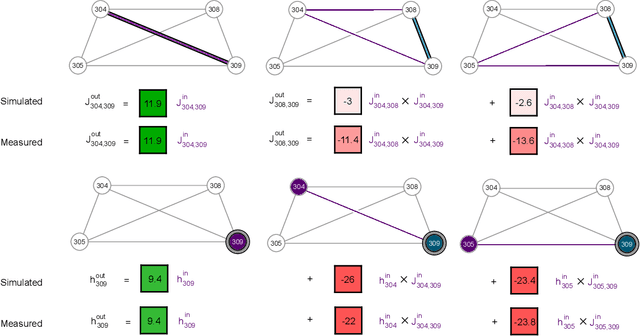

Abstract: Drawing independent samples from high-dimensional probability distributions represents the major computational bottleneck for modern algorithms, including powerful machine learning frameworks such as deep learning. The quest for discovering larger families of distributions for which sampling can be efficiently realized has inspired an exploration beyond established computing methods, turning to novel physical devices that leverage the principles of quantum computation. Quantum annealing embodies a promising computational paradigm that is intimately related to the complexity of energy landscapes in Gibbs distributions, which relate the probabilities of system states to the energies of these states. Here, we study the sampling properties of physical realizations of quantum annealers which are implemented through programmable lattices of superconducting flux qubits. Comprehensive statistical analysis of the data produced by these quantum machines shows that quantum annealers behave as samplers that generate independent configurations from low-temperature noisy Gibbs distributions. We show that the structure of the output distribution probes the intrinsic physical properties of the quantum device such as effective temperature of individual qubits and magnitude of local qubit noise, which result in a non-linear response function and spurious interactions that are absent in the hardware implementation. We anticipate that our methodology will find widespread use in characterization of future generations of quantum annealers and other emerging analog computing devices.

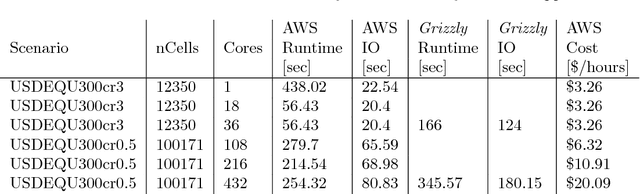

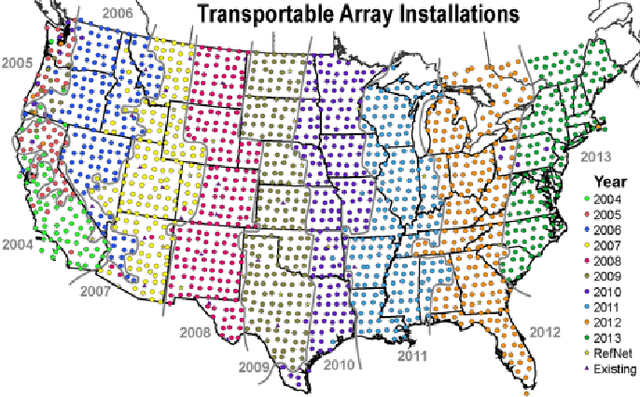

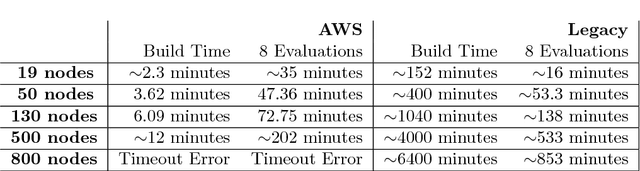

The ISTI Rapid Response on Exploring Cloud Computing 2018

Jan 04, 2019

Abstract: This report describes eighteen projects that explored how commercial cloud computing services can be utilized for scientific computation at national laboratories. These demonstrations ranged from deploying proprietary software in a cloud environment to leveraging established cloud-based analytics workflows for processing scientific datasets. By and large, the projects were successful and collectively they suggest that cloud computing can be a valuable computational resource for scientific computation at national laboratories.

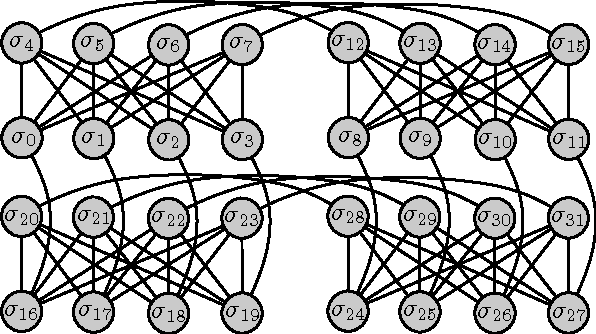

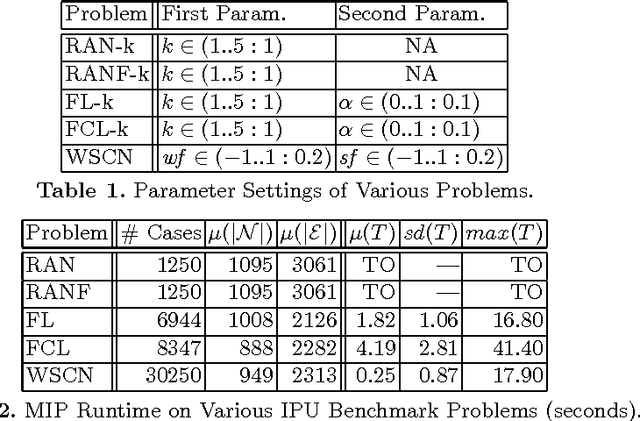

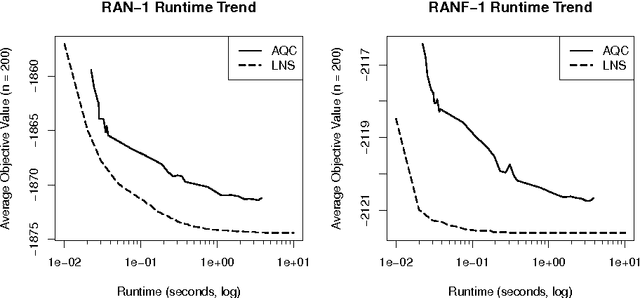

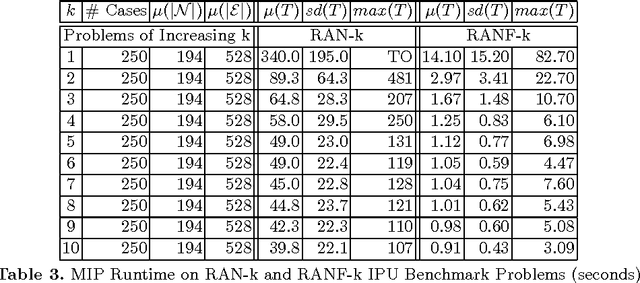

Ising Processing Units: Potential and Challenges for Discrete Optimization

Jul 02, 2017

Abstract: The recent emergence of novel computational devices, such as adiabatic quantum computers, CMOS annealers, and optical parametric oscillators, presents new opportunities for hybrid-optimization algorithms that leverage these kinds of specialized hardware. In this work, we propose the idea of an Ising processing unit as a computational abstraction for these emerging tools. Challenges involved in using and benchmarking these devices are presented, and open-source software tools are proposed to address some of these challenges. The proposed benchmarking tools and methodology are demonstrated by conducting a baseline study that compares established solution methods to a D-Wave 2X adiabatic quantum computer, one example of a commercially available Ising processing unit.

NESTA, The NICTA Energy System Test Case Archive

Aug 11, 2016

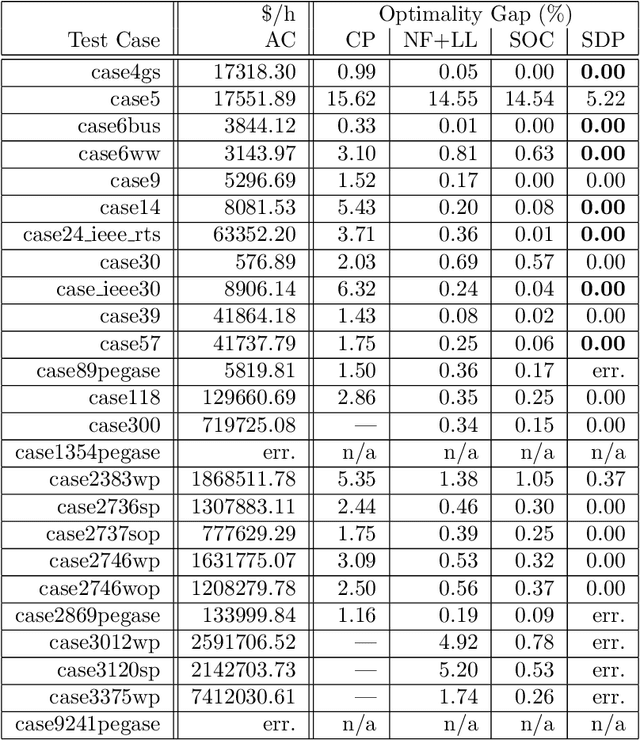

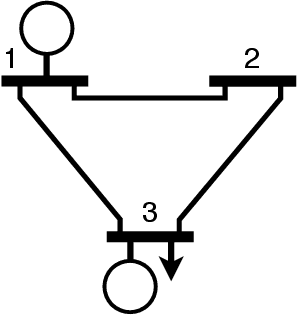

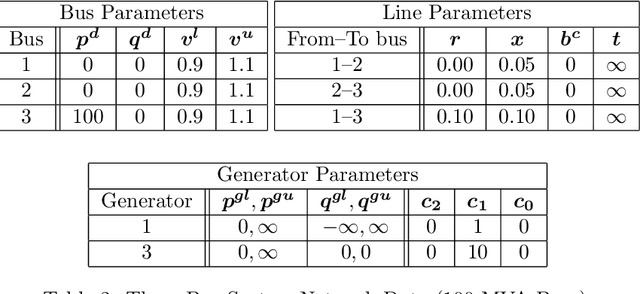

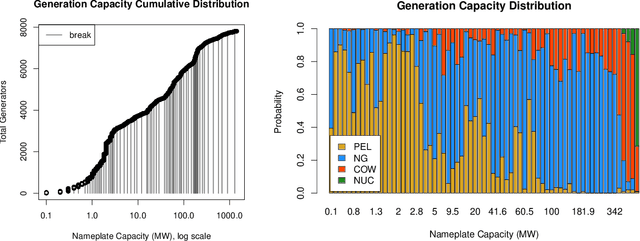

Abstract: In recent years the power systems research community has seen an explosion of work applying operations research techniques to challenging power network optimization problems. Regardless of the application under consideration, all of these works rely on power system test cases for evaluation and validation. However, many of the well-established power system test cases were developed as far back as the 1960s with the aim of testing AC power flow algorithms. It is unclear if these power flow test cases are suitable for power system optimization studies. This report surveys all of the publicly available AC transmission system test cases, to the best of our knowledge, and assesses their suitability for optimization tasks. It finds that many of the traditional test cases are missing key network operation constraints, such as line thermal limits and generator capability curves. To incorporate these missing constraints, data-driven models are developed from a variety of publicly available data sources. The resulting extended test cases form a comprehensive archive, NESTA, for the evaluation and validation of power system optimization algorithms.

A Linear-Programming Approximation of AC Power Flows

Aug 06, 2013

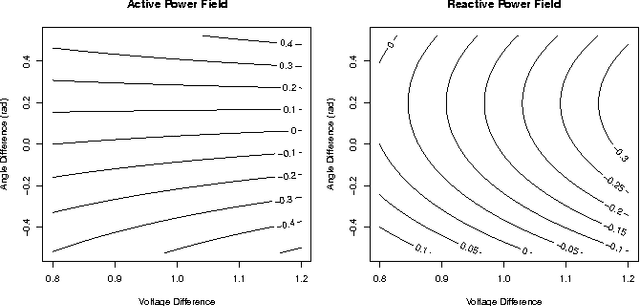

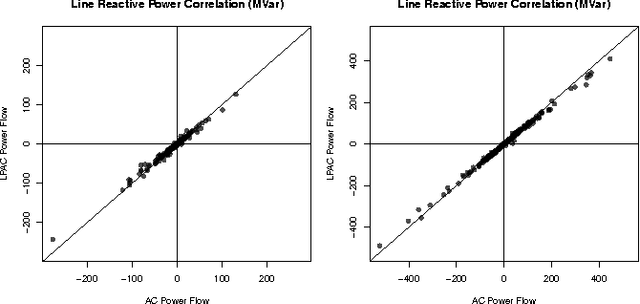

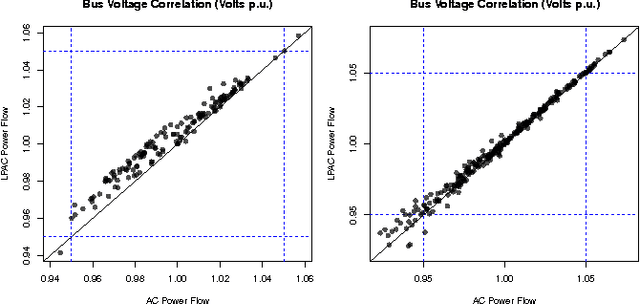

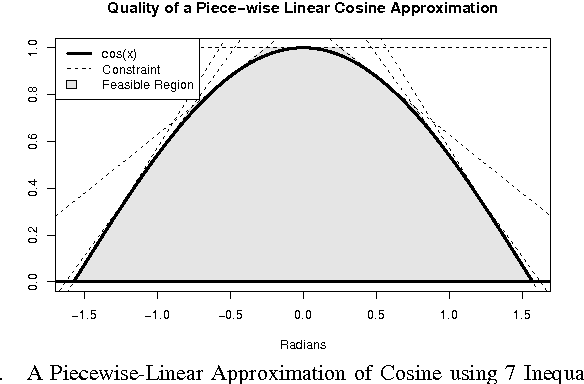

Abstract: Linear active-power-only DC power flow approximations are pervasive in the planning and control of power systems. However, these approximations fail to capture reactive power and voltage magnitudes, both of which are necessary in many applications to ensure voltage stability and AC power flow feasibility. This paper proposes linear-programming models (the LPAC models) that incorporate reactive power and voltage magnitudes in a linear power flow approximation. The LPAC models are built on a convex approximation of the cosine terms in the AC equations, as well as Taylor approximations of the remaining nonlinear terms. Experimental comparisons with AC solutions on a variety of standard IEEE and MatPower benchmarks show that the LPAC models produce accurate values for active and reactive power, phase angles, and voltage magnitudes. The potential benefits of the LPAC models are illustrated on two "proof-of-concept" studies in power restoration and capacitor placement.
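To give a feel for how a cosine term can be handled with linear constraints, the sketch below builds tangent-line cuts for cos(θ) on a symmetric angle range. This is an assumed illustration of one common polyhedral technique, not necessarily the exact LPAC formulation; the function names, the angle `limit`, and the number of `segments` are all hypothetical choices. Because cos is concave on [-π/2, π/2], every tangent line lies on or above the curve there, so the minimum over the cuts is a piecewise-linear upper envelope.

```python
import math


def cosine_tangent_cuts(limit, segments):
    """Tangent lines of cos(theta) at evenly spaced points in [-limit, limit].

    Returns (slope, intercept) pairs such that each line
    slope * theta + intercept >= cos(theta) for theta in [-limit, limit].
    Requires 0 < limit <= pi/2 so that cos is concave on the interval.
    """
    if not 0 < limit <= math.pi / 2:
        raise ValueError("limit must lie in (0, pi/2]")
    if segments < 2:
        raise ValueError("need at least two tangent points")
    step = 2 * limit / (segments - 1)
    cuts = []
    for k in range(segments):
        a = -limit + k * step  # tangent point
        # Tangent at a: cos(a) - sin(a) * (theta - a)
        slope = -math.sin(a)
        intercept = math.cos(a) + a * math.sin(a)
        cuts.append((slope, intercept))
    return cuts


def cosine_upper_envelope(theta, cuts):
    """Tightest value allowed by the cuts: the minimum over all tangent lines."""
    return min(slope * theta + intercept for slope, intercept in cuts)


cuts = cosine_tangent_cuts(limit=math.pi / 3, segments=7)
approx_at_zero = cosine_upper_envelope(0.0, cuts)
```

In a linear program, each pair would become a constraint of the form `c <= slope * theta + intercept` on an auxiliary variable `c` standing in for the cosine; adding more tangent points tightens the envelope at the cost of more constraints.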
