César Hervás-Martínez

Splitting criteria for ordinal decision trees: an experimental study

Dec 18, 2024

Abstract: Ordinal Classification (OC) is a machine learning field that addresses classification tasks where the labels exhibit a natural order. Unlike nominal classification, which treats all classes as equally distinct, OC takes the ordinal relationship into account, producing more accurate and relevant results. This is particularly critical in applications where the magnitude of classification errors has implications. Despite this, OC problems are often tackled using nominal methods, leading to suboptimal solutions. Although decision trees are one of the most popular classification approaches, ordinal tree-based approaches have received less attention when compared to other classifiers. This work conducts an experimental study of tree-based methodologies specifically designed to capture ordinal relationships. A comprehensive survey of ordinal splitting criteria is provided, standardising the notations used in the literature for clarity. Three ordinal splitting criteria, Ordinal Gini (OGini), Weighted Information Gain (WIG), and Ranking Impurity (RI), are compared to the nominal counterparts of the first two (Gini and information gain), by incorporating them into a decision tree classifier. An extensive repository considering 45 publicly available OC datasets is presented, supporting the first experimental comparison of ordinal and nominal splitting criteria using well-known OC evaluation metrics. Statistical analysis of the results highlights OGini as the most effective ordinal splitting criterion to date. Source code, datasets, and results are made available to the research community.

dlordinal: a Python package for deep ordinal classification

Jul 24, 2024

Abstract: dlordinal is a new Python library that unifies many recent deep ordinal classification methodologies available in the literature. Developed using PyTorch as the underlying framework, it implements the top performing state-of-the-art deep learning techniques for ordinal classification problems. Ordinal approaches are designed to leverage the ordering information present in the target variable. Specifically, dlordinal includes loss functions, various output layers, dropout techniques, soft labelling methodologies, and other classification strategies, all of which are appropriately designed to incorporate the ordinal information. Furthermore, as the performance metrics to assess novel proposals in ordinal classification depend on the distance between target and predicted classes in the ordinal scale, suitable ordinal evaluation metrics are also included. dlordinal is distributed under the BSD-3-Clause license and is available at https://github.com/ayrna/dlordinal.

Improving the classification of extreme classes by means of loss regularisation and generalised beta distributions

Jul 17, 2024

Abstract: An ordinal classification problem is one in which the target variable takes values on an ordinal scale. Nowadays, there are many of these problems associated with real-world tasks where it is crucial to accurately classify the extreme classes of the ordinal structure. In this work, we propose a unimodal regularisation approach that can be applied to any loss function to improve the classification performance of the first and last classes while maintaining good performance for the remainder. The proposed methodology is tested on six datasets with different numbers of classes, and compared with other unimodal regularisation methods in the literature. In addition, performance in the extreme classes is compared using a new metric that takes into account their sensitivities. Experimental results and statistical analysis show that the proposed methodology obtains a superior average performance considering different metrics. The results for the proposed metric show that the generalised beta distribution generally improves classification performance in the extreme classes. At the same time, the other five nominal and ordinal metrics considered show that the overall performance is aligned with the performance of previous alternatives.

A two-stage algorithm in evolutionary product unit neural networks for classification

Feb 09, 2024

Abstract: This paper presents a procedure to add broader diversity at the beginning of the evolutionary process. It consists of creating two initial populations with different parameter settings, evolving them for a small number of generations, selecting the best individuals from each population in the same proportion and combining them to constitute a new initial population. At this point the main loop of an evolutionary algorithm is applied to the new population. The results show that our proposal considerably improves the efficiency of previous methodologies and, significantly, their efficacy on most of the data sets. We have carried out our experimentation on twelve data sets from the UCI repository and two complex real-world problems which differ in their number of instances, features and classes.

* 12 pages, 5 figures
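
The two-stage procedure described in this abstract can be sketched roughly as follows. This is only an illustration under stated assumptions: the toy objective, the `(mu + mu)` loop, the mutation scales, and every function name here are hypothetical and are not taken from the paper, which works with product unit neural networks.

```python
import random

def fitness(individual):
    # Toy objective (assumption): maximise the negative sphere function.
    return -sum(x * x for x in individual)

def random_population(size, dim, spread, rng):
    # Individuals are plain lists of floats drawn uniformly in [-spread, spread].
    return [[rng.uniform(-spread, spread) for _ in range(dim)] for _ in range(size)]

def evolve(population, generations, mutation_scale, rng):
    """Simple elitist loop: mutate every individual, keep the best of parents + offspring."""
    size = len(population)
    for _ in range(generations):
        offspring = [[x + rng.gauss(0.0, mutation_scale) for x in ind] for ind in population]
        population = sorted(population + offspring, key=fitness, reverse=True)[:size]
    return population

def two_stage_initial_population(size, dim, rng):
    # Stage 1: two populations with different (hypothetical) settings, evolved briefly.
    pop_a = evolve(random_population(size, dim, 5.0, rng), 5, 1.0, rng)
    pop_b = evolve(random_population(size, dim, 1.0, rng), 5, 0.1, rng)
    # Take the best individuals of each population in the same proportion (half each).
    half = size // 2
    best_a = sorted(pop_a, key=fitness, reverse=True)[:half]
    best_b = sorted(pop_b, key=fitness, reverse=True)[:size - half]
    return best_a + best_b

rng = random.Random(0)
initial = two_stage_initial_population(size=20, dim=3, rng=rng)
# Stage 2: the main evolutionary loop runs on the combined population.
final = evolve(initial, generations=50, mutation_scale=0.2, rng=rng)
best = max(final, key=fitness)
```

Because the loop keeps parents alongside offspring, the best fitness in `final` can never be worse than the best fitness in `initial`.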

Convolutional and Deep Learning based techniques for Time Series Ordinal Classification

Jun 16, 2023

Abstract: Time Series Classification (TSC) covers the supervised learning problem where input data is provided in the form of series of values observed through repeated measurements over time, and whose objective is to predict the category to which they belong. When the class values are ordinal, classifiers that take this into account can perform better than nominal classifiers. Time Series Ordinal Classification (TSOC) is the field covering this gap, and it remains unexplored in the literature. There is a wide range of time series problems showing an ordered label structure, and TSC techniques that ignore the order relationship discard useful information. Hence, this paper presents a first benchmarking of TSOC methodologies, exploiting the ordering of the target labels to boost the performance of the current TSC state of the art. Both convolutional- and deep learning-based methodologies (among the best performing alternatives for nominal TSC) are adapted for TSOC. For the experiments, a selection of 18 ordinal problems from two well-known archives has been made. In this way, this paper contributes to the establishment of the state of the art in TSOC. The results obtained by ordinal versions are found to be significantly better than current nominal TSC techniques in terms of ordinal performance metrics, outlining the importance of considering the ordering of the labels when dealing with this kind of problem.

A hybrid feature learning approach based on convolutional kernels for ATM fault prediction using event-log data

May 17, 2023

Abstract: Predictive Maintenance (PdM) methods aim to facilitate the scheduling of maintenance work before equipment failure. In this context, detecting early faults in automated teller machines (ATMs) has become increasingly important since these machines are susceptible to various types of unpredictable failures. ATMs track execution status by generating massive event-log data that collect system messages unrelated to the failure event. Predicting machine failure based on event logs poses additional challenges, mainly in extracting features that might represent sequences of events indicating impending failures. Accordingly, feature learning approaches are currently being used in PdM, where informative features are learned automatically from minimally processed sensor data. However, it remains unclear how these approaches can be exploited for deriving relevant features from event-log-based data. To fill this gap, we present a predictive model based on convolutional kernels (MiniROCKET and HYDRA) to extract features from the original event-log data and a linear classifier to classify the sample based on the learned features. The proposed methodology is applied to a significant real-world collected dataset. Experimental results show that one of the proposed convolutional kernels (i.e. HYDRA) exhibited the best classification performance (accuracy of 0.759 and AUC of 0.693). In addition, statistical analysis revealed that the HYDRA and MiniROCKET models significantly outperform one of the established state-of-the-art approaches in time series classification (InceptionTime), and three non-temporal ML methods from the literature. The predictive model was integrated into a container-based decision support system to support operators in the timely maintenance of ATMs.

An ordinal CNN approach for the assessment of neurological damage in Parkinson's disease patients

May 31, 2021

Abstract: 3D image scans are an assessment tool for neurological damage in Parkinson's disease (PD) patients. This diagnosis process can be automated to help medical staff through Decision Support Systems (DSSs), and Convolutional Neural Networks (CNNs) are good candidates, because they are effective when applied to spatial data. This paper proposes a 3D CNN ordinal model for assessing the level of neurological damage in PD patients. Given that CNNs need large datasets to achieve acceptable performance, a data augmentation method is adapted to work with spatial data. We consider the Ordinal Graph-based Oversampling via Shortest Paths (OGO-SP) method, which applies a gamma probability distribution for inter-class data generation. A modification of OGO-SP is proposed, the OGO-SP-$\beta$ algorithm, which applies the beta distribution for generating synthetic samples in the inter-class region, a better suited distribution when compared to gamma. The evaluation of the different methods is based on a novel 3D image dataset provided by the Hospital Universitario 'Reina Sofía' (Córdoba, Spain). We show how the ordinal methodology improves the performance with respect to the nominal one, and how OGO-SP-$\beta$ yields better performance than OGO-SP.

Deep ordinal classification based on cumulative link models

May 27, 2019

Abstract: This paper proposes a deep convolutional neural network model for ordinal regression by considering a family of probabilistic ordinal link functions in the output layer. The link functions are those used for cumulative link models, which are traditional statistical linear models based on projecting each pattern into a 1-dimensional space. A set of ordered thresholds splits this space into the different classes of the problem. In our case, the projections are estimated by a non-linear deep neural network. To further improve the results, we combine these ordinal models with a loss function that takes into account the distance between the categories, based on the weighted Kappa index. Three different link functions are studied in the experimental study, and the results are contrasted with statistical analysis. The experiments are run on two different ordinal classification problems, and the statistical tests confirm that these models improve the results of a nominal model and outperform other proposals considered in the literature.
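
To make the output layer described above more concrete, the following is a minimal sketch of how a cumulative link model turns a 1-dimensional projection and a set of ordered thresholds into class probabilities. It assumes the logit link, under which P(y <= k) = sigmoid(b_k - f(x)); the abstract does not say which three links the paper studies, and the function names and example values are hypothetical. In the paper the projection comes from a deep network, whereas here it is just a number.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def clm_probabilities(projection, thresholds):
    """Class probabilities of a cumulative link model with a logit link.

    projection: the 1-D projection f(x) of a pattern (a plain float here).
    thresholds: strictly increasing list of Q - 1 thresholds for Q classes.
    """
    if any(b >= c for b, c in zip(thresholds, thresholds[1:])):
        raise ValueError("thresholds must be strictly increasing")
    # Cumulative probabilities P(y <= k) for the first Q - 1 classes, then 1 for the last.
    cumulative = [sigmoid(b - projection) for b in thresholds] + [1.0]
    probs = []
    previous = 0.0
    for c in cumulative:
        # P(y = k) is the difference between consecutive cumulative probabilities.
        probs.append(c - previous)
        previous = c
    return probs

# Hypothetical example: four classes, projection between the 2nd and 3rd thresholds.
probs = clm_probabilities(0.3, [-1.0, 0.0, 1.5])
predicted_class = max(range(len(probs)), key=probs.__getitem__)
```

Since the thresholds are ordered, the resulting probabilities are positive and sum to one.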

Time series clustering based on the characterisation of segment typologies

Oct 27, 2018

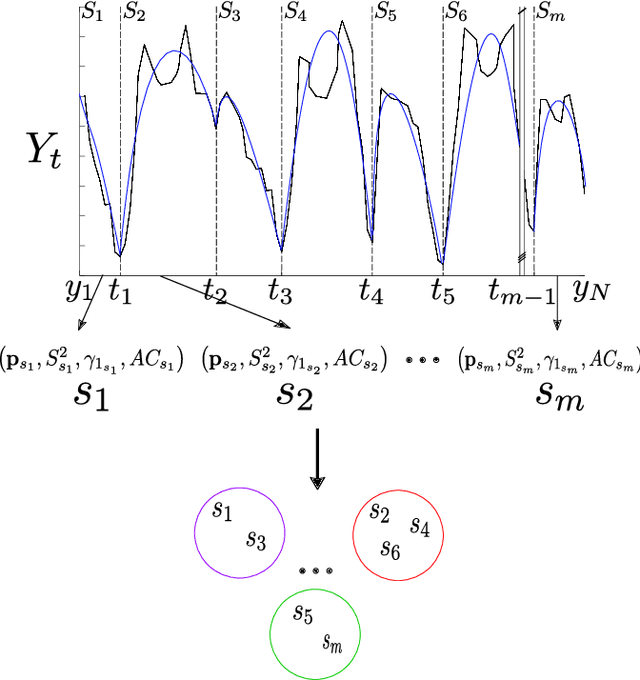

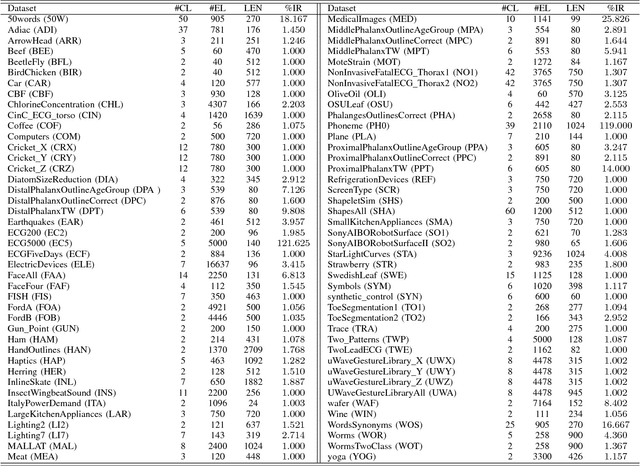

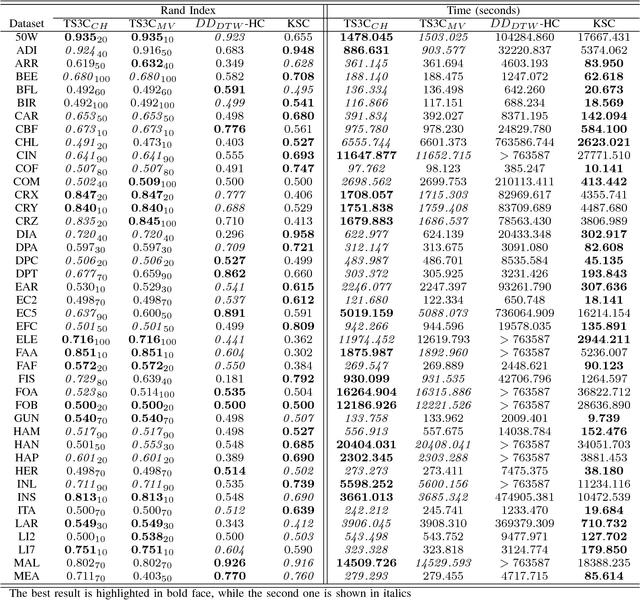

Abstract: Time series clustering is the process of grouping time series with respect to their similarity or characteristics. Previous approaches usually combine a specific distance measure for time series and a standard clustering method. However, these approaches do not take the similarity of the different subsequences of each time series into account, which can be used to better compare the time series objects of the dataset. In this paper, we propose a novel technique of time series clustering based on two clustering stages. In a first step, a least squares polynomial segmentation procedure is applied to each time series, which is based on a growing window technique that returns different-length segments. Then, all the segments are projected into the same dimensional space, based on the coefficients of the model that approximates the segment and a set of statistical features. After mapping, a first hierarchical clustering phase is applied to all mapped segments, returning groups of segments for each time series. These clusters are used to represent all time series in the same dimensional space, after defining another specific mapping process. In a second and final clustering stage, all the time series objects are grouped. We consider internal clustering quality to automatically adjust the main parameter of the algorithm, which is an error threshold for the segmentation. The results obtained on 84 datasets from the UCR Time Series Classification Archive have been compared against two state-of-the-art methods, showing that the performance of this methodology is very promising.
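
The growing window segmentation step mentioned in this abstract might look roughly like the sketch below. It is a simplification under stated assumptions: the fit is a degree-1 least-squares line (the paper refers to polynomials in general), the error measure is the root mean squared error, and the names `linear_fit_error`, `growing_window_segments`, `max_error`, and `min_length` are hypothetical.

```python
def linear_fit_error(values):
    """Least-squares line over indices 0..n-1; returns (slope, intercept, rmse)."""
    n = len(values)
    mean_t = (n - 1) / 2.0
    mean_y = sum(values) / n
    var_t = sum((t - mean_t) ** 2 for t in range(n))
    cov = sum((t - mean_t) * (y - mean_y) for t, y in enumerate(values))
    slope = cov / var_t if var_t > 0 else 0.0
    intercept = mean_y - slope * mean_t
    sse = sum((y - (slope * t + intercept)) ** 2 for t, y in enumerate(values))
    return slope, intercept, (sse / n) ** 0.5

def growing_window_segments(series, max_error, min_length=2):
    """Return (start, end) index pairs, end exclusive, that cover the series."""
    segments = []
    start = 0
    n = len(series)
    while start < n:
        end = min(start + min_length, n)
        # Grow the window one point at a time while the fit error stays within the threshold.
        while end < n and linear_fit_error(series[start:end + 1])[2] <= max_error:
            end += 1
        segments.append((start, end))
        start = end
    return segments

# Hypothetical series: a rising ramp followed by a falling ramp.
series = [0, 1, 2, 3, 4, 3, 2, 1, 0]
segments = growing_window_segments(series, max_error=0.1)
# Fit coefficients of each segment, which could serve as part of its feature vector.
features = [linear_fit_error(series[s:e])[:2] for s, e in segments]
```

The segments come out with different lengths, and the slope and intercept of each fit give every segment a fixed-size description regardless of its length, in the spirit of the mapping the abstract describes.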
