Brian Wilcox

Verde: Verification via Refereed Delegation for Machine Learning Programs

Feb 26, 2025

Abstract: Machine learning programs, such as those performing inference, fine-tuning, and training of LLMs, are commonly delegated to untrusted compute providers. To provide correctness guarantees for the client, we propose adapting the cryptographic notion of refereed delegation to the machine learning setting. This approach enables a computationally limited client to delegate a program to multiple untrusted compute providers, with a guarantee of obtaining the correct result if at least one of them is honest. Refereed delegation of ML programs poses two technical hurdles: (1) an arbitration protocol to resolve disputes when compute providers disagree on the output, and (2) the ability to bitwise reproduce ML programs across different hardware setups. For (1), we design Verde, a dispute arbitration protocol that efficiently handles the large scale and graph-based computational model of modern ML programs. For (2), we build RepOps (Reproducible Operators), a library that eliminates hardware "non-determinism" by controlling the order of floating point operations performed on all hardware. Our implementation shows that refereed delegation achieves both strong guarantees for clients and practical overheads for compute providers.
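The point about controlling the order of floating point operations can be illustrated with a small sketch. Floating point addition is not associative, so the same values summed in a different order can give a different bit pattern, which is why bitwise reproducibility needs a fixed operation order. The function names below (`sum_left_to_right`, `sum_fixed_tree`) are hypothetical and chosen only for illustration; this is not the RepOps API, just a minimal Python example of the underlying idea.

```python
def sum_left_to_right(values):
    """Accumulate strictly in list order; the result depends on that order."""
    total = 0.0
    for v in values:
        total += v
    return total


def sum_fixed_tree(values):
    """Sum with a balanced reduction tree whose shape depends only on len(values).

    Assumption for illustration: if every machine uses this same tree shape,
    IEEE 754 addition gives the same bits everywhere, regardless of how the
    work would otherwise be scheduled.
    """
    if len(values) == 0:
        return 0.0
    if len(values) == 1:
        return values[0]
    mid = len(values) // 2
    return sum_fixed_tree(values[:mid]) + sum_fixed_tree(values[mid:])


# Same three numbers, two groupings, two different results.
a, b, c = 0.1, 0.2, 0.3
grouping_differs = ((a + b) + c) != (a + (b + c))

# Reordering the inputs changes a sequential sum as well.
order_one = sum_left_to_right([1e16, 1.0, -1e16])
order_two = sum_left_to_right([1e16, -1e16, 1.0])

# A fixed reduction order is repeatable for the same input.
data = [0.1 * i for i in range(1000)]
repeatable = sum_fixed_tree(data) == sum_fixed_tree(list(data))
```

Two providers that reduce the same tensor in different orders could therefore disagree in the last bits even when both are honest, which is the situation a fixed operation order is meant to rule out.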

Deep Active Lesion Segmentation

Aug 21, 2019

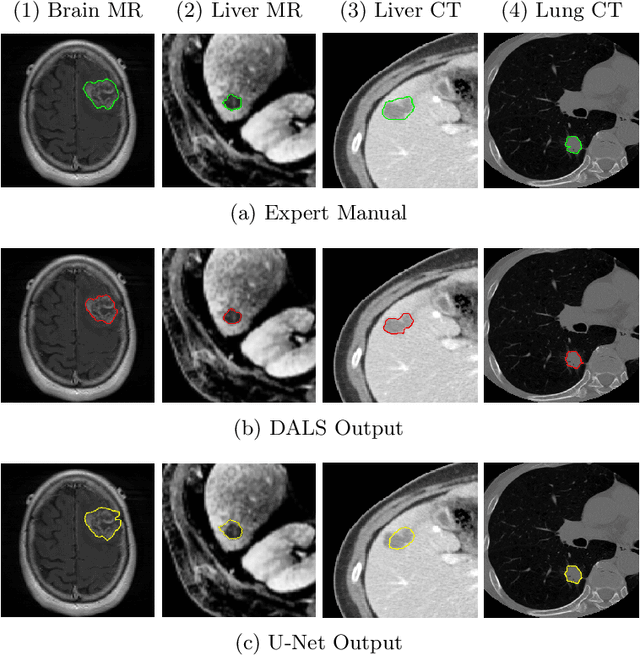

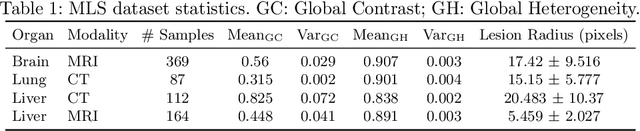

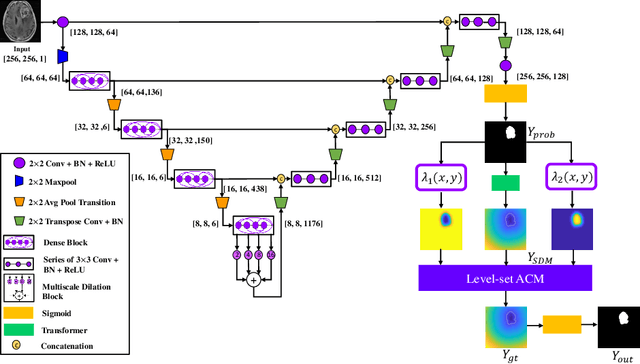

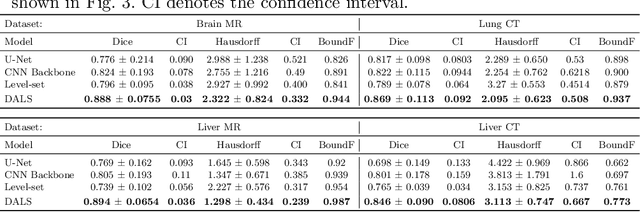

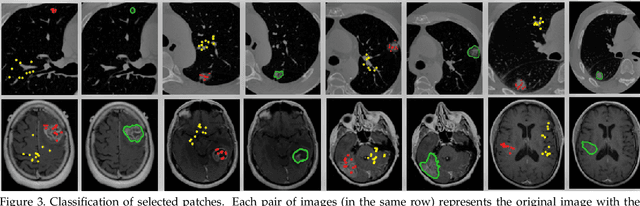

Abstract: Lesion segmentation is an important problem in computer-assisted diagnosis that remains challenging due to the prevalence of low-contrast, irregular boundaries that are unamenable to shape priors. We introduce Deep Active Lesion Segmentation (DALS), a fully automated segmentation framework that leverages the powerful nonlinear feature extraction abilities of fully Convolutional Neural Networks (CNNs) and the precise boundary delineation abilities of Active Contour Models (ACMs). Our DALS framework benefits from an improved level-set ACM formulation with a per-pixel-parameterized energy functional and a novel multiscale encoder-decoder CNN that learns an initialization probability map along with parameter maps for the ACM. We evaluate our lesion segmentation model on a new Multiorgan Lesion Segmentation (MLS) dataset that contains images of various organs, including brain, liver, and lung, across two imaging modalities, MR and CT. Our results demonstrate favorable performance compared to competing methods, especially for small training datasets.

* Accepted to Machine Learning in Medical Imaging (MLMI 2019)

Self-Attention Capsule Networks for Image Classification

Apr 29, 2019

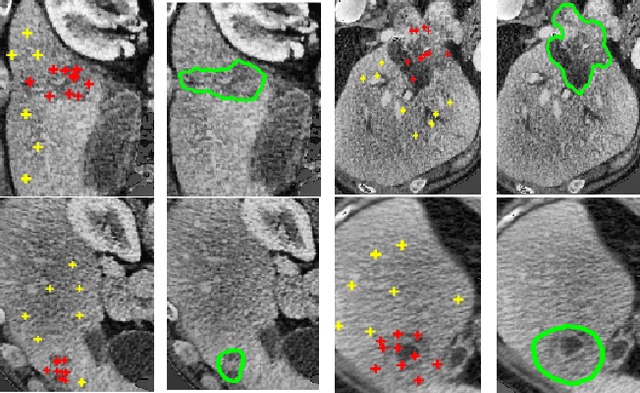

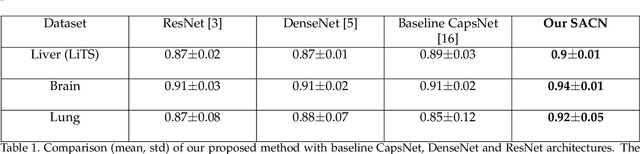

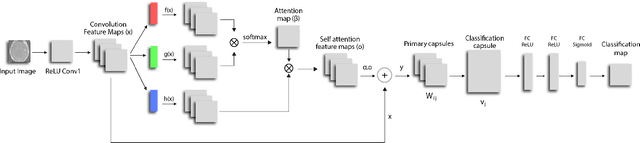

Abstract: We propose a novel architecture for image classification, called Self-Attention Capsule Networks (SACN). SACN is the first model that incorporates the Self-Attention mechanism as an integral layer within the Capsule Network (CapsNet). While the Self-Attention mechanism selects the more dominant image regions to focus on, the CapsNet analyzes the relevant features and their spatial correlations inside these regions only. The features are extracted in the convolutional layer. Then, the Self-Attention layer learns to suppress irrelevant regions based on feature analysis and highlights salient features useful for a specific task. The attention map is then fed into the CapsNet primary layer, which is followed by a classification layer. The proposed SACN model was designed to use a relatively shallow CapsNet architecture to reduce computational load, and it compensates for the absence of a deeper network by using the Self-Attention module to significantly improve the results. The proposed Self-Attention CapsNet architecture was extensively evaluated on five different datasets, mainly on three different medical sets, in addition to the natural MNIST and SVHN. The model was able to classify images and their patches with diverse and complex backgrounds better than the baseline CapsNet. As a result, the proposed Self-Attention CapsNet significantly improved classification performance within and across different datasets and outperformed the baseline CapsNet not only in classification accuracy but also in robustness.
