Brenna D. Argall

Learning to Control Complex Robots Using High-Dimensional Interfaces: Preliminary Insights

Oct 09, 2021

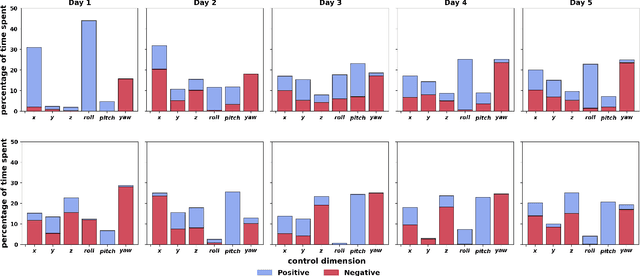

Abstract: Human body motions can be captured as a high-dimensional continuous signal using motion sensor technologies. The resulting data can be surprisingly rich in information, even when captured from persons with limited mobility. In this work, we explore the use of limited upper-body motions, captured via motion sensors, as inputs to control a 7-degree-of-freedom assistive robotic arm. It is possible that even dense sensor signals lack the salient information and independence necessary for reliable high-dimensional robot control. As the human learns over time in the context of this limitation, intelligence on the robot can be leveraged to better identify key learning challenges, provide useful feedback, and support individuals until the challenges are managed. In this short paper, we examine two uninjured participants' data from an ongoing study, to extract preliminary results and share insights. We observe opportunities for robot intelligence to step in, including the identification of inconsistencies in time spent across all control dimensions, asymmetries in individual control dimensions, and user progress in learning. Machine reasoning about these situations may facilitate novel interface learning in the future.

Characterization of Assistive Robot Arm Teleoperation: A Preliminary Study to Inform Shared Control

Jul 31, 2020

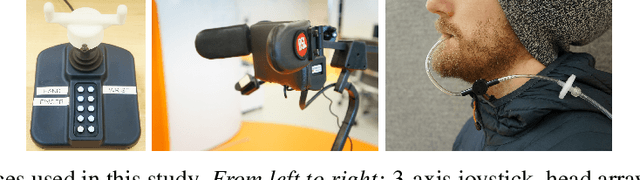

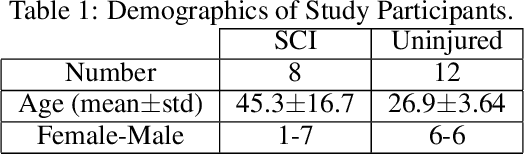

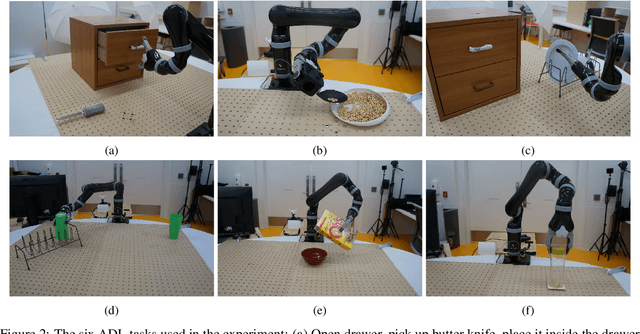

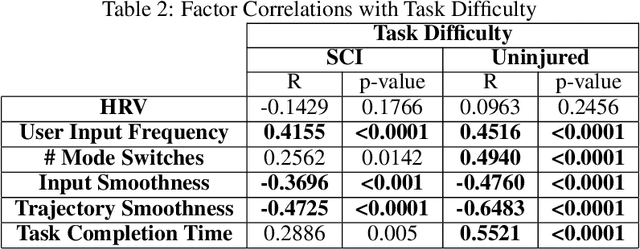

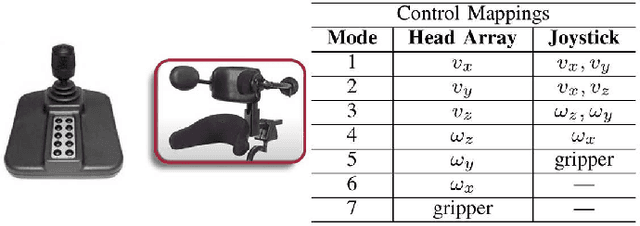

Abstract: Assistive robotic devices can increase the independence of individuals with motor impairments. However, each person is unique in their level of injury, preferences, and skills, which moreover can change over time. Further, the amount of assistance required can vary throughout the day due to pain or fatigue, or over longer periods due to rehabilitation, debilitating conditions, or aging. Therefore, in order to become an effective team member, the assistive machine should be able to learn from and adapt to the human user. To do so, we need to be able to characterize the user's control commands to determine when and how autonomy should change to best assist the user. We perform a 20-person pilot study in order to establish a set of meaningful performance measures which can be used to characterize the user's control signals and as cues for the autonomy to modify the level and amount of assistance. Our study includes 8 spinal cord injured and 12 uninjured individuals. The results unveil a set of objective, runtime-computable metrics that are correlated with user-perceived task difficulty, and thus could be used by an autonomy system when deciding whether assistance is required. The results further show that metrics which evaluate the user interaction with the robotic device, robot execution, and the perceived task difficulty show differences between the spinal cord injured and uninjured groups, and are affected by the type of control interface used. The results will be used to develop adaptable, user-centered, and individually customized shared-control algorithms.

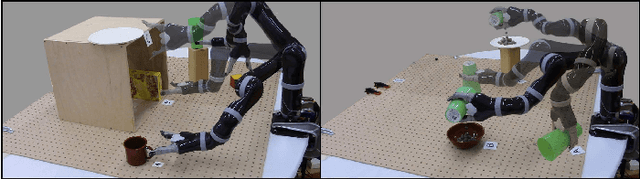

Customized Handling of Unintended Interface Operation in Assistive Robots

Jul 04, 2020

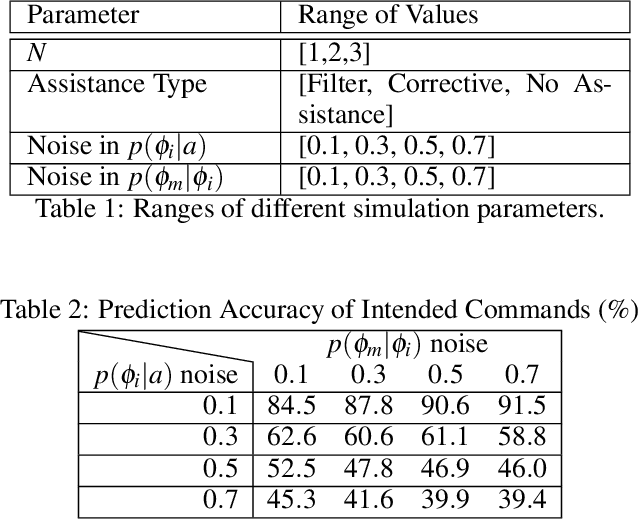

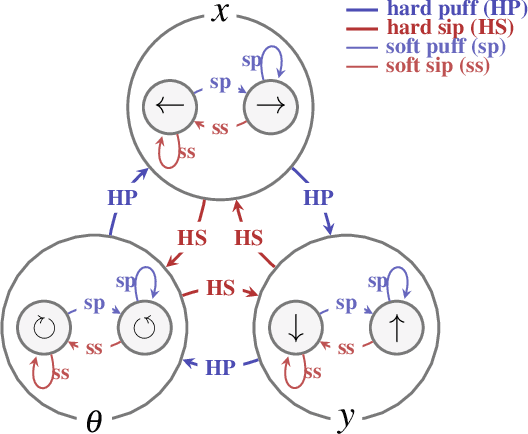

Abstract: Teleoperation of physically assistive machines is usually facilitated by interfaces that are low-dimensional and have unique physical mechanisms for their activation. Accidental deviations from intended user input commands due to motor limitations can potentially affect user satisfaction and task performance. In this paper, we present an assistance system that reasons about a human's intended actions during robot teleoperation in order to provide appropriate corrections for unintended behavior. We model the human's physical interaction with a control interface during robot teleoperation using the framework of dynamic Bayesian networks, in which we distinguish between intended and measured physical actions explicitly. By reasoning over the unobserved intentions using model-based inference techniques, our assistive system provides customized corrections on a user's issued commands. We present results from (1) a simulation-based study in which we validate our algorithm and (2) a 10-person human subject study in which we evaluate the performance of the proposed assistance paradigms. Our results suggest that (a) the corrective assistance paradigm helped to significantly reduce objective task effort as measured by task completion time and number of mode switches and (b) the assistance paradigms helped to reduce cognitive workload and user frustration and improve overall satisfaction.

Active Intent Disambiguation for Shared Control Robots

May 07, 2020

Abstract: Assistive shared-control robots have the potential to transform the lives of millions of people afflicted with severe motor impairments. The usefulness of shared-control robots typically relies on the underlying autonomy's ability to infer the user's needs and intentions, and the ability to do so unambiguously is often a limiting factor for providing appropriate assistance confidently and accurately. The contributions of this paper are four-fold. First, we introduce the idea of intent disambiguation via control mode selection, and present a mathematical formalism for it. Second, we develop a control mode selection algorithm which selects the control mode in which the user-initiated motion helps the autonomy to maximally disambiguate user intent. Third, we present a pilot study with eight subjects to evaluate the efficacy of the disambiguation algorithm. Our results suggest that the disambiguation system (a) helps to significantly reduce task effort, as measured by number of button presses, and (b) is of greater utility for more limited control interfaces and more complex tasks. We also observe that (c) subjects demonstrated a wide range of disambiguation request behaviors, with the common thread of concentrating requests early in the execution. As our last contribution, we introduce a novel field-theoretic approach to intent inference inspired by dynamic field theory that works in tandem with the disambiguation scheme.
