Customized Handling of Unintended Interface Operation in Assistive Robots


Jul 04, 2020

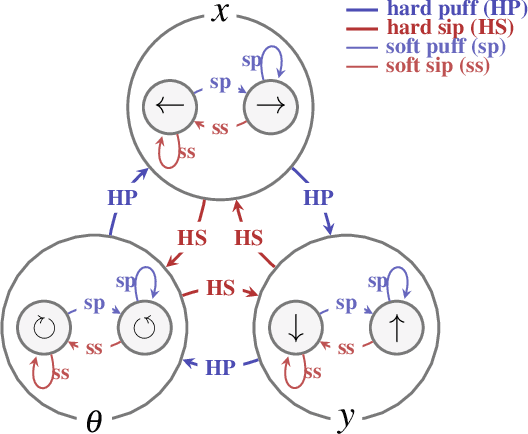

Teleoperation of physically assistive machines is usually facilitated by interfaces that are low-dimensional and have unique physical mechanisms for their activation. Accidental deviations from intended user input commands, caused by motor limitations, can affect user satisfaction and task performance. In this paper, we present an assistance system that reasons about a human's intended actions during robot teleoperation in order to provide appropriate corrections for unintended behavior. We model the human's physical interaction with a control interface during robot teleoperation using the framework of dynamic Bayesian networks, in which we explicitly distinguish between intended and measured physical actions. By reasoning over the unobserved intentions using model-based inference techniques, our assistive system provides customized corrections to a user's issued commands. We present results from (1) a simulation-based study in which we validate our algorithm and (2) a 10-person human-subject study in which we evaluate the performance of the proposed assistance paradigms. Our results suggest that (a) the corrective assistance paradigm significantly reduced objective task effort, as measured by task completion time and number of mode switches, and (b) the assistance paradigms helped reduce cognitive workload and user frustration and improved overall satisfaction.
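The idea of separating an unobserved intended action from the measured action, and inferring the former from the latter, can be illustrated with the simplest dynamic Bayesian network: a two-slice hidden Markov model filtered with the forward algorithm. The sketch below is not the authors' model. The command set (`soft_puff`, `hard_puff`), the prior, the "intent persistence" transition matrix, and the per-user emission matrix `P(measured | intended)` are all hypothetical values chosen for illustration. A correction is taken to be the maximum a posteriori intended command at each step.

```python
from typing import List, Sequence

# Hypothetical two-command interface (illustrative only, not from the paper).
COMMANDS = ["soft_puff", "hard_puff"]

# Hypothetical model parameters.
PRIOR = [0.5, 0.5]                      # P(intended_1)
TRANSITION = [[0.9, 0.1],               # P(intended_t = j | intended_{t-1} = i)
              [0.1, 0.9]]               # intentions tend to persist
EMISSION = [[0.7, 0.3],                 # P(measured = k | intended = i); this user
            [0.1, 0.9]]                 # often overshoots a soft puff into a hard one


def forward_filter(measured: Sequence[int],
                   prior: Sequence[float],
                   transition: Sequence[Sequence[float]],
                   emission: Sequence[Sequence[float]]) -> List[List[float]]:
    """Return the filtered posterior P(intended_t | measured_1..t) for each step t."""
    n = len(prior)
    belief = list(prior)
    posteriors = []
    for t, obs in enumerate(measured):
        if t > 0:
            # Predict: propagate the belief through the intent transition model.
            belief = [sum(belief[i] * transition[i][j] for i in range(n))
                      for j in range(n)]
        # Update: weight each intended command by how likely it is
        # to have produced the measured command.
        belief = [belief[j] * emission[j][obs] for j in range(n)]
        total = sum(belief)
        belief = [b / total for b in belief]
        posteriors.append(belief)
    return posteriors


def correct_commands(measured: Sequence[int],
                     prior: Sequence[float],
                     transition: Sequence[Sequence[float]],
                     emission: Sequence[Sequence[float]]) -> List[int]:
    """Replace each measured command with the MAP estimate of the intended command."""
    posteriors = forward_filter(measured, prior, transition, emission)
    return [max(range(len(p)), key=p.__getitem__) for p in posteriors]


if __name__ == "__main__":
    measured = [0, 0, 1, 0]  # soft, soft, hard (likely accidental), soft
    corrected = correct_commands(measured, PRIOR, TRANSITION, EMISSION)
    print([COMMANDS[c] for c in corrected])
    # → ['soft_puff', 'soft_puff', 'soft_puff', 'soft_puff']
```

Here the isolated `hard_puff` is reinterpreted as an overshoot because the belief in a sustained soft-puff intention, combined with this user's 30% overshoot rate, outweighs the evidence from a single measurement. One plausible way to make such corrections "customized" is to fit the emission matrix per user, for example from calibration data. That is an assumption of this sketch, not a detail given in the abstract.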
