Ferdinando A. Mussa-Ivaldi

Learning to Control Complex Robots Using High-Dimensional Interfaces: Preliminary Insights

Oct 09, 2021

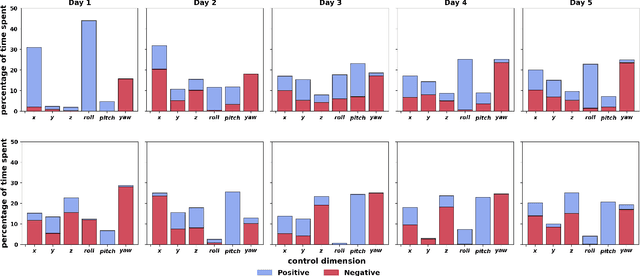

Abstract: Human body motions can be captured as a high-dimensional continuous signal using motion sensor technologies. The resulting data can be surprisingly rich in information, even when captured from persons with limited mobility. In this work, we explore the use of limited upper-body motions, captured via motion sensors, as inputs to control a 7-degree-of-freedom assistive robotic arm. It is possible that even dense sensor signals lack the salient information and independence necessary for reliable high-dimensional robot control. As the human learns over time in the context of this limitation, intelligence on the robot can be leveraged to better identify key learning challenges, provide useful feedback, and support individuals until the challenges are managed. In this short paper, we examine data from two uninjured participants in an ongoing study to extract preliminary results and share insights. We observe opportunities for robot intelligence to step in, including the identification of inconsistencies in time spent across all control dimensions, asymmetries in individual control dimensions, and user progress in learning. Machine reasoning about these situations may facilitate novel interface learning in the future.

Controlling wheelchairs by body motions: A learning framework for the adaptive remapping of space

Jul 27, 2011

Abstract: Learning to operate a vehicle is generally accomplished by forming a new cognitive map between the body motions and extrapersonal space. Here, we consider the challenge of remapping movement-to-space representations in survivors of spinal cord injury, for the control of powered wheelchairs. Our goal is to facilitate this remapping by developing interfaces between residual body motions and navigational commands that exploit the degrees of freedom that disabled individuals are most capable of coordinating. We present a new framework for allowing spinal cord injured persons to control powered wheelchairs through signals derived from their residual mobility. The main novelty of this approach lies in substituting the more common joystick controllers of powered wheelchairs with a sensor shirt. This allows the whole upper body of the user to operate as an adaptive joystick. Considerations about learning and risks have led us to develop a safe testing environment in 3D Virtual Reality. A Personal Augmented Reality Immersive System (PARIS) allows us to analyse learning skills and provide users with adequate training to control a simulated wheelchair through the signals generated by body motions in a safe environment. We provide a description of the basic theory, of the development phases, and of the operation of the complete system. We also present preliminary results illustrating the processing of the data and supporting the feasibility of this approach.
