Temesgen Gebrekristos

Learning to Control Complex Robots Using High-Dimensional Interfaces: Preliminary Insights

Oct 09, 2021

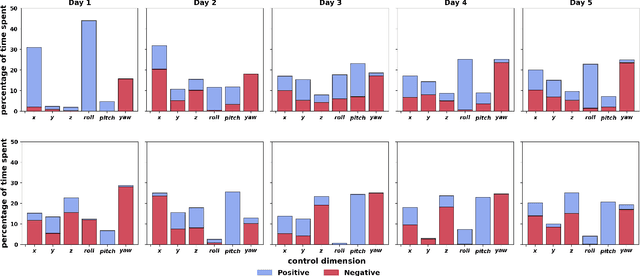

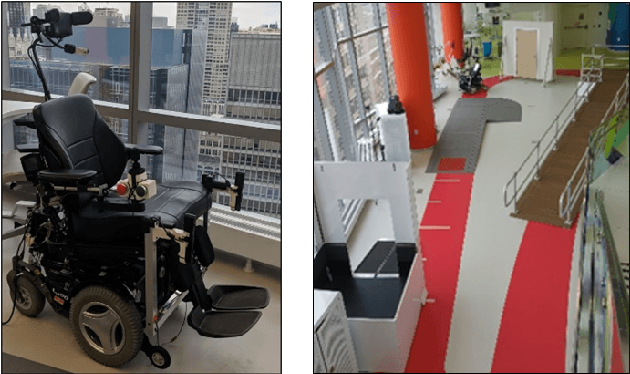

Abstract: Human body motions can be captured as a high-dimensional continuous signal using motion sensor technologies. The resulting data can be surprisingly rich in information, even when captured from persons with limited mobility. In this work, we explore the use of limited upper-body motions, captured via motion sensors, as inputs to control a 7-degree-of-freedom assistive robotic arm. It is possible that even dense sensor signals lack the salient information and independence necessary for reliable high-dimensional robot control. As the human learns over time in the context of this limitation, intelligence on the robot can be leveraged to better identify key learning challenges, provide useful feedback, and support individuals until the challenges are managed. In this short paper, we examine two uninjured participants' data from an ongoing study, to extract preliminary results and share insights. We observe opportunities for robot intelligence to step in, including the identification of inconsistencies in time spent across all control dimensions, asymmetries in individual control dimensions, and user progress in learning. Machine reasoning about these situations may facilitate novel interface learning in the future.

An Analysis of Human-Robot Information Streams to Inform Dynamic Autonomy Allocation

Aug 03, 2021

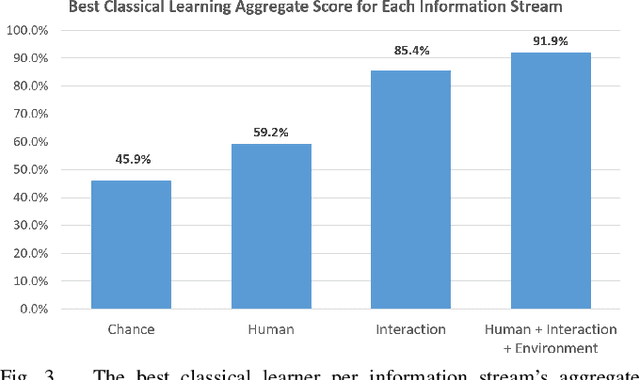

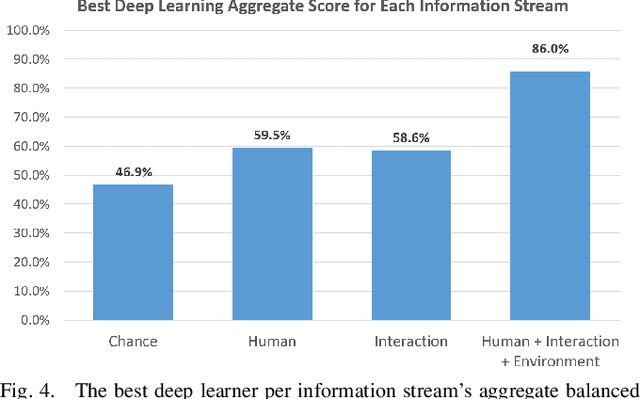

Abstract: A dynamic autonomy allocation framework automatically shifts how much control lies with the human versus the robotics autonomy, for example based on factors such as environmental safety or user preference. To investigate which factors should drive dynamic autonomy allocation, we perform a human subject study to collect ground truth data that shifts between levels of autonomy during shared-control robot operation. Information streams from the human, the interaction between the human and the robot, and the environment are analyzed. Machine learning methods -- both classical and deep learning -- are trained on this data. An analysis of information streams from the human-robot team suggests that features capturing the interaction between the human and the robotics autonomy are the most informative in predicting when to shift autonomy levels. Even the addition of data from the environment does little to improve upon this predictive power. The features learned by deep networks, in comparison to the hand-engineered features, prove variable in their ability to represent shift-relevant information. This work demonstrates the classification power of human-only and human-robot interaction information streams for use in the design of shared-control frameworks, and provides insights into the comparative utility of various data streams and methods to extract shift-relevant information from those data.
