Christopher X. Miller

An Analysis of Human-Robot Information Streams to Inform Dynamic Autonomy Allocation

Aug 03, 2021

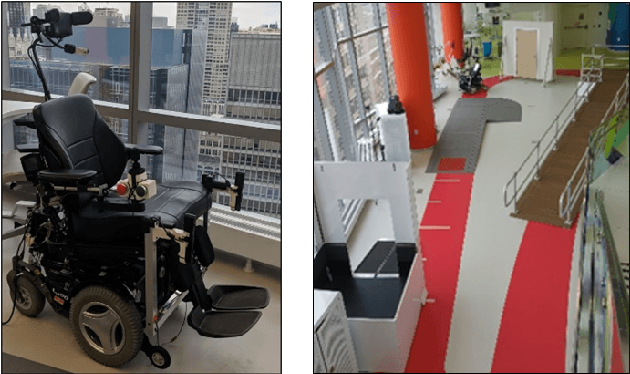

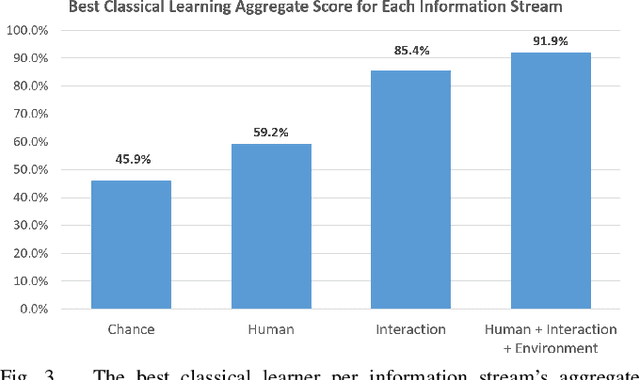

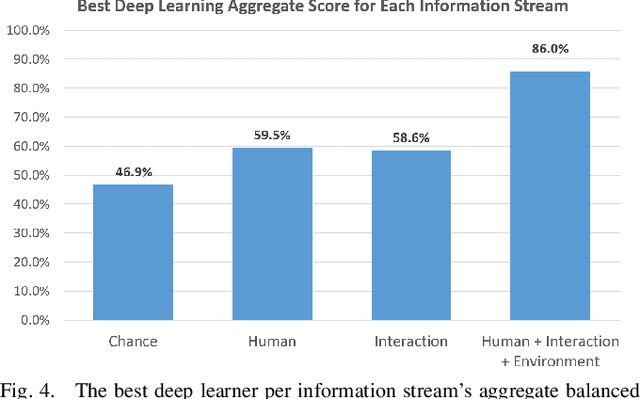

Abstract: A dynamic autonomy allocation framework automatically shifts how much control lies with the human versus the robotics autonomy, based on factors such as environmental safety or user preference. To investigate which factors should drive dynamic autonomy allocation, we perform a human subject study to collect ground-truth data on shifts between levels of autonomy during shared-control robot operation. Information streams from the human, the interaction between the human and the robot, and the environment are analyzed. Machine learning methods, both classical and deep learning, are trained on these data. An analysis of information streams from the human-robot team suggests that features capturing the interaction between the human and the robotics autonomy are the most informative in predicting when to shift autonomy levels. Even the addition of data from the environment does little to improve upon this predictive power. The features learned by deep networks, in comparison to the hand-engineered features, prove variable in their ability to represent shift-relevant information. This work demonstrates the classification power of human-only and human-robot interaction information streams for use in the design of shared-control frameworks, and provides insights into the comparative utility of various data streams and of methods for extracting shift-relevant information from those data.
