Characterization of Assistive Robot Arm Teleoperation: A Preliminary Study to Inform Shared Control


Jul 31, 2020

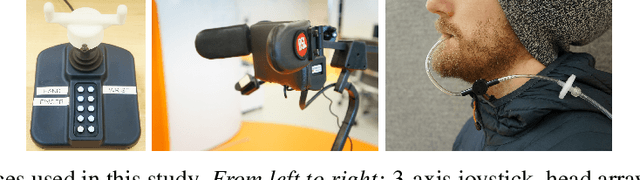

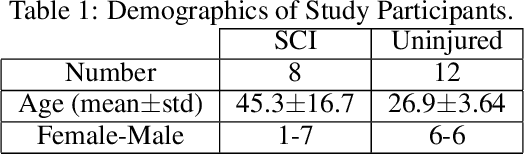

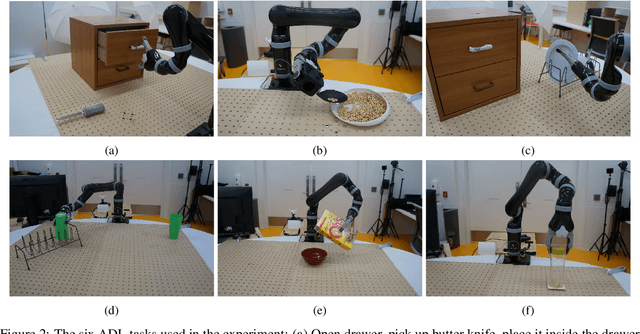

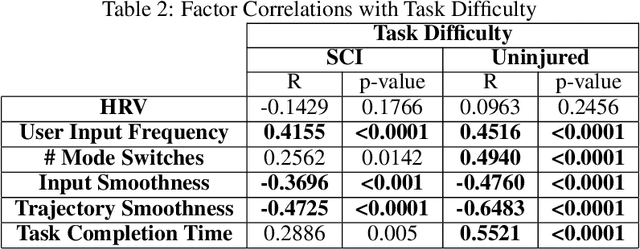

Assistive robotic devices can increase the independence of individuals with motor impairments. However, each person is unique in their level of injury, preferences, and skills, all of which can change over time. Further, the amount of assistance required can vary throughout the day due to pain or fatigue, or over longer periods due to rehabilitation, debilitating conditions, or aging. Therefore, to become an effective team member, the assistive machine should be able to learn from and adapt to the human user. To do so, we need to be able to characterize the user's control commands to determine when and how autonomy should change to best assist the user. We perform a 20-person pilot study to establish a set of meaningful performance measures that can be used both to characterize the user's control signals and as cues for the autonomy to modify the level and amount of assistance. Our study includes 8 spinal cord injured and 12 uninjured individuals. The results reveal a set of objective, runtime-computable metrics that are correlated with user-perceived task difficulty, and thus could be used by an autonomy system when deciding whether assistance is required. The results further show that metrics evaluating the user's interaction with the robotic device, the robot's execution, and the perceived task difficulty differ between the spinal cord injured and uninjured groups, and are affected by the type of control interface used. The results will be used to develop adaptable, user-centered, and individually customized shared-control algorithms.
